Zhihan Cui

Augmenting Clinical Decision-Making with an Interactive and Interpretable AI Copilot: A Real-World User Study with Clinicians in Nephrology and Obstetrics

Jan 31, 2026

Abstract: Clinician skepticism toward opaque AI hinders its adoption in high-stakes healthcare. We present AICare, an interactive and interpretable AI copilot for collaborative clinical decision-making. By analyzing longitudinal electronic health records, AICare grounds dynamic risk predictions in scrutable visualizations and LLM-driven diagnostic recommendations. Through a within-subjects, counterbalanced study with 16 clinicians across nephrology and obstetrics, we evaluated AICare comprehensively. We used objective measures (task completion time and error rate), subjective assessments (NASA-TLX, SUS, and confidence ratings), and semi-structured interviews. Our findings indicate that AICare reduced cognitive workload. Beyond performance metrics, qualitative analysis reveals that trust is actively constructed through verification. It also shows that interaction strategies diverge by expertise: junior clinicians used the system as cognitive scaffolding to structure their analysis, while experts engaged in adversarial verification to challenge the AI's logic. This work offers design implications for AI systems that function as transparent partners, accommodating diverse reasoning styles to augment rather than replace clinical judgment.

The Cultural Psychology of Large Language Models: Is ChatGPT a Holistic or Analytic Thinker?

Aug 28, 2023

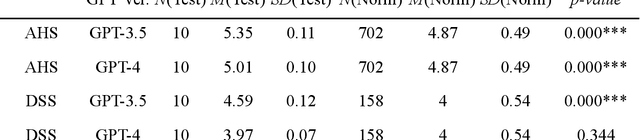

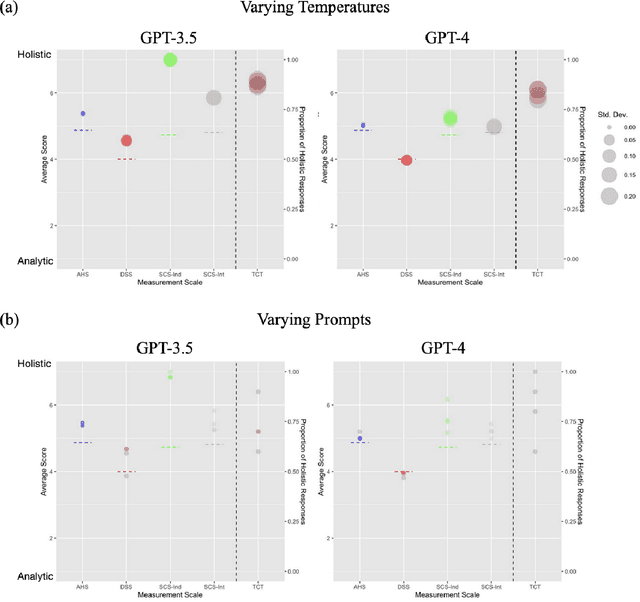

Abstract: The widespread use of Large Language Models (LLMs) has made it necessary to study their mental models, which carries noteworthy theoretical and practical implications. Current research has demonstrated that state-of-the-art LLMs, such as ChatGPT, exhibit certain theory-of-mind capabilities and possess relatively stable Big Five and/or MBTI personality traits. Cognitive process features also form an essential component of these mental models. Research in cultural psychology has shown significant differences between Eastern and Western people in how they process information and make judgments. Westerners predominantly exhibit analytic thinking, which isolates objects from their environment to analyze their nature independently. Easterners, by contrast, often exhibit holistic thinking, which emphasizes relationships and adopts a global viewpoint. In this research, we probed the cultural cognitive traits of ChatGPT. We employed two instruments that directly measure cognitive processes: the Analysis-Holism Scale (AHS) and the Triadic Categorization Task (TCT). We also used two scales that capture value differences shaped by cultural thinking: the Dialectical Self Scale (DSS) and the Self-construal Scale (SCS). In the cognitive process tests (AHS/TCT), ChatGPT consistently leaned toward Eastern holistic thinking. In value judgments (DSS/SCS), however, it did not significantly lean toward either the East or the West. We suggest that this result may be attributable to both the training paradigm and the training data used in LLM development. We discuss the potential value of this finding for AI research, as well as directions for future research.