Zhenhan Huang

Modular Prompt Learning Improves Vision-Language Models

Feb 19, 2025

Abstract:Pre-trained vision-language models are able to interpret visual concepts and language semantics. Prompt learning, a method of constructing prompts for text encoders or image encoders, elicits the potential of pre-trained models and readily adapts them to new scenarios. Compared to fine-tuning, prompt learning enables the model to achieve comparable or better performance using fewer trainable parameters. In addition, prompt learning freezes the pre-trained model and avoids the catastrophic forgetting associated with fine-tuning. Continuous prompts inserted into the input of every transformer layer (i.e. deep prompts) can improve the performance of pre-trained models on downstream tasks. For the $i$-th transformer layer, the inserted prompts replace the prompts previously inserted in the $(i-1)$-th layer. Although the self-attention mechanism contextualizes the newly inserted prompts for the current layer together with the embeddings from the previous layer's output, removing all inserted prompts from the previous layer inevitably loses information contained in the continuous prompts. In this work, we propose Modular Prompt Learning (MPL), which is designed to promote the preservation of information contained in the inserted prompts. We evaluate the proposed method on base-to-new generalization and cross-dataset tasks. Averaged over 11 datasets, our method achieves a 0.7% performance gain on the base-to-new generalization task compared to the state-of-the-art method. The largest improvement on an individual dataset is 10.7% (EuroSAT dataset).
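The prompt-replacement step that the abstract describes for deep prompts can be pictured with a small, dependency-free sketch. This is only an illustration of the baseline behavior (prompts from layer $i-1$ being dropped before layer $i$), not the MPL method itself. The names `toy_layer` and `forward_deep_prompts` are hypothetical, and the toy layer is an assumed stand-in for a real transformer block.

```python
from typing import Callable, List

Vector = List[float]


def toy_layer(tokens: List[Vector]) -> List[Vector]:
    """Assumed stand-in for a transformer layer: mix each token with the sequence mean."""
    dim = len(tokens[0])
    mean = [sum(t[d] for t in tokens) / len(tokens) for d in range(dim)]
    return [[0.5 * t[d] + 0.5 * mean[d] for d in range(dim)] for t in tokens]


def forward_deep_prompts(
    embeddings: List[Vector],
    layer_prompts: List[List[Vector]],
    layer: Callable[[List[Vector]], List[Vector]] = toy_layer,
) -> List[Vector]:
    """Run one layer per entry of layer_prompts, prepending that layer's prompts."""
    hidden = embeddings
    for prompts in layer_prompts:
        n = len(prompts)
        out = layer(prompts + hidden)
        # Outputs at the prompt positions are discarded here, so the next
        # layer only sees them indirectly through the mixed embeddings.
        hidden = out[n:]
    return hidden


embeddings = [[1.0, 0.0], [0.0, 1.0]]
layer_prompts = [[[0.5, 0.5]], [[0.2, 0.8]]]  # one prompt vector per layer
output = forward_deep_prompts(embeddings, layer_prompts)
```

The line `hidden = out[n:]` is where the information loss mentioned in the abstract would occur: whatever the layer wrote into the prompt positions is thrown away before the next layer's prompts are prepended.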

Differentiable Prompt Learning for Vision Language Models

Dec 31, 2024

Abstract:Prompt learning is an effective way to exploit the potential of large-scale pre-trained foundational models. Continuous prompts parameterize context tokens in prompts by turning them into differentiable vectors. Deep continuous prompts insert prompts not only in the input but also in the intermediate hidden representations. Manually designed deep continuous prompts exhibit a remarkable improvement compared to the zero-shot pre-trained model on downstream tasks. How to automate the continuous prompt design is an underexplored area, and a fundamental question arises: is a manually designed deep prompt strategy optimal? To answer this question, we propose a method dubbed differentiable prompt learning (DPL). The DPL method is formulated as an optimization problem to automatically determine the optimal context length of the prompt to be added to each layer, where the objective is to maximize the performance. We test the DPL method on the pre-trained CLIP. We empirically find that by using only limited data, our DPL method can find a deep continuous prompt configuration with high confidence. The performance on the downstream tasks exhibits the superiority of the automatic design: our method boosts the average test accuracy by 2.60% on 11 datasets compared to baseline methods. Moreover, our method focuses only on the prompt configuration (i.e. context length for each layer), which means that it is compatible with baseline methods that have sophisticated designs to boost the performance. The DPL method can be deployed to large language models or computer vision models at no cost.

TabSketchFM: Sketch-based Tabular Representation Learning for Data Discovery over Data Lakes

Jun 28, 2024

Abstract:Enterprises have a growing need to identify relevant tables in data lakes; e.g. tables that are unionable, joinable, or subsets of each other. Tabular neural models can be helpful for such data discovery tasks. In this paper, we present TabSketchFM, a neural tabular model for data discovery over data lakes. First, we propose a novel sketch-based pre-training approach to enhance the effectiveness of data discovery techniques in neural tabular models. Second, to further finetune the pretrained model for several downstream tasks, we develop LakeBench, a collection of 8 benchmarks to help with different data discovery tasks such as finding tables that are unionable, joinable, or subsets of each other. We then show on these finetuning tasks that TabSketchFM achieves state-of-the-art performance compared to existing neural models. Third, we use these finetuned models to search for tables that are unionable, joinable, or can be subsets of each other. Our results demonstrate improvements in F1 scores for search compared to state-of-the-art techniques (even up to 70% improvement in a joinable search benchmark). Finally, we show significant transfer across datasets and tasks, establishing that our model can generalize across different tasks over different data lakes.

Graph is all you need? Lightweight data-agnostic neural architecture search without training

May 02, 2024

Abstract:Neural architecture search (NAS) enables the automatic design of neural network models. However, training the candidates generated by the search algorithm for performance evaluation incurs considerable computational overhead. Our method, dubbed nasgraph, remarkably reduces the computational costs by converting neural architectures to graphs and using the average degree, a graph measure, as the proxy in lieu of the evaluation metric. Our training-free NAS method is data-agnostic and lightweight. It can find the best architecture among 200 randomly sampled architectures from NAS-Bench-201 in 217 CPU seconds. In addition, our method achieves competitive performance on various datasets including the NAS-Bench-101, NAS-Bench-201, and NDS search spaces. We also demonstrate that nasgraph generalizes to more challenging tasks on Micro TransNAS-Bench-101.
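As a rough illustration of using average degree as a training-free proxy, the sketch below scores candidate graphs and ranks them. It is not the nasgraph conversion procedure: the abstract does not say how architectures are turned into graphs, so the graphs here are hand-written, treated as undirected, and ranked under the assumption that a higher average degree is preferred. The names `average_degree` and `rank_by_proxy` are hypothetical.

```python
from typing import Dict, List, Tuple

Edge = Tuple[int, int]


def average_degree(num_nodes: int, edges: List[Edge]) -> float:
    """Average degree of an undirected graph: 2 * |E| / |V|."""
    if num_nodes <= 0:
        raise ValueError("graph must have at least one node")
    return 2.0 * len(edges) / num_nodes


def rank_by_proxy(candidates: Dict[str, Tuple[int, List[Edge]]]) -> List[str]:
    """Sort candidate names by average degree, highest first (assumed to be better)."""
    return sorted(
        candidates,
        key=lambda name: average_degree(*candidates[name]),
        reverse=True,
    )


# Two hand-written toy graphs standing in for converted architectures.
candidates = {
    "chain": (4, [(0, 1), (1, 2), (2, 3)]),
    "dense": (4, [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]),
}
ranking = rank_by_proxy(candidates)
```

Because the score depends only on node and edge counts, no training data or gradient computation is needed, which is consistent with the data-agnostic, CPU-only cost the abstract reports.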

A Scalable Reinforcement Learning-based System Using On-Chain Data for Cryptocurrency Portfolio Management

Jul 04, 2023

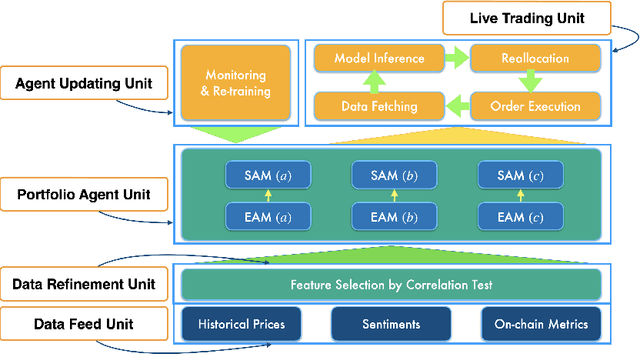

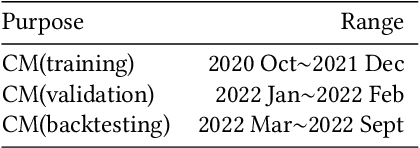

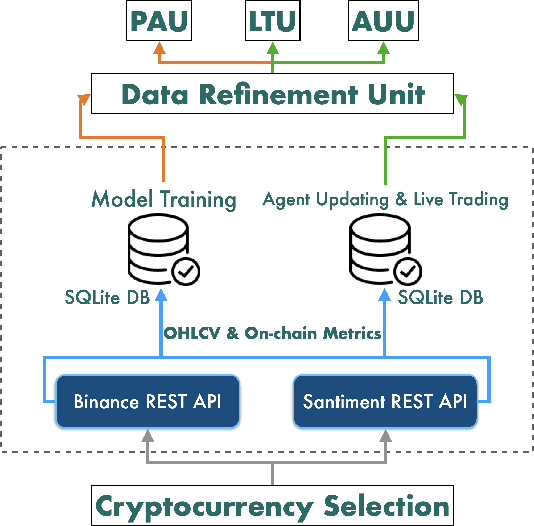

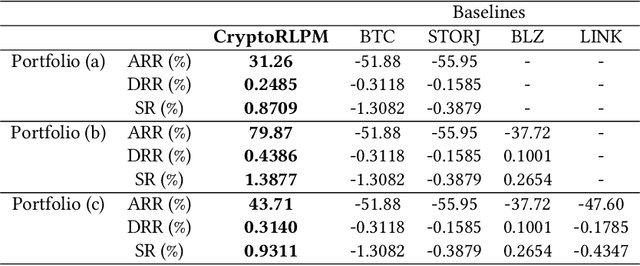

Abstract:On-chain data (metrics) of blockchain networks, akin to company fundamentals, provide crucial and comprehensive insights into the networks. Despite their informative nature, on-chain data have not been utilized in reinforcement learning (RL)-based systems for cryptocurrency (crypto) portfolio management (PM). An intriguing question is the extent to which on-chain data can enhance an RL-based system's return performance compared to baselines. Therefore, in this study, we propose CryptoRLPM, a novel RL-based system incorporating on-chain data for end-to-end crypto PM. CryptoRLPM consists of five units, spanning from information comprehension to trading order execution. In CryptoRLPM, the on-chain data are tested and specified for each crypto to address the issue of ineffective metrics. Moreover, the scalable nature of CryptoRLPM allows changes in the portfolios' cryptos at any time. Backtesting results on three portfolios indicate that CryptoRLPM outperforms all the baselines in terms of accumulated rate of return (ARR), daily rate of return (DRR), and Sortino ratio (SR). In particular, when compared to Bitcoin, CryptoRLPM enhances the ARR, DRR, and SR by at least 83.14%, 0.5603%, and 2.1767, respectively.
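The three reported metrics can be sketched from a series of daily returns. The abstract does not give the exact formulas, so the definitions below are common textbook conventions adopted as assumptions: ARR as the compounded return over the period, DRR as the arithmetic mean of daily returns, and the Sortino ratio as mean excess return over downside deviation with a target of zero. The function names and the sample `returns` are hypothetical.

```python
import math
from typing import Sequence


def accumulated_return(daily_returns: Sequence[float]) -> float:
    """Compounded return over the whole period (assumed ARR definition)."""
    growth = 1.0
    for r in daily_returns:
        growth *= 1.0 + r
    return growth - 1.0


def mean_daily_return(daily_returns: Sequence[float]) -> float:
    """Arithmetic mean of daily returns (assumed DRR definition)."""
    return sum(daily_returns) / len(daily_returns)


def sortino_ratio(daily_returns: Sequence[float], target: float = 0.0) -> float:
    """Mean excess return divided by downside deviation below the target."""
    excess = [r - target for r in daily_returns]
    downside = math.sqrt(sum(min(e, 0.0) ** 2 for e in excess) / len(excess))
    if downside == 0.0:
        return math.inf  # no returns fell below the target
    return (sum(excess) / len(excess)) / downside


# Made-up daily returns, for illustration only.
returns = [0.01, -0.02, 0.03]
arr = accumulated_return(returns)
drr = mean_daily_return(returns)
sr = sortino_ratio(returns)
```

Unlike the Sharpe ratio, the denominator here penalizes only returns below the target, so upside volatility does not lower the score.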

Network Graph Based Neural Architecture Search

Dec 15, 2021

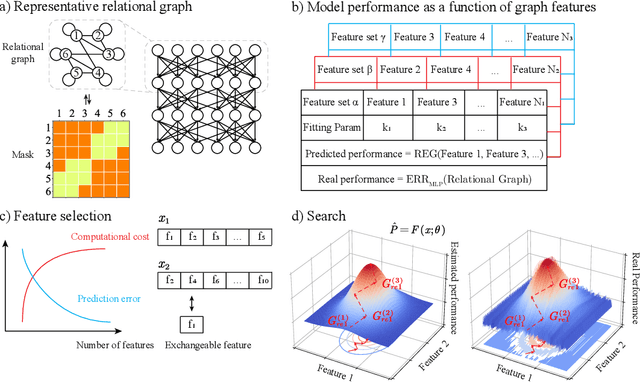

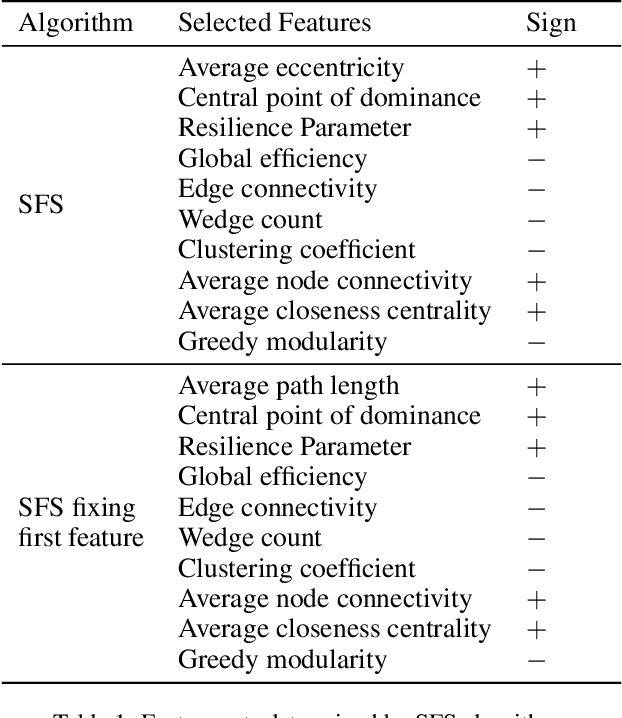

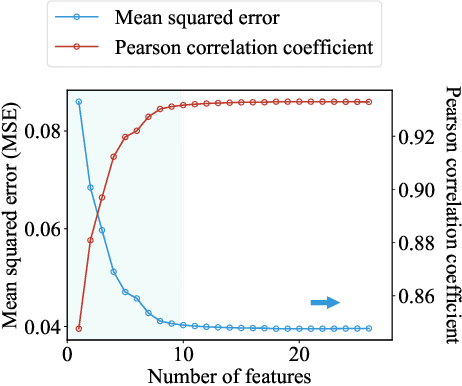

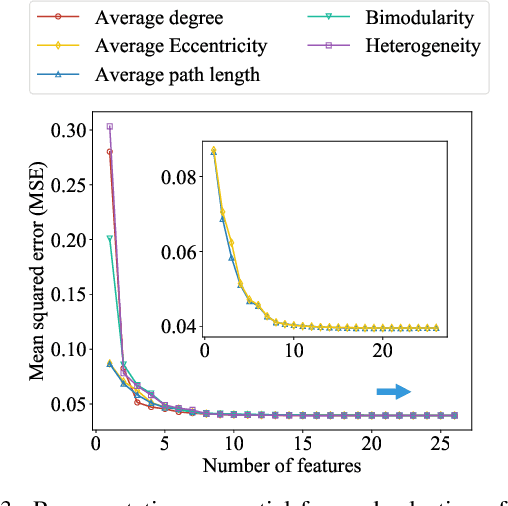

Abstract:Neural architecture search enables automation of architecture design. Despite its success, it is computationally costly and does not provide insight into how to design a desirable architecture. Here we propose a new way of searching for neural networks, in which we search for a neural architecture by rewiring the corresponding graph and predict the architecture's performance from graph properties. Because we do not perform machine learning over the entire graph space and instead use predicted architecture performance to guide the search, the search process is remarkably efficient. We find that graph-based search can give a reasonably good prediction of a desirable architecture. In addition, we identify graph properties that are effective for predicting architecture performance. Our work proposes a new way of searching for neural architectures and provides insights on neural architecture design.

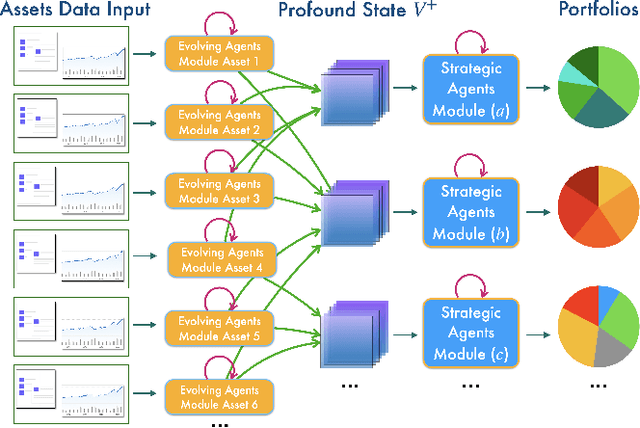

A Modularized and Scalable Multi-Agent Reinforcement Learning-based System for Financial Portfolio Management

Feb 09, 2021

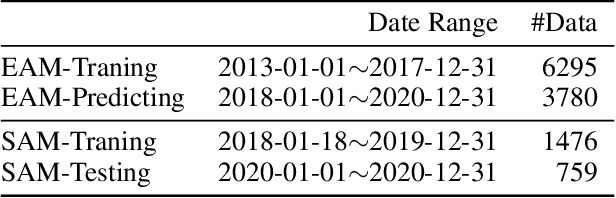

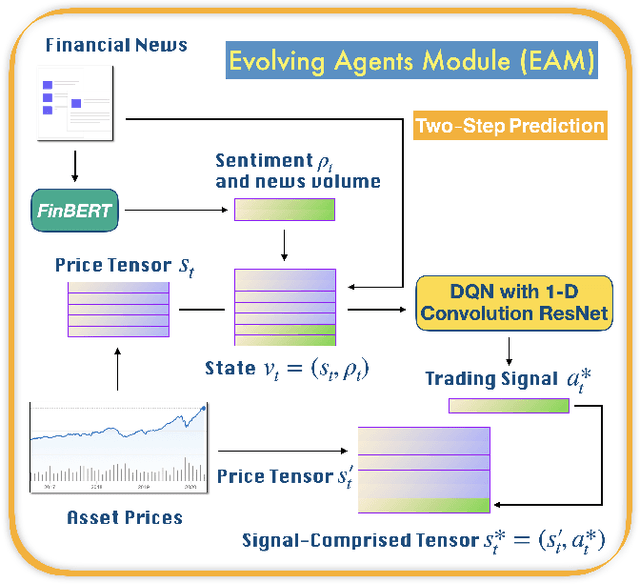

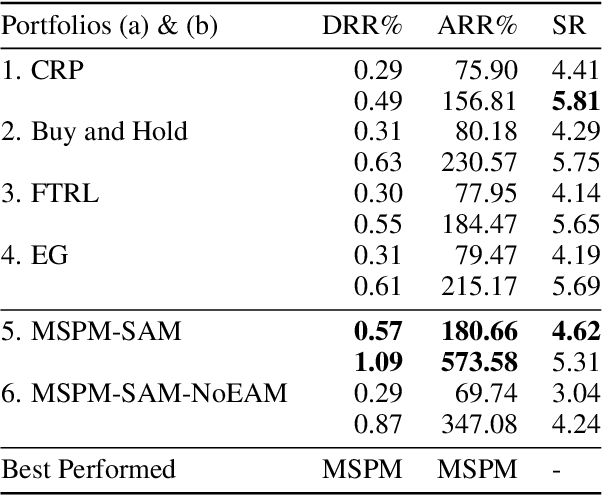

Abstract:Financial Portfolio Management is one of the most applicable problems in Reinforcement Learning (RL) owing to its sequential decision-making nature. Existing RL-based approaches, while inspiring, often lack scalability, reusability, or profundity of intake information to accommodate the ever-changing capital markets. In this paper, we design and develop MSPM, a novel Multi-agent Reinforcement learning-based system with a modularized and scalable architecture for portfolio management. MSPM involves two asynchronously updated units: Evolving Agent Module (EAM) and Strategic Agent Module (SAM). A self-sustained EAM produces signal-comprised information for a specific asset using heterogeneous data inputs, and each EAM is reusable in that it can be connected to multiple SAMs. A SAM is responsible for the asset reallocation of a portfolio using profound information from the connected EAMs. With the elaborate architecture and the multi-step condensation of the volatile market information, MSPM aims to provide a customizable, stable, and dedicated solution to portfolio management that existing approaches do not. We also tackle the data-shortage issue of newly-listed stocks by transfer learning, and validate the necessity of EAM. Experiments on 8 years of U.S. stock market data demonstrate the effectiveness of MSPM in profit accumulation through its outperformance over existing benchmarks.
