Zhanyuan Yang

Few-Shot Classification with Contrastive Learning

Sep 17, 2022

Abstract: A two-stage training paradigm consisting of sequential pre-training and meta-training stages has been widely used in current few-shot learning (FSL) research. Many of these methods use self-supervised learning and contrastive learning to achieve new state-of-the-art results. However, the potential of contrastive learning in both stages of the FSL training paradigm is still not fully exploited. In this paper, we propose a novel contrastive learning-based framework that seamlessly integrates contrastive learning into both stages to improve the performance of few-shot classification. In the pre-training stage, we propose a self-supervised contrastive loss in the forms of feature vector vs. feature map and feature map vs. feature map, which uses global and local information to learn good initial representations. In the meta-training stage, we propose a cross-view episodic training mechanism to perform nearest-centroid classification on two different views of the same episode and adopt a distance-scaled contrastive loss based on them. These two strategies force the model to overcome the bias between views and promote the transferability of representations. Extensive experiments on three benchmark datasets demonstrate that our method achieves competitive results.
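To make the nearest-centroid step concrete, here is a minimal NumPy sketch, not the authors' implementation. It assumes embeddings have already been computed for two augmented views of one episode, that class centroids are the mean support embedding per class, and that "cross-view" means scoring queries from one view against centroids from the other. The function names and the squared Euclidean distance are illustrative choices; the abstract does not specify them.

```python
import numpy as np

def nearest_centroid_logits(support, support_labels, query, n_way):
    """Score queries by negative squared distance to class centroids.

    support: (n_support, d) embeddings, support_labels: (n_support,) ints
    query:   (n_query, d) embeddings
    Returns logits of shape (n_query, n_way).
    """
    # Centroid of each class = mean of its support embeddings.
    centroids = np.stack(
        [support[support_labels == c].mean(axis=0) for c in range(n_way)]
    )
    # Pairwise differences via broadcasting: (n_query, n_way, d).
    diff = query[:, None, :] - centroids[None, :, :]
    return -(diff ** 2).sum(axis=-1)

def cross_view_logits(support_v1, support_v2, query_v1, query_v2,
                      support_labels, n_way):
    """Assumed cross-view scheme: centroids from one view, queries from the other."""
    logits_12 = nearest_centroid_logits(support_v1, support_labels, query_v2, n_way)
    logits_21 = nearest_centroid_logits(support_v2, support_labels, query_v1, n_way)
    return logits_12, logits_21
```

In a training loop, each set of logits would typically feed a cross-entropy loss against the query labels; the distance-scaled contrastive loss mentioned in the abstract is not reproduced here.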

DONet: Dual-Octave Network for Fast MR Image Reconstruction

May 12, 2021

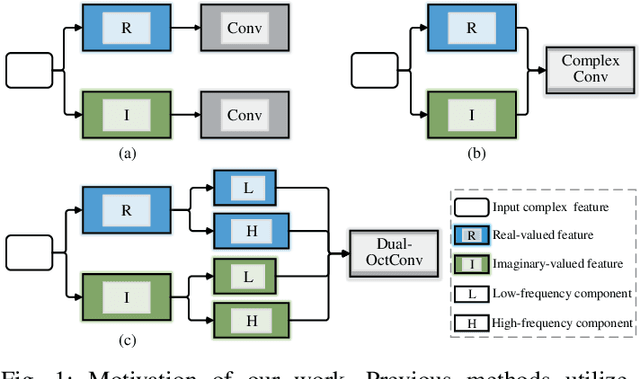

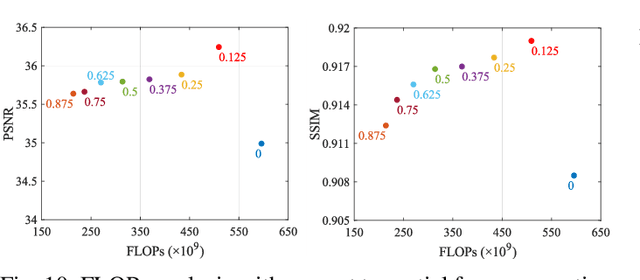

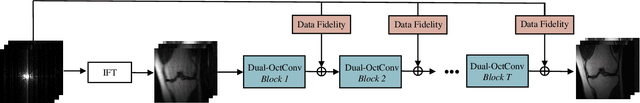

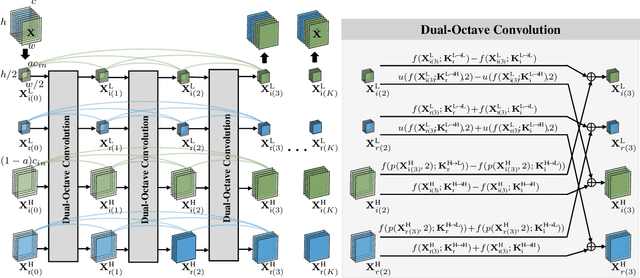

Abstract: Magnetic resonance (MR) image acquisition is an inherently prolonged process, whose acceleration has long been the subject of research. This is commonly achieved by obtaining multiple undersampled images simultaneously through parallel imaging. In this paper, we propose the Dual-Octave Network (DONet), which is capable of learning multi-scale spatial-frequency features from both the real and imaginary components of MR data, for fast parallel MR image reconstruction. More specifically, our DONet consists of a series of Dual-Octave convolutions (Dual-OctConv), which are connected in a dense manner for better reuse of features. In each Dual-OctConv, the input feature maps and convolutional kernels are first split into two components (i.e., real and imaginary), and then divided into four groups according to their spatial frequencies. Then, our Dual-OctConv conducts intra-group information updating and inter-group information exchange to aggregate the contextual information across different groups. Our framework provides three appealing benefits: (i) It encourages information interaction and fusion between the real and imaginary components at various spatial frequencies to achieve richer representational capacity. (ii) The dense connections between the real and imaginary groups in each Dual-OctConv make the propagation of features more efficient by feature reuse. (iii) DONet enlarges the receptive field by learning multiple spatial-frequency features of both the real and imaginary components. Extensive experiments on two popular datasets (i.e., clinical knee and fastMRI), under different undersampling patterns and acceleration factors, demonstrate the superiority of our model in accelerated parallel MR image reconstruction.
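The four-way grouping described above can be illustrated with a short NumPy sketch. It is only an assumption-laden illustration, not DONet's code: it follows the usual octave-convolution convention of assigning a fraction `alpha` of the channels to a low-frequency branch stored at half resolution, and it uses 2x2 average pooling for the downsampling. The names `split_four_groups`, `avg_pool2x2`, and `alpha` are hypothetical.

```python
import numpy as np

def avg_pool2x2(x):
    """2x2 average pooling on a (C, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def split_four_groups(z, alpha=0.5):
    """Split complex feature maps z of shape (C, H, W) into four real groups.

    A fraction `alpha` of the channels is treated as low-frequency and kept
    at half spatial resolution (assumed octave-style convention).
    """
    n_low = int(round(alpha * z.shape[0]))
    n_high = z.shape[0] - n_low
    high, low = z[:n_high], z[n_high:]
    return {
        "real_high": high.real,             # full resolution
        "imag_high": high.imag,             # full resolution
        "real_low": avg_pool2x2(low.real),  # half resolution
        "imag_low": avg_pool2x2(low.imag),  # half resolution
    }
```

The intra-group updates and inter-group exchanges would then be convolutions within and between these four tensors, with up- or downsampling wherever resolutions differ.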

Dual-Octave Convolution for Accelerated Parallel MR Image Reconstruction

Apr 12, 2021

Abstract: Magnetic resonance (MR) image acquisition is an inherently prolonged process, whose acceleration by obtaining multiple undersampled images simultaneously through parallel imaging has always been the subject of research. In this paper, we propose the Dual-Octave Convolution (Dual-OctConv), which is capable of learning multi-scale spatial-frequency features from both real and imaginary components, for fast parallel MR image reconstruction. By reformulating the complex operations using octave convolutions, our model shows a strong ability to capture richer representations of MR images, while at the same time greatly reducing the spatial redundancy. More specifically, the input feature maps and convolutional kernels are first split into two components (i.e., real and imaginary), which are then divided into four groups according to their spatial frequencies. Then, our Dual-OctConv conducts intra-group information updating and inter-group information exchange to aggregate the contextual information across different groups. Our framework provides two appealing benefits: (i) it encourages interactions between real and imaginary components at various spatial frequencies to achieve richer representational capacity, and (ii) it enlarges the receptive field by learning multiple spatial-frequency features of both the real and imaginary components. We evaluate the performance of the proposed model on the acceleration of multi-coil MR image reconstruction. Extensive experiments are conducted on an in vivo knee dataset under different undersampling patterns and acceleration factors. The experimental results demonstrate the superiority of our model in accelerated parallel MR image reconstruction. Our code is available at: github.com/chunmeifeng/Dual-OctConv.
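Splitting features and kernels into real and imaginary parts rests on the standard identity (a + ib)(c + id) = (ac - bd) + i(ad + bc), which lets a complex convolution be computed with four real-valued ones. The sketch below shows this for a pointwise (1x1) convolution in NumPy. It is an illustration of the identity only, with a hypothetical function name, and does not reproduce the octave branches or the released code.

```python
import numpy as np

def complex_pointwise_conv(x_re, x_im, w_re, w_im):
    """1x1 complex convolution expressed with real-valued operations.

    x_re, x_im: (C_in, H, W) real and imaginary input feature maps
    w_re, w_im: (C_out, C_in) real and imaginary kernel weights
    Returns (y_re, y_im), each of shape (C_out, H, W).
    """
    def conv(w, x):
        # A 1x1 convolution is a matrix product over the channel axis.
        return np.einsum("oc,chw->ohw", w, x)

    y_re = conv(w_re, x_re) - conv(w_im, x_im)  # ac - bd
    y_im = conv(w_re, x_im) + conv(w_im, x_re)  # ad + bc
    return y_re, y_im
```

The same decomposition applies to larger kernels by swapping the channel-wise product for a spatial convolution.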

* Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI) 2021
