Yvonne Rogers

Understanding, Protecting, and Augmenting Human Cognition with Generative AI: A Synthesis of the CHI 2025 Tools for Thought Workshop

Aug 28, 2025

Abstract:Generative AI (GenAI) radically expands the scope and capability of automation for work, education, and everyday tasks, a transformation posing both risks and opportunities for human cognition. How will human cognition change, and what opportunities are there for GenAI to augment it? Which theories, metrics, and other tools are needed to address these questions? The CHI 2025 workshop on Tools for Thought aimed to bridge an emerging science of how the use of GenAI affects human thought, from metacognition to critical thinking, memory, and creativity, with an emerging design practice for building GenAI tools that both protect and augment human thought. Fifty-six researchers, designers, and thinkers from across disciplines as well as industry and academia, along with 34 papers and portfolios, seeded a day of discussion, ideation, and community-building. We synthesize this material here to begin mapping the space of research and design opportunities and to catalyze a multidisciplinary community around this pressing area of research.

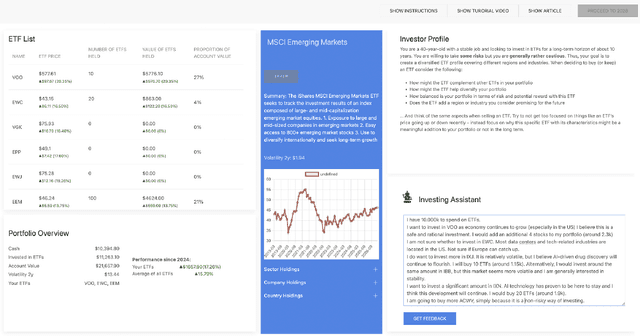

AI, Help Me Think—but for Myself: Assisting People in Complex Decision-Making by Providing Different Kinds of Cognitive Support

Apr 09, 2025

Abstract:How can we design AI tools that effectively support human decision-making by complementing and enhancing users' reasoning processes? Common recommendation-centric approaches face challenges such as inappropriate reliance or a lack of integration with users' decision-making processes. Here, we explore an alternative interaction model in which the AI outputs build upon users' own decision-making rationales. We compare this approach, which we call ExtendAI, with a recommendation-based AI. Participants in our mixed-methods user study interacted with both AIs as part of an investment decision-making task. We found that the AIs had different impacts, with ExtendAI integrating better into the decision-making process and people's own thinking and leading to slightly better outcomes. RecommendAI was able to provide more novel insights while requiring less cognitive effort. We discuss the implications of these and other findings along with three tensions of AI-assisted decision-making which our study revealed.

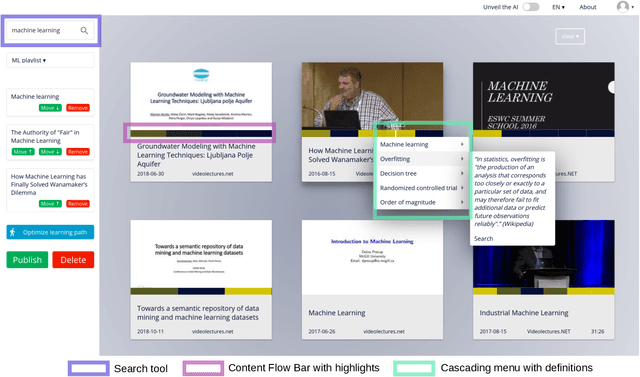

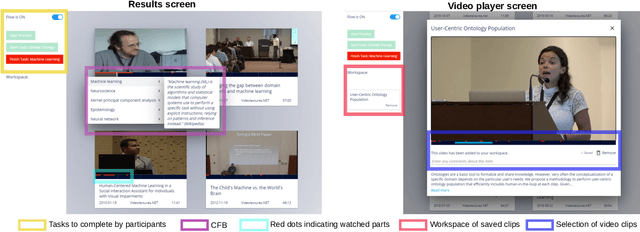

Watch Less and Uncover More: Could Navigation Tools Help Users Search and Explore Videos?

Jan 10, 2022

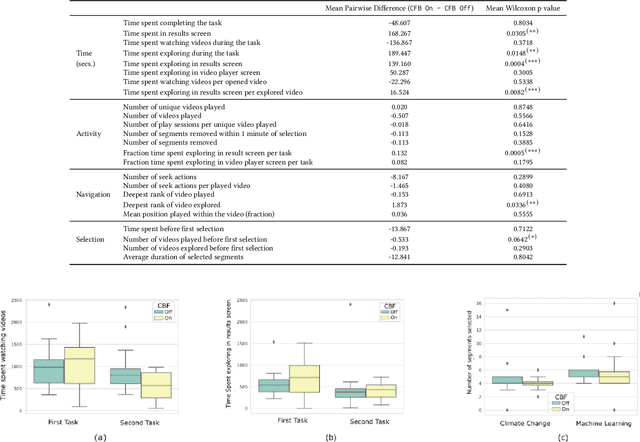

Abstract:Prior research has shown how 'content preview tools' improve speed and accuracy of user relevance judgements across different information retrieval tasks. This paper describes a novel user interface tool, the Content Flow Bar, designed to allow users to quickly identify relevant fragments within informational videos to facilitate browsing, through a cognitively augmented form of navigation. It achieves this by providing semantic "snippets" that enable the user to rapidly scan through video content. The tool provides visually appealing pop-ups that appear in a time series bar at the bottom of each video, allowing users to see in advance and at a glance how topics evolve in the content. We conducted a user study to evaluate how the tool changes the users' search experience in video retrieval, as well as how it supports exploration and information seeking. The user questionnaire revealed that participants found the Content Flow Bar helpful and enjoyable for finding relevant information in videos. The interaction logs of the user study, in which participants used the tool to complete two informational tasks, showed that it holds promise for enhancing discoverability of content both across and within videos. This potential could inform a new generation of navigation tools in search and information retrieval.

MotionInput v2.0 supporting DirectX: A modular library of open-source gesture-based machine learning and computer vision methods for interacting and controlling existing software with a webcam

Aug 10, 2021

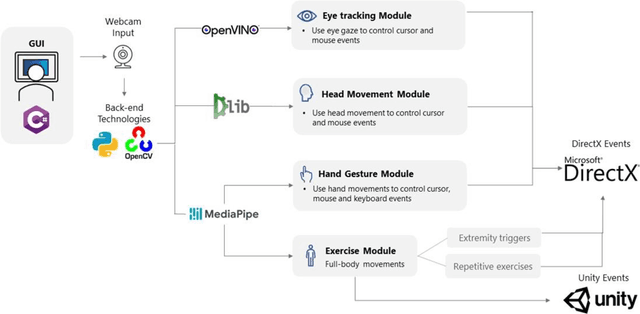

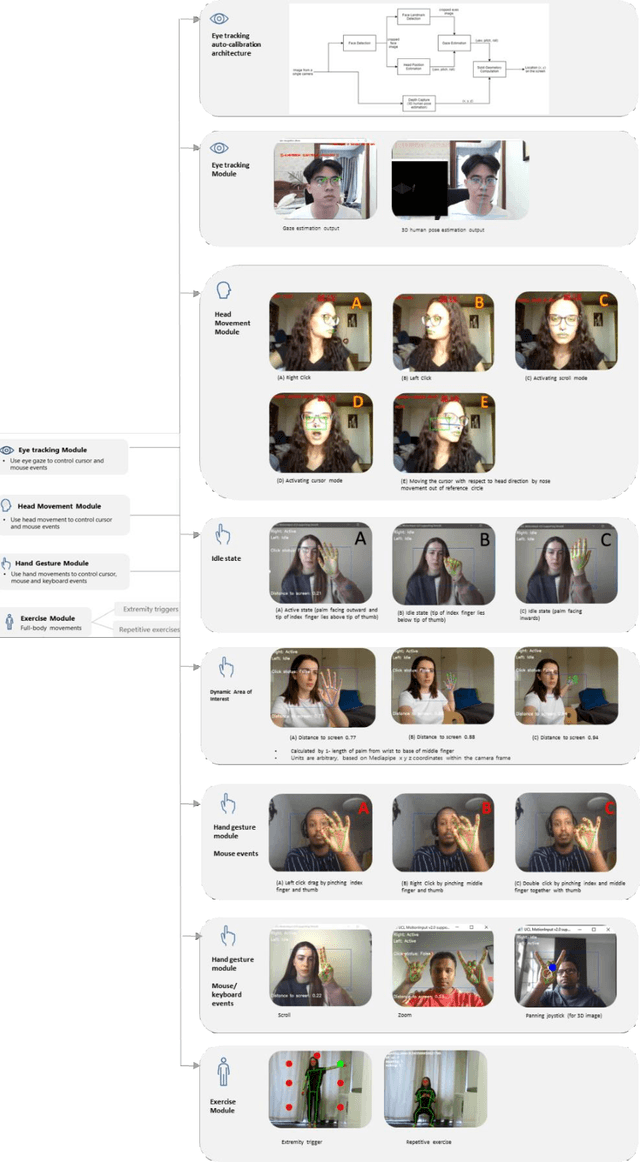

Abstract:Touchless computer interaction has become an important consideration during the COVID-19 pandemic period. Despite progress in machine learning and computer vision that allows for advanced gesture recognition, an integrated collection of such open-source methods and a user-customisable approach to utilising them in a low-cost solution for touchless interaction in existing software is still missing. In this paper, we introduce the MotionInput v2.0 application. This application utilises published open-source libraries and additional gesture definitions developed to take the video stream from a standard RGB webcam as input. It then maps human motion gestures to input operations for existing applications and games. The user can choose their own preferred way of interacting from a series of motion types, including single and bi-modal hand gesturing, full-body repetitive or extremities-based exercises, head and facial movements, eye tracking, and combinations of the above. We also introduce a series of bespoke gesture recognition classifications as DirectInput triggers, including gestures for idle states, auto calibration, depth capture from a 2D RGB webcam stream and tracking of facial motions such as mouth motions, winking, and head direction with rotation. Three use case areas assisted the development of the modules: creativity software, office and clinical software, and gaming software. A collection of open-source libraries has been integrated and provides a layer of modular gesture mapping on top of existing mouse and keyboard controls in Windows via DirectX. With ease of access to webcams integrated into most laptops and desktop computers, touchless computing becomes more available with MotionInput v2.0, in a federated and locally processed manner.
