Yuyi Zhong

Expediting Neural Network Verification via Network Reduction

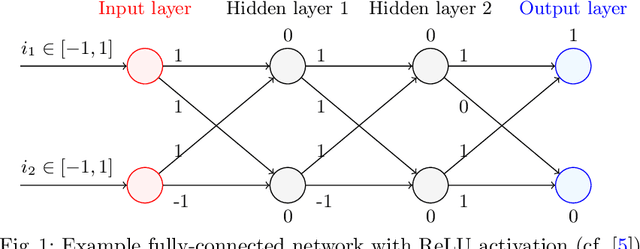

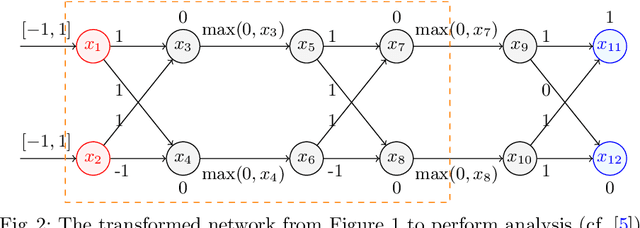

Aug 14, 2023

Abstract: A wide range of verification methods have been proposed to verify the safety properties of deep neural networks, ensuring that the networks function correctly in critical applications. However, many well-known verification tools still struggle with complicated network architectures and large network sizes. In this work, we propose a network reduction technique as a pre-processing method prior to verification. The proposed method reduces neural networks by eliminating stable ReLU neurons and transforming them into a sequential neural network consisting of ReLU and Affine layers, which can be handled by most verification tools. We instantiate the reduction technique on the state-of-the-art complete and incomplete verification tools, including alpha-beta-crown, VeriNet and PRIMA. Our experiments on a large set of benchmarks indicate that the proposed technique can significantly reduce neural networks and speed up existing verification tools. Furthermore, the experimental results also show that network reduction can improve the availability of existing verification tools on many networks by reducing them into sequential neural networks.
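To make the idea of a "stable" ReLU neuron concrete, here is a minimal sketch in plain Python. It is not the authors' reduction algorithm: it assumes a single hidden layer, an axis-aligned input box, and simple interval arithmetic to bound pre-activations, and all function names are hypothetical. A neuron whose upper bound is at most zero always outputs zero on the box, so it can be dropped without changing the network's output there; a neuron whose lower bound is at least zero behaves as the identity.

```python
def preactivation_bounds(W, b, lo, hi):
    """Interval bounds on each row of W @ x + b for x in the box [lo, hi]."""
    lower, upper = [], []
    for row, bias in zip(W, b):
        # Positive weights take the matching end of the box, negative the opposite.
        lower.append(bias + sum(w * (lo[j] if w >= 0 else hi[j]) for j, w in enumerate(row)))
        upper.append(bias + sum(w * (hi[j] if w >= 0 else lo[j]) for j, w in enumerate(row)))
    return lower, upper


def classify_neurons(lower, upper):
    """Label each ReLU neuron as stably inactive, stably active, or unstable."""
    labels = []
    for l, u in zip(lower, upper):
        if u <= 0:
            labels.append("inactive")   # ReLU output is always 0 on the box
        elif l >= 0:
            labels.append("active")     # ReLU acts as the identity on the box
        else:
            labels.append("unstable")   # sign depends on the input
    return labels


def drop_inactive(W1, b1, W2, labels):
    """Remove stably inactive neurons: rows of (W1, b1) and columns of W2."""
    keep = [i for i, label in enumerate(labels) if label != "inactive"]
    W1_red = [W1[i] for i in keep]
    b1_red = [b1[i] for i in keep]
    W2_red = [[row[i] for i in keep] for row in W2]
    return W1_red, b1_red, W2_red


def forward(W1, b1, W2, b2, x):
    """Evaluate the network Affine -> ReLU -> Affine on one input."""
    hidden = [max(0.0, bias + sum(w * xi for w, xi in zip(row, x)))
              for row, bias in zip(W1, b1)]
    return [bias + sum(w * h for w, h in zip(row, hidden))
            for row, bias in zip(W2, b2)]


# Toy network (illustrative values only) on the input box [0, 1] x [0, 1].
W1 = [[1.0, 1.0], [-1.0, -1.0], [1.0, -1.0]]
b1 = [0.5, -0.5, 0.0]
W2 = [[1.0, 2.0, -1.0]]
b2 = [0.1]

lower, upper = preactivation_bounds(W1, b1, [0.0, 0.0], [1.0, 1.0])
labels = classify_neurons(lower, upper)
W1_red, b1_red, W2_red = drop_inactive(W1, b1, W2, labels)
```

In this toy case the second neuron is stably inactive and is removed, leaving two hidden neurons. A fuller reduction would also fold stably active neurons into the neighbouring affine layers, which this sketch leaves out.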

ExploreADV: Towards exploratory attack for Neural Networks

Jan 01, 2023

Abstract: Although deep learning has made remarkable progress in processing various types of data such as images, text and speech, deep learning models are known to be susceptible to adversarial perturbations: perturbations specifically designed and added to the input to make the target model produce erroneous output. Most of the existing studies on generating adversarial perturbations attempt to perturb the entire input indiscriminately. In this paper, we propose ExploreADV, a general and flexible adversarial attack system that is capable of modeling regional and imperceptible attacks, allowing users to explore various kinds of adversarial examples as needed. We adapt and combine two existing boundary attack methods, DeepFool and the Brendel & Bethge attack, and propose a mask-constrained adversarial attack system, which generates minimal adversarial perturbations under pixel-level constraints, namely "mask-constraints". We study different ways of generating such mask-constraints considering the variance and importance of the input features, and show that our adversarial attack system offers users good flexibility to focus on sub-regions of inputs, explore imperceptible perturbations and understand the vulnerability of pixels/regions to adversarial attacks. We demonstrate the effectiveness of our system through extensive experiments and a user study.
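The notion of a minimal perturbation under a mask constraint can be illustrated on a linear binary classifier, where it has a closed form. The sketch below is not ExploreADV itself: it assumes a linear score f(x) = w·x + b, a binary mask marking which features may change, and the L2 norm; the function names are hypothetical. Restricting the standard minimum-norm step to the decision boundary to the masked coordinates gives r = -f(x) (m ⊙ w) / ||m ⊙ w||².

```python
def score(w, b, x):
    """Linear classifier score f(x) = w . x + b; its sign is the predicted class."""
    return b + sum(wi * xi for wi, xi in zip(w, x))


def masked_minimal_perturbation(w, b, x, mask):
    """Smallest L2 perturbation supported on the mask that reaches f(x + r) = 0.

    mask[i] == 1 means feature i may be changed, mask[i] == 0 means it is frozen.
    """
    masked_w = [wi * mi for wi, mi in zip(w, mask)]
    norm_sq = sum(v * v for v in masked_w)
    if norm_sq == 0.0:
        raise ValueError("the mask freezes every feature the classifier depends on")
    scale = -score(w, b, x) / norm_sq
    return [scale * v for v in masked_w]


# Illustrative values only: the first feature is frozen by the mask.
w = [2.0, -1.0, 0.5]
b = -1.0
x = [1.0, 0.5, 2.0]
mask = [0, 1, 1]

r = masked_minimal_perturbation(w, b, x, mask)
x_adv = [xi + ri for xi, ri in zip(x, r)]
```

The perturbed point lies on the decision boundary, so a small overshoot is needed to actually flip the label. For a nonlinear network, a DeepFool-style attack would repeat a step like this on a local linearization of the model.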

Scalable and Modular Robustness Analysis of Deep Neural Networks

Aug 31, 2021

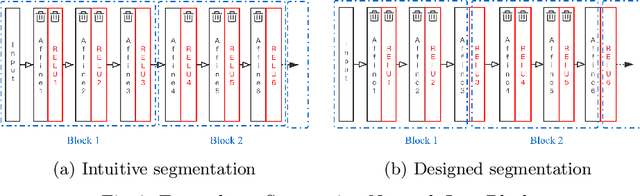

Abstract: As neural networks are trained to be deeper and larger, scalable neural network analyzers are urgently required. The main technical insight of our method is to analyze neural networks modularly by segmenting a network into blocks and conducting the analysis for each block. In particular, we propose the network block summarization technique to capture the behaviors within a network block using a block summary and leverage the summary to speed up the analysis process. We instantiate our method in the context of a CPU version of the state-of-the-art analyzer DeepPoly and name our system Bounded-Block Poly (BBPoly). We evaluate BBPoly extensively in various experimental settings. The experimental results indicate that our method yields precision comparable to DeepPoly but runs faster and requires fewer computational resources. For example, BBPoly can analyze very large neural networks such as SkipNet or ResNet, which contain up to one million neurons, in around 1 hour or less per input image, whereas DeepPoly can take as long as 40 hours to analyze one image.

VulDeePecker: A Deep Learning-Based System for Vulnerability Detection

Jan 05, 2018

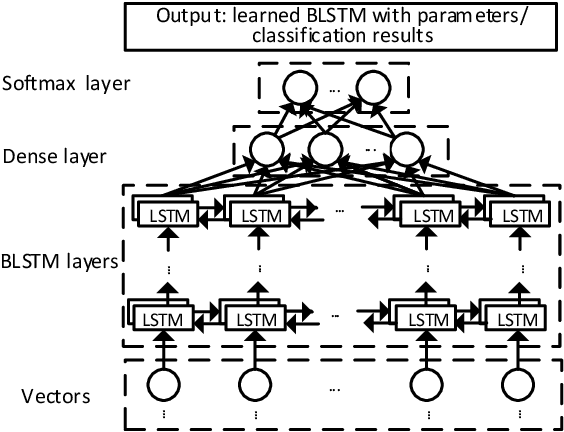

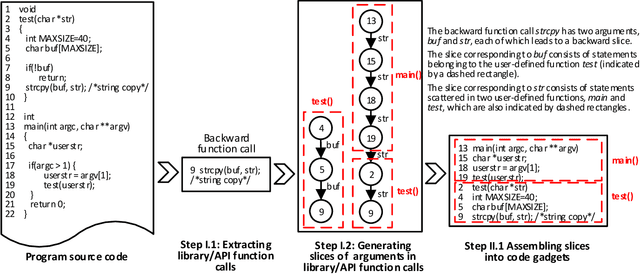

Abstract: The automatic detection of software vulnerabilities is an important research problem. However, existing solutions to this problem rely on human experts to define features and often miss many vulnerabilities (i.e., they incur a high false negative rate). In this paper, we initiate the study of using deep learning-based vulnerability detection to relieve human experts from the tedious and subjective task of manually defining features. Since deep learning is motivated to deal with problems that are very different from the problem of vulnerability detection, we need some guiding principles for applying deep learning to vulnerability detection. In particular, we need to find representations of software programs that are suitable for deep learning. For this purpose, we propose using code gadgets to represent programs and then transform them into vectors, where a code gadget is a number of (not necessarily consecutive) lines of code that are semantically related to each other. This leads to the design and implementation of a deep learning-based vulnerability detection system, called Vulnerability Deep Pecker (VulDeePecker). In order to evaluate VulDeePecker, we present the first vulnerability dataset for deep learning approaches. Experimental results show that VulDeePecker can achieve far fewer false negatives (with reasonable false positives) than other approaches. We further apply VulDeePecker to 3 software products (namely Xen, Seamonkey, and Libav) and detect 4 vulnerabilities, which are not reported in the National Vulnerability Database but were "silently" patched by the vendors when releasing later versions of these products; in contrast, these vulnerabilities are almost entirely missed by the other vulnerability detection systems we experimented with.
