Scalable and Modular Robustness Analysis of Deep Neural Networks

Paper and Code

Aug 31, 2021

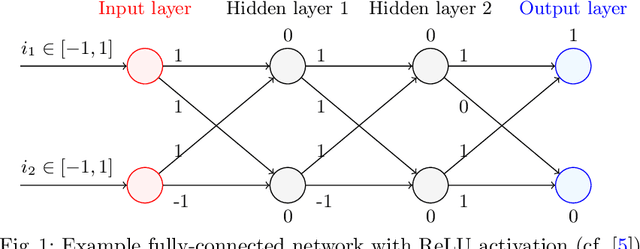

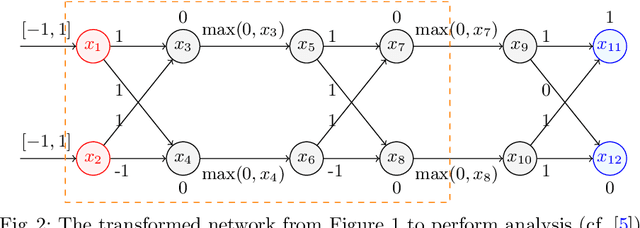

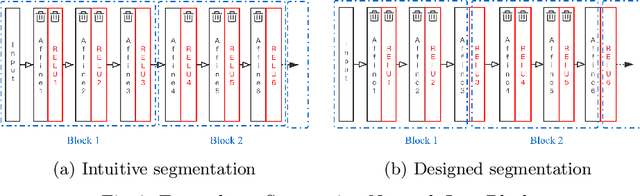

As neural networks are trained to be deeper and larger, the scalability of neural network analyzers is urgently required. The main technical insight of our method is modularly analyzing neural networks by segmenting a network into blocks and conduct the analysis for each block. In particular, we propose the network block summarization technique to capture the behaviors within a network block using a block summary and leverage the summary to speed up the analysis process. We instantiate our method in the context of a CPU-version of the state-of-the-art analyzer DeepPoly and name our system as Bounded-Block Poly (BBPoly). We evaluate BBPoly extensively on various experiment settings. The experimental result indicates that our method yields comparable precision as DeepPoly but runs faster and requires less computational resources. For example, BBPoly can analyze really large neural networks like SkipNet or ResNet which contain up to one million neurons in less than around 1 hour per input image, while DeepPoly needs to spend even 40 hours to analyze one image.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge