Ruiwei Wang

Expediting Neural Network Verification via Network Reduction

Aug 14, 2023

Abstract: A wide range of verification methods have been proposed to verify safety properties of deep neural networks, ensuring that the networks function correctly in critical applications. However, many well-known verification tools still struggle with complicated network architectures and large network sizes. In this work, we propose a network reduction technique as a pre-processing step prior to verification. The proposed method reduces neural networks by eliminating stable ReLU neurons and transforming the networks into sequential neural networks consisting of ReLU and Affine layers, which can be handled by most verification tools. We instantiate the reduction technique on state-of-the-art complete and incomplete verification tools, including alpha-beta-crown, VeriNet and PRIMA. Our experiments on a large set of benchmarks indicate that the proposed technique can significantly reduce neural networks and speed up existing verification tools. Furthermore, the experimental results show that network reduction can improve the availability of existing verification tools on many networks by reducing them to sequential neural networks.
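As a rough illustration of what "stable ReLU neurons" means, the sketch below bounds the pre-activations of one hidden layer with plain interval arithmetic over an input box, labels each neuron, and drops the stably inactive ones. It is a minimal sketch under stated assumptions, not the paper's algorithm: the function names (`interval_affine`, `classify_relu_neurons`, `drop_inactive`), the use of interval bounds, and the toy weights are all hypothetical, and the handling of stably active neurons (where ReLU acts as the identity) is left out because the abstract does not describe it.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def interval_affine(W: Matrix, b: Vector, lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """Bound W x + b over the box lo <= x <= hi using interval arithmetic."""
    out_lo, out_hi = [], []
    for row, bias in zip(W, b):
        l = h = bias
        for w, xl, xh in zip(row, lo, hi):
            # A positive weight maps the lower input bound to the lower output
            # bound; a negative weight swaps the roles of the two bounds.
            if w >= 0:
                l += w * xl
                h += w * xh
            else:
                l += w * xh
                h += w * xl
        out_lo.append(l)
        out_hi.append(h)
    return out_lo, out_hi


def classify_relu_neurons(pre_lo: Vector, pre_hi: Vector) -> List[str]:
    """Label each neuron from its pre-activation bounds."""
    labels = []
    for l, h in zip(pre_lo, pre_hi):
        if l >= 0:
            labels.append("active")    # ReLU behaves as the identity
        elif h <= 0:
            labels.append("inactive")  # ReLU always outputs 0
        else:
            labels.append("unstable")  # sign depends on the input
    return labels


def drop_inactive(W1: Matrix, b1: Vector, W2: Matrix, labels: List[str]):
    """Remove stably inactive hidden neurons from y = W2 relu(W1 x + b1) + b2."""
    keep = [i for i, lab in enumerate(labels) if lab != "inactive"]
    W1_r = [W1[i] for i in keep]
    b1_r = [b1[i] for i in keep]
    W2_r = [[row[i] for i in keep] for row in W2]
    return W1_r, b1_r, W2_r


# Toy layer (hypothetical weights) over the input box [-1, 1] x [-1, 1].
W1 = [[1.0, 1.0], [-1.0, -1.0], [1.0, -1.0]]
b1 = [3.0, -3.0, 0.0]
W2 = [[1.0, 2.0, 3.0]]

pre_lo, pre_hi = interval_affine(W1, b1, [-1.0, -1.0], [1.0, 1.0])
labels = classify_relu_neurons(pre_lo, pre_hi)
W1_r, b1_r, W2_r = drop_inactive(W1, b1, W2, labels)
```

Because a stably inactive neuron outputs zero for every input in the box, removing its row from the first layer and its column from the second leaves the network's output unchanged on that box. Tighter bounding methods would generally mark more neurons as stable than the interval bounds used here.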

Correlation Heuristics for Constraint Programming

May 24, 2018

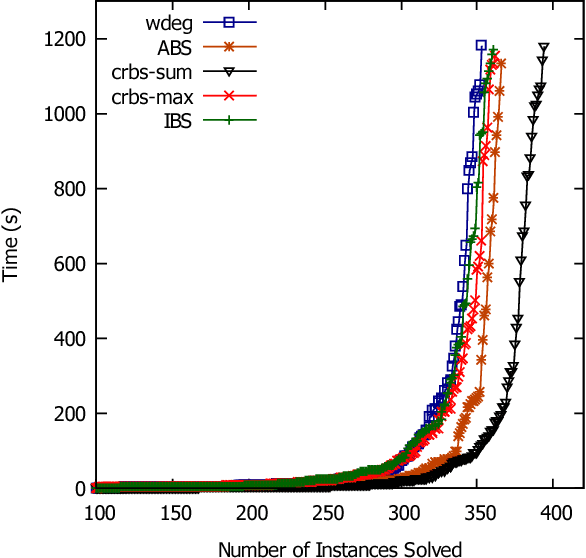

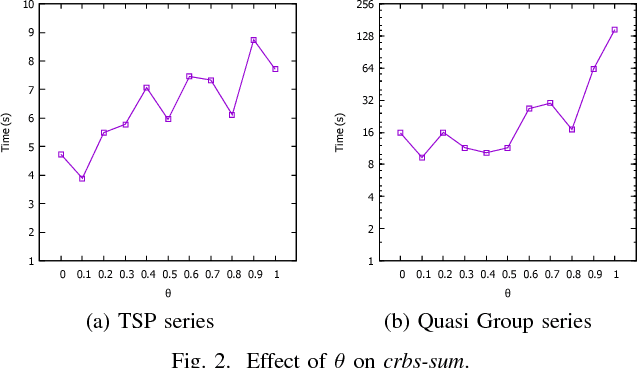

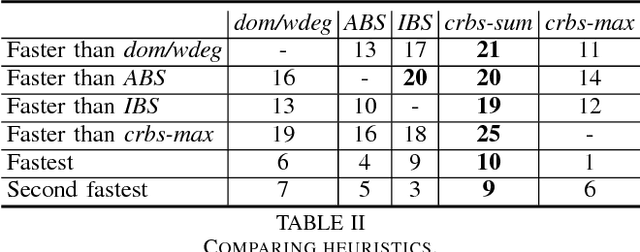

Abstract: Effective general-purpose search strategies are an important component of Constraint Programming. We introduce a new idea: using correlations between variables to guide search. Variable correlations are measured and maintained using domain changes during constraint propagation. We propose two variable heuristics based on the correlation matrix, crbs-sum and crbs-max. We evaluate our correlation heuristics against well-known heuristics, namely dom/wdeg, impact-based search and activity-based search. Experiments on a large set of benchmarks show that our correlation heuristics are competitive with the other heuristics, and can be the fastest on many series.
