Yusuf Brima

Robustness and Scalability Of Machine Learning for Imbalanced Clinical Data in Emergency and Critical Care

Dec 25, 2025

Abstract:Emergency and intensive care environments require predictive models that are both accurate and computationally efficient, yet clinical data in these settings are often severely imbalanced. Such skewness undermines model reliability, particularly for rare but clinically crucial outcomes, making robustness and scalability essential for real-world usage. In this paper, we systematically evaluate the robustness and scalability of classical machine learning models on imbalanced tabular data from MIMIC-IV-ED and eICU. Class imbalance was quantified using complementary metrics, and we compared the performance of tree-based methods, the state-of-the-art TabNet deep learning model, and a custom lightweight residual network. TabResNet was designed as a computationally efficient alternative to TabNet, replacing its complex attention mechanisms with a streamlined residual architecture to maintain representational capacity for real-time clinical use. All models were optimized via a Bayesian hyperparameter search and assessed on predictive performance, robustness to increasing imbalance, and computational scalability. Our results, on seven clinically vital predictive tasks, show that tree-based methods, particularly XGBoost, consistently achieved the most stable performance across imbalance levels and scaled efficiently with sample size. Deep tabular models degraded more sharply under imbalance and incurred higher computational costs, while TabResNet provided a lighter alternative to TabNet but did not surpass ensemble benchmarks. These findings indicate that in emergency and critical care, robustness to imbalance and computational scalability could outweigh architectural complexity. Tree-based ensemble methods currently offer the most practical and clinically feasible choice, equipping practitioners with a framework for selecting models suited to high-stakes, time-sensitive environments.
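
The abstract does not say which "complementary metrics" were used to quantify class imbalance. As a minimal sketch, assuming two common choices (the majority-to-minority imbalance ratio and the normalized Shannon entropy of the class distribution), the measurement could look like the following. The function names and the toy label vector are illustrative and not taken from the paper.

```python
import math
from collections import Counter


def imbalance_ratio(labels):
    """Majority-class count divided by minority-class count (1.0 means balanced)."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def normalized_entropy(labels):
    """Shannon entropy of the class distribution, scaled to [0, 1].

    1.0 means perfectly balanced classes; values near 0 mean severe imbalance.
    """
    counts = Counter(labels)
    n = len(labels)
    k = len(counts)
    if k < 2:
        return 0.0  # a single class carries no class-distribution entropy
    entropy = -sum((c / n) * math.log(c / n) for c in counts.values())
    return entropy / math.log(k)


# Hypothetical outcome vector: 95 negatives and 5 positives.
labels = [0] * 95 + [1] * 5
print(imbalance_ratio(labels))
print(normalized_entropy(labels))
```

The two numbers answer different questions: the ratio shows how rare the minority class is, while the entropy summarizes the whole distribution and extends naturally to multi-class tasks.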

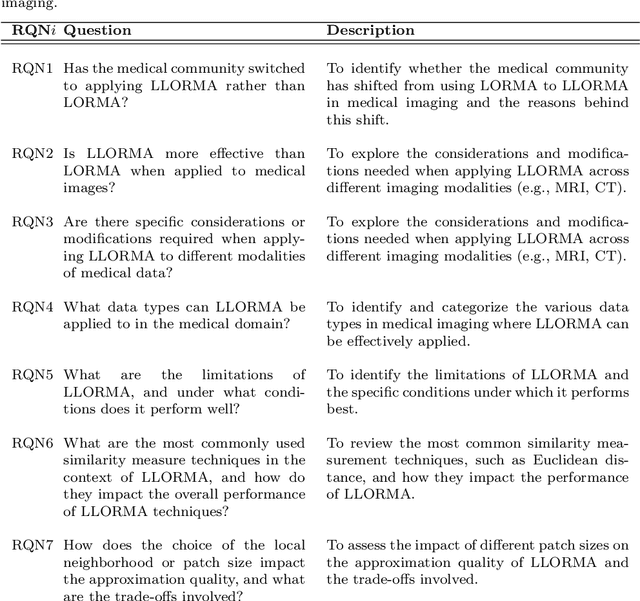

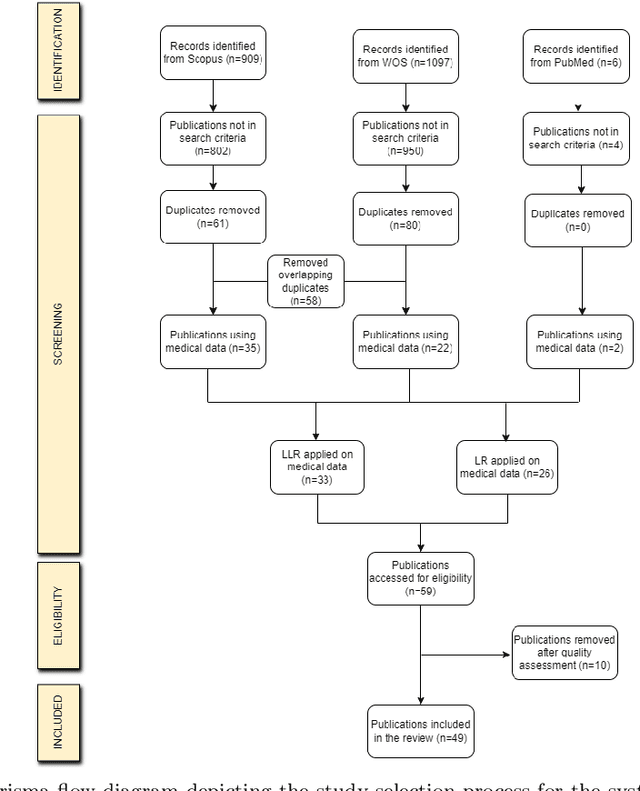

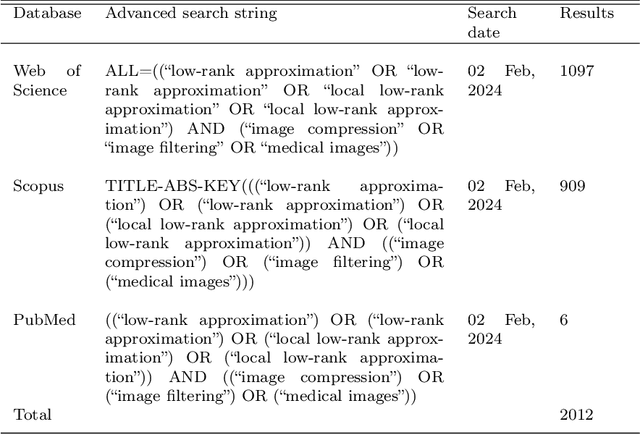

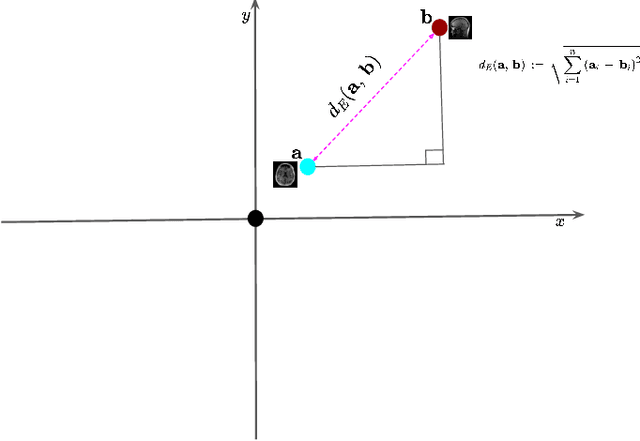

Advancing Low-Rank and Local Low-Rank Matrix Approximation in Medical Imaging: A Systematic Literature Review and Future Directions

Feb 21, 2024

Abstract:The large volume and complexity of medical imaging datasets are bottlenecks for storage, transmission, and processing. To tackle these challenges, the application of low-rank matrix approximation (LRMA) and its derivative, local LRMA (LLRMA) has demonstrated potential. This paper conducts a systematic literature review to showcase works applying LRMA and LLRMA in medical imaging. A detailed analysis of the literature identifies LRMA and LLRMA methods applied to various imaging modalities. This paper addresses the challenges and limitations associated with existing LRMA and LLRMA methods. We note a significant shift towards a preference for LLRMA in the medical imaging field since 2015, demonstrating its potential and effectiveness in capturing complex structures in medical data compared to LRMA. Acknowledging the limitations of shallow similarity methods used with LLRMA, we suggest advanced semantic image segmentation for similarity measure, explaining in detail how it can measure similar patches and their feasibility. We note that LRMA and LLRMA are mainly applied to unstructured medical data, and we propose extending their application to different medical data types, including structured and semi-structured. This paper also discusses how LRMA and LLRMA can be applied to regular data with missing entries and the impact of inaccuracies in predicting missing values and their effects. We discuss the impact of patch size and propose the use of random search (RS) to determine the optimal patch size. To enhance feasibility, a hybrid approach using Bayesian optimization and RS is proposed, which could improve the application of LRMA and LLRMA in medical imaging.
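
For readers unfamiliar with LRMA, the basic operation can be sketched with a truncated singular value decomposition, which gives the best rank-k approximation of a matrix in the Frobenius norm. This is a generic illustration under stated assumptions, not a method taken from the review: the `low_rank_approx` helper and the synthetic 64×64 "image" are hypothetical. The local variant (LLRMA) would apply the same step to groups of similar patches rather than to the whole matrix.

```python
import numpy as np


def low_rank_approx(matrix, rank):
    """Return the best rank-`rank` approximation of `matrix` via truncated SVD."""
    u, s, vt = np.linalg.svd(matrix, full_matrices=False)
    # Keep only the leading `rank` singular triplets.
    return (u[:, :rank] * s[:rank]) @ vt[:rank, :]


rng = np.random.default_rng(0)
# Synthetic stand-in for an image: a rank-2 matrix plus a little noise.
image = rng.normal(size=(64, 2)) @ rng.normal(size=(2, 64))
noisy = image + 0.01 * rng.normal(size=image.shape)

approx = low_rank_approx(noisy, rank=2)
rel_err = np.linalg.norm(noisy - approx) / np.linalg.norm(noisy)
print(f"relative error at rank 2: {rel_err:.4f}")
```

Storing the truncated factors instead of the full matrix is what yields the storage and transmission savings the abstract refers to.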

Learning Disentangled Audio Representations through Controlled Synthesis

Feb 16, 2024

Abstract:This paper tackles the scarcity of benchmarking data in disentangled auditory representation learning. We introduce SynTone, a synthetic dataset with explicit ground truth explanatory factors for evaluating disentanglement techniques. Benchmarking state-of-the-art methods on SynTone highlights its utility for method evaluation. Our results underscore strengths and limitations in audio disentanglement, motivating future research.

Learning Disentangled Speech Representations

Nov 04, 2023

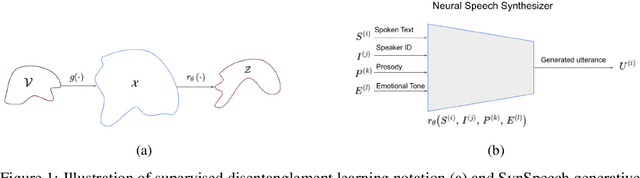

Abstract:Disentangled representation learning from speech remains limited despite its importance in many application domains. A key challenge is the lack of speech datasets with known generative factors to evaluate methods. This paper proposes SynSpeech: a novel synthetic speech dataset with ground truth factors enabling research on disentangling speech representations. We plan to present a comprehensive study evaluating supervised techniques using established supervised disentanglement metrics. This benchmark dataset and framework address the gap in the rigorous evaluation of state-of-the-art disentangled speech representation learning methods. Our findings will provide insights to advance this underexplored area and enable more robust speech representations.

Understanding Self-Supervised Learning of Speech Representation via Invariance and Redundancy Reduction

Sep 07, 2023

Abstract:The choice of the objective function is crucial in emerging high-quality representations from self-supervised learning. This paper investigates how different formulations of the Barlow Twins (BT) objective impact downstream task performance for speech data. We propose Modified Barlow Twins (MBT) with normalized latents to enforce scale-invariance and evaluate on speaker identification, gender recognition and keyword spotting tasks. Our results show MBT improves representation generalization over original BT, especially when fine-tuning with limited target data. This highlights the importance of designing objectives that encourage invariant and transferable representations. Our analysis provides insights into how the BT learning objective can be tailored to produce speech representations that excel when adapted to new downstream tasks. This study is an important step towards developing reusable self-supervised speech representations.
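
To make the objective concrete, here is a minimal NumPy sketch of the standard Barlow Twins loss: an invariance term that pushes the diagonal of the cross-correlation matrix toward 1, plus a redundancy-reduction term that pushes the off-diagonal entries toward 0. The abstract does not spell out how MBT normalizes latents, so the optional per-sample L2 normalization below (`l2_normalize`) is only an assumption about what that could mean, not the authors' formulation. The weight `lam` and the random embeddings are likewise illustrative.

```python
import numpy as np


def barlow_twins_loss(z1, z2, lam=5e-3, l2_normalize=False, eps=1e-8):
    """Barlow Twins loss for two batches of embeddings, each of shape (N, D)."""
    if l2_normalize:
        # ASSUMPTION: one possible reading of "normalized latents".
        z1 = z1 / (np.linalg.norm(z1, axis=1, keepdims=True) + eps)
        z2 = z2 / (np.linalg.norm(z2, axis=1, keepdims=True) + eps)
    # Standardize each embedding dimension across the batch.
    z1 = (z1 - z1.mean(axis=0)) / (z1.std(axis=0) + eps)
    z2 = (z2 - z2.mean(axis=0)) / (z2.std(axis=0) + eps)
    n = z1.shape[0]
    c = z1.T @ z2 / n  # (D, D) cross-correlation matrix
    diag = np.diag(c)
    invariance = np.sum((1.0 - diag) ** 2)
    redundancy = np.sum(c ** 2) - np.sum(diag ** 2)
    return invariance + lam * redundancy


rng = np.random.default_rng(0)
view_a = rng.normal(size=(256, 8))
view_b = rng.normal(size=(256, 8))  # unrelated to view_a

loss_same = barlow_twins_loss(view_a, view_a)  # identical views
loss_diff = barlow_twins_loss(view_a, view_b)  # independent views
loss_scaled = barlow_twins_loss(3.0 * view_a, 3.0 * view_a)
print(loss_same, loss_diff, loss_scaled)
```

Identical views give a loss near zero, independent views give a large invariance penalty, and rescaling the embeddings leaves the loss essentially unchanged, because the batch standardization already removes per-dimension scale.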

What do Deep Neural Networks Learn in Medical Images?

Aug 01, 2022

Abstract:Deep learning is increasingly gaining rapid adoption in healthcare to help improve patient outcomes. This is more so in medical image analysis which requires extensive training to gain the requisite expertise to become a trusted practitioner. However, while deep learning techniques have continued to provide state-of-the-art predictive performance, one of the primary challenges that stands to hinder this progress in healthcare is the opaque nature of the inference mechanism of these models. So, attribution has a vital role in building confidence in stakeholders for the predictions made by deep learning models to inform clinical decisions. This work seeks to answer the question: what do deep neural network models learn in medical images? In that light, we present a novel attribution framework using adaptive path-based gradient integration techniques. Results show a promising direction of building trust in domain experts to improve healthcare outcomes by allowing them to understand the input-prediction correlative structures, discover new bio-markers, and reveal potential model biases.

Deep Transfer Learning for Brain Magnetic Resonance Image Multi-class Classification

Jun 15, 2021

Abstract:Magnetic Resonance Imaging (MRI) is a principal diagnostic approach used in the field of radiology to create images of the anatomical and physiological structure of patients. MRI is the prevalent medical imaging practice to find abnormalities in soft tissues. Traditionally, MRI scans are analyzed by a radiologist to detect abnormalities in soft tissues, especially the brain. The process of interpreting a massive volume of patient MRI scans is laborious. Hence, the use of Machine Learning methodologies can aid in detecting abnormalities in soft tissues with considerable accuracy. In this research, we have curated a novel dataset and developed a framework that uses Deep Transfer Learning to perform multi-class classification of tumors in brain MRI images. In this paper, we adopted the Deep Residual Convolutional Neural Network (ResNet50) architecture for the experiments along with discriminative learning techniques to train the model. Using the novel dataset and two publicly available MRI brain datasets, this proposed approach attained a classification accuracy of 86.40% on the curated dataset, 93.80% on the Harvard Whole Brain Atlas dataset, and 97.05% accuracy on the School of Biomedical Engineering dataset. Results of our experiments demonstrate that our proposed framework for transfer learning is an effective method for brain tumor multi-classification tasks.
