Yuquan Le

SEBERTNets: Sequence Enhanced BERT Networks for Event Entity Extraction Tasks Oriented to the Finance Field

Jan 21, 2024

Abstract: Event extraction lies at the core of investment analysis and asset management in the financial field, and thus has received much attention. The 2019 China Conference on Knowledge Graph and Semantic Computing (CCKS) challenge set up an evaluation competition for the event entity extraction task oriented to the finance field. In this task, we mainly focus on how to extract event entities accurately and how to recall all the corresponding event entities effectively. In this paper, we propose a novel model, Sequence Enhanced BERT Networks (SEBERTNets for short), which inherits the advantages of BERT while capturing sequence semantic information. In addition, motivated by recommendation systems, we propose Hybrid Sequence Enhanced BERT Networks (HSEBERTNets for short), which use a multi-channel recall method to recall all the corresponding event entities. The experimental results show that, in the first stage, SEBERTNets achieves an F1 score of 0.905 and HSEBERTNets achieves an F1 score of 0.934, which demonstrates the effectiveness of our methods.

Building Robust Spoken Language Understanding by Cross Attention between Phoneme Sequence and ASR Hypothesis

Mar 22, 2022

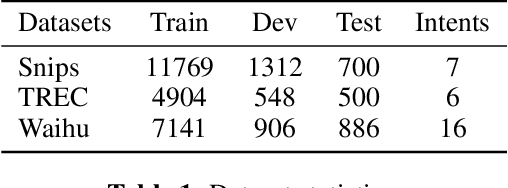

Abstract: Building Spoken Language Understanding (SLU) that is robust to Automatic Speech Recognition (ASR) errors is an essential issue for various voice-enabled virtual assistants. Considering that most ASR errors are caused by phonetic confusion between similar-sounding expressions, leveraging the phoneme sequence of speech can intuitively complement the ASR hypothesis and enhance the robustness of SLU. This paper proposes a novel model with Cross Attention for SLU (denoted as CASLU). The cross attention block is devised to capture the fine-grained interactions between phoneme and word embeddings, so that the joint representations capture the phonetic and semantic features of the input simultaneously and overcome ASR errors in downstream natural language understanding (NLU) tasks. Extensive experiments are conducted on three datasets, showing the effectiveness and competitiveness of our approach. Additionally, we validate the universality of CASLU and demonstrate its complementarity when combined with other robust SLU techniques.
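As a rough illustration of the cross attention idea mentioned in the abstract, the sketch below applies plain scaled dot-product attention in which word embeddings act as queries over phoneme embeddings. This is not the CASLU architecture: the function names, the toy embeddings, the choice of attention direction, and the concatenation step are all assumptions made for illustration, and the learned projection matrices a real model would use are omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product cross attention.

    Each query vector attends over all key vectors and returns a
    weighted average of the corresponding value vectors.
    """
    dim = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        attended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(attended)
    return outputs


# Hypothetical toy embeddings: 2 words and 3 phonemes, dimension 2.
word_emb = [[1.0, 0.0], [0.0, 1.0]]
phoneme_emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Words query the phoneme sequence (assumed direction).
phonetic_context = cross_attention(word_emb, phoneme_emb, phoneme_emb)

# Assumed fusion step: concatenate each word vector with its phonetic context.
joint = [w + c for w, c in zip(word_emb, phonetic_context)]
```

Each row of `joint` holds a word embedding followed by a phoneme-derived context vector, which is one simple way to obtain a representation carrying both semantic and phonetic features.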

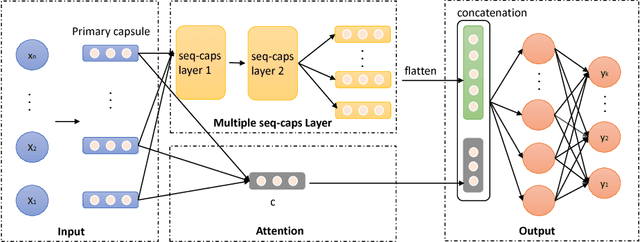

SECaps: A Sequence Enhanced Capsule Model for Charge Prediction

Oct 10, 2018

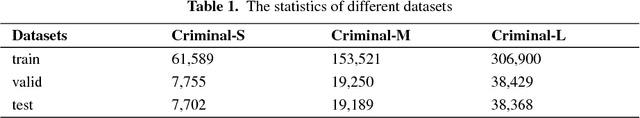

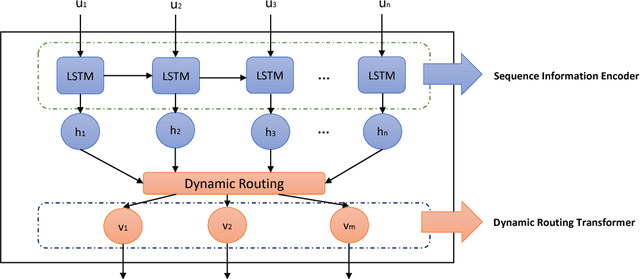

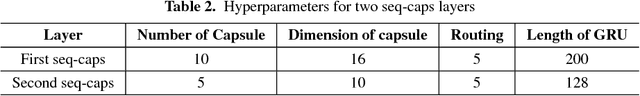

Abstract: Automatic charge prediction aims to predict appropriate final charges according to the fact descriptions for a given criminal case. Automatic charge prediction plays an important role in assisting judges and lawyers to improve the efficiency of legal decisions, and thus has received much attention. Nevertheless, most existing works on automatic charge prediction perform adequately on high-frequency charges but are not yet capable of predicting few-shot charges with limited cases. On the other hand, some works have shown the benefits of the capsule network, which is a powerful technique. This motivates us to propose a Sequence Enhanced Capsule model, dubbed the SECaps model, to relieve this problem. More specifically, we propose a new basic structure, the seq-caps layer, to enhance the capsule by taking sequence information into account. In addition, we construct our SECaps model by making use of the seq-caps layer. Compared with the state-of-the-art methods, our SECaps model achieves 4.5% and 6.4% F1 improvements on two real-world datasets, Criminal-S and Criminal-L, respectively. The experimental results consistently demonstrate the superiority and competitiveness of our proposed model.
