Congqing He

School of Computer Sciences, Universiti Sains Malaysia, Penang, Malaysia

ESNERA: Empirical and semantic named entity alignment for named entity dataset merging

Aug 09, 2025

Abstract:Named Entity Recognition (NER) is a fundamental task in natural language processing. It remains a research hotspot due to its wide applicability across domains. Although recent advances in deep learning have significantly improved NER performance, they rely heavily on large, high-quality annotated datasets. However, building these datasets is expensive and time-consuming, posing a major bottleneck for further research. Current dataset merging approaches mainly focus on strategies like manual label mapping or constructing label graphs, which lack interpretability and scalability. To address this, we propose an automatic label alignment method based on label similarity. The method combines empirical and semantic similarities, using a greedy pairwise merging strategy to unify label spaces across different datasets. Experiments are conducted in two stages: first, merging three existing NER datasets into a unified corpus with minimal impact on NER performance; second, integrating this corpus with a small-scale, self-built dataset in the financial domain. The results show that our method enables effective dataset merging and enhances NER performance in the low-resource financial domain. This study presents an efficient, interpretable, and scalable solution for integrating multi-source NER corpora.
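The greedy pairwise merging idea in the abstract above can be illustrated with a minimal sketch. The abstract does not say how the empirical and semantic similarities are computed or combined, so everything here is an assumption made for illustration: the weighted sum with `alpha`, the stopping `threshold`, the average-linkage score between label groups, and the function names `combined_similarity` and `greedy_merge`.

```python
from itertools import combinations


def combined_similarity(a, b, empirical, semantic, alpha=0.5):
    """Mix two precomputed similarity scores for a label pair.

    The weighted sum and the alpha weight are assumptions; the
    abstract only says the two similarities are combined.
    """
    key = frozenset((a, b))
    return alpha * empirical.get(key, 0.0) + (1 - alpha) * semantic.get(key, 0.0)


def greedy_merge(labels, empirical, semantic, threshold=0.6, alpha=0.5):
    """Greedily merge the most similar pair of label groups.

    Stops when no pair of groups reaches the (assumed) threshold.
    Returns a list of frozensets, one per unified label.
    """
    groups = [frozenset([label]) for label in labels]

    def group_similarity(g1, g2):
        # Average linkage over member labels (an assumed choice).
        scores = [
            combined_similarity(a, b, empirical, semantic, alpha)
            for a in g1
            for b in g2
        ]
        return sum(scores) / len(scores)

    while len(groups) > 1:
        best = max(combinations(groups, 2), key=lambda pair: group_similarity(*pair))
        if group_similarity(*best) < threshold:
            break
        # Replace the two merged groups with their union.
        groups = [g for g in groups if g not in best] + [best[0] | best[1]]
    return groups
```

Each similarity table is a plain dictionary keyed by `frozenset((label_a, label_b))`, with missing pairs treated as zero similarity. The result lists which original labels end up sharing a unified label.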

Can GPT-O1 Kill All Bugs? An Evaluation of GPT-Family LLMs on QuixBugs

Sep 17, 2024

Abstract:LLMs have long demonstrated remarkable effectiveness in automatic program repair (APR), with OpenAI's ChatGPT being one of the most widely used models in this domain. Through continuous iterations and upgrades of GPT-family models, their performance in fixing bugs has already reached state-of-the-art levels. However, there are few works comparing the effectiveness and variations of different versions of GPT-family models on APR. In this work, inspired by the recent public release of the GPT-o1 models, we conduct the first study to compare the effectiveness of different versions of the GPT-family models in APR. We evaluate the performance of the latest version of the GPT-family models (i.e., O1-preview and O1-mini), GPT-4o, and the historical version of ChatGPT on APR. We conduct an empirical study of the four GPT-family models against other LLMs and APR techniques on the QuixBugs benchmark from multiple evaluation perspectives, including repair success rate, repair cost, response length, and behavior patterns. The results demonstrate that O1's repair capability exceeds that of prior GPT-family models, successfully fixing all 40 bugs in the benchmark. Our work can serve as a foundation for further in-depth exploration of the applications of GPT-family models in APR.

SEBERTNets: Sequence Enhanced BERT Networks for Event Entity Extraction Tasks Oriented to the Finance Field

Jan 21, 2024

Abstract:Event extraction lies at the core of investment analysis and asset management in the financial field, and thus has received much attention. The 2019 China Conference on Knowledge Graph and Semantic Computing (CCKS) challenge sets up an evaluation competition for the event entity extraction task oriented to the finance field. In this task, we mainly focus on how to extract the event entity accurately and recall all the corresponding event entities effectively. In this paper, we propose a novel model, Sequence Enhanced BERT Networks (SEBERTNets for short), which inherits the advantages of BERT while capturing sequence semantic information. In addition, motivated by recommendation systems, we propose Hybrid Sequence Enhanced BERT Networks (HSEBERTNets for short), which uses a multi-channel recall method to recall all the corresponding event entities. The experimental results show that the F1 score of SEBERTNets is 0.905 in the first stage, and the F1 score of HSEBERTNets is 0.934 in the first stage, which demonstrates the effectiveness of our methods.

A Novel Sequence Tagging Framework for Consumer Event-Cause Extraction

Oct 28, 2021

Abstract:Consumer Event-Cause Extraction, a task that aims to extract the potential causes behind certain events in text, has gained much attention in recent years due to its wide applications. The ICDM 2020 conference sets up an evaluation competition that aims to extract events and the causes of the extracted events with a specified subject (a brand or product). In this task, we mainly focus on how to construct an end-to-end model and extract multiple event types and event-causes simultaneously. To this end, we introduce a fresh perspective to revisit the relational event-cause extraction task and propose a novel sequence tagging framework, instead of extracting event types and event-causes separately. Experiments show our framework outperforms baseline methods even when its encoder module uses an initialized pre-trained BERT encoder, showing the power of the new tagging framework. In this competition, our team achieved 1st place on the first-stage leaderboard and 3rd place on the final-stage leaderboard.

SECaps: A Sequence Enhanced Capsule Model for Charge Prediction

Oct 10, 2018

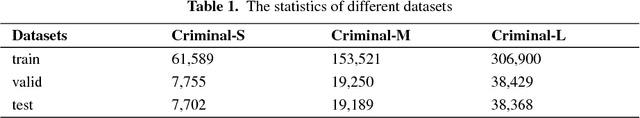

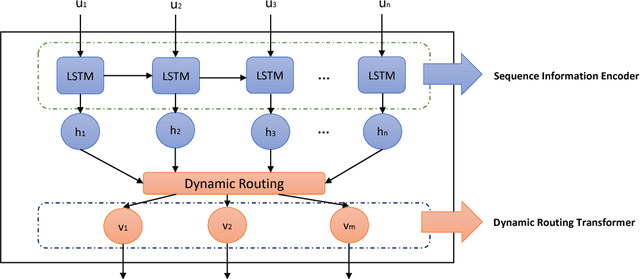

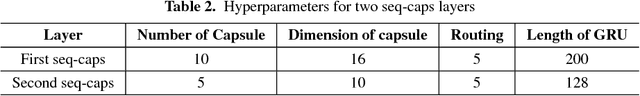

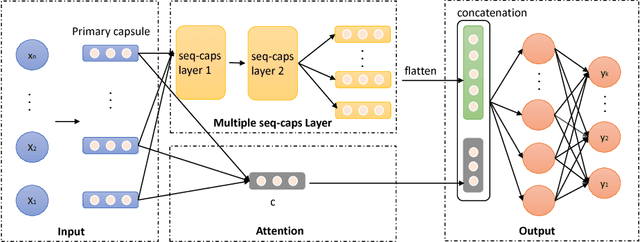

Abstract:Automatic charge prediction aims to predict appropriate final charges according to the fact descriptions for a given criminal case. Automatic charge prediction plays an important role in assisting judges and lawyers to improve the efficiency of legal decisions, and thus has received much attention. Nevertheless, most existing works on automatic charge prediction perform adequately on high-frequency charges but are not yet capable of predicting few-shot charges with limited cases. On the other hand, some works have shown the benefits of the capsule network, which is a powerful technique. This motivates us to propose a Sequence Enhanced Capsule model, dubbed the SECaps model, to relieve this problem. More specifically, we propose a new basic structure, the seq-caps layer, to enhance the capsule by taking sequence information into account. In addition, we construct our SECaps model by making use of the seq-caps layer. Compared with the state-of-the-art methods, our SECaps model achieves 4.5% and 6.4% F1 improvement on two real-world datasets, Criminal-S and Criminal-L, respectively. The experimental results consistently demonstrate the superiority and competitiveness of our proposed model.
