Yunjie Xu

VQ-NeRV: A Vector Quantized Neural Representation for Videos

Mar 19, 2024

Abstract: Implicit neural representations (INRs) excel at encoding videos within neural networks and show promise in computer vision tasks such as video compression and denoising. However, INR-based approaches reconstruct video frames from content-agnostic embeddings, which hampers their efficacy in video frame regression and restricts their generalization ability for video interpolation. To address these deficiencies, Hybrid Neural Representation for Videos (HNeRV) was introduced with content-adaptive embeddings. Nevertheless, HNeRV's compression ratios remain relatively low because it does not leverage the network's shallow features or inter-frame residual information. In this work, we introduce an advanced U-shaped architecture, Vector Quantized-NeRV (VQ-NeRV), which integrates a novel component, the VQ-NeRV Block. This block uses a codebook mechanism to discretize the network's shallow residual features and inter-frame residual information. The approach is particularly advantageous in video compression, as it yields a smaller size than quantized features. Furthermore, we introduce an original codebook optimization technique, termed shallow codebook optimization, designed to improve the utility and efficiency of the codebook. Experimental evaluations indicate that VQ-NeRV outperforms HNeRV on video regression tasks, delivering superior reconstruction quality (an increase of 1-2 dB in Peak Signal-to-Noise Ratio (PSNR)), better bits-per-pixel (bpp) efficiency, and improved video inpainting outcomes.
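As a rough illustration of the codebook idea mentioned in the abstract, the sketch below maps each feature vector to its nearest codebook entry and stores only the entry's index. It also includes a plain PSNR helper, since PSNR is the quality metric the abstract reports. This is a generic vector quantization sketch, not the VQ-NeRV Block itself: the function names (`nearest_code`, `quantize`, `psnr`), the squared-L2 distance, and the toy codebook are all assumptions made for illustration.

```python
import math


def nearest_code(vector, codebook):
    """Return the index of the codebook entry closest to `vector` (squared L2)."""
    best_index, best_dist = 0, math.inf
    for index, code in enumerate(codebook):
        dist = sum((v - c) ** 2 for v, c in zip(vector, code))
        if dist < best_dist:
            best_index, best_dist = index, dist
    return best_index


def quantize(features, codebook):
    """Replace each feature vector by its nearest codebook entry.

    Returns the integer indices (what a codec would store) and the
    corresponding quantized vectors (what a decoder would reconstruct).
    """
    indices = [nearest_code(f, codebook) for f in features]
    quantized = [codebook[i] for i in indices]
    return indices, quantized


def psnr(reference, reconstruction, peak=1.0):
    """Peak Signal-to-Noise Ratio in dB for two equal-length flat sequences."""
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstruction)) / len(reference)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


# Hypothetical toy data: three 2-D feature vectors and a three-entry codebook.
codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
features = [[0.1, -0.1], [0.9, 0.8], [0.2, 0.9]]
indices, quantized = quantize(features, codebook)
```

Storing `indices` instead of the feature values is what makes a codebook attractive for compression: each vector costs roughly log2(codebook size) bits, plus the one-time cost of the codebook.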

TransBoost: A Boosting-Tree Kernel Transfer Learning Algorithm for Improving Financial Inclusion

Dec 16, 2021

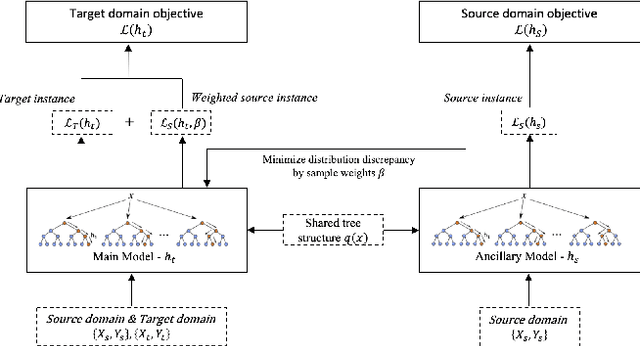

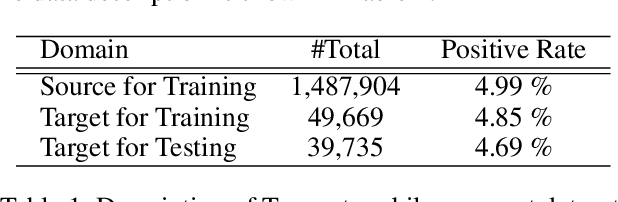

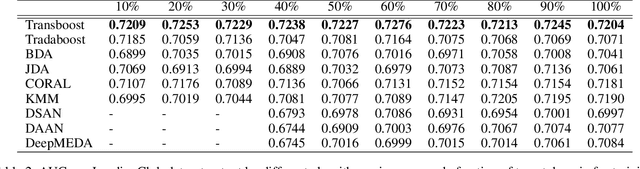

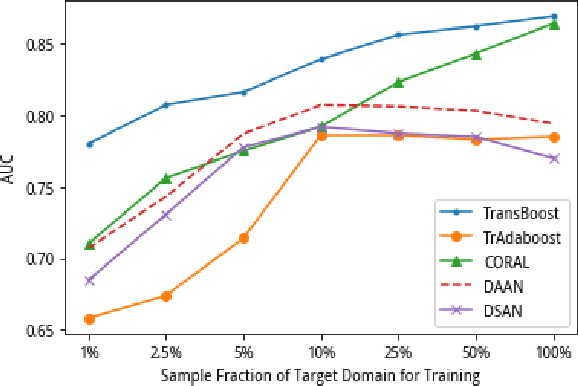

Abstract: The growth of mobile and financial technologies has extended a wide range of financial products to a broader population, which advances financial inclusion and brings the non-trivial social benefit of reducing financial inequality. However, technical challenges in individual financial risk evaluation impede further progress: new users have distinct feature distributions and limited credit histories, and newly entered companies lack experience in handling complex data and obtaining accurate labels. To tackle these challenges, this paper develops a novel transfer learning algorithm, TransBoost, that combines the merits of tree-based models and kernel methods. TransBoost is designed with a parallel tree structure and an efficient weight-updating mechanism with a theoretical guarantee, which enables it to handle real-world data with high-dimensional, sparse features in $O(n)$ time complexity. We conduct extensive experiments on two public datasets and a unique large-scale dataset from Tencent Mobile Payment. The results show that TransBoost outperforms other state-of-the-art benchmark transfer learning algorithms in prediction accuracy with superior efficiency, shows stronger robustness to data sparsity, and provides meaningful model interpretation. Moreover, given a financial risk level, TransBoost enables financial service providers to serve the largest number of users, including those who would otherwise be excluded by other algorithms. That is, TransBoost improves financial inclusion.
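The closing claim, serving the largest number of users at a given financial risk level, can be made concrete with a small evaluation sketch. The code below is not TransBoost and is not taken from the paper. It assumes a hypothetical setup in which any model has already produced a risk score per user and default labels are observed, and it finds the largest group of lowest-risk users whose observed default rate stays within a target. The function name, arguments, and toy data are illustrative assumptions.

```python
def max_users_at_risk_level(scores, defaults, max_default_rate):
    """Largest number of lowest-risk users whose default rate is within the target.

    scores: predicted risk per user (higher means riskier), from any model.
    defaults: observed 0/1 default labels aligned with `scores`.
    max_default_rate: tolerated fraction of defaults among approved users.
    Tied scores are ordered arbitrarily in this simplified sketch.
    """
    ranked = sorted(zip(scores, defaults), key=lambda pair: pair[0])
    best_count, bad = 0, 0
    for count, (_, label) in enumerate(ranked, start=1):
        bad += label
        if bad / count <= max_default_rate:
            best_count = count
    return best_count


# Hypothetical toy data: five users scored by some risk model.
scores = [0.1, 0.2, 0.3, 0.4, 0.5]
defaults = [0, 0, 1, 0, 1]
approved = max_users_at_risk_level(scores, defaults, max_default_rate=0.25)
```

Under this criterion, a model that ranks users more accurately lets a provider approve more of them at the same risk level, which is one way to read the abstract's link between prediction quality and inclusion.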
