Yunhao Gao

A Spatial-Spectral-Frequency Interactive Network for Multimodal Remote Sensing Classification

Oct 06, 2025

Abstract:Deep learning-based methods have achieved significant success in remote sensing Earth observation data analysis. Numerous feature fusion techniques address multimodal remote sensing image classification by integrating global and local features. However, these techniques often struggle to extract structural and detail features from heterogeneous and redundant multimodal images. To bring frequency domain learning to the modeling of key, sparse detail features, this paper introduces the spatial-spectral-frequency interaction network (S$^2$Fin), which integrates pairwise fusion modules across the spatial, spectral, and frequency domains. Specifically, we propose a high-frequency sparse enhancement transformer that employs sparse spatial-spectral attention to optimize the parameters of the high-frequency filter. Subsequently, a two-level spatial-frequency fusion strategy is introduced, comprising an adaptive frequency channel module that fuses low-frequency structures with enhanced high-frequency details, and a high-frequency resonance mask that emphasizes sharp edges via phase similarity. In addition, a spatial-spectral attention fusion module further enhances feature extraction at intermediate layers of the network. Experiments on four benchmark multimodal datasets with limited labeled data demonstrate that S$^2$Fin achieves superior classification performance, outperforming state-of-the-art methods. The code is available at https://github.com/HaoLiu-XDU/SSFin.
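The abstract distinguishes low-frequency structure from high-frequency detail. As a rough illustration only, the sketch below separates a single-band image into those two parts with a fixed circular mask in the Fourier domain. The function name `split_frequency_bands`, the `radius` parameter, and the hard mask are assumptions made for this example; the paper describes a learned high-frequency filter, which this does not reproduce.

```python
import numpy as np


def split_frequency_bands(image, radius):
    """Split a 2-D array into low- and high-frequency parts.

    Hypothetical helper: a fixed circular mask stands in for the
    learned filter described in the abstract.
    """
    h, w = image.shape
    # Centre the zero-frequency component at index (h // 2, w // 2).
    spectrum = np.fft.fftshift(np.fft.fft2(image))

    # Distance of every frequency bin from the centred DC component.
    yy, xx = np.ogrid[:h, :w]
    dist = np.sqrt((yy - h // 2) ** 2 + (xx - w // 2) ** 2)
    low_mask = dist <= radius

    # Invert each masked spectrum back to the spatial domain.
    low = np.fft.ifft2(np.fft.ifftshift(spectrum * low_mask)).real
    high = np.fft.ifft2(np.fft.ifftshift(spectrum * ~low_mask)).real
    return low, high


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.random((8, 8))
    low, high = split_frequency_bands(img, radius=2)
    # The two parts partition the spectrum, so they add back to the input.
    print(np.allclose(low + high, img))
```

Because the two masks are complementary, the low and high parts always sum back to the input, which makes the decomposition easy to sanity-check before any learned enhancement is applied to the high-frequency branch.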

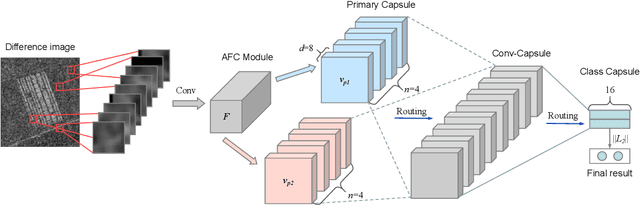

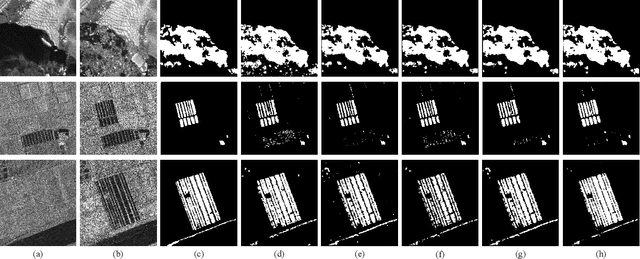

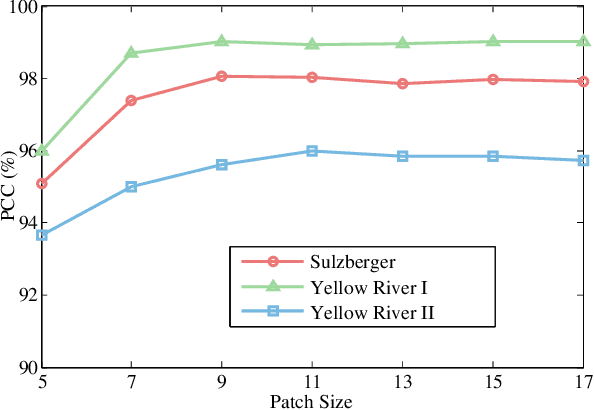

SAR Image Change Detection Based on Multiscale Capsule Network

Jan 22, 2022

Abstract:Traditional synthetic aperture radar (SAR) image change detection methods based on convolutional neural networks (CNNs) face the challenges of speckle noise and deformation sensitivity. To mitigate these issues, we propose a Multiscale Capsule Network (Ms-CapsNet) to extract the discriminative information between the changed and unchanged pixels. On the one hand, a multiscale capsule module is employed to exploit the spatial relationship of features, so that equivariant properties can be achieved by aggregating the features from different positions. On the other hand, an adaptive fusion convolution (AFC) module is designed for the proposed Ms-CapsNet, which captures higher-level semantic features for the primary capsules. Features extracted by the AFC module significantly improve the robustness to speckle noise. The effectiveness of the proposed Ms-CapsNet is verified on three real SAR datasets. Comparison experiments with four state-of-the-art methods demonstrate the efficiency of the proposed method. Our codes are available at https://github.com/summitgao/SAR_CD_MS_CapsNet.

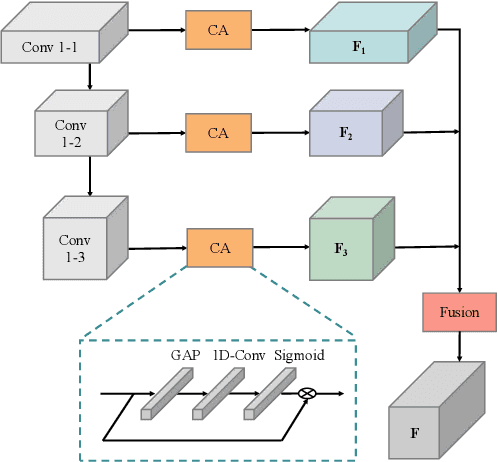

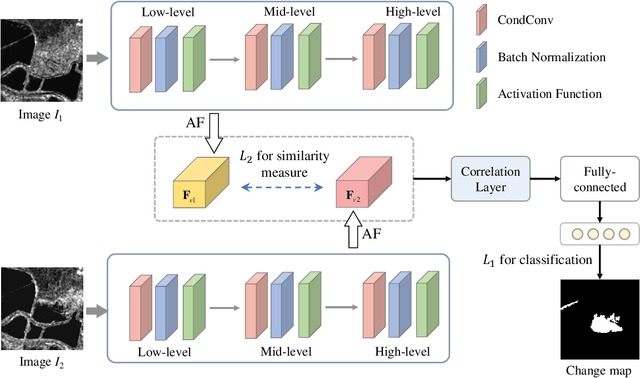

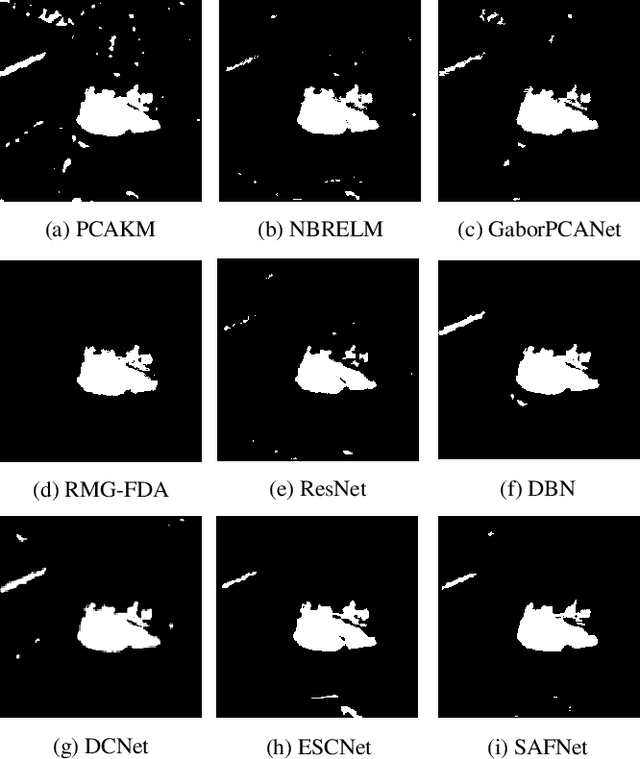

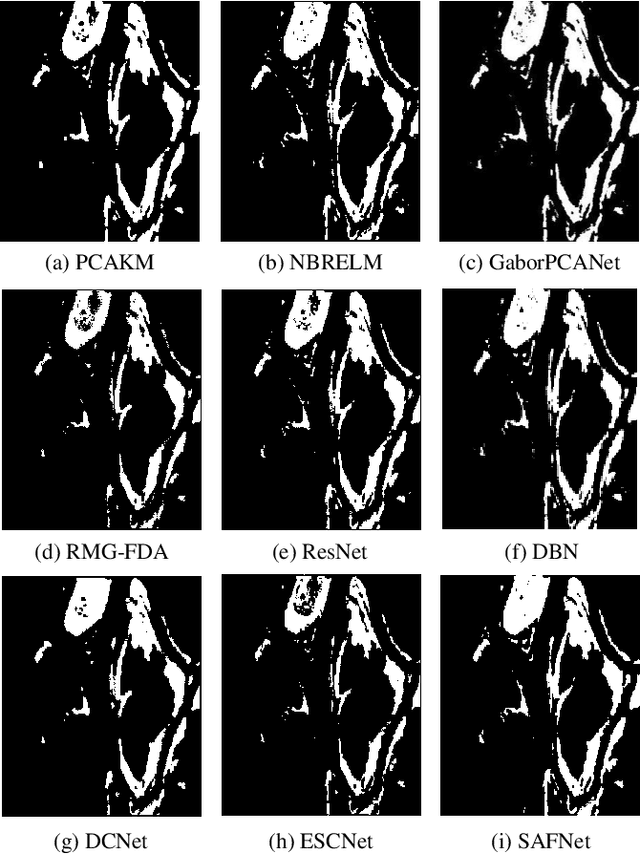

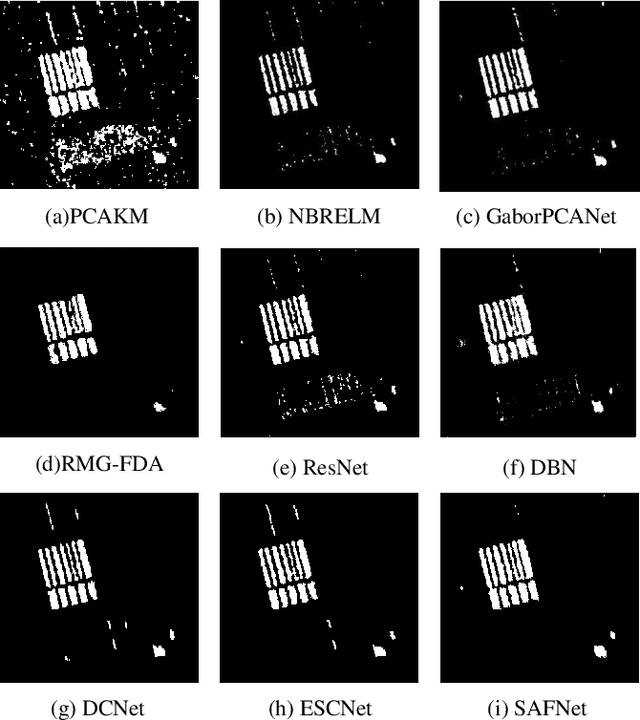

Synthetic Aperture Radar Image Change Detection via Siamese Adaptive Fusion Network

Oct 18, 2021

Abstract:Synthetic aperture radar (SAR) image change detection is a critical yet challenging task in the field of remote sensing image analysis. The task is non-trivial for two reasons. First, the intrinsic speckle noise of SAR images inevitably degrades the neural network because of error gradient accumulation. Second, the correlation among various levels or scales of feature maps is difficult to capture through summation or concatenation. To this end, we propose a siamese adaptive fusion network for SAR image change detection. More specifically, a two-branch CNN is utilized to extract high-level semantic features of multitemporal SAR images. In addition, an adaptive fusion module is designed to adaptively combine multiscale responses in convolutional layers. The complementary information is thereby exploited, and feature learning in change detection is further improved. Moreover, a correlation layer is designed to further explore the correlation between multitemporal images. The resulting robust feature representation is then used for classification through a fully-connected layer with softmax. Experimental results on four real SAR datasets demonstrate that the proposed method exhibits superior performance against several state-of-the-art methods. Our codes are available at https://github.com/summitgao/SAR_CD_SAFNet.
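The abstract contrasts adaptive fusion with plain summation or concatenation of multiscale responses. One common way to realize such a fusion, shown here purely as an assumed sketch and not as the authors' module, is to weight each scale's feature map per channel with a softmax over globally pooled descriptors. The name `adaptive_fuse` and the pooling-based weighting are hypothetical choices for this example.

```python
import numpy as np


def adaptive_fuse(branches):
    """Fuse same-shaped (C, H, W) feature maps with per-channel softmax weights.

    Hypothetical sketch: weights come from global average pooling of
    each branch, not from the learned layers a real network would use.
    """
    stacked = np.stack(branches)                 # (B, C, H, W)
    descriptors = stacked.mean(axis=(2, 3))      # (B, C) global average pool

    # Numerically stable softmax across the branch axis.
    shifted = descriptors - descriptors.max(axis=0, keepdims=True)
    weights = np.exp(shifted)
    weights /= weights.sum(axis=0, keepdims=True)

    # Weighted sum over branches instead of a plain sum or concatenation.
    fused = (weights[:, :, None, None] * stacked).sum(axis=0)
    return fused, weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Stand-ins for responses from 3x3, 5x5, and 7x7 convolutions.
    feats = [rng.random((4, 6, 6)) for _ in range(3)]
    fused, weights = adaptive_fuse(feats)
    print(fused.shape, weights.sum(axis=0))
```

Since the weights for each channel sum to one across branches, the fused map is a convex combination of the inputs, so a branch with a stronger pooled response contributes more without changing the overall feature scale.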

Hyperspectral and Multispectral Classification for Coastal Wetland Using Depthwise Feature Interaction Network

Jul 12, 2021

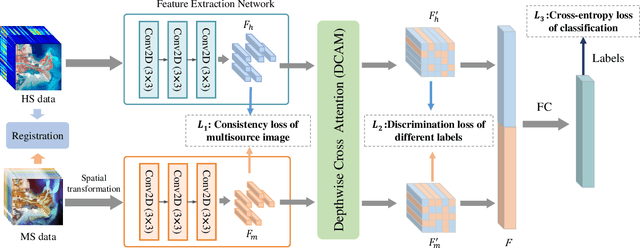

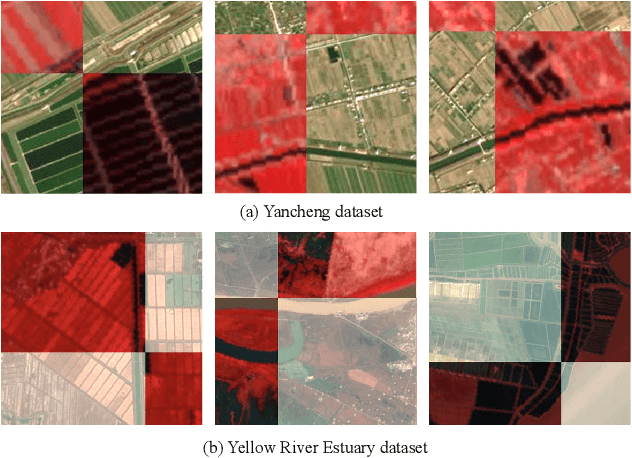

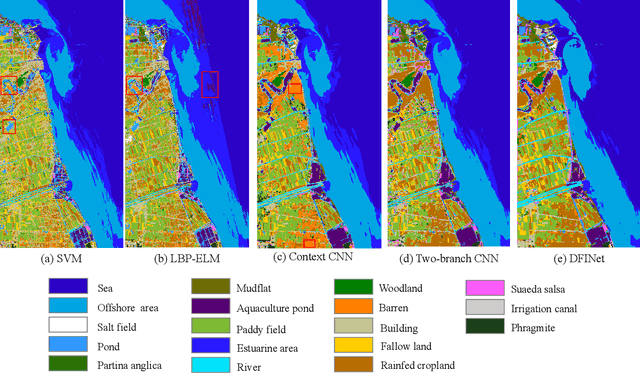

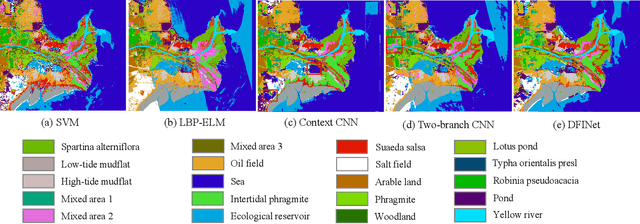

Abstract:The monitoring of coastal wetlands is of great importance to the protection of marine and terrestrial ecosystems. However, due to the complex environment, severe vegetation mixture, and difficulty of access, it is impossible to accurately classify coastal wetlands and identify their species with traditional classifiers. Although multisource remote sensing data have been integrated to enhance performance, acquiring and exploiting the complementary merits of multisource data remains challenging. In this paper, the Depthwise Feature Interaction Network (DFINet) is proposed for wetland classification. A depthwise cross attention module is designed to extract self-correlation and cross-correlation from multisource feature pairs. In this way, meaningful complementary information is emphasized for classification. DFINet is optimized by coordinating consistency loss, discrimination loss, and classification loss. Accordingly, DFINet reaches the standard solution-space under the regularity of loss functions, while the spatial consistency and feature discrimination are preserved. Comprehensive experimental results on two hyperspectral and multispectral wetland datasets demonstrate that the proposed DFINet outperforms other competitive methods in terms of overall accuracy.
