Yumeng Xue

Implicit Multidimensional Projection of Local Subspaces

Sep 07, 2020

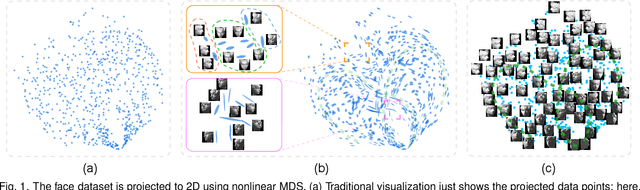

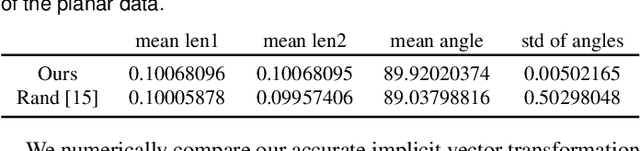

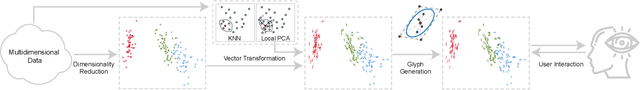

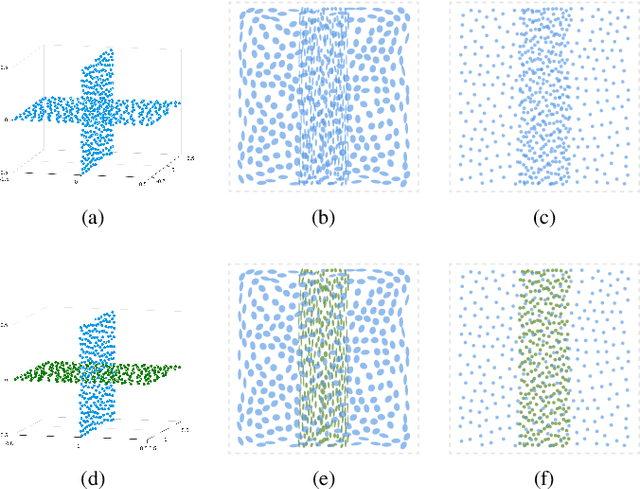

Abstract: We propose a visualization method to understand the effect of multidimensional projection on local subspaces, using implicit function differentiation. Here, we understand the local subspace as the multidimensional local neighborhood of data points. Existing methods focus on the projection of multidimensional data points and ignore the neighborhood information. Our method analyzes the shape and directional information of the local subspace to gain more insight into the global structure of the data through the perception of local structures. Local subspaces are fitted by multidimensional ellipses that are spanned by basis vectors. An accurate and efficient vector transformation method is proposed based on analytical differentiation of multidimensional projections formulated as implicit functions. The results are visualized as glyphs and analyzed using a full set of specifically designed interactions supported in our efficient web-based visualization tool. The usefulness of our method is demonstrated using various multi- and high-dimensional benchmark datasets. Our implicit differentiation vector transformation is evaluated through numerical comparisons; the overall method is evaluated through exploration examples and use cases.
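To make the idea of fitting a local subspace with an ellipse spanned by basis vectors more concrete, here is a minimal sketch. It is not the authors' implementation: the abstract does not say how the ellipses are fitted, so this example assumes a plain PCA of the k nearest neighbors, and the function name `fit_local_ellipsoid` and the parameter `k` are hypothetical.

```python
import numpy as np


def fit_local_ellipsoid(points, index, k=10):
    """Fit an ellipsoid to the neighborhood of points[index].

    Assumption: the neighborhood is the k nearest neighbors (Euclidean)
    plus the point itself, and the ellipsoid comes from PCA of that
    neighborhood. Returns (center, axes); each row of `axes` is a unit
    principal direction scaled by the standard deviation along it.
    """
    points = np.asarray(points, dtype=float)

    # Distances from the query point to every point in the dataset.
    dists = np.linalg.norm(points - points[index], axis=1)

    # The k nearest neighbors plus the query point itself (distance 0).
    neighbor_ids = np.argsort(dists)[: k + 1]
    neighborhood = points[neighbor_ids]

    # Center and covariance of the local neighborhood.
    center = neighborhood.mean(axis=0)
    cov = np.cov(neighborhood, rowvar=False)

    # Eigen-decomposition of the symmetric covariance matrix;
    # eigh returns eigenvalues in ascending order, so reverse them.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]
    eigvals = np.clip(eigvals[order], 0.0, None)  # guard tiny negatives
    eigvecs = eigvecs[:, order]

    # Basis vectors spanning the ellipsoid, longest axis first.
    axes = (eigvecs * np.sqrt(eigvals)).T
    return center, axes
```

In a pipeline of the kind the abstract describes, basis vectors such as the rows of `axes` would then be carried into the projected space by the vector transformation; that step is not sketched here.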

Active Transfer Learning Network: A Unified Deep Joint Spectral-Spatial Feature Learning Model For Hyperspectral Image Classification

Apr 04, 2019

Abstract: Deep learning has recently attracted significant attention in the field of hyperspectral image (HSI) classification. However, the construction of an efficient deep neural network (DNN) mostly relies on a large number of labeled samples being available. To address this problem, this paper proposes a unified deep network, combined with active transfer learning, that can be well trained for HSI classification using only minimally labeled training data. More specifically, deep joint spectral-spatial features are first extracted through hierarchical stacked sparse autoencoder (SSAE) networks. Active transfer learning is then exploited to transfer the pre-trained SSAE network and the limited training samples from the source domain to the target domain, where the SSAE network is subsequently fine-tuned using the limited labeled samples selected from both the source and target domains by corresponding active learning strategies. The advantages of our proposed method are threefold: 1) the network can be effectively trained using only limited labeled samples with the help of novel active learning strategies; 2) the network is flexible and scalable enough to function across various transfer situations, including cross-dataset and intra-image; 3) the learned deep joint spectral-spatial feature representation is more generic and robust than many other joint spectral-spatial feature representations. Extensive comparative evaluations demonstrate that our proposed method significantly outperforms many state-of-the-art approaches, including both traditional and deep network-based methods, on three popular datasets.
