Yulin Jian

Hunyuan-Game: Industrial-grade Intelligent Game Creation Model

May 20, 2025

Abstract: Intelligent game creation represents a transformative advancement in game development, utilizing generative artificial intelligence to dynamically generate and enhance game content. Despite notable progress in generative models, the comprehensive synthesis of high-quality game assets, including both images and videos, remains a challenging frontier. To create high-fidelity game content that simultaneously aligns with player preferences and significantly boosts designer efficiency, we present Hunyuan-Game, an innovative project designed to revolutionize intelligent game production. Hunyuan-Game encompasses two primary branches: image generation and video generation. The image generation component is built upon a vast dataset comprising billions of game images, leading to the development of a group of customized image generation models tailored for game scenarios: (1) General Text-to-Image Generation. (2) Game Visual Effects Generation, involving text-to-effect and reference image-based game visual effect generation. (3) Transparent Image Generation for characters, scenes, and game visual effects. (4) Game Character Generation based on sketches, black-and-white images, and white models. The video generation component is built upon a comprehensive dataset of millions of game and anime videos, leading to the development of five core algorithmic models, each targeting critical pain points in game development and having robust adaptation to diverse game video scenarios: (1) Image-to-Video Generation. (2) 360 A/T Pose Avatar Video Synthesis. (3) Dynamic Illustration Generation. (4) Generative Video Super-Resolution. (5) Interactive Game Video Generation. These image and video generation models not only exhibit high-level aesthetic expression but also deeply integrate domain-specific knowledge, establishing a systematic understanding of diverse game and anime art styles.

Unsupervised Feature Selection via Multi-step Markov Transition Probability

May 29, 2020

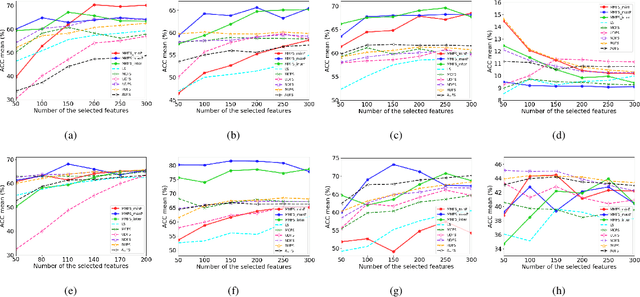

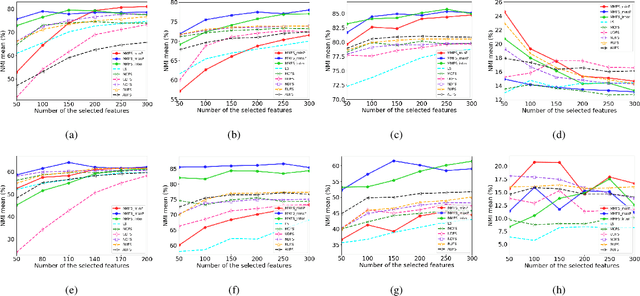

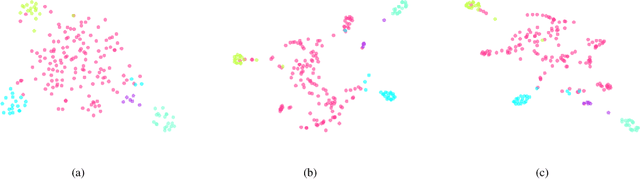

Abstract: Feature selection is a widely used dimension reduction technique that selects feature subsets and is valued for its interpretability. Many methods have been proposed and have achieved good results; they mainly concern the relationships between adjacent data points. However, the possible associations between data pairs that may not be adjacent are always neglected. Unlike previous methods, we propose a novel and very simple approach for unsupervised feature selection, named MMFS (Multi-step Markov transition probability for Feature Selection). The idea is to use multi-step Markov transition probability to describe the relation between any data pair. Two approaches, from the positive and negative viewpoints respectively, are employed to keep the data structure after feature selection. From the positive viewpoint, the maximum transition probability that can be reached in a certain number of steps is used to describe the relation between two points. Then, the features that can keep the compact data structure are selected. From the negative viewpoint, the minimum transition probability that can be reached in a certain number of steps is used to describe the relation between two points. In contrast, the features that least maintain the loose data structure are selected. The two approaches can also be combined. Thus, three algorithms are proposed. Our main contributions are a novel feature selection approach that uses multi-step transition probability to characterize the data structure, and three algorithms proposed from the positive and negative aspects for keeping the data structure. The performance of our approach is compared with state-of-the-art methods on eight real-world data sets, and the experimental results show that the proposed MMFS is effective in unsupervised feature selection.
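The relation matrices described in the abstract can be illustrated with a minimal sketch. The abstract does not say how the one-step transition probabilities are built or how features are scored, so everything here is an assumption: the Gaussian kernel, the `sigma` parameter, the zeroed diagonal, and the function names `one_step_transition` and `multi_step_relations` are hypothetical and are not taken from the paper. The sketch only computes, for every data pair, the maximum and minimum transition probability reached within a given number of steps; the feature selection step itself is not shown.

```python
import numpy as np


def one_step_transition(X, sigma=1.0):
    """Row-stochastic one-step transition matrix (assumed Gaussian-kernel construction)."""
    sq = np.sum(X ** 2, axis=1)
    # Pairwise squared Euclidean distances, clipped at 0 to absorb rounding error.
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * (X @ X.T), 0.0)
    W = np.exp(-d2 / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)  # assumption: no self-transitions
    return W / W.sum(axis=1, keepdims=True)


def multi_step_relations(P, steps):
    """Element-wise max and min of P^1, ..., P^steps for every data pair."""
    Pt = P.copy()
    max_rel = P.copy()  # "positive" viewpoint: largest probability within `steps`
    min_rel = P.copy()  # "negative" viewpoint: smallest probability within `steps`
    for _ in range(steps - 1):
        Pt = Pt @ P  # advance to the next power of P
        max_rel = np.maximum(max_rel, Pt)
        min_rel = np.minimum(min_rel, Pt)
    return max_rel, min_rel
```

Calling `multi_step_relations(one_step_transition(X), steps=3)` on an `n x d` data matrix `X` returns two `n x n` matrices. Under the assumptions above, a non-adjacent pair can receive a large entry in the first matrix if it is well connected through intermediate points, which is the kind of association the abstract says adjacency-based methods neglect.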
