Yuexing Han

Deep Learning-Driven Microstructure Characterization and Vickers Hardness Prediction of Mg-Gd Alloys

Oct 27, 2024

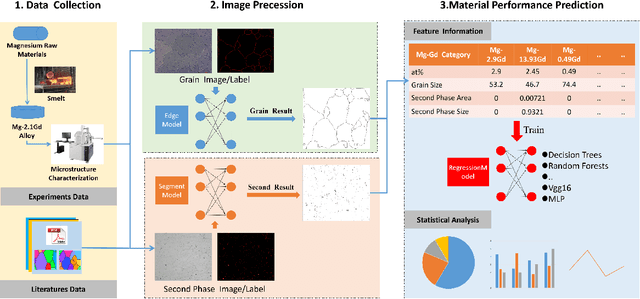

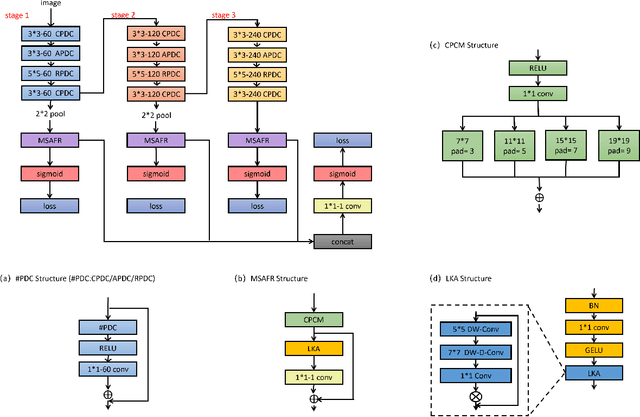

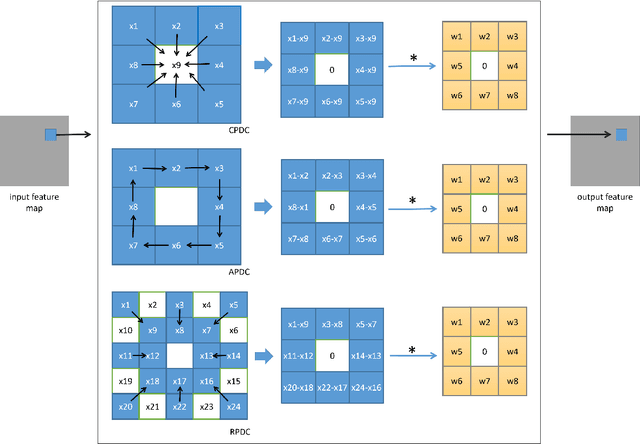

Abstract:In the field of materials science, exploring the relationship between composition, microstructure, and properties has long been a critical research focus. The mechanical performance of solid-solution Mg-Gd alloys is significantly influenced by Gd content, dendritic structures, and the presence of secondary phases. To better analyze and predict the impact of these factors, this study proposes a multimodal fusion learning framework based on image processing and deep learning techniques. This framework integrates both elemental composition and microstructural features to accurately predict the Vickers hardness of solid-solution Mg-Gd alloys. Initially, deep learning methods were employed to extract microstructural information from a variety of solid-solution Mg-Gd alloy images obtained from literature and experiments. This provided precise grain size and secondary phase microstructural features for performance prediction tasks. Subsequently, these quantitative analysis results were combined with Gd content information to construct a performance prediction dataset. Finally, a regression model based on the Transformer architecture was used to predict the Vickers hardness of Mg-Gd alloys. The experimental results indicate that the Transformer model performs best in terms of prediction accuracy, achieving an R^2 value of 0.9. Additionally, SHAP analysis identified critical values for four key features affecting the Vickers hardness of Mg-Gd alloys, providing valuable guidance for alloy design. These findings not only enhance the understanding of alloy performance but also offer theoretical support for future material design and optimization.
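The R^2 score quoted in the abstract above is the coefficient of determination. As a minimal sketch of how such a score is computed, the snippet below evaluates it on a handful of made-up hardness values; the function name `r_squared` and all numbers are illustrative assumptions, not data or code from the paper.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_y = sum(y_true) / len(y_true)
    # Residual sum of squares: error left after the model's predictions.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: error of always predicting the mean.
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all targets are identical")
    return 1.0 - ss_res / ss_tot


# Hypothetical Vickers hardness values (HV), for illustration only.
measured = [60.0, 70.0, 80.0, 90.0]
predicted = [62.0, 69.0, 81.0, 88.0]
score = r_squared(measured, predicted)
```

A score of 1 means the predictions match the measurements exactly, while a score of 0 means the model does no better than predicting the mean hardness.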

Integrated Image-Text Based on Semi-supervised Learning for Small Sample Instance Segmentation

Oct 21, 2024

Abstract:Small sample instance segmentation is a very challenging task, and many existing methods follow the meta-learning training strategy, which pre-trains models on a support set and fine-tunes them on a query set. The pre-training phase, which is highly task-related, requires a significant amount of additional training time and the selection of closely related datasets to ensure effectiveness. This article proposes a novel small sample instance segmentation solution from the perspective of maximizing the utilization of existing information without increasing annotation burden and training costs. The proposed method designs two modules to address the problems encountered in small sample instance segmentation. First, it helps the model fully utilize unlabeled data by learning to generate pseudo labels, increasing the number of available samples. Second, by integrating the features of text and image, more accurate classification results can be obtained. These two modules are suitable for both box-free and box-dependent frameworks. In this way, the proposed method not only improves the performance of small sample instance segmentation, but also greatly reduces reliance on pre-training. We have conducted experiments on three datasets from different scenes: on land, underwater, and under a microscope. As evidenced by our experiments, integrated image-text features correct the classification confidence, and pseudo labels help the model obtain more precise masks. All the results demonstrate the effectiveness and superiority of our method.

FAGStyle: Feature Augmentation on Geodesic Surface for Zero-shot Text-guided Diffusion Image Style Transfer

Aug 21, 2024

Abstract:The goal of image style transfer is to render an image guided by a style reference while maintaining the original content. Existing image-guided methods rely on specific style reference images, restricting their wider application and potentially compromising result quality. As a flexible alternative, text-guided methods allow users to describe the desired style using text prompts. Despite their versatility, these methods often struggle with maintaining style consistency, reflecting the described style accurately, and preserving the content of the target image. To address these challenges, we introduce FAGStyle, a zero-shot text-guided diffusion image style transfer method. Our approach enhances inter-patch information interaction by incorporating the Sliding Window Crop technique and Feature Augmentation on Geodesic Surface into our style control loss. Furthermore, we integrate a Pre-Shape self-correlation consistency loss to ensure content consistency. FAGStyle demonstrates superior performance over existing methods, consistently achieving stylization that retains the semantic content of the source image. Experimental results confirm the efficacy of FAGStyle across a diverse range of source contents and styles, both imagined and common.

Revealing the structure-property relationships of copper alloys with FAGC

Apr 19, 2024

Abstract:Understanding how the structure of materials affects their properties is a cornerstone of materials science and engineering. However, traditional methods have struggled to accurately describe the quantitative structure-property relationships for complex structures. In our study, we bridge this gap by leveraging machine learning to analyze images of materials' microstructures, thus offering a novel way to understand and predict the properties of materials based on their microstructures. We introduce a method known as FAGC (Feature Augmentation on Geodesic Curves), specifically demonstrated for Cu-Cr-Zr alloys. This approach utilizes machine learning to examine the shapes within images of the alloys' microstructures and predict their mechanical and electronic properties. This generative FAGC approach can effectively expand the relatively small training datasets due to the limited availability of materials images labeled with quantitative properties. The process begins with extracting features from the images using neural networks. These features are then mapped onto the Pre-shape space to construct the Geodesic curves. Along these curves, new features are generated, effectively increasing the dataset. Moreover, we design a pseudo-labeling mechanism for these newly generated features to further enhance the training dataset. Our FAGC method has shown remarkable results, significantly improving the accuracy of predicting the electronic conductivity and hardness of Cu-Cr-Zr alloys, with R-squared values of 0.978 and 0.998, respectively. These outcomes underscore the potential of FAGC to address the challenge of limited image data in materials science, providing a powerful tool for establishing detailed and quantitative relationships between complex microstructures and material properties.

Few-shot Image Generation via Information Transfer from the Built Geodesic Surface

Jan 03, 2024

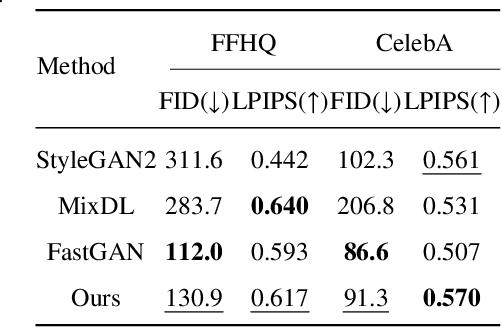

Abstract:Images generated by most generative models trained with limited data often exhibit deficiencies in fidelity, diversity, or both. One effective solution to address this limitation is few-shot generative model adaptation. However, this type of approach typically relies on a large-scale pre-trained model, serving as a source domain, to facilitate information transfer to the target domain. In this paper, we propose a method called Information Transfer from the Built Geodesic Surface (ITBGS), which contains two modules: Feature Augmentation on Geodesic Surface (FAGS) and Interpolation and Regularization (I&R). With the FAGS module, a pseudo-source domain is created by projecting image features from the training dataset into the Pre-Shape Space and subsequently generating new features on the Geodesic surface. Thus, no pre-trained model is needed for the adaptation process during the training of generative models with FAGS. The I&R module is introduced to supervise the interpolated images and regularize their relative distances, respectively, to further enhance the quality of generated images. Through qualitative and quantitative experiments, we demonstrate that the proposed method consistently achieves optimal or comparable results across a diverse range of semantically distinct datasets, even in extremely few-shot scenarios.

FAGC:Feature Augmentation on Geodesic Curve in the Pre-Shape Space

Dec 25, 2023

Abstract:Deep learning has yielded remarkable outcomes in various domains. However, the need for large-scale labeled samples remains a challenge in deep learning. Data augmentation has therefore been introduced as a critical strategy for training deep learning models, but it suffers from information loss and poor performance in small sample environments. To overcome these drawbacks, we propose a feature augmentation method based on shape space theory, i.e., feature augmentation on a Geodesic curve, called FAGC for short. First, we extract features from the images with a neural network model. Then, the image features are projected into a pre-shape space. In the pre-shape space, a Geodesic curve is built to fit the features. Finally, the many features generated on the Geodesic curve are used to train various machine learning models. The FAGC module can be seamlessly integrated with most machine learning methods. The proposed method is simple, effective, and insensitive to small sample sizes. Several examples demonstrate that the FAGC method can greatly improve the performance of the data preprocessing model in a small sample environment.
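The projection and geodesic steps described in this abstract can be illustrated with a small sketch. It assumes the standard shape-theory construction in which a pre-shape is obtained by centering a vector and scaling it to unit norm, so that pre-shapes lie on a unit hypersphere and geodesics are great-circle arcs. For simplicity it interpolates between only two feature vectors, whereas the abstract describes fitting a curve to multiple features; the function names and the example vectors are hypothetical and not taken from the paper.

```python
import math


def to_pre_shape(v):
    """Center a feature vector and scale it to unit norm (assumed projection)."""
    mean = sum(v) / len(v)
    centered = [x - mean for x in v]
    norm = math.sqrt(sum(x * x for x in centered))
    if norm == 0:
        raise ValueError("a constant vector has no pre-shape")
    return [x / norm for x in centered]


def geodesic_point(p, q, t):
    """Point at fraction t along the great-circle arc from p to q (unit vectors)."""
    dot = max(-1.0, min(1.0, sum(a * b for a, b in zip(p, q))))
    theta = math.acos(dot)  # angle between the two pre-shapes
    if theta < 1e-12:
        return list(p)  # endpoints coincide, nothing to interpolate
    if math.pi - theta < 1e-12:
        raise ValueError("antipodal points: the geodesic is not unique")
    s = math.sin(theta)
    w_p = math.sin((1.0 - t) * theta) / s
    w_q = math.sin(t * theta) / s
    return [w_p * a + w_q * b for a, b in zip(p, q)]


# Two hypothetical feature vectors standing in for network outputs.
p = to_pre_shape([1.0, 2.0, 4.0, 3.0])
q = to_pre_shape([2.0, 1.0, 3.0, 6.0])

# New features sampled along the geodesic between the two originals.
augmented = [geodesic_point(p, q, t) for t in (0.25, 0.5, 0.75)]
```

Because every generated point is a combination of centered unit vectors taken along the arc, it stays centered and on the unit hypersphere, which is why the new features remain valid pre-shapes.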

Backbone-based Dynamic Graph Spatio-Temporal Network for Epidemic Forecasting

Dec 01, 2023

Abstract:Accurate epidemic forecasting is a critical task in controlling disease transmission. Many deep learning-based models focus only on static or dynamic graphs when constructing spatial information, ignoring their relationship. Additionally, these models often rely on recurrent structures, which can lead to error accumulation and long computation times. To address the aforementioned problems, we propose a novel model called Backbone-based Dynamic Graph Spatio-Temporal Network (BDGSTN). Intuitively, the continuous and smooth changes in graph structure make adjacent graph structures share a basic pattern. To capture this property, we use adaptive methods to generate static backbone graphs containing the primary information and temporal models to generate dynamic temporal graphs of epidemic data, fusing them to generate a backbone-based dynamic graph. To overcome potential limitations associated with recurrent structures, we introduce the linear model DLinear to handle temporal dependencies and combine it with dynamic graph convolution for epidemic forecasting. Extensive experiments on two datasets demonstrate that BDGSTN outperforms baseline models, and ablation comparisons further verify the effectiveness of the model components. Furthermore, we analyze and measure the significance of backbone and temporal graphs by using information metrics from different aspects. Finally, we compare model parameter volume and training time to confirm the advantages of BDGSTN in complexity and efficiency.
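For readers unfamiliar with DLinear, the sketch below shows its general idea as commonly described: the input window is split into a moving-average trend and a remainder, each part is passed through its own linear map, and the two outputs are summed. This is a simplified illustration and not the BDGSTN implementation; the kernel size, the weight matrices, and the function names are assumptions, and bias terms are omitted.

```python
def moving_average(series, kernel):
    """Moving average with edge-value padding, so output length equals input length."""
    if kernel < 1 or kernel % 2 == 0:
        raise ValueError("kernel must be a positive odd integer")
    pad = (kernel - 1) // 2
    padded = [series[0]] * pad + list(series) + [series[-1]] * pad
    return [sum(padded[i:i + kernel]) / kernel for i in range(len(series))]


def decompose(series, kernel=3):
    """Split a window into a smooth trend and the remainder around it."""
    trend = moving_average(series, kernel)
    remainder = [x - t for x, t in zip(series, trend)]
    return trend, remainder


def linear(weights, x):
    """Apply a weight matrix (one row per forecast step) to a window, without bias."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def dlinear_forecast(series, w_trend, w_remainder, kernel=3):
    """Sum of two linear maps, one on the trend and one on the remainder."""
    trend, remainder = decompose(series, kernel)
    return [a + b for a, b in zip(linear(w_trend, trend),
                                  linear(w_remainder, remainder))]


# Hypothetical case counts and hand-picked (untrained) weights.
window = [1.0, 2.0, 3.0, 4.0, 5.0]
trend, remainder = decompose(window, kernel=3)
w_last = [[0.0, 0.0, 0.0, 0.0, 1.0]]  # one-step forecast that copies the last value
forecast = dlinear_forecast(window, w_last, w_last, kernel=3)
```

Since the whole horizon is produced in one pass rather than step by step, a model of this kind avoids feeding its own predictions back as inputs, which is the error-accumulation issue the abstract attributes to recurrent structures.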

MPSTAN: Metapopulation-based Spatio-Temporal Attention Network for Epidemic Forecasting

Jun 15, 2023

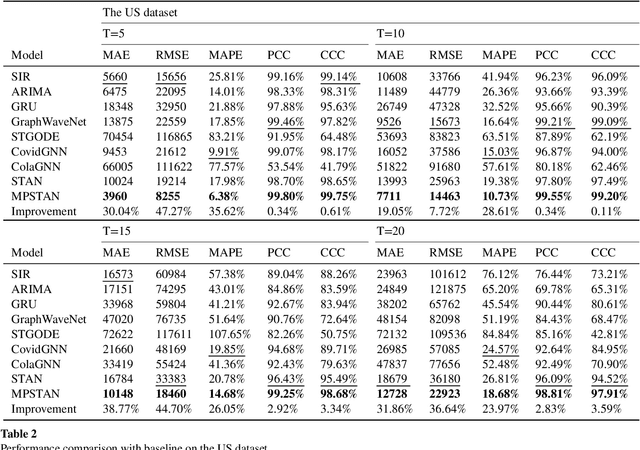

Abstract:Accurate epidemic forecasting plays a vital role for governments in developing effective prevention measures for suppressing epidemics. Most of the present spatio-temporal models cannot provide a general framework for stable and accurate forecasting of epidemics with diverse evolution trends. Incorporating epidemiological domain knowledge ranging from single-patch to multi-patch into neural networks is expected to improve forecasting accuracy. However, relying solely on single-patch knowledge neglects inter-patch interactions, while constructing multi-patch knowledge is challenging without population mobility data. To address the aforementioned problems, we propose a novel hybrid model called Metapopulation-based Spatio-Temporal Attention Network (MPSTAN). This model aims to improve the accuracy of epidemic forecasting by incorporating multi-patch epidemiological knowledge into a spatio-temporal model and adaptively defining inter-patch interactions. Moreover, we incorporate inter-patch epidemiological knowledge into both the model construction and loss function to help the model learn epidemic transmission dynamics. Extensive experiments conducted on two representative datasets with different epidemiological evolution trends demonstrate that our proposed model outperforms the baselines and provides more accurate and stable short- and long-term forecasting. We confirm the effectiveness of domain knowledge in the learning model and investigate the impact of different ways of integrating domain knowledge on forecasting. We observe that using domain knowledge in both model construction and loss functions leads to more efficient forecasting, and selecting appropriate domain knowledge can improve accuracy further.
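To make the single-patch versus multi-patch distinction concrete, here is a minimal sketch of one discrete time step of a generic metapopulation SIR model. It is a textbook-style illustration and not the MPSTAN formulation: the fixed interaction matrix `A`, the rates `beta` and `gamma`, and the patch populations are all hypothetical, whereas the abstract describes defining inter-patch interactions adaptively.

```python
def metapop_sir_step(S, I, R, A, beta, gamma, dt=1.0):
    """Advance a multi-patch SIR model by one Euler step.

    A[i][j] weights how strongly infections in patch j act on patch i.
    All patch populations are assumed to be positive.
    """
    n = len(S)
    N = [S[i] + I[i] + R[i] for i in range(n)]
    S_next, I_next, R_next = [], [], []
    for i in range(n):
        # Force of infection on patch i, including contributions from other patches.
        force = beta * sum(A[i][j] * I[j] / N[j] for j in range(n))
        new_infections = min(S[i], force * S[i] * dt)
        new_recoveries = min(I[i], gamma * I[i] * dt)
        S_next.append(S[i] - new_infections)
        I_next.append(I[i] + new_infections - new_recoveries)
        R_next.append(R[i] + new_recoveries)
    return S_next, I_next, R_next


# Two hypothetical patches; only the first starts with infections.
S0, I0, R0 = [990.0, 1000.0], [10.0, 0.0], [0.0, 0.0]
A = [[0.9, 0.1],
     [0.1, 0.9]]
S1, I1, R1 = metapop_sir_step(S0, I0, R0, A, beta=0.3, gamma=0.1)
```

With an identity matrix for `A` the patches evolve independently, which corresponds to the single-patch case; the off-diagonal entries are what let an outbreak in one patch seed infections in another.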
