Yuejiao Su

ANNEXE: Unified Analyzing, Answering, and Pixel Grounding for Egocentric Interaction

Apr 02, 2025

Abstract: Egocentric interaction perception is one of the essential branches in investigating human-environment interaction, which lays the basis for developing next-generation intelligent systems. However, existing egocentric interaction understanding methods cannot yield coherent textual and pixel-level responses simultaneously according to user queries, which lacks flexibility for varying downstream application requirements. To comprehend egocentric interactions exhaustively, this paper presents a novel task named Egocentric Interaction Reasoning and pixel Grounding (Ego-IRG). Taking an egocentric image with the query as input, Ego-IRG is the first task that aims to resolve the interactions through three crucial steps: analyzing, answering, and pixel grounding, which results in fluent textual and fine-grained pixel-level responses. Another challenge is that existing datasets cannot meet the conditions for the Ego-IRG task. To address this limitation, this paper creates the Ego-IRGBench dataset based on extensive manual efforts, which includes over 20k egocentric images with 1.6 million queries and corresponding multimodal responses about interactions. Moreover, we design a unified ANNEXE model to generate text- and pixel-level outputs utilizing multimodal large language models, which enables a comprehensive interpretation of egocentric interactions. The experiments on the Ego-IRGBench exhibit the effectiveness of our ANNEXE model compared with other works.

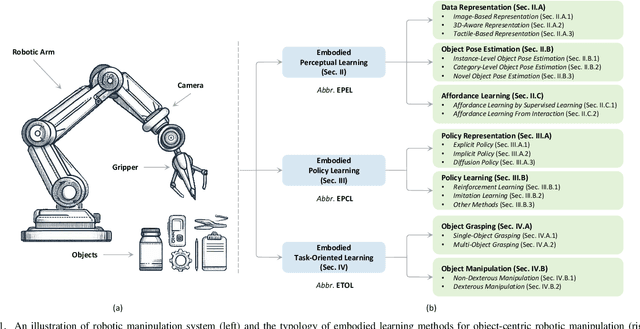

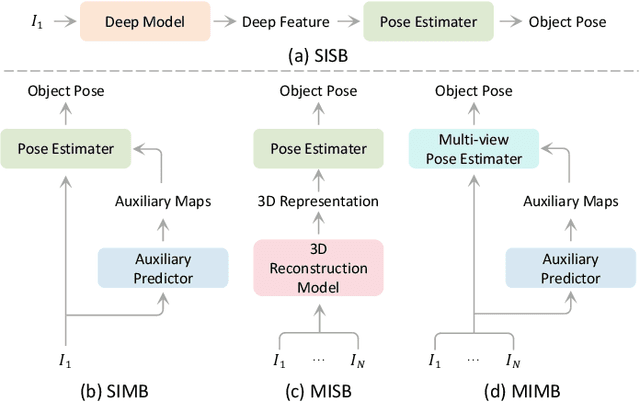

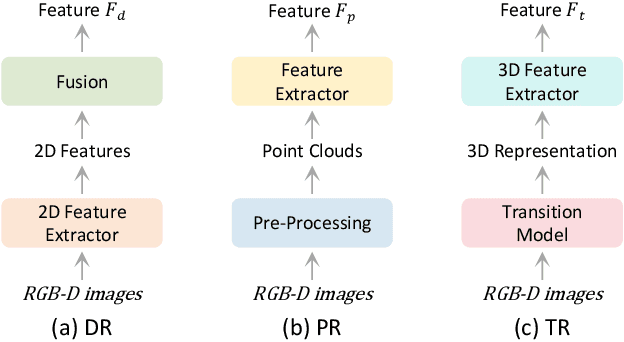

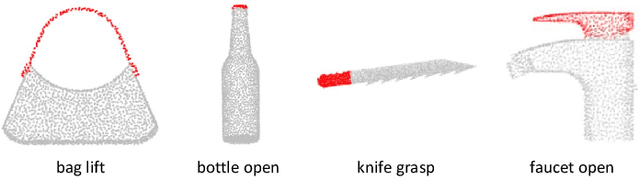

A Survey of Embodied Learning for Object-Centric Robotic Manipulation

Aug 21, 2024

Abstract: Embodied learning for object-centric robotic manipulation is a rapidly developing and challenging area in embodied AI. It is crucial for advancing next-generation intelligent robots and has garnered significant interest recently. Unlike data-driven machine learning methods, embodied learning focuses on robot learning through physical interaction with the environment and perceptual feedback, making it especially suitable for robotic manipulation. In this paper, we provide a comprehensive survey of the latest advancements in this field and categorize the existing work into three main branches: 1) Embodied perceptual learning, which aims to predict object pose and affordance through various data representations; 2) Embodied policy learning, which focuses on generating optimal robotic decisions using methods such as reinforcement learning and imitation learning; 3) Embodied task-oriented learning, designed to optimize the robot's performance based on the characteristics of different tasks in object grasping and manipulation. In addition, we offer an overview and discussion of public datasets, evaluation metrics, representative applications, current challenges, and potential future research directions. A project associated with this survey has been established at https://github.com/RayYoh/OCRM_survey.

ORMNet: Object-centric Relationship Modeling for Egocentric Hand-object Segmentation

Jul 08, 2024

Abstract: Egocentric hand-object segmentation (EgoHOS) is a brand-new task aiming at segmenting the hands and interacting objects in the egocentric image. Although significant advancements have been achieved by current methods, establishing an end-to-end model with high accuracy remains an unresolved challenge. Moreover, existing methods lack explicit modeling of the relationships between hands and objects as well as objects and objects, thereby disregarding critical information on hand-object interaction and introducing confusion into algorithms, ultimately leading to a reduction in segmentation performance. To address the limitations of existing methods, this paper proposes a novel end-to-end Object-centric Relationship Modeling Network (ORMNet) for EgoHOS. Specifically, based on a single-encoder and multi-decoder framework, we design the Hand-Object Relation (HOR) module to leverage hand-guided attention to capture the correlation between hands and objects and facilitate their representations. Moreover, based on the observed interrelationships between diverse categories of objects, we introduce the Object Relation Decoupling (ORD) strategy. This strategy allows the decoupling of the two-hand object during training, thereby alleviating the ambiguity of the network. Experimental results on three datasets show that the proposed ORMNet has notably exceptional segmentation performance with robust generalization capabilities.

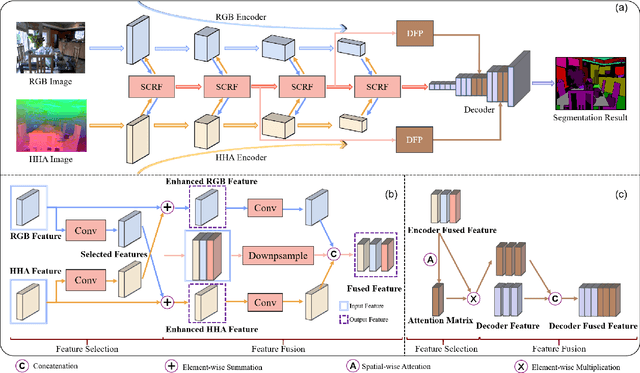

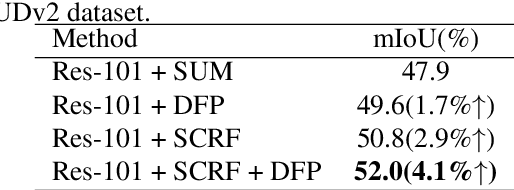

Deep feature selection-and-fusion for RGB-D semantic segmentation

May 10, 2021

Abstract: Scene depth information can help visual information for more accurate semantic segmentation. However, how to effectively integrate multi-modality information into representative features is still an open problem. Most of the existing work uses DCNNs to implicitly fuse multi-modality information. But as the network deepens, some critical distinguishing features may be lost, which reduces the segmentation performance. This work proposes a unified and efficient feature selection-and-fusion network (FSFNet), which contains a symmetric cross-modality residual fusion module used for explicit fusion of multi-modality information. Besides, the network includes a detailed feature propagation module, which is used to maintain low-level detailed information during the forward process of the network. Compared with the state-of-the-art methods, experimental evaluations demonstrate that the proposed model achieves competitive performance on two public datasets.
