Yuefan Deng

Kolmogorov-Arnold Representation for Symplectic Learning: Advancing Hamiltonian Neural Networks

Aug 26, 2025

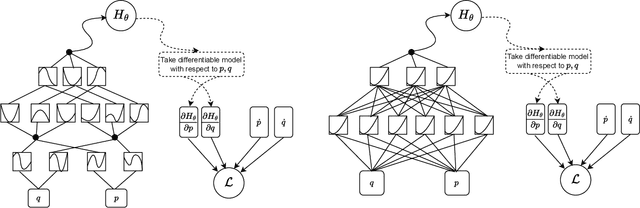

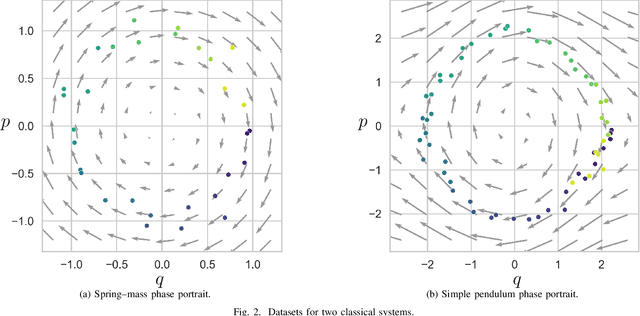

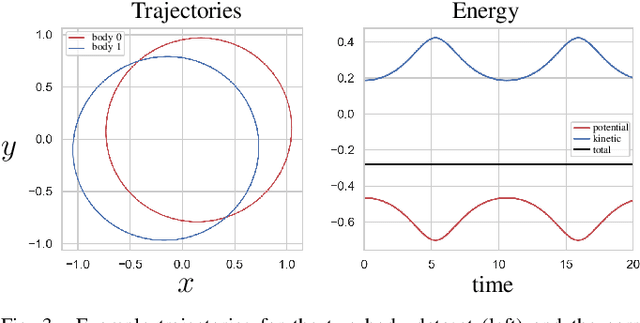

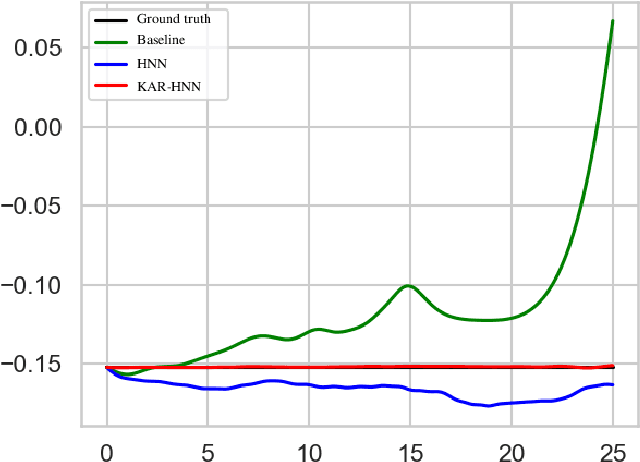

Abstract: We propose a Kolmogorov-Arnold Representation-based Hamiltonian Neural Network (KAR-HNN) that replaces the Multilayer Perceptrons (MLPs) with univariate transformations. While Hamiltonian Neural Networks (HNNs) ensure energy conservation by learning Hamiltonian functions directly from data, existing implementations, which often rely on MLPs, are hypersensitive to hyperparameters when exploring complex energy landscapes. Our approach exploits localized function approximations to better capture high-frequency and multi-scale dynamics, reducing energy drift and improving long-term predictive stability. The networks preserve the symplectic form of Hamiltonian systems and thus maintain interpretability and physical consistency. After assessing KAR-HNN on four benchmark problems (spring-mass, simple pendulum, and the two- and three-body problems), we foresee its effectiveness for accurate and stable modeling of realistic physical processes, often at high dimensions and with few known parameters.
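As a rough illustration of why a Hamiltonian formulation helps with energy drift, the sketch below integrates the spring-mass benchmark with a symplectic leapfrog scheme. It is not the KAR-HNN itself: the analytic `hamiltonian` function is a hypothetical stand-in for a learned H(q, p), the gradients come from finite differences rather than automatic differentiation, and the integrator assumes a separable Hamiltonian. All function names and parameter values are illustrative assumptions.

```python
import math

def hamiltonian(q, p, m=1.0, k=1.0):
    """Spring-mass energy; a hypothetical stand-in for a learned H(q, p)."""
    return p * p / (2.0 * m) + 0.5 * k * q * q

def grad_h(h, q, p, eps=1e-6):
    """Central-difference estimates of dH/dq and dH/dp."""
    dh_dq = (h(q + eps, p) - h(q - eps, p)) / (2.0 * eps)
    dh_dp = (h(q, p + eps) - h(q, p - eps)) / (2.0 * eps)
    return dh_dq, dh_dp

def leapfrog_step(h, q, p, dt):
    """One kick-drift-kick step of dq/dt = dH/dp, dp/dt = -dH/dq.

    Assumes H is separable, H = T(p) + V(q), as in the spring-mass case.
    """
    dh_dq, _ = grad_h(h, q, p)
    p_half = p - 0.5 * dt * dh_dq          # half kick
    _, dh_dp = grad_h(h, q, p_half)
    q_new = q + dt * dh_dp                 # full drift
    dh_dq_new, _ = grad_h(h, q_new, p_half)
    p_new = p_half - 0.5 * dt * dh_dq_new  # half kick
    return q_new, p_new

def rollout(h, q0, p0, dt, steps):
    """Integrate from (q0, p0) and record the energy after every step."""
    q, p = q0, p0
    energies = [h(q, p)]
    for _ in range(steps):
        q, p = leapfrog_step(h, q, p, dt)
        energies.append(h(q, p))
    return q, p, energies

q_end, p_end, energies = rollout(hamiltonian, 1.0, 0.0, 0.01, 10000)
max_drift = max(abs(e - energies[0]) for e in energies)
print(f"max energy drift over rollout: {max_drift:.2e}")
```

In an HNN-style setup, `hamiltonian` would be replaced by the trained network and `grad_h` by automatic differentiation; the energy trace recorded here is the kind of quantity one would monitor to compare long-term stability.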

An Iterative Framework for Generative Backmapping of Coarse Grained Proteins

May 23, 2025

Abstract: Data-driven techniques for backmapping from coarse-grained (CG) to fine-grained (FG) representations often struggle with accuracy, unstable training, and physical realism, especially when applied to complex systems such as proteins. In this work, we introduce a novel iterative framework using conditional Variational Autoencoders and graph-based neural networks, specifically designed to tackle the challenges associated with such large-scale biomolecules. Our method enables stepwise refinement from CG beads to full atomistic details. We outline the theory of iterative generative backmapping and demonstrate via numerical experiments the advantages of multistep schemes by applying them to proteins of vastly different structures with very coarse representations. This multistep approach not only improves the accuracy of reconstructions but also makes the training process more computationally efficient for proteins with ultra-CG representations.

Exploring Robust Features for Improving Adversarial Robustness

Sep 09, 2023

Abstract: While deep neural networks (DNNs) have revolutionized many fields, their fragility to carefully designed adversarial attacks impedes the usage of DNNs in safety-critical applications. In this paper, we strive to explore the robust features which are not affected by the adversarial perturbations, i.e., invariant to the clean image and its adversarial examples, to improve the model's adversarial robustness. Specifically, we propose a feature disentanglement model to segregate the robust features from non-robust features and domain specific features. The extensive experiments on four widely used datasets with different attacks demonstrate that robust features obtained from our model improve the model's adversarial robustness compared to the state-of-the-art approaches. Moreover, the trained domain discriminator is able to identify the domain specific features from the clean images and adversarial examples almost perfectly. This enables adversarial example detection without incurring additional computational costs. With that, we can also specify different classifiers for clean images and adversarial examples, thereby avoiding any drop in clean image accuracy.

AI-aided multiscale modeling of physiologically-significant blood clots

May 25, 2022

Abstract: We have developed an AI-aided multiple time stepping (AI-MTS) algorithm and multiscale modeling framework (AI-MSM) and implemented them on the Summit-like supercomputer, AIMOS. AI-MSM is the first of its kind to integrate multi-physics, including intra-platelet, inter-platelet, and fluid-platelet interactions, into one system. It has simulated a record-setting multiscale blood clotting model of 102 million particles, including 70 flowing and 180 aggregating platelets, under dissipative particle dynamics to coarse-grained molecular dynamics. By adaptively adjusting timestep sizes to match the characteristic time scales of the underlying dynamics, AI-MTS optimally balances the speed and accuracy of the simulations.

AGKD-BML: Defense Against Adversarial Attack by Attention Guided Knowledge Distillation and Bi-directional Metric Learning

Aug 13, 2021

Abstract: While deep neural networks have shown impressive performance in many tasks, they are fragile to carefully designed adversarial attacks. We propose a novel adversarial training-based model by Attention Guided Knowledge Distillation and Bi-directional Metric Learning (AGKD-BML). The attention knowledge is obtained from a weight-fixed model trained on a clean dataset, referred to as a teacher model, and transferred to a model that is under training on adversarial examples (AEs), referred to as a student model. In this way, the student model is able to focus on the correct region and to correct the intermediate features corrupted by AEs, eventually improving the model accuracy. Moreover, to efficiently regularize the representation in feature space, we propose a bidirectional metric learning scheme. Specifically, given a clean image, it is first attacked to its most confusing class to get the forward AE. A clean image in the most confusing class is then randomly picked and attacked back to the original class to get the backward AE. A triplet loss is then used to shorten the representation distance between the original image and its AE, while enlarging that between the forward and backward AEs. We conduct extensive adversarial robustness experiments on two widely used datasets with different attacks. Our proposed AGKD-BML model consistently outperforms the state-of-the-art approaches. The code of AGKD-BML will be available at: https://github.com/hongw579/AGKD-BML.
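The triplet objective described in this abstract can be sketched on toy feature vectors as follows. This is a minimal illustration, not the released AGKD-BML code: the choice of the forward AE as anchor, the clean image as positive, and the backward AE as negative is an assumption read off the abstract's wording, and the function names, margin, and 2-D feature values are hypothetical.

```python
import math

def l2_distance(u, v):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge-style triplet loss: pull anchor toward positive, push it from negative."""
    gap = l2_distance(anchor, positive) - l2_distance(anchor, negative)
    return max(0.0, gap + margin)

# Toy 2-D features (hypothetical values, for illustration only).
clean_feat = [1.0, 0.0]        # clean image of the original class
forward_ae_feat = [0.9, 0.1]   # clean image attacked toward its most confusing class
backward_ae_feat = [0.0, 1.0]  # confusing-class image attacked back to the original class

# Assumed roles: anchor = forward AE, positive = clean image, negative = backward AE.
satisfied = triplet_loss(forward_ae_feat, clean_feat, backward_ae_feat)

# Swapping positive and negative violates the margin and yields a positive loss.
violated = triplet_loss(forward_ae_feat, backward_ae_feat, clean_feat)
```

In a real training loop the feature vectors would come from the student network's intermediate representation, and the loss would be averaged over a batch and combined with the other training terms.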
