Yue Peng

STEP3-VL-10B Technical Report

Jan 15, 2026

Abstract: We present STEP3-VL-10B, a lightweight open-source foundation model designed to redefine the trade-off between compact efficiency and frontier-level multimodal intelligence. STEP3-VL-10B is realized through two strategic shifts: first, a unified, fully unfrozen pre-training strategy on 1.2T multimodal tokens that integrates a language-aligned Perception Encoder with a Qwen3-8B decoder to establish intrinsic vision-language synergy; and second, a scaled post-training pipeline featuring over 1k iterations of reinforcement learning. Crucially, we implement Parallel Coordinated Reasoning (PaCoRe) to scale test-time compute, allocating resources to scalable perceptual reasoning that explores and synthesizes diverse visual hypotheses. Consequently, despite its compact 10B footprint, STEP3-VL-10B rivals or surpasses models 10$\times$-20$\times$ larger (e.g., GLM-4.6V-106B, Qwen3-VL-235B) and top-tier proprietary flagships like Gemini 2.5 Pro and Seed-1.5-VL. Delivering best-in-class performance, it records 92.2% on MMBench and 80.11% on MMMU, while excelling in complex reasoning with 94.43% on AIME2025 and 75.95% on MathVision. We release the full model suite to provide the community with a powerful, efficient, and reproducible baseline.

PaCoRe: Learning to Scale Test-Time Compute with Parallel Coordinated Reasoning

Jan 09, 2026

Abstract: We introduce Parallel Coordinated Reasoning (PaCoRe), a training-and-inference framework designed to overcome a central limitation of contemporary language models: their inability to scale test-time compute (TTC) far beyond sequential reasoning under a fixed context window. PaCoRe departs from the traditional sequential paradigm by driving TTC through massive parallel exploration coordinated via a message-passing architecture in multiple rounds. Each round launches many parallel reasoning trajectories, compacts their findings into context-bounded messages, and synthesizes these messages to guide the next round and ultimately produce the final answer. Trained end-to-end with large-scale, outcome-based reinforcement learning, the model masters the synthesis abilities required by PaCoRe and scales to multi-million-token effective TTC without exceeding context limits. The approach yields strong improvements across diverse domains, and notably pushes reasoning beyond frontier systems in mathematics: an 8B model reaches 94.5% on HMMT 2025, surpassing GPT-5's 93.2% by scaling effective TTC to roughly two million tokens. We open-source model checkpoints, training data, and the full inference pipeline to accelerate follow-up work.
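The round structure described in this abstract (parallel trajectories, compaction into bounded messages, synthesis for the next round) can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function `coordinated_rounds`, the `generate` and `compact` callables, and the toy stand-ins are all hypothetical names introduced here, and the reinforcement-learning training that the abstract credits for the synthesis ability is not modeled at all.

```python
from collections import Counter
from typing import Callable, List

# Hypothetical interfaces (assumptions, not from the paper):
#   generate(problem, messages, seed) -> one reasoning trajectory as text
#   compact(trajectory, budget)       -> a message of at most `budget` characters
Generate = Callable[[str, List[str], int], str]
Compact = Callable[[str, int], str]


def coordinated_rounds(problem: str, generate: Generate, compact: Compact,
                       rounds: int = 2, width: int = 4, budget: int = 64) -> str:
    """Run `rounds` rounds of `width` trajectories, passing only compacted messages on."""
    messages: List[str] = []
    for r in range(rounds):
        # Each trajectory sees the problem plus the previous round's messages.
        # A real system would run these calls in parallel; a plain loop keeps the sketch simple.
        trajectories = [generate(problem, messages, r * width + i) for i in range(width)]
        # Compaction bounds what is carried forward, so the context seen by the
        # next round does not grow with the total number of tokens generated.
        messages = [compact(t, budget) for t in trajectories]
    # Final synthesis: one more call conditioned on the last round's messages.
    return generate(problem, messages, -1)


def toy_generate(problem: str, messages: List[str], seed: int) -> str:
    """Toy stand-in for a model: guess when there are no messages, otherwise majority-vote."""
    if messages:
        return Counter(messages).most_common(1)[0][0]
    return "41" if seed % 4 == 0 else "42"


def toy_compact(trajectory: str, budget: int) -> str:
    """Toy stand-in for compaction: truncate to the message budget."""
    return trajectory[:budget]


if __name__ == "__main__":
    print(coordinated_rounds("toy problem", toy_generate, toy_compact))
```

The point of the sketch is the data flow: full trajectories are discarded after each round and only the compacted messages are carried forward, which is how total generated text can exceed what fits in a single context.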

StepFun-Prover Preview: Let's Think and Verify Step by Step

Jul 27, 2025

Abstract: We present StepFun-Prover Preview, a large language model designed for formal theorem proving through tool-integrated reasoning. Using a reinforcement learning pipeline that incorporates tool-based interactions, StepFun-Prover can achieve strong performance in generating Lean 4 proofs with minimal sampling. Our approach enables the model to emulate human-like problem-solving strategies by iteratively refining proofs based on real-time environment feedback. On the miniF2F-test benchmark, StepFun-Prover achieves a pass@1 success rate of $70.0\%$. Beyond advancing benchmark performance, we introduce an end-to-end training framework for developing tool-integrated reasoning models, offering a promising direction for automated theorem proving and Math AI assistants.

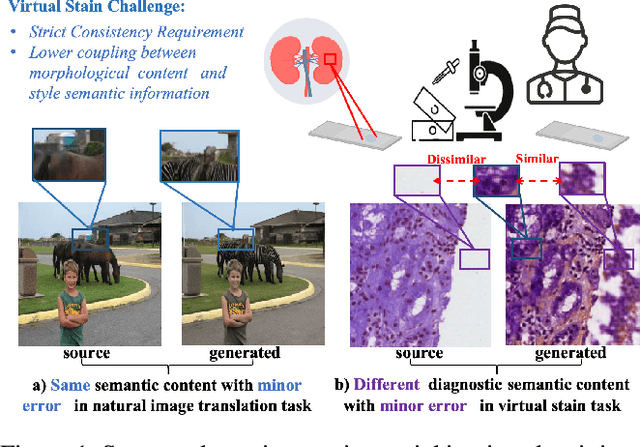

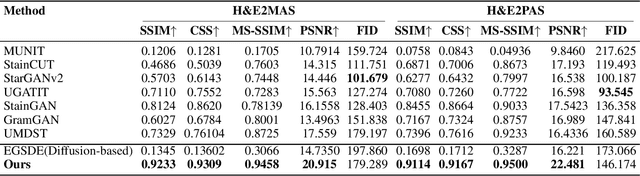

Unpaired Multi-Domain Histopathology Virtual Staining using Dual Path Prompted Inversion

Dec 15, 2024

Abstract: Virtual staining leverages computer-aided techniques to transfer the style of histochemically stained tissue samples to other staining types. In virtual staining of pathological images, maintaining strict structural consistency is crucial, as these images emphasize structural integrity more than natural images. Even slight structural alterations can lead to deviations in diagnostic semantic information. Furthermore, the unpaired characteristic of virtual staining data may compromise the preservation of pathological diagnostic content. To address these challenges, we propose a dual-path inversion virtual staining method using prompt learning, which optimizes visual prompts to control content and style, while preserving complete pathological diagnostic content. Our proposed inversion technique comprises two key components: (1) a Dual Path Prompted Strategy, in which we use a feature adapter function to generate reference images for inversion, providing style templates for input image inversion; we call this the Style Target Path. We use the inversion of the input image as the Structural Target Path, employing visual prompt images to maintain structural consistency in this path while preserving style information from the Style Target Path. During the deterministic sampling process, we achieve complete content-style disentanglement through a plug-and-play embedding visual prompt approach. (2) StainPrompt Optimization, where we optimize only the null visual prompt as the "operator" for dual-path inversion, rather than fine-tuning the pre-trained model. We optimize the null visual prompt for the structural and style trajectories around the pivotal noise at each timestep, ensuring accurate dual-path inversion reconstruction. Extensive evaluations on publicly available multi-domain unpaired staining datasets demonstrate high structural consistency and accurate style transfer results.

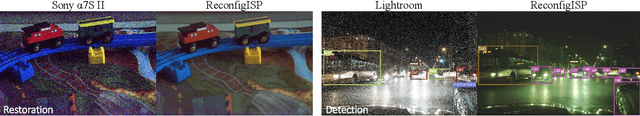

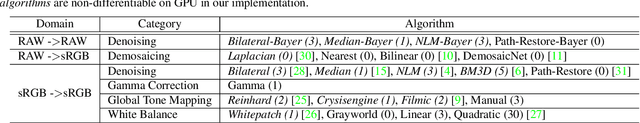

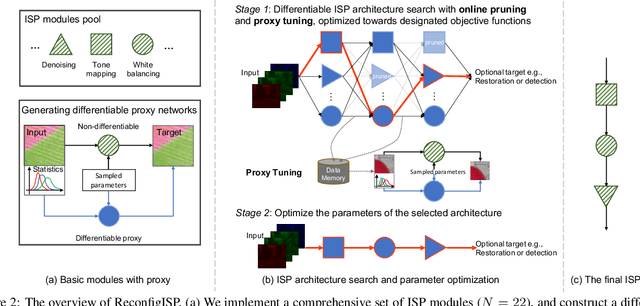

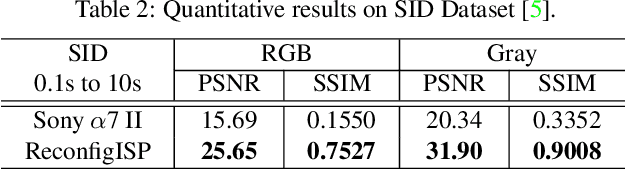

ReconfigISP: Reconfigurable Camera Image Processing Pipeline

Sep 10, 2021

Abstract: The Image Signal Processor (ISP) is a crucial component in digital cameras that transforms sensor signals into images for us to perceive and understand. Existing ISP designs always adopt a fixed architecture, e.g., several sequential modules connected in a rigid order. Such a fixed ISP architecture may be suboptimal for real-world applications, where camera sensors, scenes and tasks are diverse. In this study, we propose a novel Reconfigurable ISP (ReconfigISP) whose architecture and parameters can be automatically tailored to specific data and tasks. In particular, we implement several ISP modules, and enable backpropagation for each module by training a differentiable proxy, hence allowing us to leverage the popular differentiable neural architecture search and effectively search for the optimal ISP architecture. A proxy tuning mechanism is adopted to maintain the accuracy of proxy networks in all cases. Extensive experiments conducted on image restoration and object detection, with different sensors, light conditions and efficiency constraints, validate the effectiveness of ReconfigISP. Only hundreds of parameters need tuning for every task.

Fast K-Means Clustering with Anderson Acceleration

May 27, 2018

Abstract: We propose a novel method to accelerate Lloyd's algorithm for K-Means clustering. Unlike previous acceleration approaches that reduce computational cost per iteration or improve initialization, our approach is focused on reducing the number of iterations required for convergence. This is achieved by treating the assignment step and the update step of Lloyd's algorithm as a fixed-point iteration, and applying Anderson acceleration, a well-established technique for accelerating fixed-point solvers. Classical Anderson acceleration utilizes m previous iterates to find an accelerated iterate, and its performance on K-Means clustering can be sensitive to the choice of m and the distribution of samples. We propose a new strategy to dynamically adjust the value of m, which achieves robust and consistent speedups across different problem instances. Our method complements existing acceleration techniques, and can be combined with them to achieve state-of-the-art performance. We perform extensive experiments to evaluate the performance of the proposed method, where it outperforms other algorithms in 106 out of 120 test cases, and the mean decrease ratio of computational time is more than 33%.
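To make the fixed-point view concrete, the following NumPy sketch treats one Lloyd iteration as a map g on the centroids and applies classical Anderson acceleration with a fixed window m. It is an illustration under stated assumptions, not the paper's method: the dynamic adjustment of m described in the abstract is not implemented, and the energy-based fallback to the plain Lloyd step is a simple safeguard chosen here. The names `lloyd_step`, `energy`, and `anderson_kmeans` are hypothetical.

```python
import numpy as np


def lloyd_step(X, C):
    """Fixed-point map g: assign each point to its nearest centroid, then recompute means."""
    d = ((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=2)
    labels = d.argmin(axis=1)
    new_C = C.copy()
    for k in range(C.shape[0]):
        mask = labels == k
        if mask.any():  # an empty cluster keeps its previous centroid
            new_C[k] = X[mask].mean(axis=0)
    return new_C


def energy(X, C):
    """K-Means objective: sum of squared distances to the nearest centroid."""
    d = ((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=2)
    return d.min(axis=1).sum()


def anderson_kmeans(X, C0, m=5, max_iter=100, tol=1e-8):
    """Lloyd's algorithm with fixed-window Anderson acceleration (sketch, fixed m)."""
    C = np.asarray(C0, dtype=float).copy()
    shape = C.shape
    G_hist, F_hist = [], []  # past values of g(C) and residuals f = g(C) - C
    for it in range(max_iter):
        G = lloyd_step(X, C)
        f = (G - C).ravel()
        if np.linalg.norm(f) < tol:  # C is (numerically) a fixed point of g
            return G, it + 1
        G_hist.append(G.ravel())
        F_hist.append(f)
        G_hist, F_hist = G_hist[-(m + 1):], F_hist[-(m + 1):]
        candidate = G
        if len(F_hist) > 1:
            # Differences of consecutive residuals and g-values, one column per past step.
            dF = np.diff(np.stack(F_hist, axis=1), axis=1)
            dG = np.diff(np.stack(G_hist, axis=1), axis=1)
            # gamma minimizes ||f - dF @ gamma||; the accelerated iterate is G - dG @ gamma.
            gamma = np.linalg.lstsq(dF, f, rcond=None)[0]
            candidate = (G.ravel() - dG @ gamma).reshape(shape)
            # Assumed safeguard: reject the accelerated iterate if it raises the energy.
            if energy(X, candidate) > energy(X, G):
                candidate = G
        C = candidate
    return C, max_iter


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    centers = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
    X = np.vstack([c + 0.5 * rng.standard_normal((50, 2)) for c in centers])
    C, n_iter = anderson_kmeans(X, X[[0, 1, 2]], m=3)
    print(n_iter, energy(X, C))
```

Because an accelerated iterate is accepted only when its energy does not exceed that of the Lloyd step, the objective never increases from one iterate to the next in this sketch. The window size m is the parameter whose sensitivity the abstract refers to.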
