Yuda Bi

Cross-Modal Synthesis of Structural MRI and Functional Connectivity Networks via Conditional ViT-GANs

Sep 15, 2023

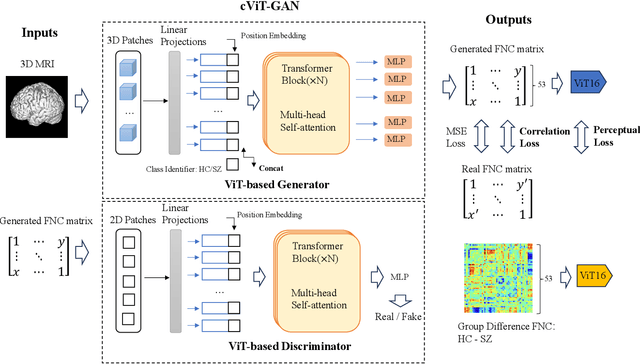

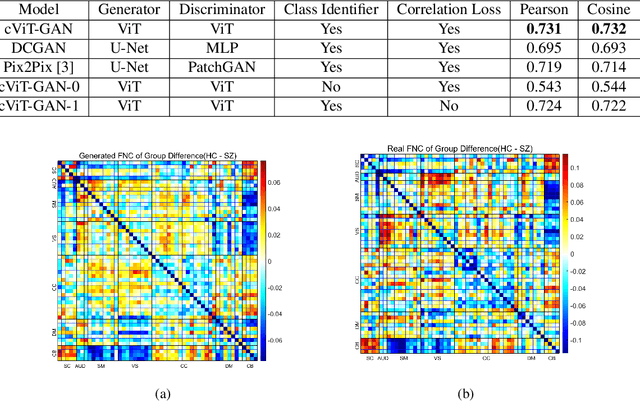

Abstract: The cross-modal synthesis between structural magnetic resonance imaging (sMRI) and functional network connectivity (FNC) is a relatively unexplored area in medical imaging, especially with respect to schizophrenia. This study employs conditional Vision Transformer Generative Adversarial Networks (cViT-GANs) to generate FNC data based on sMRI inputs. After training on a comprehensive dataset that included both individuals with schizophrenia and healthy control subjects, our cViT-GAN model effectively synthesized the FNC matrix for each subject, and then formed a group difference FNC matrix, obtaining a Pearson correlation of 0.73 with the actual FNC matrix. In addition, our FNC visualization results demonstrate significant correlations in particular subcortical brain regions, highlighting the model's capability of capturing detailed structural-functional associations. This performance distinguishes our model from conditional CNN-based GAN alternatives such as Pix2Pix. Our research is one of the first attempts to link sMRI and FNC synthesis, setting it apart from other cross-modal studies that concentrate on T1- and T2-weighted MR images or the fusion of MRI and CT scans.
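As an illustration of the evaluation this abstract describes, the following is a minimal sketch of how a group-difference FNC matrix built from synthesized data might be compared with its real counterpart using Pearson correlation. It is not the authors' code: the function names, the array shapes, the 0/1 label convention, and the choice to correlate only the upper triangle are assumptions, and the data are random stand-ins rather than real sMRI or FNC measurements.

```python
import numpy as np


def group_difference(fnc, labels):
    """Mean FNC of the patient group (label 1) minus mean FNC of controls (label 0).

    fnc has shape (subjects, nodes, nodes); the label convention is an assumption.
    """
    fnc = np.asarray(fnc, dtype=float)
    labels = np.asarray(labels)
    return fnc[labels == 1].mean(axis=0) - fnc[labels == 0].mean(axis=0)


def upper_triangle_pearson(a, b):
    """Pearson r between the strictly upper-triangular entries of two square matrices."""
    iu = np.triu_indices_from(a, k=1)  # skip the diagonal and mirrored entries
    return float(np.corrcoef(a[iu], b[iu])[0, 1])


# Synthetic stand-ins; the subject and node counts are hypothetical.
rng = np.random.default_rng(0)
n_subjects, n_nodes = 40, 53
real = rng.normal(size=(n_subjects, n_nodes, n_nodes))
real = (real + real.transpose(0, 2, 1)) / 2  # make each matrix symmetric
synthetic = real + 0.5 * rng.normal(size=real.shape)  # noisy copy standing in for model output
labels = np.array([0, 1] * (n_subjects // 2))

r = upper_triangle_pearson(
    group_difference(real, labels),
    group_difference(synthetic, labels),
)
print(f"Pearson r between group-difference matrices: {r:.2f}")
```

Restricting the comparison to the upper triangle avoids counting each symmetric entry twice; whether the reported 0.73 was computed this way is not stated in the abstract.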

Exploring the Power of Generative Deep Learning for Image-to-Image Translation and MRI Reconstruction: A Cross-Domain Review

Mar 16, 2023

Abstract: Deep learning has become a prominent computational modeling tool in the areas of computer vision and image processing in recent years. This research comprehensively analyzes the different deep-learning methods used for image-to-image translation and reconstruction in the natural and medical imaging domains. We examine the famous deep learning frameworks, such as convolutional neural networks and generative adversarial networks, and their variants, delving into the fundamental principles and difficulties of each. In the field of natural computer vision, we investigate the development and extension of various deep-learning generative models. In comparison, we investigate the possible applications of deep learning to generative medical imaging problems, including medical image translation, MRI reconstruction, and multi-contrast MRI synthesis. This thorough review provides scholars and practitioners in the areas of generative computer vision and medical imaging with useful insights for summarizing past works and getting insight into future research paths.

MultiCrossViT: Multimodal Vision Transformer for Schizophrenia Prediction using Structural MRI and Functional Network Connectivity Data

Nov 12, 2022

Abstract: Vision Transformer (ViT) is a pioneering deep learning framework that can address real-world computer vision issues, such as image classification and object recognition. Importantly, ViTs are proven to outperform traditional deep learning models, such as convolutional neural networks (CNNs). Relatively recently, a number of ViT mutations have been transplanted into the field of medical imaging, thereby resolving a variety of critical classification and segmentation challenges, especially in terms of brain imaging data. In this work, we provide a novel multimodal deep learning pipeline, MultiCrossViT, which is capable of analyzing both structural MRI (sMRI) and static functional network connectivity (sFNC) data for the prediction of schizophrenia disease. On a dataset with minimal training subjects, our novel model can achieve an AUC of 0.832. Finally, we visualize multiple brain regions and covariance patterns most relevant to schizophrenia based on the resulting ViT attention maps by extracting features from transformer encoders.

Prediction of Gender from Longitudinal MRI data via Deep Learning on Adolescent Data Reveals Unique Patterns Associated with Brain Structure and Change over a Two-year Period

Sep 15, 2022

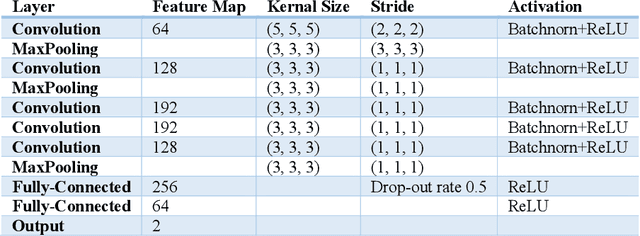

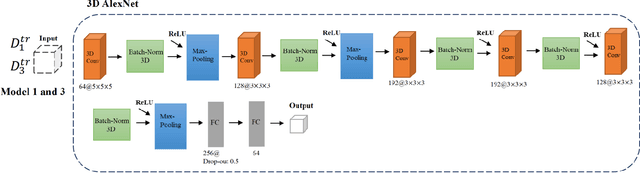

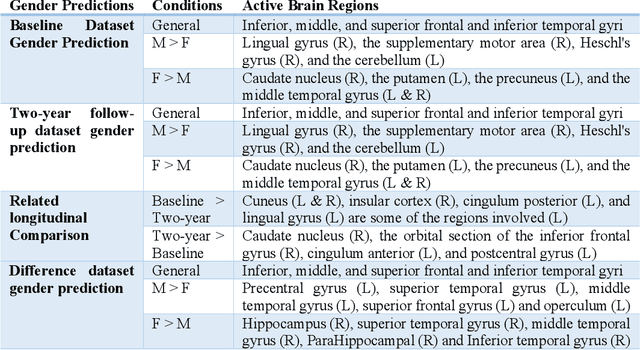

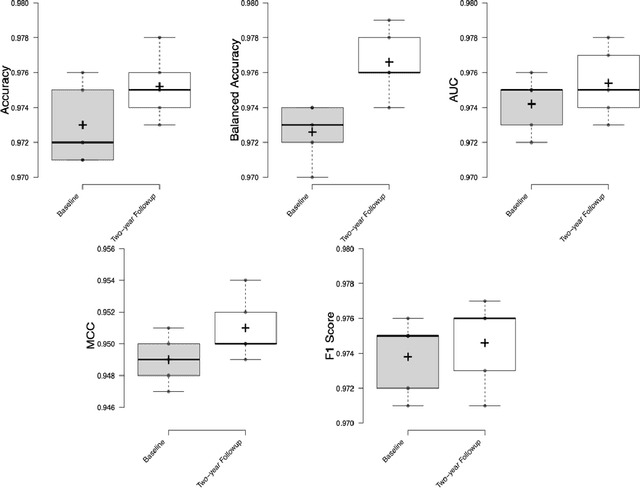

Abstract: Deep learning algorithms for predicting neuroimaging data have shown considerable promise in various applications. Prior work has demonstrated that deep learning models that take advantage of the data's 3D structure can outperform standard machine learning on several learning tasks. However, most prior research in this area has focused on neuroimaging data from adults. Within the Adolescent Brain and Cognitive Development (ABCD) dataset, a large longitudinal development study, we examine structural MRI data to predict gender and identify gender-related changes in brain structure. Results demonstrate that gender prediction accuracy is exceptionally high (>97%) with training epochs >200 and that this accuracy increases with age. Brain regions identified as the most discriminative in the task under study include predominantly frontal areas and the temporal lobe. When evaluating gender predictive changes specific to a two-year increase in age, a broader set of visual, cingulate, and insular regions are revealed. Our findings show a robust gender-related structural brain change pattern, even over a small age range. This suggests that it might be possible to study how the brain changes during adolescence by looking at how these changes are related to different behavioral and environmental factors.
