Yuanzheng Li

Advancing Attack-Resilient Scheduling of Integrated Energy Systems with Demand Response via Deep Reinforcement Learning

Nov 28, 2023

Abstract: Optimally scheduling multi-energy flows is an effective way to utilize renewable energy sources (RES) and improve the stability and economy of integrated energy systems (IES). However, the stable demand supply of IES is challenged by uncertainties arising from RES and loads, as well as by the growing impact of cyber-attacks as advanced information and communication technologies are adopted. To address these challenges, this paper proposes a model-free resilient scheduling method based on state-adversarial deep reinforcement learning (DRL) for integrated demand response (IDR)-enabled IES. The proposed method designs an IDR program to explore the interaction ability of electricity-gas-heat flexible loads. Additionally, a state-adversarial Markov decision process (SA-MDP) model characterizes the energy scheduling problem of IES under cyber-attack. The state-adversarial soft actor-critic (SA-SAC) algorithm is proposed to mitigate the impact of cyber-attacks on the scheduling strategy. Simulation results demonstrate that the method adequately addresses the uncertainties resulting from RES and loads, mitigates the impact of cyber-attacks on the scheduling strategy, and ensures a stable demand supply for various energy sources. Moreover, the proposed method is resilient against cyber-attacks: compared to the original soft actor-critic (SAC) algorithm, it achieves a 10% improvement in economic performance under cyber-attack scenarios.
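To make the state-adversarial setting concrete: in an SA-MDP, an adversary perturbs the state that the agent observes within a bounded set, and a robust policy should change its action only slightly under such perturbations. The following is a minimal sketch of that idea, not the paper's SA-SAC algorithm. The linear toy policy, its weights, the state values, and the perturbation radius `eps` are all hypothetical. The search only checks the corners of the perturbation box, which is exact for a linear policy and merely a heuristic for nonlinear ones.

```python
from itertools import product
from typing import Callable, List, Sequence


def worst_case_deviation(policy: Callable[[Sequence[float]], float],
                         state: Sequence[float],
                         eps: float) -> float:
    """Largest action change over the corners of the l-infinity ball
    of radius eps around the true state (exact for linear policies)."""
    nominal = policy(state)
    worst = 0.0
    for signs in product((-1.0, 1.0), repeat=len(state)):
        # The adversary shifts every observed state component by +/- eps.
        perturbed: List[float] = [s + eps * sg for s, sg in zip(state, signs)]
        worst = max(worst, abs(policy(perturbed) - nominal))
    return worst


def linear_policy(state: Sequence[float]) -> float:
    """Hypothetical toy policy: a fixed linear map from state to action."""
    weights = [0.5, -0.25]  # illustrative values only
    return sum(w * s for w, s in zip(weights, state))


# Hypothetical two-dimensional observation, e.g. normalized load and RES output.
dev = worst_case_deviation(linear_policy, [1.0, 2.0], eps=0.1)
```

A deviation of this kind could serve as a robustness penalty during training; whether and how the paper uses such a term is not stated in the abstract.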

PMU measurements based short-term voltage stability assessment of power systems via deep transfer learning

Aug 27, 2023

Abstract: Deep learning has emerged as an effective solution for addressing the challenges of short-term voltage stability assessment (STVSA) in power systems. However, existing deep learning-based STVSA approaches face limitations in adapting to topological changes, sample labeling, and handling small datasets. To overcome these challenges, this paper proposes a novel phasor measurement unit (PMU) measurements-based STVSA method by using deep transfer learning. The method leverages the real-time dynamic information captured by PMUs to create an initial dataset. It employs temporal ensembling for sample labeling and utilizes least squares generative adversarial networks (LSGAN) for data augmentation, enabling effective deep learning on small-scale datasets. Additionally, the method enhances adaptability to topological changes by exploring connections between different faults. Experimental results on the IEEE 39-bus test system demonstrate that the proposed method improves model evaluation accuracy by approximately 20% through transfer learning, exhibiting strong adaptability to topological changes. Leveraging the self-attention mechanism of the Transformer model, this approach offers significant advantages over shallow learning methods and other deep learning-based approaches.
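For readers unfamiliar with LSGAN, its distinguishing feature is that the discriminator and generator are trained with least-squares losses rather than the cross-entropy loss of the original GAN. The sketch below computes those two losses from discriminator scores only. The function names and sample scores are illustrative, and the target labels `a = 0`, `b = 1`, `c = 1` are a common choice that is assumed here, not taken from the abstract.

```python
from typing import Sequence


def lsgan_discriminator_loss(d_real: Sequence[float],
                             d_fake: Sequence[float],
                             a: float = 0.0,
                             b: float = 1.0) -> float:
    """Least-squares discriminator loss: push scores on real samples
    toward b and scores on generated samples toward a."""
    real_term = sum((d - b) ** 2 for d in d_real) / len(d_real)
    fake_term = sum((d - a) ** 2 for d in d_fake) / len(d_fake)
    return 0.5 * real_term + 0.5 * fake_term


def lsgan_generator_loss(d_fake: Sequence[float], c: float = 1.0) -> float:
    """Least-squares generator loss: push scores on generated samples toward c."""
    return 0.5 * sum((d - c) ** 2 for d in d_fake) / len(d_fake)


# Hypothetical scores from a discriminator that separates the two sets perfectly.
d_loss = lsgan_discriminator_loss(d_real=[1.0, 1.0], d_fake=[0.0, 0.0])
g_loss = lsgan_generator_loss(d_fake=[0.0, 0.0])
```

In this example the discriminator loss is zero while the generator loss is large, which is the signal that drives the generator to produce more realistic augmented samples.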

Federated Multi-Agent Deep Reinforcement Learning Approach via Physics-Informed Reward for Multi-Microgrid Energy Management

Dec 29, 2022

Abstract: The utilization of large-scale distributed renewable energy promotes the development of the multi-microgrid (MMG), which raises the need for an effective energy management method that minimizes economic costs and maintains energy self-sufficiency. Multi-agent deep reinforcement learning (MADRL) has been widely used for the energy management problem because of its real-time scheduling ability. However, its training requires massive energy operation data from microgrids (MGs), and gathering these data from different MGs would threaten their privacy and data security. Therefore, this paper tackles this practical yet challenging issue by proposing a federated multi-agent deep reinforcement learning (F-MADRL) algorithm via a physics-informed reward. In this algorithm, the federated learning (FL) mechanism is introduced to train the F-MADRL algorithm, thus ensuring the privacy and security of data. In addition, a decentralized MMG model is built, and the energy of each participating MG is managed by an agent, which aims to minimize economic costs and maintain energy self-sufficiency according to the physics-informed reward. First, MGs individually perform self-training on local energy operation data to train their local agent models. Then, these local models are periodically uploaded to a server and their parameters are aggregated to build a global agent, which is broadcast to the MGs and replaces their local agents. In this way, the experience of each MG agent can be shared while the energy operation data is not explicitly transmitted, thus protecting privacy and ensuring data security. Finally, experiments are conducted on the Oak Ridge National Laboratory distributed energy control communication lab microgrid (ORNL-MG) test system, and comparisons are carried out to verify the effectiveness of introducing the FL mechanism and the superior performance of the proposed F-MADRL.
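The upload, aggregate, and broadcast cycle described in this abstract can be sketched as follows. This is a minimal illustration that assumes plain weighted parameter averaging (in the style of federated averaging); the abstract does not specify the aggregation rule, and the parameter layout, function names, and numbers below are hypothetical.

```python
from typing import Dict, List, Optional

Params = Dict[str, List[float]]  # hypothetical flat parameter format


def federated_average(local_models: List[Params],
                      weights: Optional[List[float]] = None) -> Params:
    """Aggregate local agent parameters into one global parameter set
    by (optionally weighted) element-wise averaging."""
    if not local_models:
        raise ValueError("need at least one local model")
    if weights is None:
        weights = [1.0] * len(local_models)  # equal weight per microgrid
    total = sum(weights)
    global_model: Params = {}
    for name in local_models[0]:
        size = len(local_models[0][name])
        global_model[name] = [
            sum(w * m[name][i] for w, m in zip(weights, local_models)) / total
            for i in range(size)
        ]
    return global_model


def broadcast(global_model: Params, n_agents: int) -> List[Params]:
    """Give every microgrid agent its own copy of the global parameters."""
    return [{k: list(v) for k, v in global_model.items()} for _ in range(n_agents)]


# Three microgrid agents after a round of local self-training (made-up values).
local = [
    {"actor": [1.0, 2.0]},
    {"actor": [3.0, 4.0]},
    {"actor": [5.0, 6.0]},
]
global_params = federated_average(local)
agents = broadcast(global_params, n_agents=3)
```

Only model parameters cross the network in this scheme; the local energy operation data used for training never leaves each microgrid.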

Optimal scheduling of island integrated energy systems considering multi-uncertainties and hydrothermal simultaneous transmission: A deep reinforcement learning approach

Dec 27, 2022

Abstract: Multi-uncertainties from power sources and loads pose significant challenges to the stable demand supply of various resources on islands. To address these challenges, a comprehensive scheduling framework is proposed by introducing a model-free deep reinforcement learning (DRL) approach based on modeling an island integrated energy system (IES). In response to the shortage of freshwater on islands, a "hydrothermal simultaneous transmission" (HST) structure is proposed in addition to the introduction of seawater desalination systems. The essence of the IES scheduling problem is the optimal combination of each unit's output, which is a typical sequential control problem and fits the Markov decision process framework of deep reinforcement learning. Deep reinforcement learning adapts to various changes and adjusts strategies in a timely manner through the interaction of agents and the environment, avoiding complicated modeling and prediction of multi-uncertainties. The simulation results show that the proposed scheduling framework properly handles multi-uncertainties from power sources and loads, achieves a stable demand supply for various resources, and performs better than other real-time scheduling methods, especially in terms of computational efficiency. In addition, the HST model constitutes an active exploration to improve the utilization efficiency of island freshwater.

* Accepted by Applied Energy

Wind Power Forecasting Considering Data Privacy Protection: A Federated Deep Reinforcement Learning Approach

Nov 02, 2022

Abstract: In a modern power system with an increasing proportion of renewable energy, wind power prediction is crucial to the arrangement of power grid dispatching plans due to the volatility of wind power. However, traditional centralized forecasting methods raise concerns regarding data privacy preservation and the data island problem. To handle data privacy and openness, we propose a forecasting scheme that combines federated learning and deep reinforcement learning (DRL) for ultra-short-term wind power forecasting, called federated deep reinforcement learning (FedDRL). First, this paper uses the deep deterministic policy gradient (DDPG) algorithm as the basic forecasting model to improve prediction accuracy. Second, we integrate the DDPG forecasting model into the framework of federated learning. The designed FedDRL can obtain an accurate prediction model in a decentralized way by sharing model parameters instead of private data, which avoids sensitive privacy issues. The simulation results show that the proposed FedDRL outperforms traditional prediction methods in terms of forecasting accuracy. More importantly, while ensuring forecasting performance, FedDRL can effectively protect data privacy and relieve communication pressure compared with the traditional centralized forecasting method. In addition, a simulation with different federated learning parameters is conducted to confirm the robustness of the proposed scheme.

Enabling the Network to Surf the Internet

Feb 24, 2021

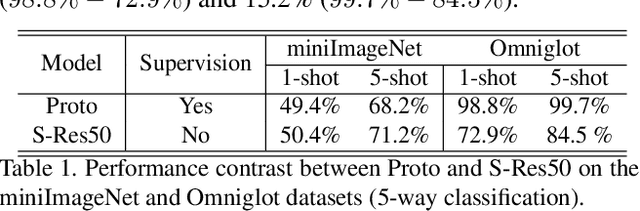

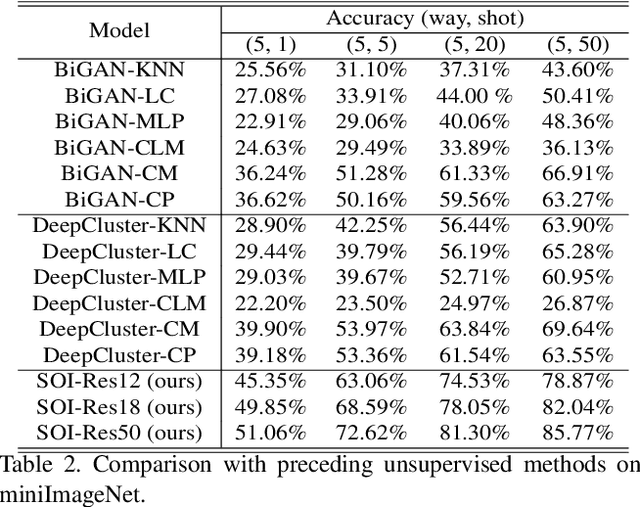

Abstract: Few-shot learning is challenging due to the limited data and labels. Existing algorithms usually resolve this problem by pre-training the model with a considerable amount of annotated data which shares knowledge with the target domain. Nevertheless, large quantities of homogeneous data samples are not always available. To tackle this issue, we develop a framework that enables the model to surf the Internet, which implies that the model can collect and annotate data without manual effort. Since online data is virtually limitless and continues to be generated, the model can constantly obtain up-to-date knowledge from the Internet. Additionally, we observe that the generalization ability of the learned representation is crucial for self-supervised learning. To demonstrate its importance, a naive yet efficient normalization strategy is proposed. Consequently, this strategy boosts the accuracy of the model significantly (by up to 20.46%). We demonstrate the superiority of the proposed framework with experiments on miniImageNet, tieredImageNet and Omniglot. The results indicate that our method surpasses previous unsupervised counterparts by a large margin (more than 10%) and obtains performance comparable with the supervised ones.
