Yousef Emam

Safe Model-Based Reinforcement Learning Using Robust Control Barrier Functions

Oct 11, 2021

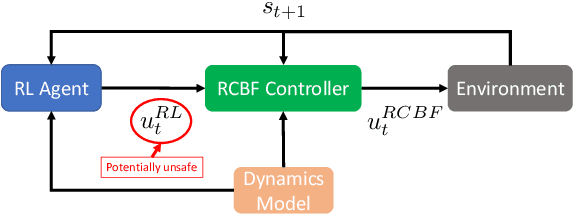

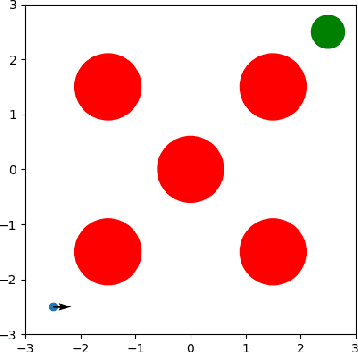

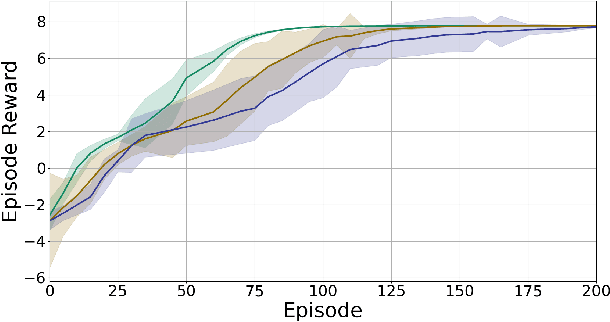

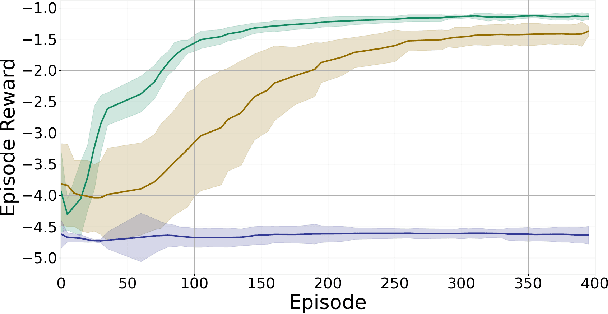

Abstract: Reinforcement Learning (RL) is effective in many scenarios. However, it typically requires the exploration of a sufficiently large number of state-action pairs, some of which may be unsafe. Consequently, its application to safety-critical systems remains a challenge. An increasingly common approach to addressing safety is to add a safety layer that projects the RL actions onto a safe set of actions. In turn, a challenge for such frameworks is how to effectively couple RL with the safety layer to improve learning performance. In the context of leveraging control barrier functions for safe RL training, prior work focuses on a restricted class of barrier functions and utilizes an auxiliary neural net to account for the effects of the safety layer, which inherently results in an approximation. In this paper, we frame safety as a differentiable robust-control-barrier-function layer in a model-based RL framework. This approach both ensures safety and effectively guides exploration during training, resulting in increased sample efficiency, as demonstrated in the experiments.
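
A minimal sketch of the safety-layer idea, under simplifying assumptions that are not taken from the paper: a 2-D single integrator (x_dot = u), one circular obstacle with barrier h(x) = ||x - o||^2 - r^2, and the nominal (non-robust) CBF condition grad_h(x) . u >= -alpha h(x). Because this condition is a single half-space in u, projecting the RL action onto it has a closed form. The function name `cbf_safety_layer` and all numbers are hypothetical.

```python
def cbf_safety_layer(x, u_rl, obstacle, radius, alpha=1.0):
    """Minimally modify an RL action so a 2-D single integrator stays outside a disk.

    Hypothetical setup: x_dot = u, barrier h(x) = ||x - obstacle||^2 - radius^2.
    The CBF condition grad_h(x) . u >= -alpha * h(x) is a half-space a . u >= b,
    so the QP  min ||u - u_rl||^2  s.t.  a . u >= b  is solved in closed form.
    """
    dx = (x[0] - obstacle[0], x[1] - obstacle[1])
    h = dx[0] ** 2 + dx[1] ** 2 - radius ** 2
    a = (2.0 * dx[0], 2.0 * dx[1])  # gradient of h at x
    b = -alpha * h                  # right-hand side of the CBF condition
    slack = a[0] * u_rl[0] + a[1] * u_rl[1] - b
    if slack >= 0.0:
        return list(u_rl)           # RL action already satisfies the CBF condition
    a_sq = a[0] ** 2 + a[1] ** 2
    if a_sq == 0.0:
        raise ValueError("grad h vanishes at x; the projection is undefined")
    step = -slack / a_sq            # shortest move along a onto the boundary a . u = b
    return [u_rl[0] + step * a[0], u_rl[1] + step * a[1]]


# Hypothetical example: robot at (-2, 0) commanded straight at a unit disk at the origin.
print(cbf_safety_layer((-2.0, 0.0), (5.0, 0.0), (0.0, 0.0), 1.0))  # [0.75, 0.0]
```

The map from the RL action to the safe action is piecewise linear, so it is differentiable almost everywhere. The paper's robust, model-based layer addresses a more general setting than this single-constraint example.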

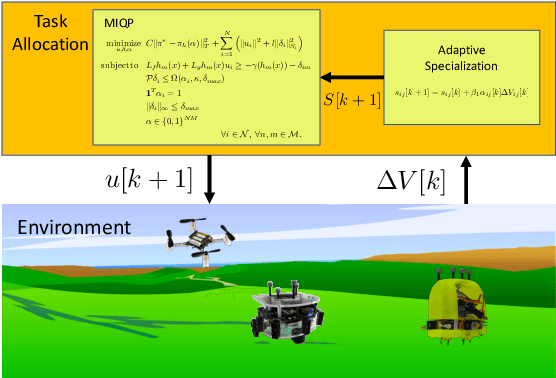

A Resilient and Energy-Aware Task Allocation Framework for Heterogeneous Multi-Robot Systems

May 12, 2021

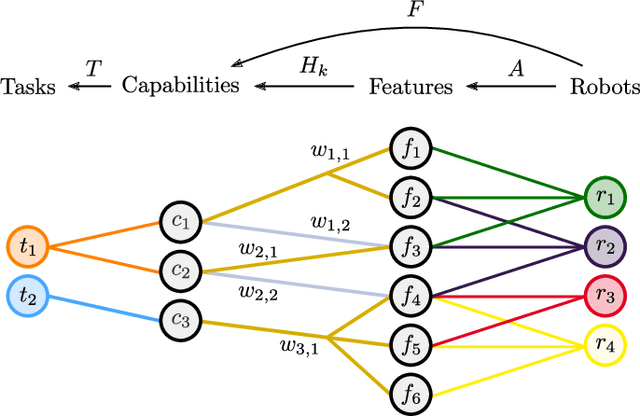

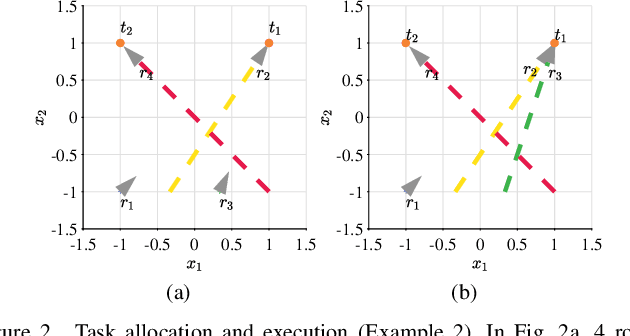

Abstract: In the context of heterogeneous multi-robot teams deployed to execute multiple tasks, this paper develops an energy-aware framework for allocating tasks to robots in an online fashion. With a primary focus on long-duration autonomy applications, we opt for a survivability-focused approach. Toward this end, task prioritization and execution, through which the allocation of tasks to robots is effectively realized, are encoded as constraints within an optimization problem aimed at minimizing the energy consumed by the robots at each point in time. In this context, an allocation is interpreted as each robot's prioritization of one task over all others. Furthermore, we present a novel framework to represent the heterogeneous capabilities of the robots by distinguishing between the features available on the robots and the capabilities enabled by these features. By embedding these descriptions within the optimization problem, we make the framework resilient both to situations in which environmental conditions render certain features unsuitable for supporting a capability and to component failures on the robots. We demonstrate the efficacy and resilience of the proposed approach in a variety of use-case scenarios, consisting of simulations and real-robot experiments.
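
The features-versus-capabilities distinction can be illustrated with plain sets. In this hypothetical encoding (not necessarily the paper's), each capability lists alternative feature sets, and a robot has the capability if it owns every feature in at least one of them. Removing a feature, to model a component failure or an environment in which the feature is unusable, then changes the derived capabilities automatically. The feature and capability names are made up, and the energy-minimizing optimization itself is not shown.

```python
def capabilities(robot_features, capability_requirements):
    """Return the capabilities a robot currently supports.

    Hypothetical representation: each capability lists alternative feature
    sets; the capability is enabled if the robot has every feature in at
    least one of those sets.
    """
    return {
        cap
        for cap, alternatives in capability_requirements.items()
        if any(req <= robot_features for req in alternatives)
    }


# Hypothetical feature/capability names, chosen only for illustration.
REQUIREMENTS = {
    "navigate": [{"wheels", "gps"}, {"wheels", "lidar"}],
    "monitor": [{"camera"}, {"thermal_camera"}],
    "transport": [{"wheels", "gripper"}],
}

robot = {"wheels", "gps", "lidar", "camera"}
print(sorted(capabilities(robot, REQUIREMENTS)))     # ['monitor', 'navigate']

# GPS unusable (e.g. indoors) and camera failed: navigation survives via lidar.
degraded = robot - {"gps", "camera"}
print(sorted(capabilities(degraded, REQUIREMENTS)))  # ['navigate']
```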

Data-Driven Robust Barrier Functions for Safe, Long-Term Operation

Apr 15, 2021

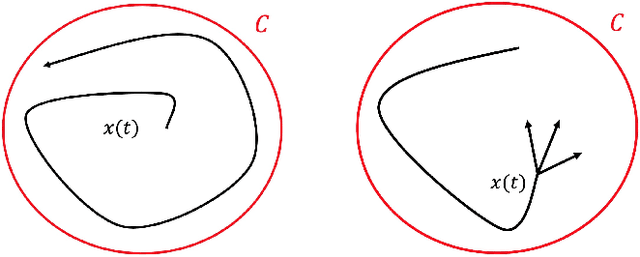

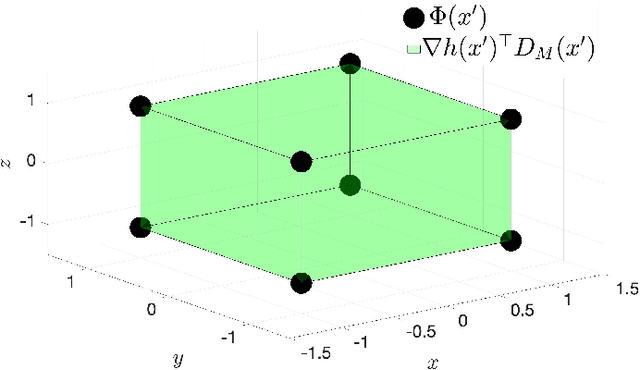

Abstract: Applications that require multi-robot systems to operate independently for extended periods of time in unknown or unstructured environments face a broad set of challenges, such as hardware degradation, changing weather patterns, or unfamiliar terrain. To operate effectively under these changing conditions, algorithms developed for long-term autonomy applications require a stronger focus on robustness. Consequently, this work considers how to satisfy the operation-critical constraints of a disturbed system in a modular fashion, that is, in a way that is compatible with different system objectives and disturbance representations. Toward this end, this paper introduces a controller-synthesis approach to constraint satisfaction for disturbed control-affine dynamical systems using Control Barrier Functions (CBFs). The framework is constructed by modeling the disturbance as a union of convex hulls and leveraging previous work on CBFs for differential inclusions. This way of modeling disturbances makes the framework compatible with different disturbance-estimation methods; for example, this work demonstrates how a disturbance learned via a Gaussian process can be used in the proposed framework. These estimated disturbances are incorporated into the proposed controller-synthesis framework, which is then tested on a fleet of robots in different scenarios.
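
One property that makes a union of convex hulls convenient: for control-affine dynamics, the CBF expression is affine in the disturbance d, so its worst case over a convex hull is attained at one of the points generating the hull, and its worst case over a union is the minimum over all hulls. The sketch below checks this robust condition for a hypothetical 2-D single integrator x_dot = u + d with a circular-obstacle barrier; the function name, hull points, and parameter values are assumptions for illustration, not the paper's formulation.

```python
def robust_cbf_margin(x, u, hulls, obstacle, radius, alpha=1.0):
    """Worst-case CBF margin for x_dot = u + d with d in a union of convex hulls.

    Hypothetical setup: barrier h(x) = ||x - obstacle||^2 - radius^2. Each hull
    is a list of points whose convex hull bounds d. The expression
    grad_h(x) . (u + d) + alpha * h(x) is affine in d, so its minimum over the
    union is the minimum over all generating points. Margin >= 0 means safe.
    """
    dx = (x[0] - obstacle[0], x[1] - obstacle[1])
    h = dx[0] ** 2 + dx[1] ** 2 - radius ** 2
    grad = (2.0 * dx[0], 2.0 * dx[1])
    worst = None
    for hull in hulls:
        for d in hull:
            value = grad[0] * (u[0] + d[0]) + grad[1] * (u[1] + d[1]) + alpha * h
            if worst is None or value < worst:
                worst = value
    return worst


# Hypothetical disturbance hulls (e.g. one per operating regime).
x, u = (-2.0, 0.0), (0.25, 0.0)
hull_a = [(0.0, 0.0), (0.25, 0.0), (0.0, 0.25)]
hull_b = [(0.5, 0.25), (0.75, 0.0), (0.5, -0.25)]
print(robust_cbf_margin(x, u, [hull_a], (0.0, 0.0), 1.0))          # 1.0
print(robust_cbf_margin(x, u, [hull_a, hull_b], (0.0, 0.0), 1.0))  # -1.0
```

Adding the second hull makes the same input unsafe in the worst case, which is why a robust controller must account for every hull in the union.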

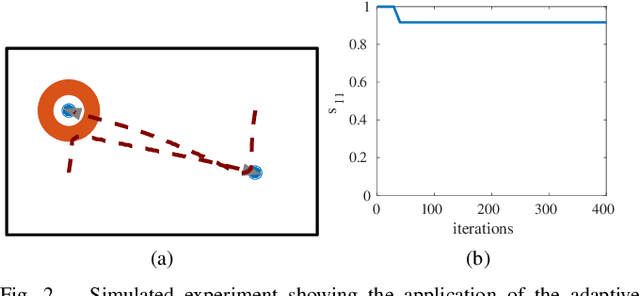

Data-Driven Adaptive Task Allocation for Heterogeneous Multi-Robot Teams Using Robust Control Barrier Functions

Nov 10, 2020

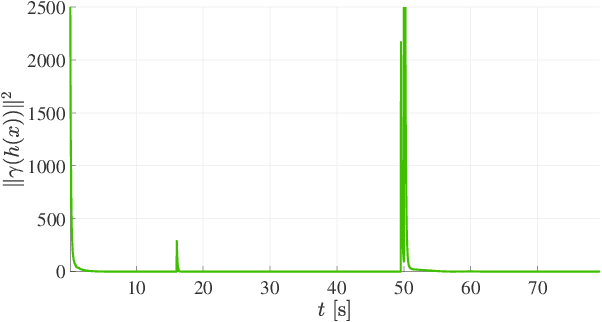

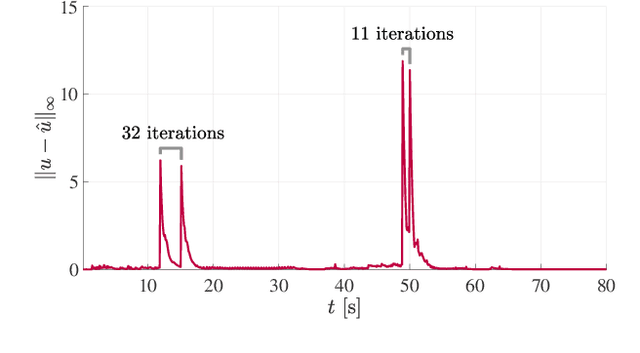

Abstract: Multi-robot task allocation is a ubiquitous problem in robotics due to its applicability in a variety of scenarios. Adaptive task-allocation algorithms account for unknown disturbances and unpredicted phenomena in the environment where robots are deployed to execute tasks. However, this adaptivity typically comes at the cost of requiring precise knowledge of robot models in order to evaluate the allocation effectiveness and to adjust the task assignment online. Consequently, environmental disturbances that significantly degrade the accuracy of these models also degrade the quality of the task allocation. In this paper, we leverage Gaussian processes, differential inclusions, and robust control barrier functions to learn environmental disturbances and thereby guarantee robust task execution. We demonstrate the implementation and effectiveness of the proposed framework on a real multi-robot system.
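
The learning step can be sketched with standard Gaussian-process regression. The sketch assumes a scalar disturbance that depends on one scalar state coordinate, a squared-exponential kernel with fixed hyperparameters, and a bound of mean +/- beta * std with beta = 2 chosen arbitrarily; none of these choices comes from the paper, and the resulting interval is a heuristic rather than a formal guarantee. The measurements are made up.

```python
import math


def rbf(a, b, length_scale=1.0, signal_var=1.0):
    """Squared-exponential kernel on scalar inputs."""
    return signal_var * math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def cholesky(A):
    """Lower-triangular L with A = L L^T (A symmetric positive definite)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(A[i][i] - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L


def forward_sub(L, b):
    """Solve L y = b for lower-triangular L."""
    y = []
    for i in range(len(L)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y


def backward_sub_T(L, y):
    """Solve L^T x = y for lower-triangular L."""
    n = len(L)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_posterior(xs, ys, x_star, noise_var=1e-4, length_scale=1.0, signal_var=1.0):
    """Posterior mean and variance of a zero-mean GP at x_star."""
    K = [[rbf(a, b, length_scale, signal_var) + (noise_var if i == j else 0.0)
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    L = cholesky(K)
    weights = backward_sub_T(L, forward_sub(L, ys))  # (K + noise_var I)^-1 y
    k_star = [rbf(x_star, a, length_scale, signal_var) for a in xs]
    mean = sum(k * w for k, w in zip(k_star, weights))
    v = forward_sub(L, k_star)
    var = rbf(x_star, x_star, length_scale, signal_var) - sum(vi * vi for vi in v)
    return mean, max(var, 0.0)  # clip tiny negative values from round-off


def disturbance_interval(xs, ys, x_star, beta=2.0, **gp_kwargs):
    """Hypothetical bound: mean +/- beta * std, a 1-D convex hull for d."""
    mean, var = gp_posterior(xs, ys, x_star, **gp_kwargs)
    std = math.sqrt(var)
    return mean - beta * std, mean + beta * std


# Made-up measurements of a disturbance (e.g. drift velocity) along one coordinate.
positions = [0.0, 1.0, 2.0, 3.0]
drift = [0.10, 0.30, 0.20, 0.00]
print(disturbance_interval(positions, drift, 1.5))   # narrow: inside the data
print(disturbance_interval(positions, drift, 10.0))  # wide: far from any data
```

The returned interval is itself a convex hull (its two endpoints), so in principle it could feed a robust CBF condition that is checked at those endpoints.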

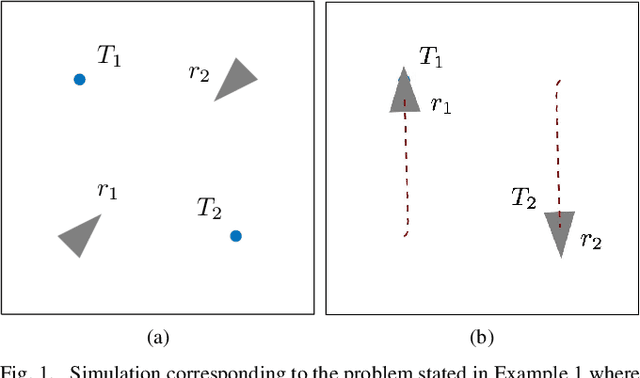

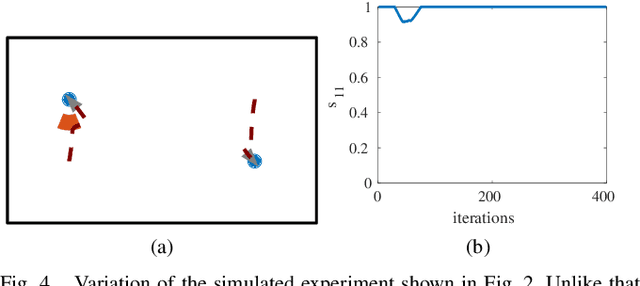

Adaptive Task Allocation for Heterogeneous Multi-Robot Teams with Evolving and Unknown Robot Capabilities

Mar 06, 2020

Abstract: For multi-robot teams with heterogeneous capabilities, typical task allocation methods assign tasks to robots based on the suitability of the robots to perform certain tasks as well as on the requirements of the tasks themselves. However, in real-world deployments of robot teams, the suitability of a robot might be unknown prior to deployment or might vary due to changing environmental conditions. This paper presents an adaptive task allocation and task execution framework that allows individual robots to prioritize among tasks while explicitly taking into account their efficacy at performing the tasks, the parameters of which might be unknown before deployment and/or vary over time. This specialization parameter, which encodes the effectiveness of a given robot at a task, is updated on the fly, allowing our algorithm to reassign tasks among robots with the aim of executing them. The developed framework requires no explicit model of the changing environment or of the unknown robot capabilities; it only takes into account the progress made by the robots at completing the tasks. Simulations and experiments demonstrate the efficacy of the proposed approach during variations in environmental conditions and when robot capabilities are unknown before deployment.
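
A toy version of the specialization update and reassignment. Both rules are hypothetical simplifications: the estimate is blended toward the ratio of observed to expected progress (so it uses only task progress, in the spirit of the abstract), and tasks are reassigned one per robot by brute-force maximization of total specialization. The paper's actual update law and allocation mechanism are not reproduced here; the names `update_specialization`, `assign`, and `gain` are illustrative.

```python
from itertools import permutations


def update_specialization(s, observed_progress, expected_progress, gain=0.5):
    """Move a specialization estimate toward the efficacy observed from progress.

    Hypothetical rule: efficacy = observed / expected progress over the last
    interval, clipped to [0, 1]; the estimate moves a fraction `gain` toward it.
    """
    if expected_progress <= 0.0:
        return s  # no information this interval
    efficacy = min(max(observed_progress / expected_progress, 0.0), 1.0)
    return (1.0 - gain) * s + gain * efficacy


def assign(specialization, tasks):
    """One task per robot maximizing total specialization (needs len(tasks) >= robots)."""
    robots = list(specialization)
    best = max(
        permutations(tasks, len(robots)),
        key=lambda perm: sum(specialization[r][t] for r, t in zip(robots, perm)),
    )
    return dict(zip(robots, best))


# Hypothetical initial estimates for two robots and two tasks.
spec = {"r1": {"A": 0.9, "B": 0.7}, "r2": {"A": 0.6, "B": 0.8}}
print(assign(spec, ["A", "B"]))  # {'r1': 'A', 'r2': 'B'}

# r1 makes no progress on A (e.g. terrain changed); its estimate drops to 0.45.
spec["r1"]["A"] = update_specialization(spec["r1"]["A"], 0.0, 1.0)
print(assign(spec, ["A", "B"]))  # {'r1': 'B', 'r2': 'A'}
```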

Robust Barrier Functions for a Fully Autonomous, Remotely Accessible Swarm-Robotics Testbed

Sep 06, 2019

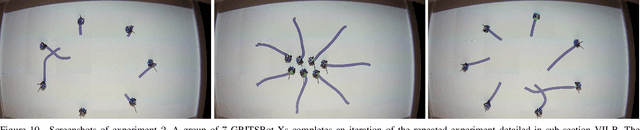

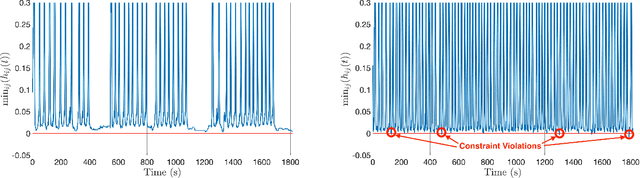

Abstract: The Robotarium, a remotely accessible swarm-robotics testbed, has provided free, open access to robotics and controls research for hundreds of users in thousands of experiments. This high level of usage requires autonomy in the system, which mainly corresponds to constraint satisfaction in the context of users' submissions. In other words, if users' inputs to the robots may lead to collisions, these inputs must be altered automatically to avoid these collisions. However, these alterations must be minimal so as to preserve the users' objectives in the experiment. Toward this end, the system has utilized barrier functions, which admit a minimally invasive controller-synthesis procedure. Yet barrier functions have yet to be robustified with respect to unmodeled disturbances (e.g., wheel slip or packet loss) in a manner conducive to real-time synthesis. To address this, this paper formulates robust barrier functions for a general class of disturbed control-affine systems, which in turn is key to enabling the Robotarium to operate fully autonomously (i.e., without human supervision). Experimental results showcase the effectiveness of this robust formulation in a long-term experiment in the Robotarium.
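
A minimal sketch of minimally invasive, disturbance-robust collision avoidance, under assumptions simpler than the paper's general formulation: robots are modeled as 2-D single integrators x_i' = u_i + d_i with ||d_i|| <= delta, and each pairwise barrier h_ij = ||p_i - p_j||^2 - D_s^2 is tightened by its worst-case disturbance term. The user's nominal input is projected onto the intersection of the resulting half-spaces with Dykstra's alternating-projection algorithm, used here in place of a quadratic-program solver to keep the example dependency-free. All names and numbers are hypothetical.

```python
import math


def project_halfspace(y, a, b):
    """Euclidean projection of y onto the half-space {u : a . u >= b}."""
    dot = sum(ai * yi for ai, yi in zip(a, y))
    if dot >= b:
        return list(y)
    scale = (b - dot) / sum(ai * ai for ai in a)
    return [yi + scale * ai for yi, ai in zip(y, a)]


def robust_collision_constraints(positions, safety_dist, delta, alpha=1.0):
    """Pairwise robust CBF half-spaces for 2-D single integrators x_i' = u_i + d_i.

    Assumes ||d_i|| <= delta. With h_ij = ||p_i - p_j||^2 - safety_dist^2, the
    worst case of 2 (p_i - p_j) . (d_i - d_j) is -4 * delta * ||p_i - p_j||.
    """
    n = len(positions)
    constraints = []
    for i in range(n):
        for j in range(i + 1, n):
            dx = positions[i][0] - positions[j][0]
            dy = positions[i][1] - positions[j][1]
            dist = math.hypot(dx, dy)
            h = dist ** 2 - safety_dist ** 2
            a = [0.0] * (2 * n)  # acts on the stacked input [u_1, ..., u_n]
            a[2 * i], a[2 * i + 1] = 2.0 * dx, 2.0 * dy
            a[2 * j], a[2 * j + 1] = -2.0 * dx, -2.0 * dy
            b = -alpha * h + 4.0 * delta * dist  # tightened by the disturbance bound
            constraints.append((a, b))
    return constraints


def minimally_invasive_filter(u_nom, constraints, iters=500):
    """Project u_nom onto the intersection of half-spaces with Dykstra's algorithm."""
    x = list(u_nom)
    corrections = [[0.0] * len(x) for _ in constraints]
    for _ in range(iters):
        for k, (a, b) in enumerate(constraints):
            y = [xi + ci for xi, ci in zip(x, corrections[k])]
            x = project_halfspace(y, a, b)
            corrections[k] = [yi - xi for yi, xi in zip(y, x)]
    return x


# Hypothetical example: two robots 1 m apart driving straight at each other.
cons = robust_collision_constraints([(-0.5, 0.0), (0.5, 0.0)], safety_dist=0.4, delta=0.05)
u = minimally_invasive_filter([1.0, 0.0, -1.0, 0.0], cons)
print([round(v, 6) for v in u])  # [0.16, 0.0, -0.16, 0.0]
```

The filter changes the user's input only as much as needed to satisfy every tightened constraint; a larger delta raises each right-hand side b and therefore shrinks the set of admissible inputs.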
