Yosuke Shinya

BandRe: Rethinking Band-Pass Filters for Scale-Wise Object Detection Evaluation

Jul 21, 2023

Abstract: Scale-wise evaluation of object detectors is important for real-world applications. However, existing metrics are either coarse or not sufficiently reliable. In this paper, we propose novel scale-wise metrics that strike a balance between fineness and reliability, using a filter bank consisting of triangular and trapezoidal band-pass filters. We conduct experiments with two methods on two datasets and show that the proposed metrics can highlight the differences between the methods and between the datasets. Code is available at https://github.com/shinya7y/UniverseNet .

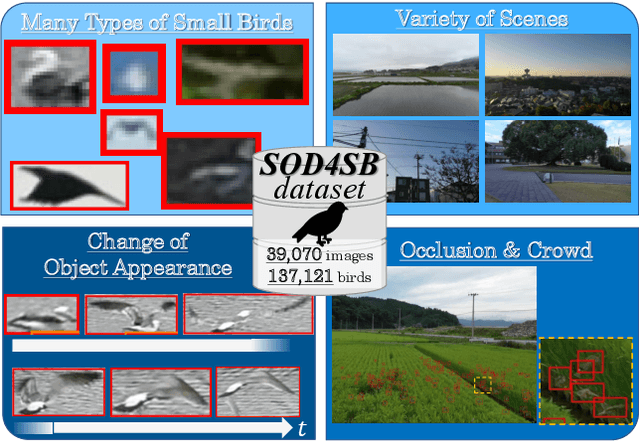

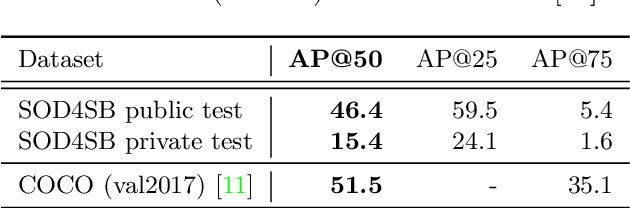

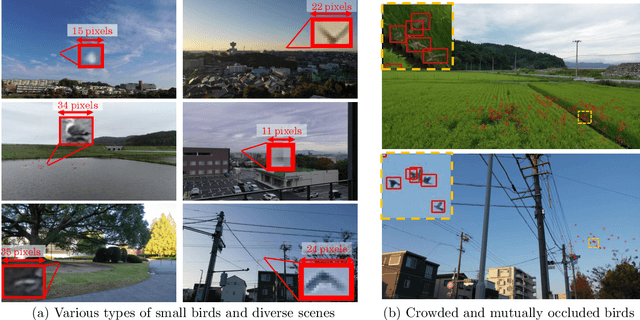

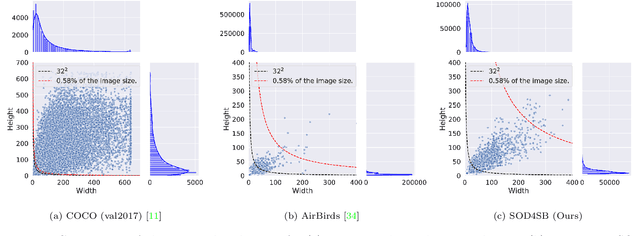

MVA2023 Small Object Detection Challenge for Spotting Birds: Dataset, Methods, and Results

Jul 18, 2023

Abstract: Small Object Detection (SOD) is an important machine vision topic because (i) a variety of real-world applications require object detection for distant objects and (ii) SOD is a challenging task due to the noisy, blurred, and less-informative image appearances of small objects. This paper proposes a new SOD dataset consisting of 39,070 images including 137,121 bird instances, which is called the Small Object Detection for Spotting Birds (SOD4SB) dataset. The details of the challenge with the SOD4SB dataset are introduced in this paper. In total, 223 participants joined this challenge. This paper briefly introduces the award-winning methods. The dataset, the baseline code, and the website for evaluation on the public test set are publicly available.

USB: Universal-Scale Object Detection Benchmark

Mar 25, 2021

Abstract: Benchmarks, such as COCO, play a crucial role in object detection. However, existing benchmarks are insufficient in scale variation, and their protocols are inadequate for fair comparison. In this paper, we introduce the Universal-Scale object detection Benchmark (USB). USB has variations in object scales and image domains by incorporating COCO with the recently proposed Waymo Open Dataset and Manga109-s dataset. To enable fair comparison, we propose USB protocols by defining multiple thresholds for training epochs and evaluation image resolutions. By analyzing methods on the proposed benchmark, we designed fast and accurate object detectors called UniverseNets, which surpassed all baselines on USB and achieved state-of-the-art results on existing benchmarks. Specifically, UniverseNets achieved 54.1% AP on COCO test-dev with 20 epochs training, the top result among single-stage detectors on the Waymo Open Dataset Challenge 2020 2D detection, and the first place in the NightOwls Detection Challenge 2020 all objects track. The code is available at https://github.com/shinya7y/UniverseNet .

Domain Adaptation Regularization for Spectral Pruning

Dec 26, 2019

Abstract: Deep Neural Networks (DNNs) have recently been achieving state-of-the-art performance on a variety of computer vision related tasks. However, their computational cost limits their ability to be implemented in embedded systems with restricted resources or strict latency constraints. Model compression has therefore been an active field of research to overcome this issue. On the other hand, DNNs typically require massive amounts of labeled data to be trained. This represents a second limitation to their deployment. Domain Adaptation (DA) addresses this issue by allowing knowledge learned on one labeled source distribution to be transferred to a target distribution, possibly unlabeled. In this paper, we investigate possible improvements of compression methods in the DA setting. We focus on a compression method that was previously developed in the context of a single data distribution and show that, with a careful choice of data to use during compression and additional regularization terms directly related to DA objectives, it is possible to improve compression results. We also show that our method outperforms an existing compression method studied in the DA setting by a large margin for high compression rates. Although our work is based on one specific compression method, we also outline some general guidelines for improving compression in the DA setting.

Understanding the Effects of Pre-Training for Object Detectors via Eigenspectrum

Sep 09, 2019

Abstract: ImageNet pre-training has long been regarded as essential for training accurate object detectors. Recently, it has been shown that object detectors trained from randomly initialized weights can be on par with those fine-tuned from ImageNet pre-trained models. However, the effects of pre-training and the differences caused by pre-training are still not fully understood. In this paper, we analyze the eigenspectrum dynamics of the covariance matrix of each feature map in object detectors. Based on our analysis of ResNet-50, Faster R-CNN with FPN, and Mask R-CNN, we show that object detectors trained from ImageNet pre-trained models and those trained from scratch behave differently from each other even if both object detectors have similar accuracy. Furthermore, we propose a method for automatically determining the widths (the numbers of channels) of object detectors based on the eigenspectrum. We train Faster R-CNN with FPN from randomly initialized weights, and show that our method can reduce the parameters of ResNet-50 by ~27% without increasing Multiply-Accumulate operations or losing accuracy. Our results indicate that we should develop more appropriate methods for transferring knowledge from image classification to object detection (or other tasks).
