Yoon-Sik Cho

Graph Perceiver IO: A General Architecture for Graph Structured Data

Sep 14, 2022

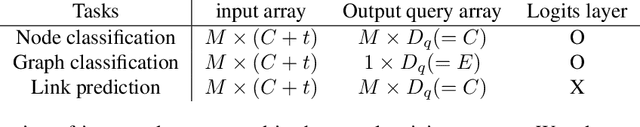

Abstract: Multimodal machine learning has been widely studied for the development of general intelligence. Recently, two notable multimodal architectures, the Perceiver and Perceiver IO, have shown competitive results across diverse dataset domains and tasks. However, both have focused on heterogeneous modalities such as images, text, and speech, and there is little work on graph structured datasets. A graph is one of the most general data structures, and other data types, including images, text, and speech, can be represented as graph structured data. Unlike text and images, a graph has an adjacency matrix, and it is not trivial to handle its topological information, relational information, and canonical positional information. In this study, we propose Graph Perceiver IO, the Perceiver IO for graph structured datasets. We keep the main structure of Graph Perceiver IO the same as that of Perceiver IO because Perceiver IO already handles diverse datasets well, except for graph structured datasets. Graph Perceiver IO is a general method that can handle graph structured data as well as text and images. Compared with graph neural networks, Graph Perceiver IO has lower complexity and can incorporate local and global information efficiently. We show that Graph Perceiver IO achieves competitive results on diverse graph-related tasks, including node classification, graph classification, and link prediction.

Latent Space Model for Multi-Modal Social Data

Oct 18, 2015

Abstract: With the emergence of social networking services, researchers enjoy the increasing availability of large-scale heterogeneous datasets capturing online user interactions and behaviors. Traditional analysis of techno-social systems data has focused mainly on describing either the dynamics of social interactions, or the attributes and behaviors of the users. However, overwhelming empirical evidence suggests that the two dimensions affect one another, and therefore they should be jointly modeled and analyzed in a multi-modal framework. The benefits of such an approach include the ability to build better predictive models, leveraging social network information as well as user behavioral signals. To this purpose, here we propose the Constrained Latent Space Model (CLSM), a generalized framework that combines Mixed Membership Stochastic Blockmodels (MMSB) and Latent Dirichlet Allocation (LDA) incorporating a constraint that forces the latent space to concurrently describe the multiple data modalities. We derive an efficient inference algorithm based on Variational Expectation Maximization that has a computational cost linear in the size of the network, thus making it feasible to analyze massive social datasets. We validate the proposed framework on two problems: prediction of social interactions from user attributes and behaviors, and behavior prediction exploiting network information. We perform experiments with a variety of multi-modal social systems, spanning location-based social networks (Gowalla), social media services (Instagram, Orkut), e-commerce and review sites (Amazon, Ciao), and finally citation networks (Cora). The results indicate significant improvement in prediction accuracy over state-of-the-art methods, and demonstrate the flexibility of the proposed approach for addressing a variety of different learning problems commonly occurring with multi-modal social data.

* 12 pages, 7 figures, 2 tables

Latent Self-Exciting Point Process Model for Spatial-Temporal Networks

Apr 30, 2014

Abstract: We propose a latent self-exciting point process model that describes geographically distributed interactions between pairs of entities. In contrast to most existing approaches that assume fully observable interactions, here we consider a scenario where certain interaction events lack information about participants. Instead, this information needs to be inferred from the available observations. We develop an efficient approximate algorithm based on variational expectation-maximization to infer unknown participants in an event given the location and the time of the event. We validate the model on synthetic as well as real-world data, and obtain very promising results on the identity-inference task. We also use our model to predict the timing and participants of future events, and demonstrate that it compares favorably with baseline approaches.

* 20 pages, 6 figures (v3); 11 pages, 6 figures (v2); previous version appeared in the 9th Bayesian Modeling Applications Workshop, UAI'12

Co-evolution of Selection and Influence in Social Networks

Jun 14, 2011

Abstract: Many networks are complex dynamical systems, where both attributes of nodes and topology of the network (link structure) can change with time. We propose a model of co-evolving networks where both node attributes and network structure evolve under mutual influence. Specifically, we consider a mixed membership stochastic blockmodel, where the probability of observing a link between two nodes depends on their current membership vectors, while those membership vectors themselves evolve in the presence of a link between the nodes. Thus, the network is shaped by the interaction of stochastic processes describing the nodes, while the processes themselves are influenced by the changing network structure. We derive an efficient variational inference procedure for our model, and validate the model on both synthetic and real-world data.
