Yonghwan Kim

Vital Sign Forecasting for Sepsis Patients in ICUs

Nov 08, 2023

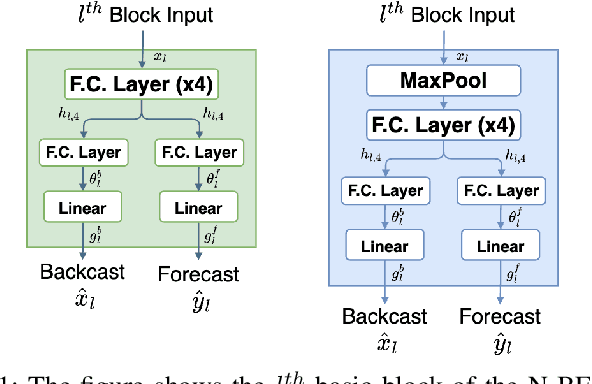

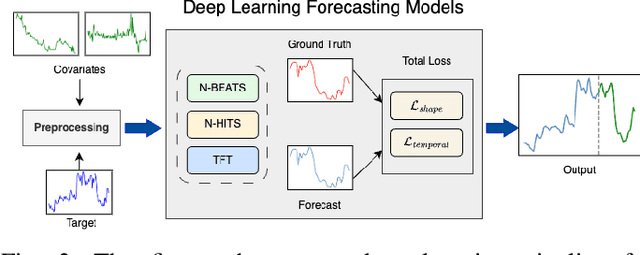

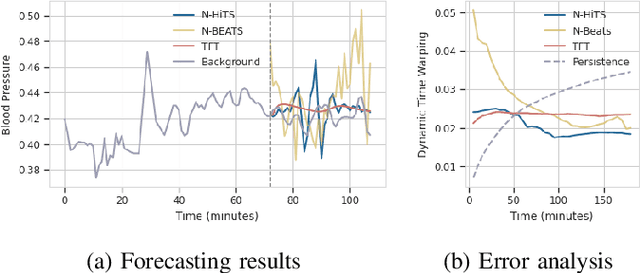

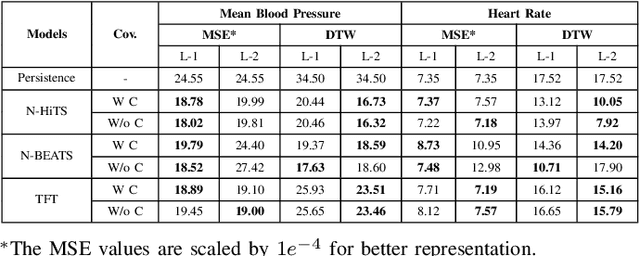

Abstract: Sepsis and septic shock are critical medical conditions affecting millions globally, with a substantial mortality rate. This paper uses state-of-the-art deep learning (DL) architectures to introduce a multi-step forecasting system to predict vital signs indicative of septic shock progression in Intensive Care Units (ICUs). Our approach utilizes a short window of historical vital sign data to forecast future physiological conditions. We introduce a DL-based vital sign forecasting system that predicts up to 3 hours of future vital signs from 6 hours of past data. We further adopt the DILATE loss function to better capture the shape and temporal dynamics of vital signs, which are critical for clinical decision-making. We compare three DL models, N-BEATS, N-HiTS, and Temporal Fusion Transformer (TFT), using the publicly available eICU Collaborative Research Database (eICU-CRD), highlighting their forecasting capabilities in a critical care setting. We evaluate the performance of our models using mean squared error (MSE) and dynamic time warping (DTW) metrics. Our findings show that while TFT excels in capturing overall trends, N-HiTS is superior in retaining short-term fluctuations within a predefined range. This paper demonstrates the potential of deep learning in transforming the monitoring systems in ICUs, potentially leading to significant improvements in patient care and outcomes by accurately forecasting vital signs to assist healthcare providers in detecting early signs of physiological instability and anticipating septic shock.
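As a rough illustration of the two evaluation metrics named in this abstract, the sketch below computes MSE and a classic DTW distance in plain Python. It is not the authors' evaluation code: the function names, the absolute-difference local cost, and the toy heart-rate values are assumptions made for the example.

```python
def mse(actual, forecast):
    """Mean squared error between two equally long sequences."""
    if len(actual) != len(forecast):
        raise ValueError("sequences must have the same length")
    return sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)


def dtw_distance(a, b):
    """Classic dynamic time warping distance with |x - y| as the local cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best cumulative cost aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a[i-1] also matches an earlier point of b
                cost[i][j - 1],      # b[j-1] also matches an earlier point of a
                cost[i - 1][j - 1],  # both sequences advance together
            )
    return cost[n][m]


# Hypothetical heart-rate values: the forecast has the right shape but lags by one step.
actual = [80, 82, 85, 90]
forecast = [80, 80, 82, 85]
print(mse(actual, forecast))           # pointwise error penalizes the lag
print(dtw_distance(actual, forecast))  # warping absorbs most of the lag
```

The contrast between the two numbers shows why a shape-aware measure is reported alongside MSE: a forecast that is correct in shape but slightly shifted in time is penalized heavily by MSE and only lightly by DTW.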

Extending Machine Learning-Based Early Sepsis Detection to Different Demographics

Nov 07, 2023

Abstract: Sepsis requires urgent diagnosis, but research is predominantly focused on Western datasets. In this study, we perform a comparative analysis of two ensemble learning methods, LightGBM and XGBoost, using the public eICU-CRD dataset and a private dataset from St. Mary's Hospital in South Korea. Our analysis reveals the effectiveness of these methods in addressing healthcare data imbalance and enhancing sepsis detection. Specifically, LightGBM shows a slight edge in computational efficiency and scalability. The study paves the way for the broader application of machine learning in critical care, thereby expanding the reach of predictive analytics in healthcare globally.

Interpreting Forecasted Vital Signs Using N-BEATS in Sepsis Patients

Jun 24, 2023

Abstract: Detecting and predicting septic shock early is crucial for the best possible outcome for patients. Accurately forecasting the vital signs of patients with sepsis provides valuable insights to clinicians for timely interventions, such as administering stabilizing drugs or optimizing infusion strategies. Our research examines N-BEATS, an interpretable deep-learning forecasting model that can forecast 3 hours of vital signs for sepsis patients in intensive care units (ICUs). In this work, we use the N-BEATS interpretable configuration to forecast the vital sign trends and compare them with the actual trend to better understand the patient's changing condition and the effects of infused drugs on their vital signs. We evaluate our approach using the publicly available eICU Collaborative Research Database dataset and rigorously evaluate the vital sign forecasts using out-of-sample evaluation criteria. We present the performance of our model using error metrics, including mean squared error (MSE), mean absolute percentage error (MAPE), and dynamic time warping (DTW), where the best scores achieved are 18.52e-4, 7.60, and 17.63e-3, respectively. We analyze the samples where the forecasted trend does not match the actual trend and study the impact of infused drugs on changing the actual vital signs compared to the forecasted trend. Additionally, we examined the mortality rates of patients where the actual trend and the forecasted trend did not match. We observed that the mortality rate was higher (92%) when the actual and forecasted trends closely matched, compared to when they were not similar (84%).
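For readers unfamiliar with MAPE, a minimal sketch of the usual definition follows. The function name and sample values are hypothetical, and the abstract does not state how the reported score was scaled, so the percentage scaling here is an assumption.

```python
def mape(actual, forecast):
    """Mean absolute percentage error, expressed in percent.

    Assumes no actual value is zero, since each error is divided by it.
    """
    if len(actual) != len(forecast):
        raise ValueError("sequences must have the same length")
    ratios = [abs(a - f) / abs(a) for a, f in zip(actual, forecast)]
    return 100.0 * sum(ratios) / len(ratios)


# Hypothetical systolic blood pressure readings and forecasts.
print(mape([100, 200], [110, 180]))  # each forecast is off by 10% of the actual value
```

Because each error is divided by the actual value, MAPE is scale-free, which makes it easier to compare across vital signs measured in different units.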

Investigating Poor Performance Regions of Black Boxes: LIME-based Exploration in Sepsis Detection

Jun 21, 2023

Abstract: Interpreting machine learning models remains a challenge, hindering their adoption in clinical settings. This paper proposes leveraging Local Interpretable Model-Agnostic Explanations (LIME) to provide interpretable descriptions of black box classification models in high-stakes sepsis detection. By analyzing misclassified instances, significant features contributing to suboptimal performance are identified. The analysis reveals regions where the classifier performs poorly, allowing the calculation of error rates within these regions. This knowledge is crucial for cautious decision-making in sepsis detection and other critical applications. The proposed approach is demonstrated using the eICU dataset, effectively identifying and visualizing regions where the classifier underperforms. By enhancing interpretability, our method promotes the adoption of machine learning models in clinical practice, empowering informed decision-making and mitigating risks in critical scenarios.

Complete Visibility Algorithm for Autonomous Mobile Luminous Robots under an Asynchronous Scheduler on Grid Plane

Jun 14, 2023

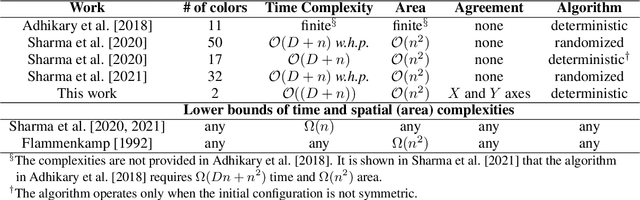

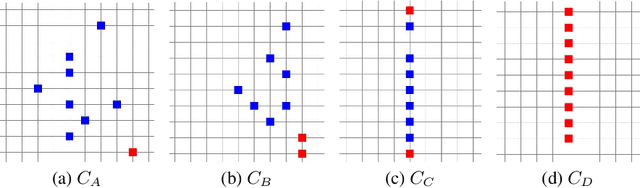

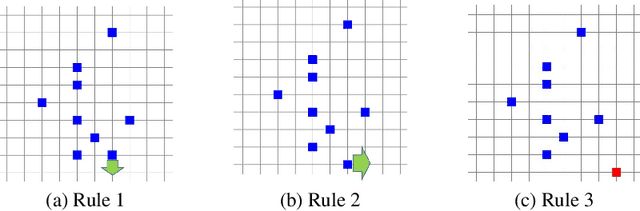

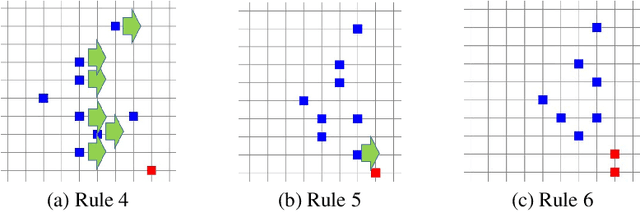

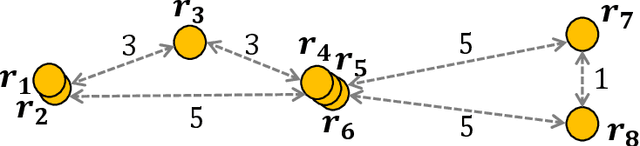

Abstract: An autonomous mobile robot system is a distributed system consisting of mobile computational entities (called robots) that autonomously and repeatedly perform three operations: Look, Compute, and Move. Various problems related to autonomous mobile robots, such as gathering, pattern formation, or flocking, have been extensively studied to understand the relationship between each robot's capabilities and the solvability of these problems. In this study, we focus on the complete visibility problem, which involves relocating all the robots on an infinite grid plane such that each robot is visible to every other robot. We assume that each robot is a luminous robot (i.e., has a light with a constant number of colors) and opaque (not transparent). In this paper, we propose an algorithm to achieve complete visibility when a set of robots is given. Using only two colors, the algorithm ensures that complete visibility is achieved even when robots operate asynchronously and have no knowledge of the total number of robots on the grid plane.
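The goal condition of the complete visibility problem can be checked with a short sketch. It assumes robots are opaque points located on distinct integer grid nodes, so that a robot blocks the view between two others exactly when it lies strictly between them on the connecting segment. This checks only the final configuration and is not the proposed algorithm; the function names are hypothetical.

```python
from itertools import combinations


def blocks(p, q, r):
    """Return True if robot r lies strictly between p and q on segment pq."""
    # Zero cross product means p, q, r are collinear.
    cross = (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])
    if cross != 0:
        return False
    # The projection of r onto pq must fall strictly inside the segment.
    dot = (r[0] - p[0]) * (q[0] - p[0]) + (r[1] - p[1]) * (q[1] - p[1])
    squared_length = (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2
    return 0 < dot < squared_length


def is_completely_visible(robots):
    """True if every pair of robots can see each other (no robot obstructs any pair)."""
    points = list(robots)
    return not any(
        blocks(p, q, r)
        for p, q in combinations(points, 2)
        for r in points
        if r != p and r != q
    )


print(is_completely_visible([(0, 0), (1, 0), (2, 0)]))          # middle robot blocks the outer two
print(is_completely_visible([(0, 0), (1, 2), (2, 1), (3, 3)]))  # no three robots are collinear
```

Under this point-robot assumption, complete visibility is equivalent to no three robots being collinear, which is what the pairwise check verifies.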

Gathering Despite Defected View

Aug 17, 2022

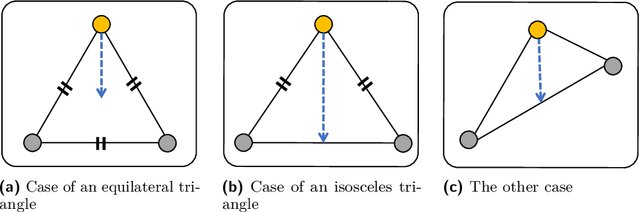

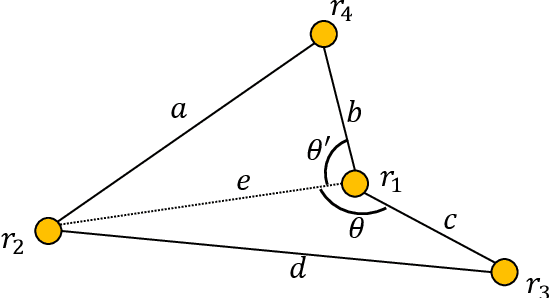

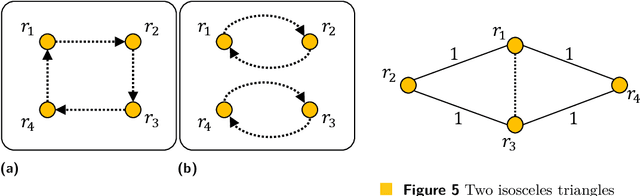

Abstract: Autonomous mobile robot systems, which consist of many mobile computational entities (called robots), have attracted much attention from researchers, and clarifying the relationship between the capabilities of robots and the solvability of problems has been an active research topic over the past couple of decades. Generally, each robot can observe all other robots, regardless of their number, as long as there are no restrictions on visibility range and no obstructions. In this paper, we provide a new perspective on observation by robots: a robot cannot necessarily observe all other robots, regardless of the distances to them. We call this new computational model the defected view model. Under this model, we consider the gathering problem, which requires all the robots to gather at the same point, and propose two algorithms that solve it in the adversarial ($N$,$N-2$)-defected model for $N \geq 5$ (where each robot observes at most $N-2$ robots chosen adversarially) and in the distance-based (4,2)-defected model (where each robot observes at most the 2 robots closest to itself), respectively, where $N$ is the number of robots. Moreover, we present an impossibility result showing that there is no (deterministic) gathering algorithm in the adversarial or distance-based (3,1)-defected model. We also show an impossibility result for gathering in a relaxed ($N$, $N-2$)-defected model.
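To make the distance-based defected view concrete, the following sketch returns which robots a given observer would see when limited to its k closest robots. The function name, the Euclidean metric, and the index-based tie-breaking are assumptions for illustration; the abstract does not specify how ties are resolved.

```python
import math


def observed_robots(positions, observer, k):
    """Indices of the (at most) k robots closest to the observer.

    Ties in distance are broken by robot index, which is an assumption
    made here only to keep the example deterministic.
    """
    others = [i for i in range(len(positions)) if i != observer]
    others.sort(key=lambda i: (math.dist(positions[observer], positions[i]), i))
    return others[:k]


# Hypothetical configuration with N = 4 robots and k = 2, mirroring the (4,2) setting.
positions = [(0, 0), (1, 0), (3, 0), (10, 0)]
for robot in range(len(positions)):
    print(robot, observed_robots(positions, robot, 2))
```

In this configuration no robot observes every other robot, so each one computes its move from a partial snapshot, which is the difficulty the defected view model introduces.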

Gathering of seven autonomous mobile robots on triangular grids

Mar 15, 2021

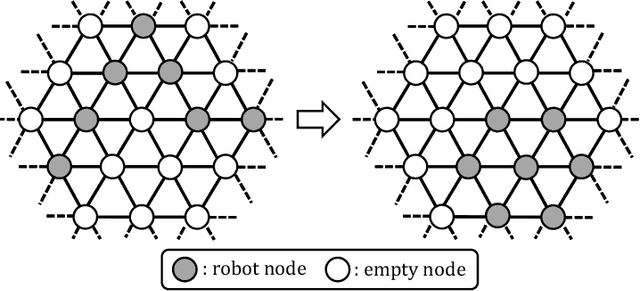

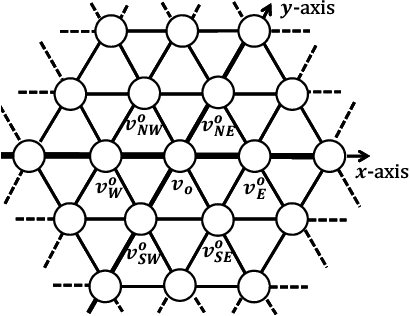

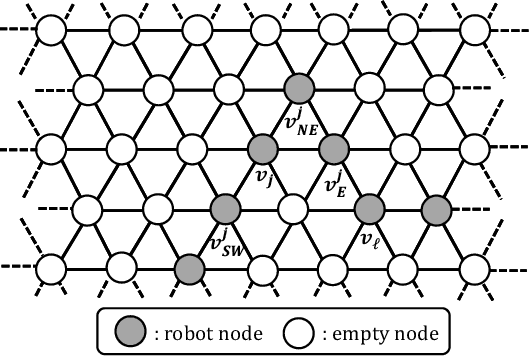

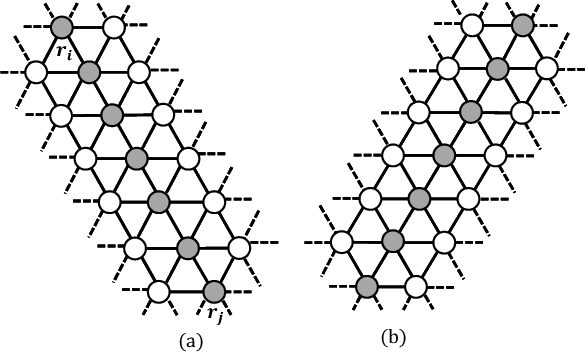

Abstract: In this paper, we consider the gathering problem of seven autonomous mobile robots on triangular grids. The gathering problem requires that, starting from any connected initial configuration where a subgraph induced by all robot nodes (nodes where a robot exists) constitutes one connected graph, robots reach a configuration such that the maximum distance between two robots is minimized. For the case of seven robots, gathering is achieved when one robot has six adjacent robot nodes (they form a shape like a hexagon). In this paper, we aim to clarify the relationship between the capability of robots and the solvability of gathering on a triangular grid. In particular, we focus on the visibility range of robots. To discuss the solvability of the problem in terms of the visibility range, we consider strong assumptions except for visibility range. Concretely, we assume that robots are fully synchronous and that they agree on the direction and orientation of the x-axis and on chirality in the triangular grid. In this setting, we first consider the weakest assumption about visibility range, i.e., robots with visibility range 1. In this case, we show that there exists no collision-free algorithm to solve the gathering problem. Next, we extend the visibility range to 2. In this case, we show that our algorithm can solve the problem from any connected initial configuration. Thus, the proposed algorithm is optimal in terms of visibility range.
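The two configuration properties described in this abstract, a connected initial configuration and the hexagon-shaped gathered configuration, can be sketched as simple checks. The axial coordinate system used to index triangular-grid nodes is an assumption made for the example, not necessarily the paper's representation, and the function names are hypothetical.

```python
# Assumed axial coordinates (q, r): every node of the triangular grid has six neighbors.
NEIGHBOR_OFFSETS = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, -1), (-1, 1)]


def neighbors(node):
    """The six grid nodes adjacent to the given node."""
    q, r = node
    return [(q + dq, r + dr) for dq, dr in NEIGHBOR_OFFSETS]


def is_connected(robot_nodes):
    """True if the subgraph induced by the robot nodes is connected."""
    nodes = set(robot_nodes)
    if not nodes:
        return True
    start = next(iter(nodes))
    seen, stack = {start}, [start]
    while stack:  # depth-first search restricted to robot nodes
        current = stack.pop()
        for nb in neighbors(current):
            if nb in nodes and nb not in seen:
                seen.add(nb)
                stack.append(nb)
    return seen == nodes


def is_gathered(robot_nodes):
    """True if seven robots occupy a node together with all six of its neighbors."""
    nodes = set(robot_nodes)
    return len(nodes) == 7 and any(
        all(nb in nodes for nb in neighbors(node)) for node in nodes
    )


hexagon = [(0, 0)] + neighbors((0, 0))
line = [(i, 0) for i in range(7)]
print(is_connected(hexagon), is_gathered(hexagon))
print(is_connected(line), is_gathered(line))
```

A line of seven robots is a valid connected starting configuration but is not gathered, whereas the hexagon satisfies both checks.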
