Yixiang Shan

Contrastive Diffuser: Planning Towards High Return States via Contrastive Learning

Feb 06, 2024

Abstract: Applying diffusion models to long-term planning in reinforcement learning has recently gained much attention. Several diffusion-based methods have successfully leveraged the ability of diffusion to model arbitrary distributions. These methods generate subsequent trajectories for planning and have demonstrated significant improvement. However, they are limited by their plain base distributions and by overlooking the diversity of samples, in which different states have different returns. They simply use diffusion to learn the distribution of the offline dataset and generate trajectories whose states share the same distribution as that dataset. As a result, the probability that these models reach high-return states depends largely on the dataset distribution. Even when equipped with a guidance model, their performance is still suppressed. To address these limitations, we propose a novel method called CDiffuser, which devises a return contrast mechanism that pulls the states in generated trajectories towards high-return states while pushing them away from low-return states, thereby improving the base distribution. Experiments on 14 commonly used D4RL benchmarks demonstrate the effectiveness of our proposed method.

DiffStitch: Boosting Offline Reinforcement Learning with Diffusion-based Trajectory Stitching

Feb 04, 2024

Abstract: In offline reinforcement learning (RL), the performance of the learned policy depends heavily on the quality of the offline dataset. However, in many cases the offline dataset contains very few optimal trajectories, which poses a challenge for offline RL algorithms because agents must acquire the ability to transition to high-reward regions. To address this issue, we introduce Diffusion-based Trajectory Stitching (DiffStitch), a novel diffusion-based data augmentation pipeline that systematically generates stitching transitions between trajectories. DiffStitch effectively connects low-reward trajectories with high-reward trajectories, forming globally optimal trajectories to address the challenges faced by offline RL algorithms. Empirical experiments conducted on D4RL datasets demonstrate the effectiveness of DiffStitch across RL methodologies. Notably, DiffStitch substantially improves the performance of one-step methods (IQL), imitation learning methods (TD3+BC), and trajectory optimization methods (DT).

Learning the Network of Graphs for Graph Neural Networks

Oct 08, 2022

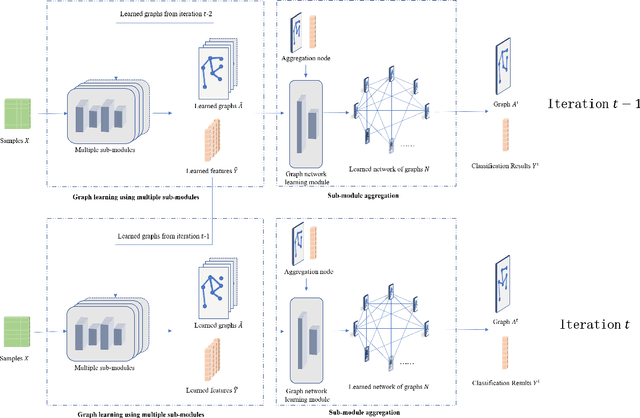

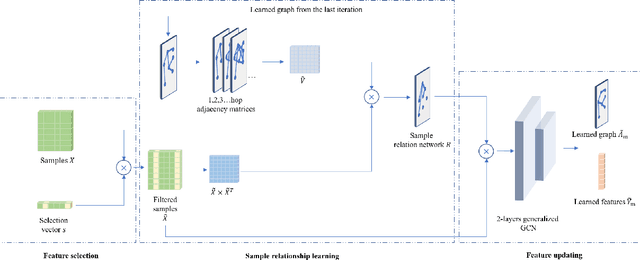

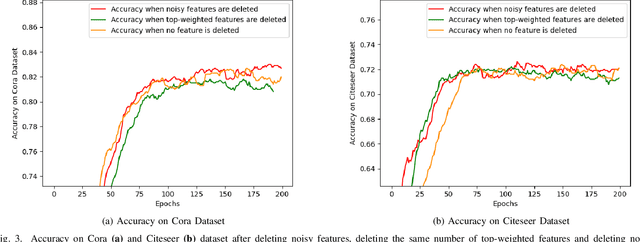

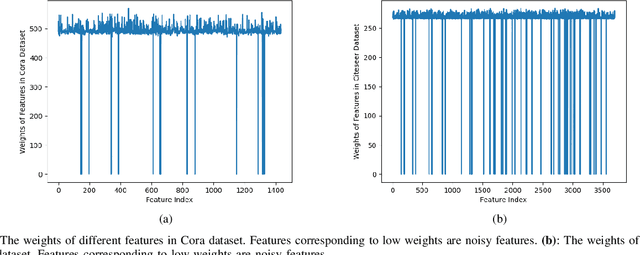

Abstract: Graph neural networks (GNNs) have achieved great success in many scenarios with graph-structured data. However, in many real applications, three issues arise when applying GNNs: graphs are unknown, nodes have noisy features, and graphs contain noisy connections. To solve these problems, we propose a new graph neural network named GL-GNN. Our model includes multiple sub-modules, each of which selects important data features and learns the corresponding key relation graph of data samples when graphs are unknown. GL-GNN further obtains the network of graphs by learning the network of sub-modules. The learned graphs are then fused using an aggregation method over the network of graphs. Our model solves the first issue by simultaneously learning multiple relation graphs of data samples as well as a relation network of graphs, and solves the second and third issues by selecting important data features as well as important data sample relations. We compare our method with 14 baseline methods on seven datasets when the graph is unknown and with 11 baseline methods on two datasets when the graph is known. The results show that our method achieves better accuracy than the baseline methods and is capable of selecting important features and graph edges from the dataset. Our code will be publicly available at https://github.com/Looomo/GL-GNN.
