Yingchun Guo

Domain generalization Person Re-identification on Attention-aware multi-operation strategery

Oct 19, 2022

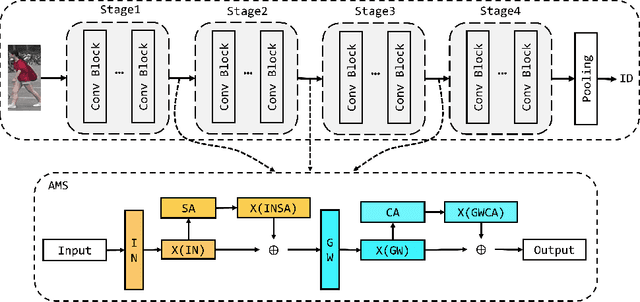

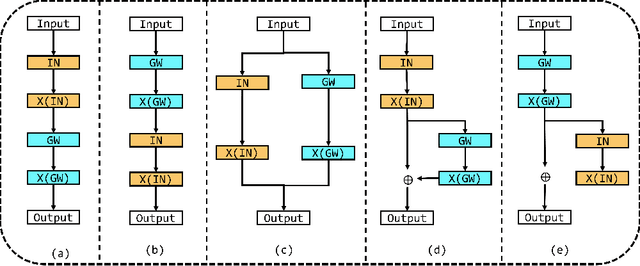

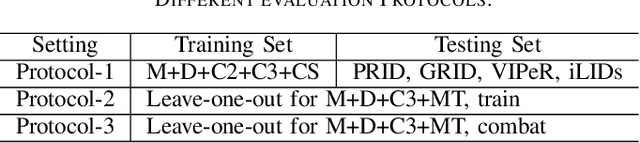

Abstract: Domain generalization person re-identification (DG Re-ID) aims to deploy a model trained on source domains directly to an unseen target domain while retaining good generalization, which is a challenging problem with practical value in real-world deployment. In existing DG Re-ID methods, invariant operations are effective at extracting domain-generalizable features, and Instance Normalization (IN) or Batch Normalization (BN) is used to alleviate the bias toward unseen domains. Because domain-specific information is used to capture the discriminability of each individual source domain, the generalization ability on unseen domains is unsatisfactory. To address this problem, an Attention-aware Multi-operation Strategery (AMS) for DG Re-ID is proposed to extract more generalized features. We investigate invariant operations and construct a multi-operation module based on IN and group whitening (GW) to extract domain-invariant feature representations. Furthermore, we analyze different domain-invariant characteristics, and apply spatial attention to the IN operation and channel attention to the GW operation to enhance the domain-invariant features. The proposed AMS module is plug-and-play and can be incorporated into existing network architectures. Extensive experimental results show that AMS effectively enhances the model's generalization ability to unseen domains and significantly improves recognition performance in DG Re-ID on three protocols with ten datasets.
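To make the role of IN in the abstract above concrete, here is a minimal sketch of plain instance normalization on a single channel. It is not the AMS module: the attention branches and group whitening are omitted, and the function name `instance_norm` and the toy arrays are hypothetical. It only illustrates why per-instance normalization tends to remove domain-specific contrast and brightness shifts.

```python
import math


def instance_norm(channel, eps=1e-5):
    """Normalize one channel of one image (a 2D list) to zero mean, unit variance."""
    values = [v for row in channel for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    scale = 1.0 / math.sqrt(var + eps)
    return [[(v - mean) * scale for v in row] for row in channel]


# Hypothetical toy data: the same content seen under two "domains" that
# differ only by contrast (x2) and brightness (+5).
source = [[0.0, 1.0], [2.0, 3.0]]
target = [[2.0 * v + 5.0 for v in row] for row in source]

norm_source = instance_norm(source)
norm_target = instance_norm(target)

# Largest element-wise difference between the two normalized channels.
max_gap = max(
    abs(a - b)
    for row_a, row_b in zip(norm_source, norm_target)
    for a, b in zip(row_a, row_b)
)
print(max_gap)  # very small: the affine "style" shift has been normalized away
```

The two normalized channels agree up to the small effect of `eps`, which is the sense in which IN is described as an invariant operation. The same step also discards some discriminative information, which is one common motivation for combining it with other operations.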

Information Prebuilt Recurrent Reconstruction Network for Video Super-Resolution

Dec 10, 2021

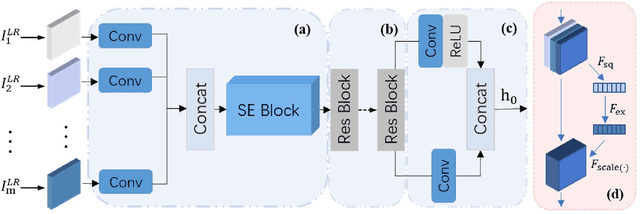

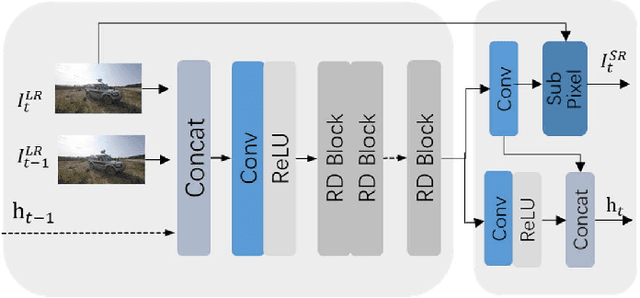

Abstract: Video super-resolution (VSR) methods based on recurrent convolutional networks have strong temporal modeling capability for video sequences. However, the input information received by different recurrent units in a unidirectional recurrent convolutional network is unbalanced. Early reconstructed frames receive less temporal information, resulting in blurry results or artifacts. Although a bidirectional recurrent convolutional network can alleviate this problem, it greatly increases reconstruction time and computational complexity. It is also unsuitable for many application scenarios, such as online super-resolution. To solve these problems, we propose an end-to-end information prebuilt recurrent reconstruction network (IPRRN), consisting of an information prebuilt network (IPNet) and a recurrent reconstruction network (RRNet). By integrating sufficient information from the front of the video to build the hidden state needed by the initial recurrent unit, which helps restore the earlier frames, the information prebuilt network balances the difference in input information between earlier and later frames without backward propagation. In addition, we present a compact recurrent reconstruction network that significantly improves recovery quality and time efficiency. Extensive experiments verify the effectiveness of the proposed network, and compared with existing state-of-the-art methods, our method achieves better quantitative and qualitative performance.
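The imbalance described in the abstract above can be pictured with a simple counting model. The sketch below is not IPRRN itself. It only counts how many distinct input frames can influence each output frame in a forward-only recurrence, assuming each unit reads one new frame plus the previous hidden state. The function name `frames_seen` and the parameter `prebuilt` are hypothetical.

```python
def frames_seen(num_frames, prebuilt=0):
    """Count the input frames that can influence each output frame.

    Models a forward-only recurrence whose initial hidden state summarizes
    the first `prebuilt` frames (0 means a blank initial state).
    """
    head = min(prebuilt, num_frames)
    return [max(t + 1, head) for t in range(num_frames)]


# Blank initial state: the first frame sees only itself.
plain = frames_seen(6)

# Initial hidden state built from the first 4 frames (an assumed window size).
with_prebuilt = frames_seen(6, prebuilt=4)

print(plain)          # [1, 2, 3, 4, 5, 6]
print(with_prebuilt)  # [4, 4, 4, 4, 5, 6]
```

Under this simplified model, a prebuilt initial state raises the information available to the earliest frames without a backward pass over the whole sequence, which is the trade-off the abstract contrasts with bidirectional recurrence.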
