Ying Siu Liang

iRoPro: An interactive Robot Programming Framework

Dec 08, 2021

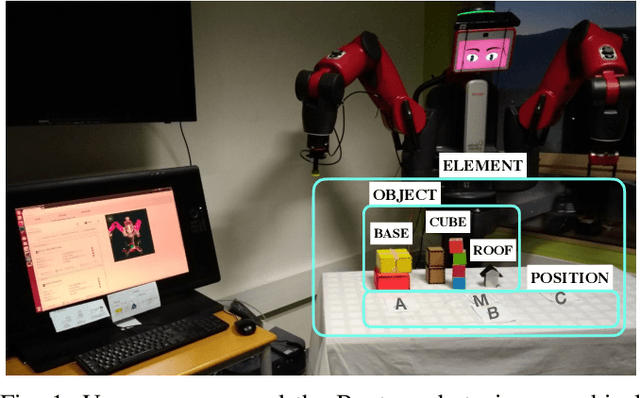

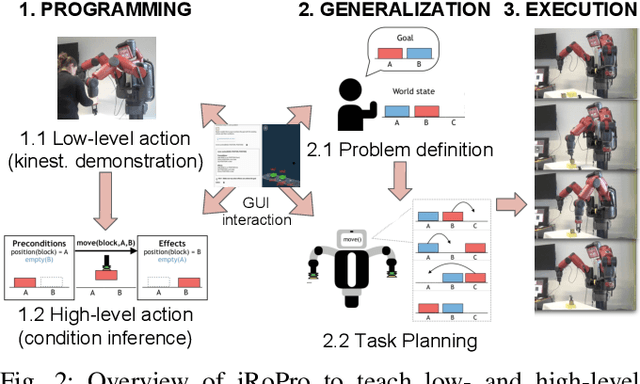

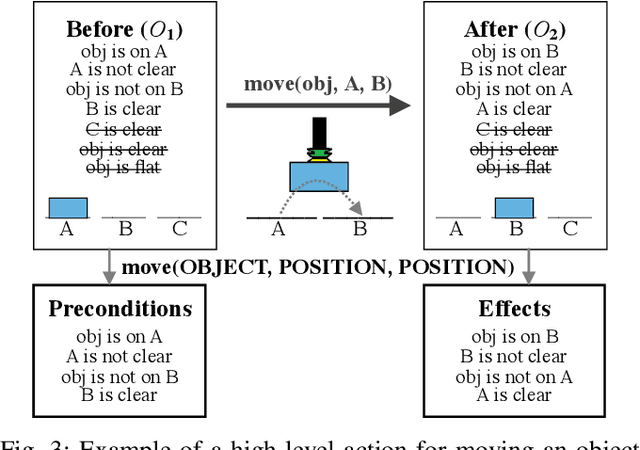

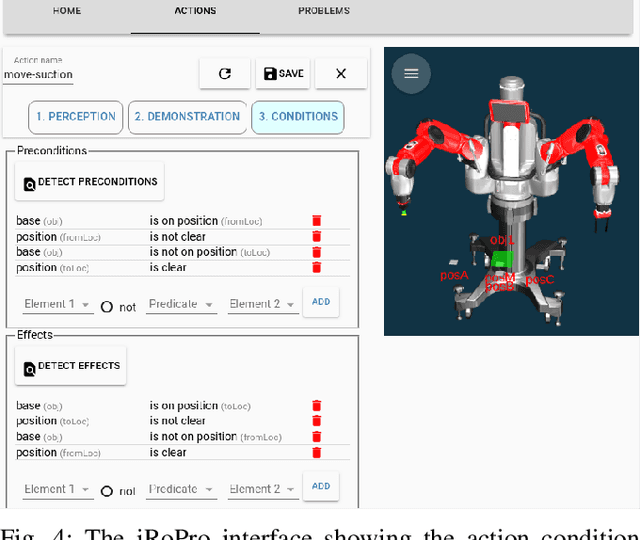

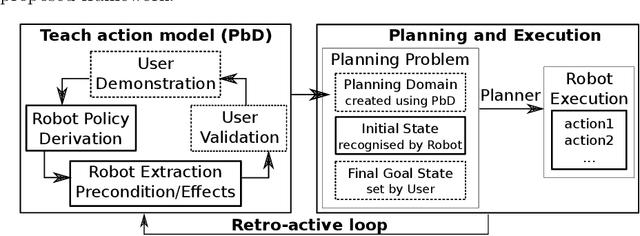

Abstract: The great diversity of end-user tasks ranging from manufacturing environments to personal homes makes pre-programming robots for general purpose applications extremely challenging. In fact, teaching robots new actions from scratch that can be reused for previously unseen tasks remains a difficult challenge and is generally left up to robotics experts. In this work, we present iRoPro, an interactive Robot Programming framework that allows end-users with little to no technical background to teach a robot new reusable actions. We combine Programming by Demonstration and Automated Planning techniques to allow the user to construct the robot's knowledge base by teaching new actions by kinesthetic demonstration. The actions are generalised and reused with a task planner to solve previously unseen problems defined by the user. We implement iRoPro as an end-to-end system on a Baxter Research Robot to simultaneously teach low- and high-level actions by demonstration that the user can customise via a Graphical User Interface to adapt to their specific use case. To evaluate the feasibility of our approach, we first conducted pre-design experiments to better understand the user's adoption of involved concepts and the proposed robot programming process. We compare results with post-design experiments, where we conducted a user study to validate the usability of our approach with real end-users. Overall, we showed that users with different programming levels and educational backgrounds can easily learn and use iRoPro and its robot programming process.

* arXiv admin note: substantial text overlap with arXiv:2103.14342

Improving Object Permanence using Agent Actions and Reasoning

Oct 01, 2021

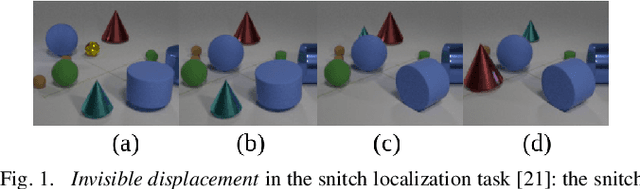

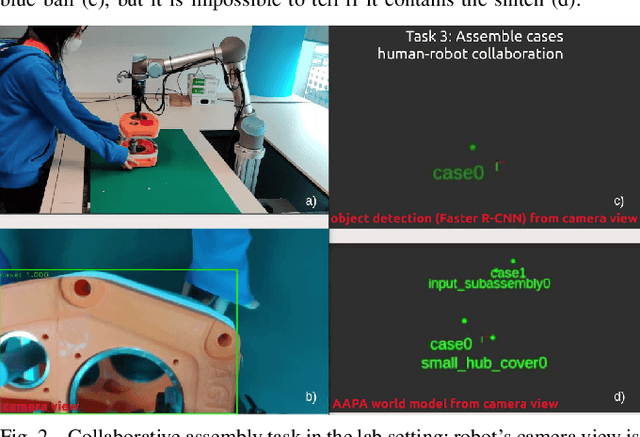

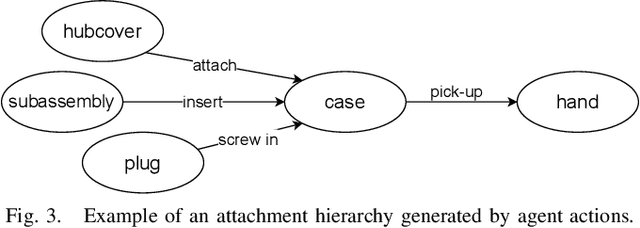

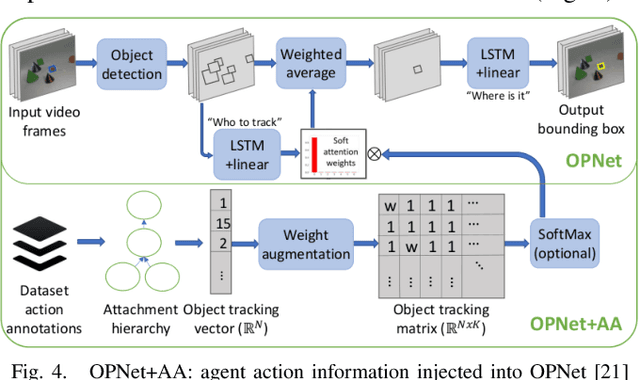

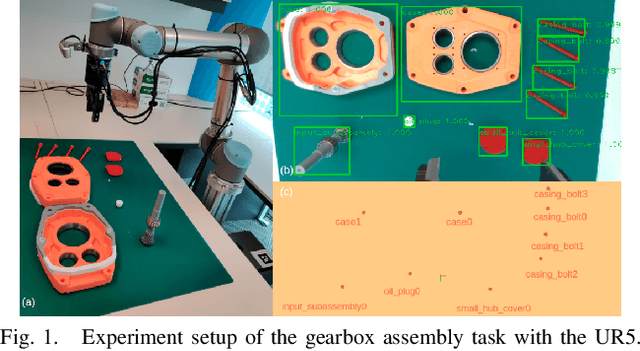

Abstract: Object permanence in psychology means knowing that objects still exist even if they are no longer visible. It is a crucial concept for robots to operate autonomously in uncontrolled environments. Existing approaches learn object permanence from low-level perception, but perform poorly on more complex scenarios, like when objects are contained and carried by others. Knowledge about manipulation actions performed on an object prior to its disappearance allows us to reason about its location, e.g., that the object has been placed in a carrier. In this paper we argue that object permanence can be improved when the robot uses knowledge about executed actions and describe an approach to infer hidden object states from agent actions. We show that considering agent actions not only improves rule-based reasoning models but also purely neural approaches, showing its general applicability. Then, we conduct quantitative experiments on a snitch localization task using a dataset of 1,371 synthesized videos, where we compare the performance of different object permanence models with and without action annotations. We demonstrate that models with action annotations can significantly increase performance of both neural and rule-based approaches. Finally, we evaluate the usability of our approach in real-world applications by conducting qualitative experiments with two Universal Robots (UR5 and UR16e) in both lab and industrial settings. The robots complete benchmark tasks for a gearbox assembly and demonstrate the object permanence capabilities with real sensor data in an industrial environment.

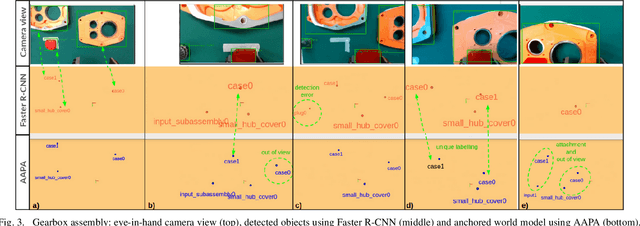

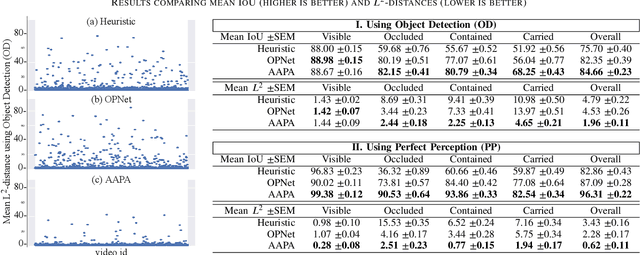

Maintaining a Reliable World Model using Action-aware Perceptual Anchoring

Jul 07, 2021

Abstract: Reliable perception is essential for robots that interact with the world. But sensors alone are often insufficient to provide this capability, and they are prone to errors due to various conditions in the environment. Furthermore, there is a need for robots to maintain a model of their surroundings even when objects go out of view and are no longer visible. This requires anchoring perceptual information onto symbols that represent the objects in the environment. In this paper, we present a model for action-aware perceptual anchoring that enables robots to track objects in a persistent manner. Our rule-based approach considers inductive biases to perform high-level reasoning over the results from low-level object detection, and it improves the robot's perceptual capability for complex tasks. We evaluate our model against existing baseline models for object permanence and show that it outperforms these on a snitch localisation task using a dataset of 1,371 videos. We also integrate our action-aware perceptual anchoring in the context of a cognitive architecture and demonstrate its benefits in a realistic gearbox assembly task on a Universal Robot.

* 7 pages, 3 figures

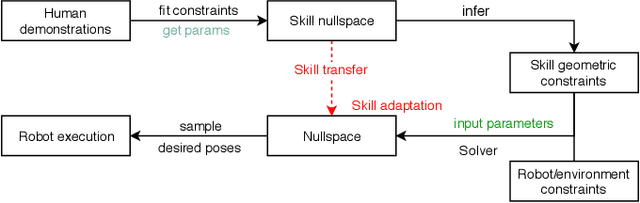

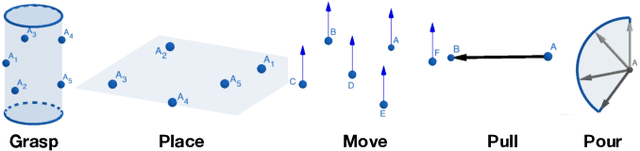

Inferring the Geometric Nullspace of Robot Skills from Human Demonstrations

Mar 30, 2021

Abstract: In this paper we present a framework to learn skills from human demonstrations in the form of geometric nullspaces, which can be executed using a robot. We collect data of human demonstrations, fit geometric nullspaces to them, and also infer their corresponding geometric constraint models. These geometric constraints provide a powerful mathematical model as well as an intuitive representation of the skill in terms of the involved objects. To execute the skill using a robot, we combine this geometric skill description with the robot's kinematics and other environmental constraints, from which poses can be sampled for the robot's execution. The result of our framework is a system that takes the human demonstrations as input, learns the underlying skill model, and executes the learnt skill with different robots in different dynamic environments. We evaluate our approach on a simulated industrial robot, and execute the final task on the iCub humanoid robot.

* 8 pages, 6 figures, ICRA 2020

End-User Programming of Low- and High-Level Actions for Robotic Task Planning

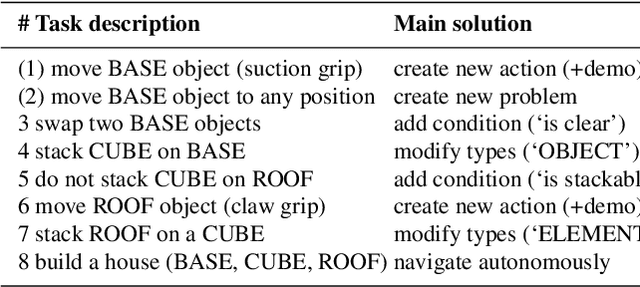

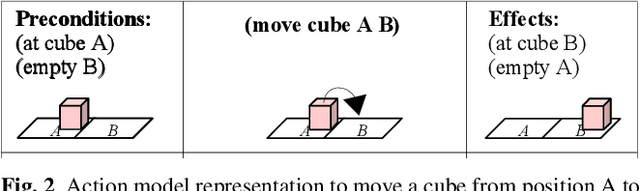

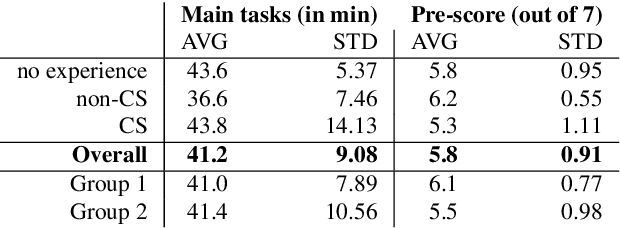

Mar 26, 2021

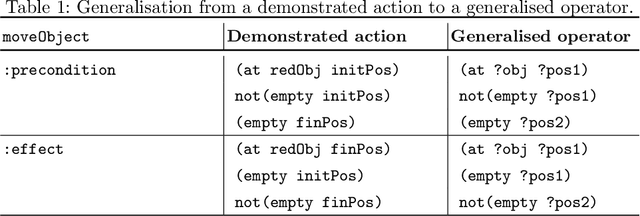

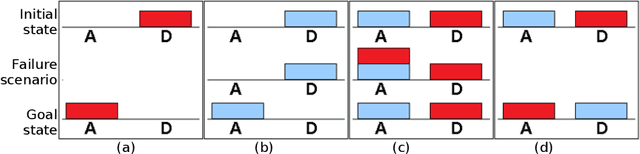

Abstract: Programming robots for general purpose applications is extremely challenging due to the great diversity of end-user tasks ranging from manufacturing environments to personal homes. Recent work has focused on enabling end-users to program robots using Programming by Demonstration. However, teaching robots new actions from scratch that can be reused for unseen tasks remains a difficult challenge and is generally left up to robotics experts. We propose iRoPro, an interactive Robot Programming framework that allows end-users to teach robots new actions from scratch and reuse them with a task planner. In this work we provide a system implementation on a two-armed Baxter robot that (i) allows simultaneous teaching of low- and high-level actions by demonstration, (ii) includes a user interface for action creation with condition inference and modification, and (iii) allows creating and solving previously unseen problems using a task planner for the robot to execute in real-time. We evaluate the generalisation power of the system on six benchmark tasks and show how taught actions can be easily reused for complex tasks. We further demonstrate its usability with a user study (N=21), where users completed eight tasks to teach the robot new actions that are reused with a task planner. The study demonstrates that users with any programming level and educational background can easily learn and use the system.

* 8 pages, 6 figures, 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN)

A Framework for Robot Programming in Cobotic Environments: First user experiments

Oct 19, 2018

Abstract: The increasing presence of robots in industries has not gone unnoticed. Large industrial players have incorporated them into their production lines, but smaller companies hesitate due to high initial costs and the lack of programming expertise. In this work we introduce a framework that combines two disciplines, Programming by Demonstration and Automated Planning, to allow users without any programming knowledge to program a robot. The user teaches the robot atomic actions together with their semantic meaning and represents them in terms of preconditions and effects. Using these atomic actions the robot can generate action sequences autonomously to reach any goal given by the user. We evaluated the usability of our framework in terms of user experiments with a Baxter Research Robot and showed that it is well-adapted to users without any programming experience.
