Yinan Hu

Constrained Density Estimation via Optimal Transport

Jan 11, 2026

Abstract: A novel framework for density estimation under expectation constraints is proposed. The framework minimizes the Wasserstein distance between the estimated density and a prior, subject to the constraints that the expected values of a set of functions equal or exceed given values. The framework is generalized to include regularization inequalities that mitigate artifacts in the target measure. An annealing-like algorithm is developed to address non-smooth constraints, and its effectiveness is demonstrated on both synthetic and proof-of-concept real-world examples in finance.
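As a rough sketch only, the constrained problem described in this abstract could be written as follows. All symbols are assumptions introduced here for illustration and do not come from the paper: $\rho$ is the estimated density, $\mu$ the prior, $W_p$ a Wasserstein distance of unspecified order $p$, $f_i$ the constraint functions, $c_i$ the given values, and $\mathcal{E}$, $\mathcal{I}$ the index sets of equality and inequality constraints.

$$
\min_{\rho} \; W_p(\rho, \mu)
\quad \text{subject to} \quad
\mathbb{E}_{x \sim \rho}\left[f_i(x)\right] = c_i \;\; (i \in \mathcal{E}),
\qquad
\mathbb{E}_{x \sim \rho}\left[f_j(x)\right] \ge c_j \;\; (j \in \mathcal{I}).
$$

The regularization inequalities and the annealing-like treatment of non-smooth constraints mentioned in the abstract are not captured by this sketch.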

A Real-time Non-contact Localization Method for Faulty Electric Energy Storage Components using Highly Sensitive Magnetometers

Aug 15, 2023

Abstract: With the wide application of electric energy storage component arrays, such as battery arrays, capacitor arrays, and inductor arrays, their potential safety risks have gradually drawn public attention. However, existing technologies cannot meet the need for non-contact, real-time diagnosis of faulty components inside these massive arrays. To solve this problem, this paper proposes a new method based on the beamforming spatial filtering algorithm to precisely locate faulty components within the arrays in real time. The method uses highly sensitive magnetometers to collect magnetic signals from energy storage component arrays, without damaging or even contacting any component. The experimental results demonstrate the potential of the proposed method for securing energy storage component arrays. Within an imaging area of 80 mm $\times$ 80 mm, a single faulty component among nine components can be localized with an accuracy of 0.72 mm for capacitor arrays and 1.60 mm for battery arrays.

Detection in Human-sensor Systems under Quantum Prospect Theory using Bayesian Persuasion Frameworks

Mar 21, 2023

Abstract: Human-sensor systems have a variety of applications in robotics, healthcare, and finance. Sensors observe the true state of nature and produce strategically designed signals to help humans arrive at more accurate decisions about the state of nature. We formulate the human-sensor system as a Bayesian persuasion framework that incorporates prospect theory, and construct a detection scheme in which the human seeks to determine the true state by observing the realization of quantum states from the sensor. We obtain the optimal signaling rule for the sensor and the optimal decision rule for the human receiver, and verify that the law of total probability is violated in this scenario. We also illustrate how the concept of rationality influences the human's detection performance and the sender's signaling rules.

PSP: Pre-trained Soft Prompts for Few-Shot Abstractive Summarization

Apr 09, 2022

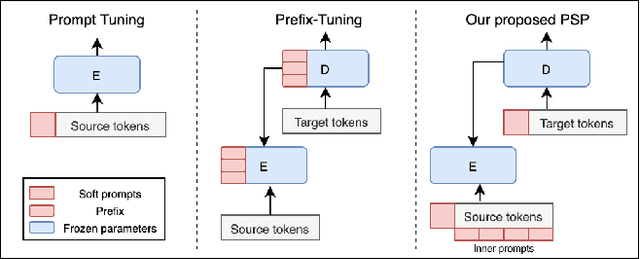

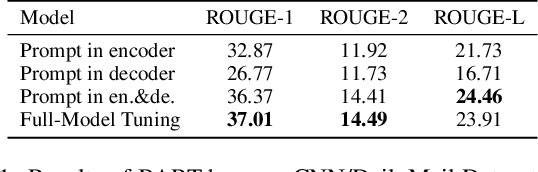

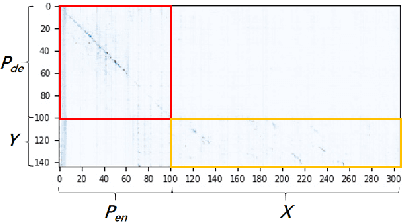

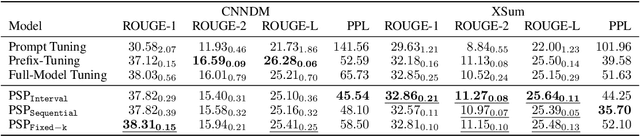

Abstract: Few-shot abstractive summarization has become a challenging task in natural language generation. To support it, we designed a novel soft prompts architecture coupled with a prompt pre-training plus fine-tuning paradigm that is effective and tunes only a very small number of parameters. The soft prompts include continuous input embeddings across an encoder and a decoder to fit the structure of the generation models. Importantly, a novel inner-prompt placed in the text is introduced to capture document-level information. The aim is to direct attention to understanding the document, which better prompts the model to generate document-related content. The first step in the summarization procedure is to conduct prompt pre-training with self-supervised pseudo-data. This teaches the model basic summarizing capabilities. The model is then fine-tuned with few-shot examples. Experimental results on the CNN/DailyMail and XSum datasets show that our method, with only 0.1% of the parameters, outperforms full-model tuning where all model parameters are tuned. It also surpasses Prompt Tuning by a large margin and delivers competitive results against Prefix-Tuning with 3% of the parameters.
