Yi-Ting Huang

Institute of Information Science, Academia Sinica, Taipei, Taiwan

Approach to Predicting News -- A Precise Multi-LSTM Network With BERT

Apr 26, 2022

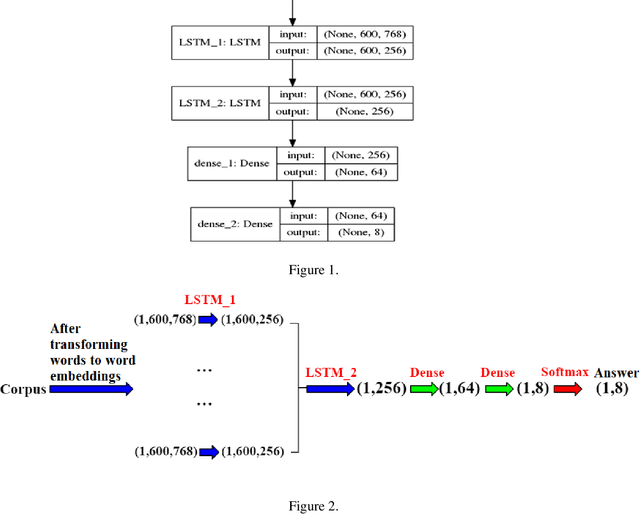

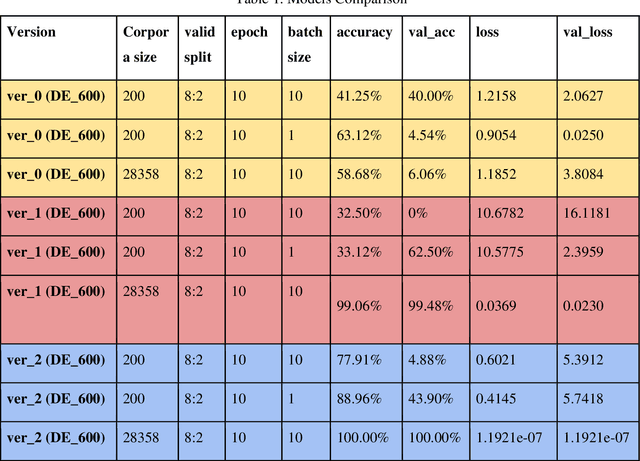

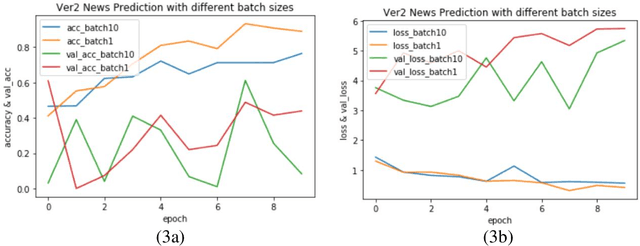

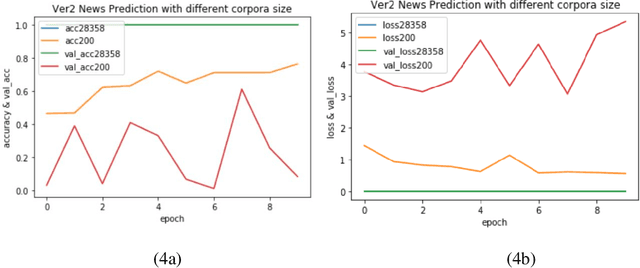

Abstract: Varieties of Democracy (V-Dem) is a new approach to conceptualizing and measuring democracy and politics. It covers 200 countries and is one of the largest databases in political science. According to the V-Dem Annual Democracy Report 2019, Taiwan is one of the two countries most targeted by false information disseminated by foreign governments. The report also shows that such "made-up news" has caused a great deal of confusion in Taiwanese society and has serious impacts on global stability. Although several applications help identify false information, we found that the preprocessing step of categorizing news is still done manually. However, manual work is error-prone and cannot be sustained for long periods. The growing demand for automation in recent decades shows that when machines can perform as well as or better than humans, they can reduce human workload and cut costs. Therefore, in this work, we build a predictive model to classify the category of news. Our corpus contains 28,358 news articles and a further 200 news articles scraped from the Liberty Times Net (LTN) online newspaper website, covering 8 categories: Technology, Entertainment, Fashion, Politics, Sports, International, Finance, and Health. First, we use Bidirectional Encoder Representations from Transformers (BERT) to produce word embeddings, transforming each Chinese character into a 1×768 vector. Then, a Long Short-Term Memory (LSTM) layer transforms the word embeddings into sentence embeddings, and a second LSTM layer transforms the sentence embeddings into document embeddings. Each document embedding is the input to the final predictive model, which contains two Dense layers and one Activation layer and maps the document embedding to a vector of 8 real numbers, one per news category. The category with the highest value is taken as the prediction, achieving up to 99% accuracy.

* Accepted by The 25th International Conference on Information Management & Practice (IMP) 2019
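The hierarchical pipeline in this abstract (character embeddings → sentence LSTM → document LSTM → two Dense layers → highest of 8 scores) can be sketched in NumPy as follows. This is a minimal forward-pass illustration under stated assumptions, not the trained model: the BERT output is replaced by random 768-dimensional vectors, all weights are random, the hidden sizes (64, 32, 16) are hypothetical, and the single Activation layer is assumed to be a softmax.

```python
import numpy as np

# The 8 LTN news categories listed in the abstract.
CATEGORIES = ["Technology", "Entertainment", "Fashion", "Politics",
              "Sports", "International", "Finance", "Health"]

rng = np.random.default_rng(0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class LSTM:
    """Minimal single-layer LSTM (forward pass only) that returns the last hidden state."""

    def __init__(self, input_dim, hidden_dim):
        self.hidden_dim = hidden_dim
        # One stacked weight matrix for the input, forget, output, and candidate gates.
        self.W = rng.normal(0.0, 0.1, (4 * hidden_dim, input_dim + hidden_dim))
        self.b = np.zeros(4 * hidden_dim)

    def __call__(self, seq):
        h = np.zeros(self.hidden_dim)
        c = np.zeros(self.hidden_dim)
        for x in seq:  # one step per element (character or sentence embedding)
            z = self.W @ np.concatenate([x, h]) + self.b
            i, f, o, g = np.split(z, 4)
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
            h = sigmoid(o) * np.tanh(c)
        return h


def dense(in_dim, out_dim):
    """Fully connected layer with random (untrained) weights."""
    W = rng.normal(0.0, 0.1, (out_dim, in_dim))
    b = np.zeros(out_dim)
    return lambda x: W @ x + b


def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()


sentence_lstm = LSTM(768, 64)   # character embeddings -> sentence embedding
document_lstm = LSTM(64, 32)    # sentence embeddings -> document embedding
dense1 = dense(32, 16)
dense2 = dense(16, len(CATEGORIES))


def classify(document):
    """document: list of sentences, each a (num_chars, 768) array of character embeddings."""
    sentence_embs = [sentence_lstm(sentence) for sentence in document]
    doc_emb = document_lstm(sentence_embs)
    scores = softmax(dense2(dense1(doc_emb)))  # 8 real numbers, one per category
    return CATEGORIES[int(np.argmax(scores))], scores


# Hypothetical stand-in for BERT output: 3 sentences of 12, 7, and 20 characters.
doc = [rng.normal(size=(n, 768)) for n in (12, 7, 20)]
label, scores = classify(doc)
print(label, scores.round(3))
```

Because the weights here are untrained, the predicted label is arbitrary; in practice the LSTM and Dense weights would be learned from the labeled LTN articles in a deep learning framework.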

Headline Diagnosis: Manipulation of Content Farm Headlines

Apr 25, 2022

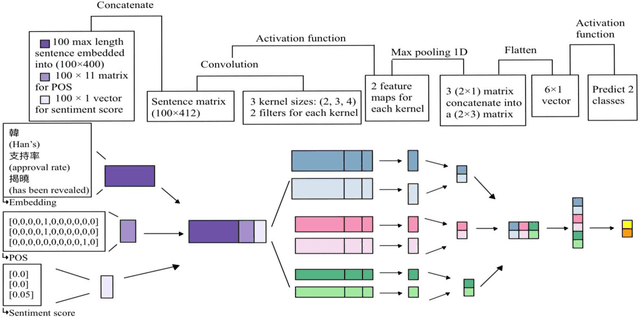

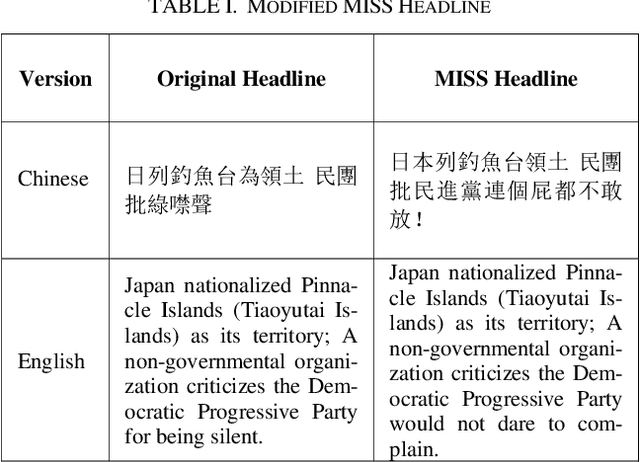

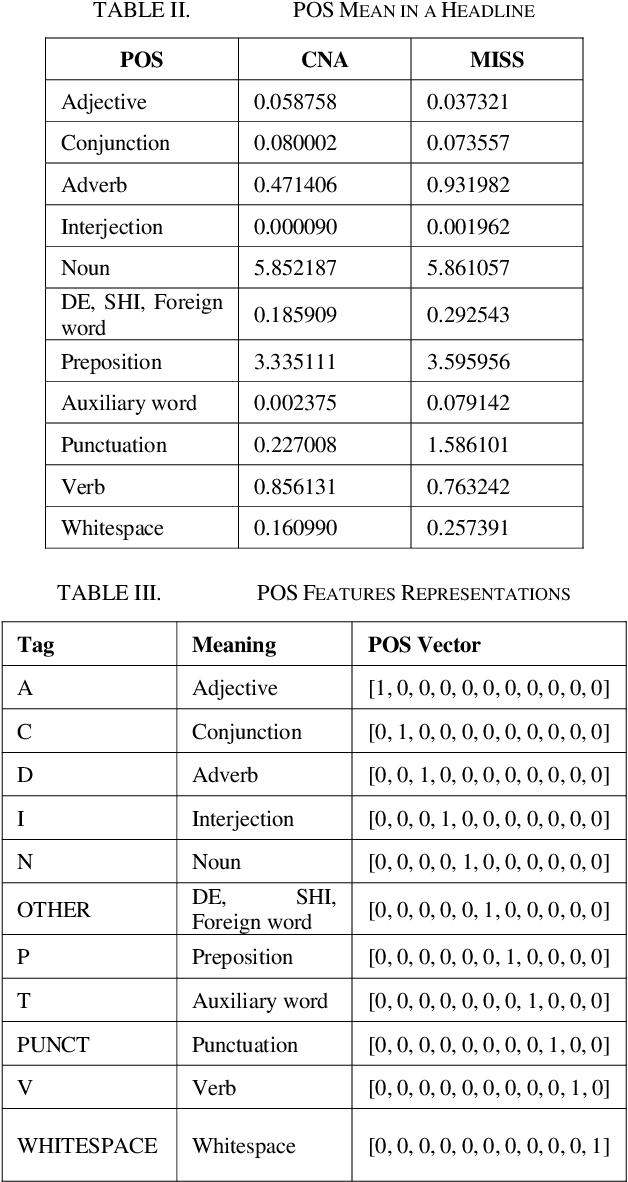

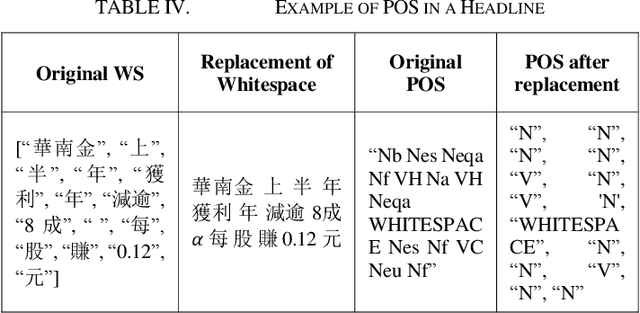

Abstract: As technology advances, news increasingly spreads through social media. To attract more readers and earn additional profit, some news agencies reproduce large volumes of news in a more appealing manner. Therefore, it is essential to accurately predict whether a news article comes from an official news agency. This work develops a headline classifier based on a Convolutional Neural Network (CNN) to determine the credibility of a news article. The model focuses primarily on key factors extracted from headlines, including word segmentation, part-of-speech tags, and sentiment features. By integrating these features into the proposed classification model, our evaluation achieves an accuracy of 93.99%.

* Accepted by The 26th Taiwan Academic Network Conference (TANET) 2020
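As a rough illustration of how segmented headline tokens, part-of-speech tags, and sentiment scores could be combined in a CNN, the NumPy sketch below builds one feature vector per token, applies a 1-D convolution with ReLU and max-over-time pooling, and outputs a probability with a sigmoid (here assumed to be the probability that the headline comes from a content farm). The reduced tag set, embedding size, filter count and width, and random weights are all hypothetical; the architecture in the paper may differ.

```python
import numpy as np

rng = np.random.default_rng(42)

POS_TAGS = ["N", "V", "ADJ", "ADV", "OTHER"]   # hypothetical reduced tag set
EMB_DIM = 16                                   # hypothetical word-embedding size
FEAT_DIM = EMB_DIM + len(POS_TAGS) + 1         # embedding + one-hot POS + sentiment score


def token_features(word_vec, pos, sentiment):
    """Concatenate a word embedding, a one-hot POS tag, and a sentiment score for one token."""
    pos_onehot = np.zeros(len(POS_TAGS))
    pos_onehot[POS_TAGS.index(pos)] = 1.0
    return np.concatenate([word_vec, pos_onehot, [sentiment]])


def conv1d_maxpool(x, filters, bias):
    """Valid 1-D convolution along the token axis, ReLU, then max-over-time pooling.

    x: (num_tokens, FEAT_DIM); filters: (num_filters, width, FEAT_DIM).
    """
    num_filters, width, _ = filters.shape
    n_windows = x.shape[0] - width + 1
    out = np.empty((n_windows, num_filters))
    for t in range(n_windows):
        window = x[t:t + width]  # (width, FEAT_DIM)
        out[t] = np.tensordot(filters, window, axes=([1, 2], [0, 1])) + bias
    return np.maximum(out, 0.0).max(axis=0)  # (num_filters,)


filters = rng.normal(0.0, 0.1, (8, 3, FEAT_DIM))  # 8 filters spanning 3 tokens (untrained)
bias = np.zeros(8)
w_out = rng.normal(0.0, 0.1, 8)


def content_farm_probability(tokens):
    """tokens: (word_vec, pos, sentiment) triples from a segmented headline."""
    x = np.stack([token_features(*tok) for tok in tokens])
    pooled = conv1d_maxpool(x, filters, bias)
    return float(1.0 / (1.0 + np.exp(-(w_out @ pooled))))


# Hypothetical 5-token headline with random word vectors.
headline = [(rng.normal(size=EMB_DIM), pos, s)
            for pos, s in [("N", 0.0), ("V", -0.8), ("ADJ", 0.9), ("N", 0.1), ("OTHER", 0.0)]]
print(round(content_farm_probability(headline), 3))
```

Concatenating the three feature types per token lets each convolution filter respond jointly to word identity, grammatical role, and sentiment within a short window of the headline.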

Learning Malware Representation based on Execution Sequences

Dec 16, 2019

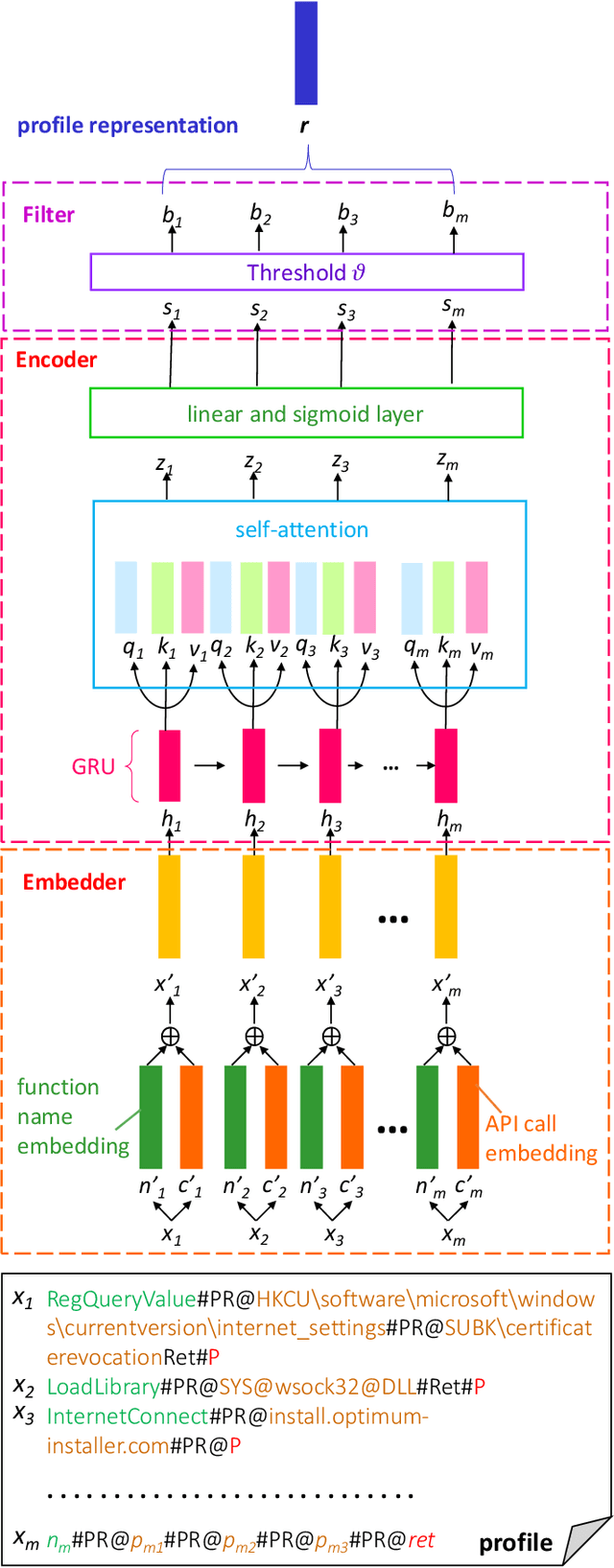

Abstract: Malware analysis has been extensively investigated as the number and types of malware have increased dramatically. However, most previous studies use end-to-end systems to detect whether a sample is malicious or to identify its malware family. In this paper, we propose a neural network framework composed of an embedder, an encoder, and a filter to learn malware representations from characteristic execution sequences for malware family classification. The embedder uses BERT and Sent2Vec, state-of-the-art embedding modules, to capture relations within a single API call and among consecutive API calls in an execution trace. The encoder comprises gated recurrent units (GRU), which preserve the ordinal position of API calls, and a self-attention mechanism, which compares intra-relations among different positions of API calls. The filter identifies representative API calls to build the malware representation. We conduct broad experiments to determine the influence of individual framework components. The results show that the proposed framework outperforms the baselines, and that using Sent2Vec to learn complete API call embeddings and GRU to explicitly preserve ordinal information captures more information and thus yields significant improvements. The proposed approach also effectively classifies new malicious execution traces on the basis of their similarity to previously collected families.
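To make the encoder and filter stages more concrete, the NumPy sketch below runs a minimal GRU over a sequence of API-call embeddings, applies scaled dot-product self-attention to the GRU states, and keeps the k positions that receive the most attention as the "representative" API calls. This is a sketch under assumptions: the embeddings are random stand-ins for BERT/Sent2Vec vectors, the weights are untrained, the attention has no learned projections, and the attention-based top-k filter is a hypothetical stand-in for the paper's filter.

```python
import numpy as np

rng = np.random.default_rng(7)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class GRU:
    """Minimal GRU forward pass (no biases) returning the hidden state at every step."""

    def __init__(self, input_dim, hidden_dim):
        self.hidden_dim = hidden_dim
        shape = (hidden_dim, input_dim + hidden_dim)
        self.Wz, self.Wr, self.Wh = (rng.normal(0.0, 0.1, shape) for _ in range(3))

    def __call__(self, seq):
        h = np.zeros(self.hidden_dim)
        states = []
        for x in seq:
            xh = np.concatenate([x, h])
            z = sigmoid(self.Wz @ xh)  # update gate
            r = sigmoid(self.Wr @ xh)  # reset gate
            h_tilde = np.tanh(self.Wh @ np.concatenate([x, r * h]))
            h = (1.0 - z) * h + z * h_tilde
            states.append(h)
        return np.stack(states)  # (seq_len, hidden_dim): one state per API call, in order


def self_attention(H):
    """Scaled dot-product self-attention with queries = keys = values = H."""
    scores = H @ H.T / np.sqrt(H.shape[1])
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    A = np.exp(scores)
    A /= A.sum(axis=1, keepdims=True)            # each row sums to 1
    return A @ H, A


def filter_top_k(context, A, k):
    """Hypothetical filter: keep the k positions that receive the most total attention."""
    received = A.sum(axis=0)
    keep = np.sort(np.argsort(received)[-k:])
    return context[keep].mean(axis=0), keep


# Random stand-ins for BERT/Sent2Vec embeddings of 10 API calls in one trace.
api_embeddings = rng.normal(size=(10, 32))
H = GRU(32, 16)(api_embeddings)
context, A = self_attention(H)
representation, kept = filter_top_k(context, A, k=4)
print(kept, representation.shape)
```

The GRU states carry positional order, while the attention matrix `A` relates every API call to every other; the filter then reduces the trace to a fixed-size vector that could be compared across malware families.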

From Receptive to Productive: Learning to Use Confusing Words through Automatically Selected Example Sentences

Jun 06, 2019

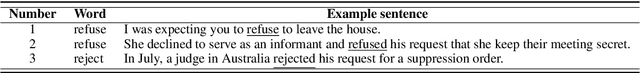

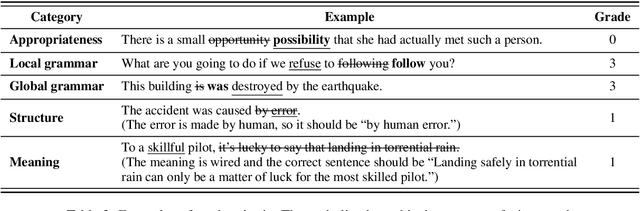

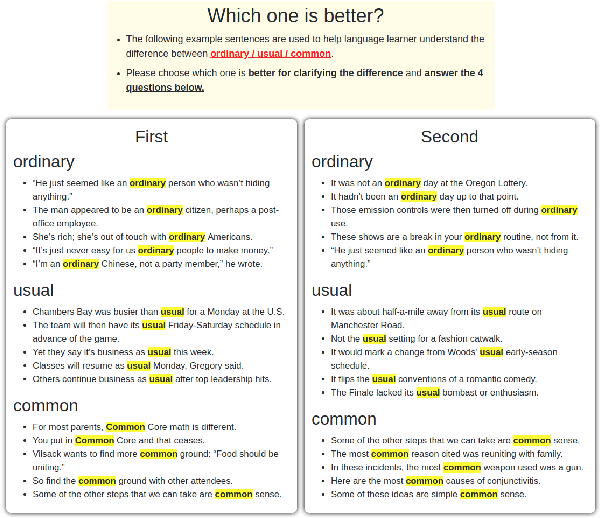

Abstract: Knowing how to use words appropriately is key to improving language proficiency. Previous studies typically discuss how students learn receptively, selecting the correct candidate from a set of confusing words in a fill-in-the-blank task where a specific context is given. In this paper, we go one step further, helping students learn to use confusing words appropriately in a productive task: sentence translation. To achieve this goal, we leverage the GiveMeExample system, which suggests example sentences for each confusing word. In this study, students learn to differentiate the confusing words by reading the example sentences and then choose the appropriate word(s) to complete the sentence translation task. Results show that students made substantial progress in sentence structure. In addition, highly proficient students were better able to learn confusing words. Given the influence of learners' first language, we further propose an effective approach to improve the quality of the suggested sentences.

Bringing personalized learning into computer-aided question generation

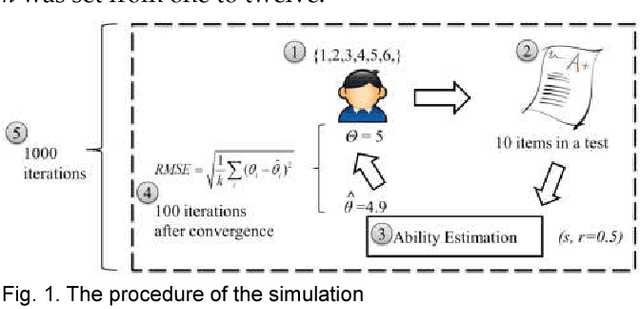

Aug 29, 2018

Abstract: This paper proposes a novel statistical method of ability estimation based on acquisition distributions for personalized computer-aided question generation. The method captures learning outcomes over time and provides a flexible measurement based on acquisition distributions instead of precalibration. Compared with previous studies, the proposed method is robust, especially when a student's ability is unknown. Results from empirical data show that the estimated abilities match learners' actual abilities, and the experimental group showed significant improvement from pretest to post-test. These results suggest that the method can serve as the ability estimator for a personalized computer-aided testing environment.

Development and Evaluation of a Personalized Computer-aided Question Generation for English Learners to Improve Proficiency and Correct Mistakes

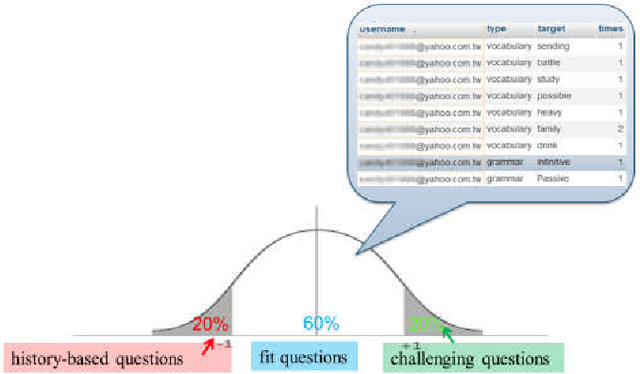

Aug 29, 2018

Abstract: In the last several years, the field of computer-assisted language learning has increasingly focused on computer-aided question generation. However, this approach often provides test takers with an exhaustive number of questions that are not designed for any specific testing purpose. In this work, we present a personalized computer-aided question generation system that generates multiple-choice questions of various difficulty levels and types, including vocabulary, grammar, and reading comprehension. To address test takers' weaknesses, it selects questions based on an estimated proficiency level and on the unclear concepts behind incorrect responses. The results show that students using the personalized automatic quiz generation corrected their mistakes more frequently than those using only computer-aided question generation. Moreover, students showed the most progress between the pretest and post-test and correctly answered more difficult questions. Finally, we investigated the personalization strategy and found that a student could make significant progress if the proposed system offered vocabulary questions at his or her proficiency level, and grammar and reading comprehension questions at a level lower than his or her proficiency level.
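The personalization finding in this abstract can be expressed as a simple selection rule. In the Python sketch below, vocabulary questions are drawn at the student's estimated proficiency level and grammar and reading questions one level lower, with questions on previously missed concepts ranked first. The `Question` fields, the integer level scale, the one-level offset, and the ranking are hypothetical choices for illustration, not the system's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Question:
    qid: str
    qtype: str    # "vocabulary", "grammar", or "reading"
    level: int    # difficulty on the same (hypothetical) integer scale as proficiency
    concept: str  # concept the question tests


def target_level(qtype, proficiency):
    """Vocabulary at the student's level; grammar and reading one level lower (assumed offset)."""
    if qtype == "vocabulary":
        return proficiency
    return max(1, proficiency - 1)


def select_questions(bank, proficiency, missed_concepts, n):
    """Pick up to n level-appropriate questions, ranking previously missed concepts first."""
    candidates = [q for q in bank if q.level == target_level(q.qtype, proficiency)]
    # False sorts before True, so questions on missed concepts come first;
    # sort() is stable, so bank order is kept within each group.
    candidates.sort(key=lambda q: q.concept not in missed_concepts)
    return candidates[:n]


bank = [
    Question("v1", "vocabulary", 3, "collocation"),
    Question("v2", "vocabulary", 2, "collocation"),
    Question("g1", "grammar", 2, "present perfect"),
    Question("g2", "grammar", 2, "relative clause"),
    Question("r1", "reading", 3, "inference"),
    Question("r2", "reading", 2, "main idea"),
]
picked = select_questions(bank, proficiency=3, missed_concepts={"relative clause"}, n=3)
print([q.qid for q in picked])  # ['g2', 'v1', 'g1']
```

For a student at level 3 who previously missed a relative-clause question, the level-2 grammar question on that concept is served first, followed by the level-3 vocabulary question.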

Characterizing the Influence of Features on Reading Difficulty Estimation for Non-native Readers

Aug 29, 2018

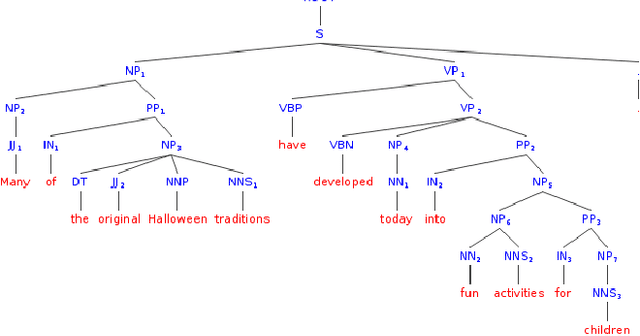

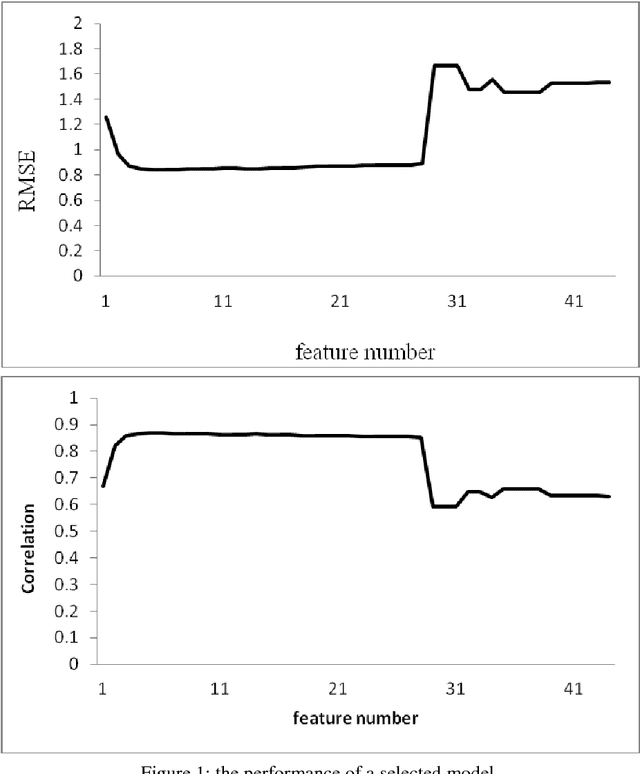

Abstract: In recent years, the number of people studying English as a second language (ESL) has surpassed the number of native speakers. Recent work has demonstrated the success of providing personalized content based on reading difficulty, in applications such as information retrieval and summarization. However, almost all prior studies of reading difficulty are designed for native speakers rather than non-native readers. In this study, we investigate various features for ESL readers, using linear regression to estimate the reading level of English-language sources. This estimation is based not only on the complexity of lexical and syntactic features, but also on several novel concepts, including the age of word and grammar acquisition from several sources, word senses from WordNet, and the implicit relations between sentences. Using the Bayesian Information Criterion (BIC) to select the optimal model, we find that the combination of the number of words, the age of word acquisition, and the height of the parse tree generates better results than alternative competing models. Thus, our results show that the proposed second-language reading difficulty estimation outperforms other first-language reading difficulty estimations.
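The BIC-based model selection described here can be illustrated with ordinary least squares. For a Gaussian linear model with n observations, k estimated coefficients, and residual sum of squares RSS, BIC = n ln(RSS/n) + k ln(n) up to an additive constant, and the model with the lowest BIC is preferred. The sketch below fits every subset of candidate features and picks the minimum; the data are synthetic and the feature names (including an irrelevant noise feature) are placeholders, not the paper's corpus or results.

```python
import itertools

import numpy as np


def fit_ols(X, y):
    """Ordinary least squares with an intercept; returns (coefficients, residual sum of squares)."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    rss = float(np.sum((y - A @ coef) ** 2))
    return coef, rss


def bic(rss, n, k):
    """BIC of a Gaussian linear model (up to an additive constant); k counts the intercept."""
    return n * np.log(rss / n) + k * np.log(n)


def select_by_bic(features, y):
    """Fit every non-empty feature subset; return (best_bic, best_subset, all_results)."""
    n = len(y)
    names = list(features)
    results = []
    for r in range(1, len(names) + 1):
        for subset in itertools.combinations(names, r):
            X = np.column_stack([features[name] for name in subset])
            _, rss = fit_ols(X, y)
            results.append((bic(rss, n, len(subset) + 1), subset))
    best_bic, best_subset = min(results)
    return best_bic, best_subset, results


# Synthetic data (placeholders, not the paper's corpus).
rng = np.random.default_rng(1)
n = 200
features = {
    "num_words": rng.uniform(5, 40, n),
    "age_of_acquisition": rng.uniform(3, 12, n),
    "parse_tree_height": rng.integers(3, 15, n).astype(float),
    "random_noise": rng.normal(size=n),
}
y = (0.05 * features["num_words"] + 0.3 * features["age_of_acquisition"]
     + 0.2 * features["parse_tree_height"] + rng.normal(0, 0.5, n))

best_bic, best_subset, results = select_by_bic(features, y)
print(best_subset, round(float(best_bic), 2))
```

Adding a feature never increases the RSS of an OLS fit, so the k ln(n) term is what stops BIC from always favoring the largest model.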
