Yi Bao

GWRBoost: A geographically weighted gradient boosting method for explainable quantification of spatially-varying relationships

Dec 15, 2022

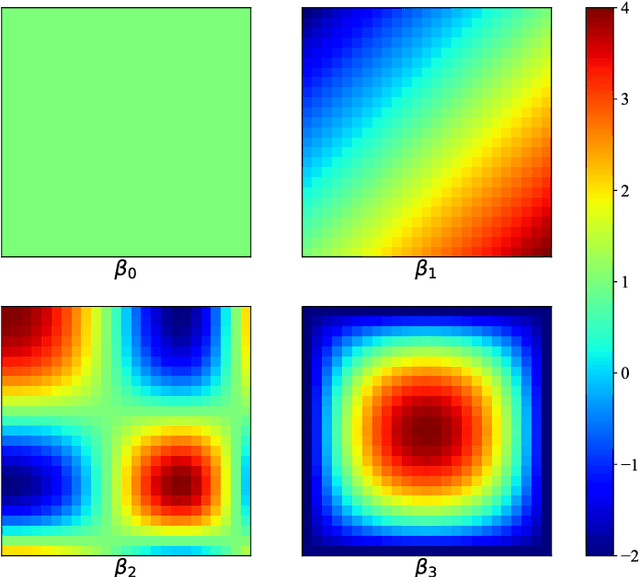

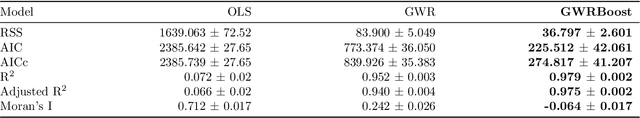

Abstract: Geographically weighted regression (GWR) is an essential tool for estimating how relationships between dependent and independent variables vary across space. However, the classical linear regressions that compose a GWR model are prone to underfitting, especially on large and complex nonlinear data, which leads to inferior comparative performance. More advanced models, such as the decision tree and the support vector machine, can learn features from complex data more effectively, but they cannot provide explainable quantification of the spatial variation of localized relationships. To address these issues, we propose a geographically weighted gradient boosting regression model, GWRBoost, that applies a localized additive model and gradient boosting optimization to alleviate underfitting while retaining explainable quantification of spatially-varying relationships between geographically located variables. Furthermore, we formulate the computation method of the Akaike information score for the proposed model so that it can be compared with the classic GWR algorithm. Simulation experiments and an empirical case study demonstrate the performance and practical value of GWRBoost. The results show that our proposed model can reduce the RMSE of parameter estimation by 18.3% and the AICc, which measures goodness of fit, by 67.3%.
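For readers unfamiliar with the classic GWR baseline that the abstract refers to, the following is a minimal sketch of a single local fit: a weighted least-squares regression in which observations closer to the target location receive larger weights. It is not the GWRBoost method itself. The Gaussian kernel, the fixed bandwidth, the single covariate, and all function names are assumptions made for illustration.

```python
import math

def gaussian_weight(dist, bandwidth):
    """Gaussian distance-decay kernel (an assumed choice of kernel)."""
    return math.exp(-0.5 * (dist / bandwidth) ** 2)

def local_fit(points, target, bandwidth):
    """Weighted least squares of y on x, centred on one target location.

    points: iterable of (u, v, x, y), where (u, v) are coordinates.
    Returns (intercept, slope) estimated for the target (u0, v0).
    """
    u0, v0 = target
    sw = swx = swy = swxx = swxy = 0.0
    for u, v, x, y in points:
        w = gaussian_weight(math.hypot(u - u0, v - v0), bandwidth)
        sw += w
        swx += w * x
        swy += w * y
        swxx += w * x * x
        swxy += w * x * y
    x_bar, y_bar = swx / sw, swy / sw
    var_x = swxx / sw - x_bar ** 2          # weighted variance of x
    cov_xy = swxy / sw - x_bar * y_bar      # weighted covariance of x and y
    slope = cov_xy / var_x
    return y_bar - slope * x_bar, slope

# Synthetic 10 x 10 grid whose true slope grows from west to east: 1 + 0.5 * u.
grid = [(u, v, 1 + (3 * v) % 5, (1 + 0.5 * u) * (1 + (3 * v) % 5))
        for u in range(10) for v in range(10)]

_, slope_west = local_fit(grid, target=(0, 4.5), bandwidth=1.5)
_, slope_east = local_fit(grid, target=(9, 4.5), bandwidth=1.5)
print(slope_west, slope_east)  # the eastern estimate is the larger one
```

Repeating the local fit at every location yields a coefficient surface, which is the spatially-varying relationship that GWR quantifies. Each local model is still linear, which is the source of the underfitting the abstract describes.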

DouFu: A Double Fusion Joint Learning Method For Driving Trajectory Representation

May 05, 2022

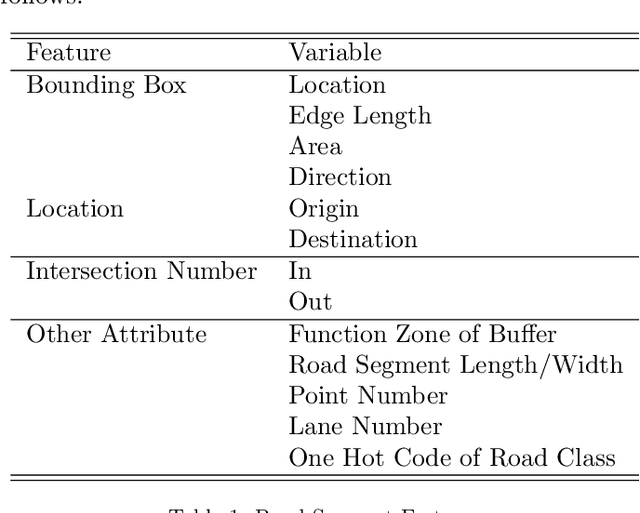

Abstract: Driving trajectory representation learning is of great significance for various location-based services, such as driving pattern mining and route recommendation. However, previous representation generation approaches rarely address three challenges: 1) how to represent the intricate semantic intentions of mobility inexpensively; 2) the complex and weak spatial-temporal dependencies caused by the sparsity and heterogeneity of trajectory data; 3) route selection preferences and their correlation with driving behavior. In this paper, we propose a novel multimodal fusion model, DouFu, for trajectory representation joint learning, which applies multimodal learning and an attention fusion module to capture the internal characteristics of trajectories. We first design movement, route, and global features generated from the trajectory data and urban functional zones, and then encode each of them with an attention encoder or a feed-forward network. The attention fusion module incorporates route features with movement features to create a better spatial-temporal embedding. With the global semantic feature, DouFu produces a comprehensive embedding for each trajectory. We evaluate representations generated by our method and other baseline models on classification and clustering tasks. Empirical results show that DouFu outperforms other models by more than 10% with most of the learning algorithms, such as linear regression and the support vector machine.
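The abstract does not specify how the attention fusion module is built. As a generic illustration of the idea of letting movement features attend over route features, here is a minimal sketch of scaled dot-product cross-attention with a residual sum. The residual combination, the absence of learned projections, and every name below are assumptions, not the DouFu architecture.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention; each argument is a list of vectors."""
    scale = math.sqrt(len(keys[0]))
    attended = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        attended.append([sum(w * v[j] for w, v in zip(weights, values))
                         for j in range(len(values[0]))])
    return attended

def fuse(movement, route):
    """Hypothetical fusion: movement steps query the route segments,
    and the attended route context is added back to each movement vector."""
    context = cross_attention(movement, route, route)
    return [[m + c for m, c in zip(mv, cv)]
            for mv, cv in zip(movement, context)]

# Toy inputs: 3 movement steps and 2 route segments, all of dimension 2.
movement = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
route = [[1.0, 0.0], [0.0, 1.0]]
fused = fuse(movement, route)
print(len(fused), len(fused[0]))  # one fused vector per movement step
```

A trained model would normally apply learned query, key, and value projections before the dot products; they are omitted here to keep the sketch short.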

Identification and classification of exfoliated graphene flakes from microscopy images using a hierarchical deep convolutional neural network

Mar 29, 2022

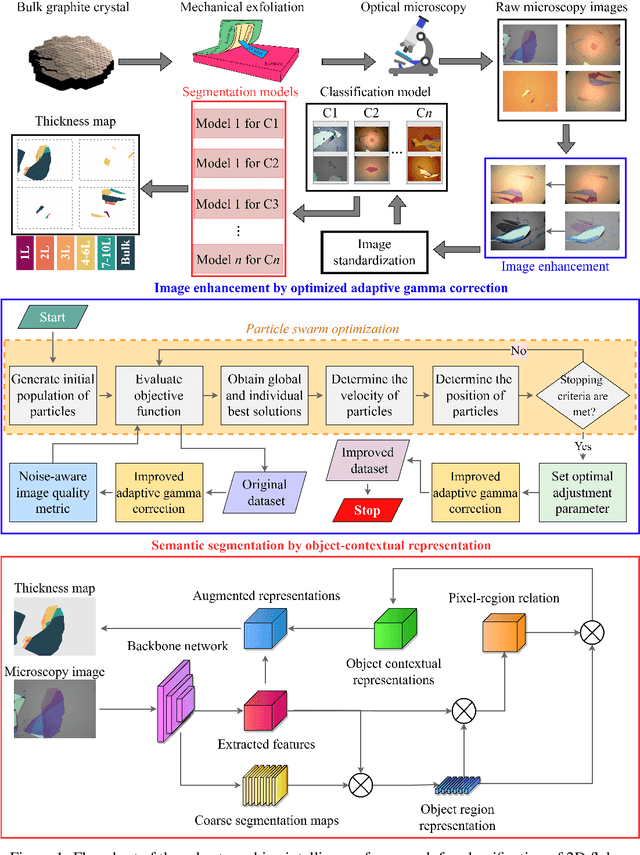

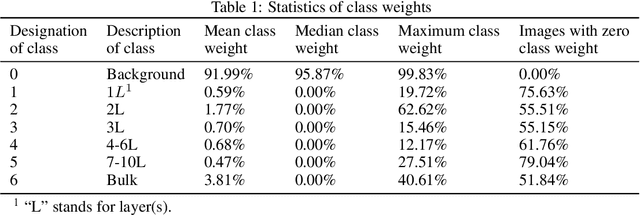

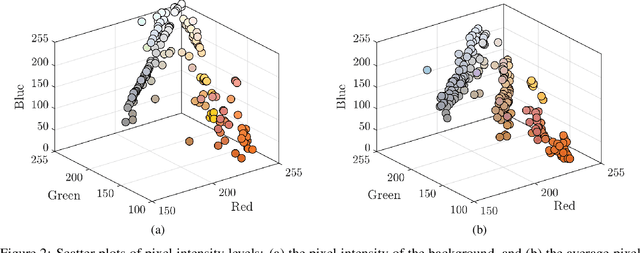

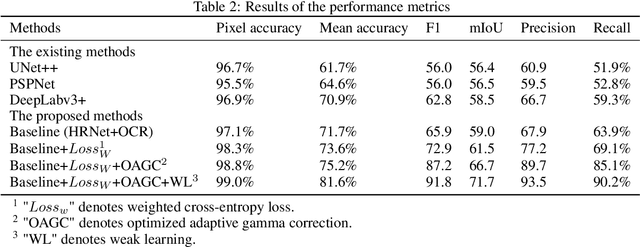

Abstract: Identifying mechanically exfoliated graphene flakes and classifying their thickness is important in the nanomanufacturing of next-generation materials and devices that overcome the bottleneck of Moore's Law. Currently, identification and classification of exfoliated graphene flakes are performed by humans inspecting optical microscope images. The existing state-of-the-art automatic identification by machine learning cannot accommodate images with different backgrounds, even though different backgrounds are unavoidable in experiments. This paper presents a deep learning method to automatically identify and classify the thickness of exfoliated graphene flakes on Si/SiO2 substrates from optical microscope images with various settings and background colors. The presented method uses a hierarchical deep convolutional neural network that is capable of learning new images while preserving the knowledge from previous images. The deep learning model was trained and used to classify exfoliated graphene flakes into monolayer (1L), bi-layer (2L), tri-layer (3L), four-to-six-layer (4-6L), seven-to-ten-layer (7-10L), and bulk categories. Compared with existing machine learning methods, the presented method offers high accuracy and efficiency as well as robustness to the backgrounds and resolutions of images. The results indicate that our deep learning model reaches an accuracy as high as 99% in identifying and classifying exfoliated graphene flakes. This research will shed light on scaled-up manufacturing and characterization of graphene for advanced materials and devices.