Yermek Kapushev

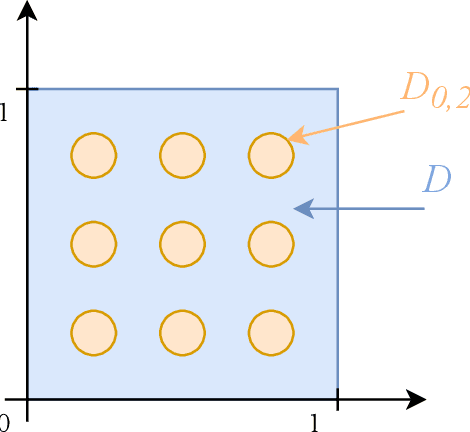

Denoising Score Matching with Random Fourier Features

Jan 13, 2021

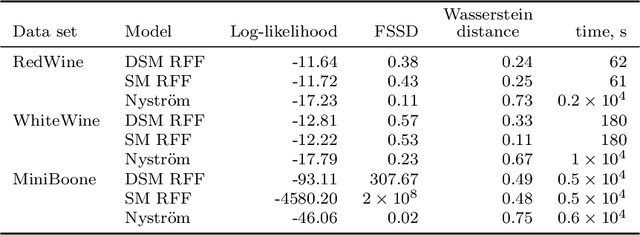

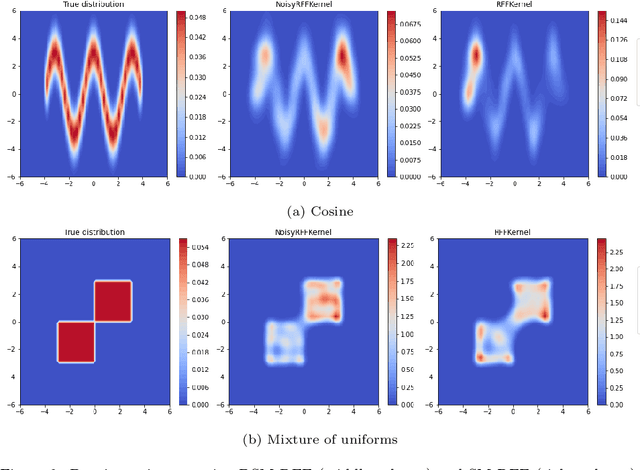

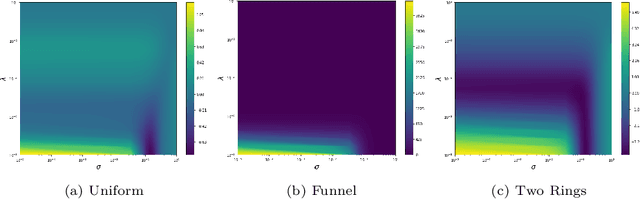

Abstract: Density estimation is one of the core problems in statistics. Despite this, existing techniques like maximum likelihood estimation are computationally inefficient due to the intractability of the normalizing constant. For this reason, interest in score matching has increased, as it is independent of the normalizing constant. However, such an estimator is consistent only for distributions with full space support. One approach to making it consistent is to add noise to the input data, which is called Denoising Score Matching. In this work we derive an analytical expression for Denoising Score Matching using the Kernel Exponential Family as the model distribution. The use of the kernel exponential family is motivated by the richness of this class of densities. To tackle the computational complexity, we use a Random Fourier Features based approximation of the kernel function. The analytical expression allows us to drop additional regularization terms based on higher-order derivatives, as they are already implicitly included. Moreover, the obtained expression explicitly depends on the noise variance, so the validation loss can be used directly to tune the noise level. Along with benchmark experiments, the model was tested on various synthetic distributions to study its behaviour in different cases. The empirical study shows quality comparable to competing approaches, while the proposed method is computationally faster, which enables scaling up to complex high-dimensional data.
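For orientation, the standard denoising score matching objective with Gaussian noise can be written as below. This is the textbook form, not the paper's closed-form expression; the symbols $\theta$, $\phi$, $q_0$, $\sigma$ and $\varepsilon$ are notation assumed here for illustration, with $\phi$ standing for a random Fourier feature map and $q_0$ for a base density.

```latex
% Noisy sample: \tilde{x} = x + \sigma \varepsilon, with \varepsilon \sim \mathcal{N}(0, I)
J(\theta; \sigma)
  = \mathbb{E}_{x \sim p_{\mathrm{data}}}\,
    \mathbb{E}_{\varepsilon \sim \mathcal{N}(0, I)}
    \left[ \tfrac{1}{2}
      \left\| \nabla_{\tilde{x}} \log p_\theta(\tilde{x})
              + \frac{\tilde{x} - x}{\sigma^{2}} \right\|^{2}
    \right],
\qquad \tilde{x} = x + \sigma \varepsilon .

% Kernel exponential family with a feature-map approximation (assumed notation):
\log p_\theta(x) = \theta^{\top} \phi(x) + \log q_0(x) - \log Z(\theta),
\qquad
\nabla_x \log p_\theta(x) = \left( \frac{\partial \phi(x)}{\partial x} \right)^{\!\top} \theta
                            + \nabla_x \log q_0(x) .
```

Because the score is linear in $\theta$ and does not involve $Z(\theta)$, the objective is quadratic in $\theta$, which is consistent with the abstract's claim that an analytical expression depending explicitly on the noise variance can be obtained.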

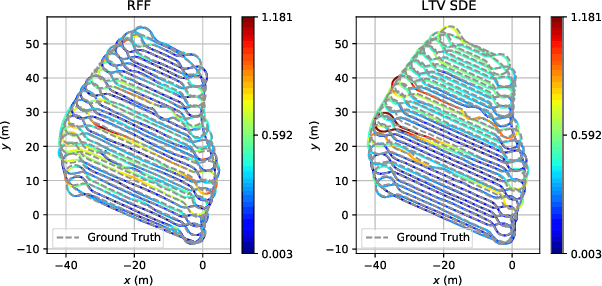

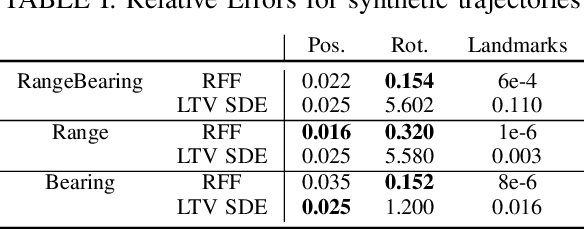

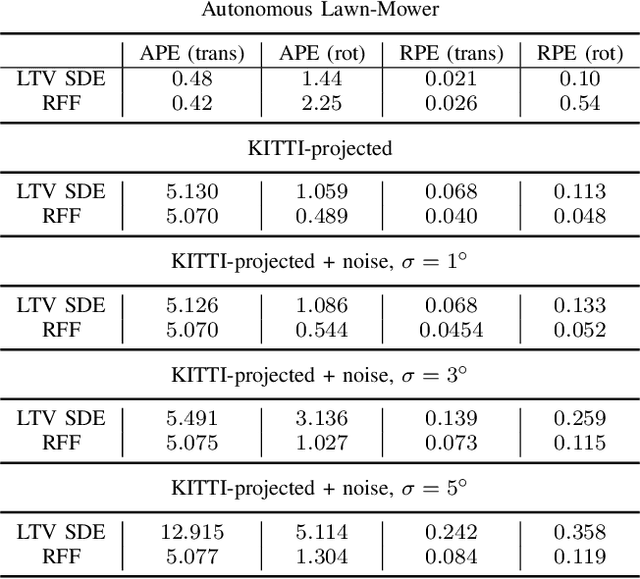

Random Fourier Features based SLAM

Nov 01, 2020

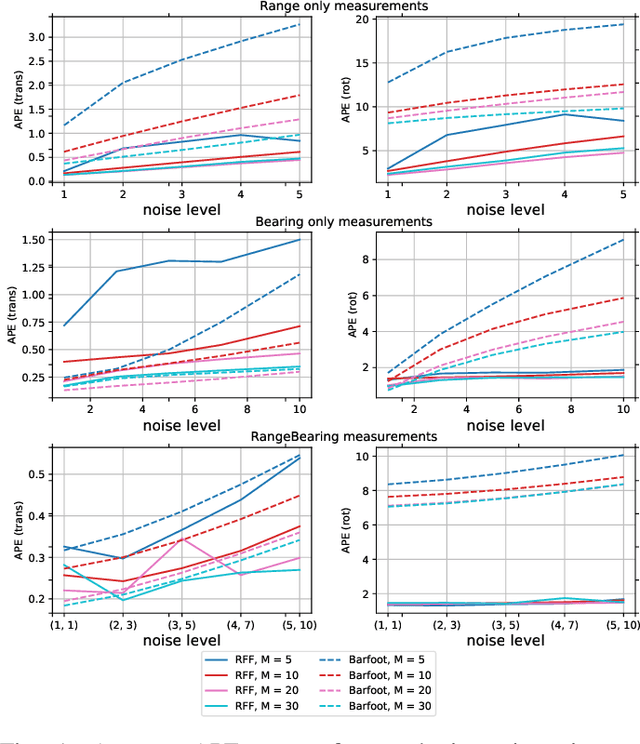

Abstract: This work is dedicated to simultaneous continuous-time trajectory estimation and mapping based on Gaussian Processes (GP). State-of-the-art GP-based models for Simultaneous Localization and Mapping (SLAM) are computationally efficient but can only be used with a restricted class of kernel functions. This paper provides an algorithm based on GP with Random Fourier Features (RFF) approximation for SLAM without any such constraints. The advantages of RFF for continuous-time SLAM are that we can consider a broader class of kernels and, at the same time, significantly reduce computational complexity by operating in the Fourier space of features. Additional speedup can be obtained by limiting the number of features. Our experimental results on synthetic and real-world benchmarks demonstrate the cases in which our approach provides better results than the current state-of-the-art.
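As a rough illustration of why operating in feature space is cheaper, the sketch below fits a GP-like regressor to a noisy 1-D signal over time using RFF and Bayesian linear regression in weight space. It is not the paper's SLAM algorithm: the RBF kernel, the toy signal, and all names (`phi`, `n_features`, `lengthscale`, `noise_std`) are assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(1)

# Toy "trajectory": noisy observations of sin(t) at 200 random times (assumed data).
t_train = np.sort(rng.uniform(0.0, 10.0, size=200))[:, None]
y_train = np.sin(t_train[:, 0]) + 0.1 * rng.normal(size=200)

n_features, lengthscale, noise_std = 100, 1.0, 0.1

# Random frequencies drawn from the spectral density of an RBF kernel.
W = rng.normal(scale=1.0 / lengthscale, size=(1, n_features))
b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)

def phi(t):
    """Random Fourier feature map for inputs t of shape (n, 1)."""
    return np.sqrt(2.0 / n_features) * np.cos(t @ W + b)

# Posterior mean of the weights under a N(0, I) prior:
# solve a (n_features x n_features) system instead of an (n x n) one.
Phi = phi(t_train)
A = Phi.T @ Phi + noise_std**2 * np.eye(n_features)
w_mean = np.linalg.solve(A, Phi.T @ y_train)

# Predict on a test grid and compare with the noise-free signal.
t_test = np.linspace(0.0, 10.0, 50)[:, None]
y_pred = phi(t_test) @ w_mean
rmse = np.sqrt(np.mean((y_pred - np.sin(t_test[:, 0])) ** 2))
```

The linear solve costs on the order of `n * n_features**2` operations rather than `n**3`, so reducing `n_features` trades accuracy for speed, in line with the abstract's remark about limiting the number of features.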

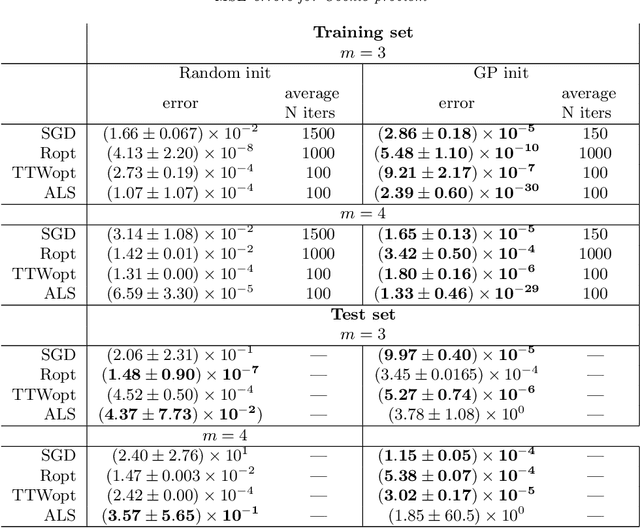

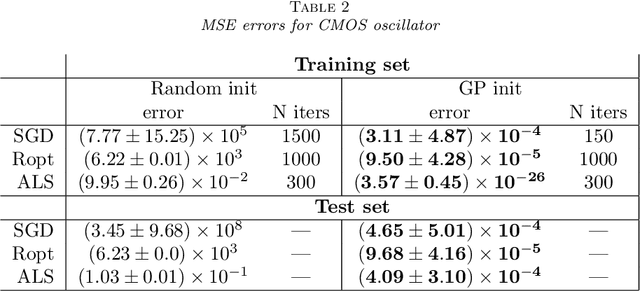

Tensor Completion via Gaussian Process Based Initialization

Dec 11, 2019

Abstract: In this paper, we consider the tensor completion problem, representing the solution in the tensor train (TT) format. It is assumed that the tensor is high-dimensional and that its values are generated by an unknown smooth function. This assumption allows us to develop an efficient initialization scheme based on Gaussian Process Regression and the TT-cross approximation technique. The proposed approach can be used in conjunction with any optimization algorithm commonly used in tensor completion problems. We show empirically that in this case the reconstruction error improves compared to tensor completion with random initialization. As an additional benefit, our technique automatically selects the rank thanks to the TT-cross approximation technique.
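To make the TT format concrete, the sketch below decomposes a small dense tensor sampled from a smooth function into TT cores and reconstructs it. Note that it uses TT-SVD on a fully observed tensor, which is a simpler stand-in and not the TT-cross or GP-based initialization described in the abstract; the function names `tt_svd` and `tt_to_dense` and the test function are assumptions for illustration.

```python
import numpy as np

def tt_svd(tensor, max_rank):
    """Decompose a dense tensor into TT cores via sequential truncated SVDs."""
    shape = tensor.shape
    cores, rank = [], 1
    mat = tensor.reshape(rank * shape[0], -1)
    for k in range(len(shape) - 1):
        U, s, Vt = np.linalg.svd(mat, full_matrices=False)
        new_rank = min(max_rank, len(s))
        # Core k has shape (left rank, mode size, right rank).
        cores.append(U[:, :new_rank].reshape(rank, shape[k], new_rank))
        # Carry the remainder forward and fold in the next mode.
        mat = (s[:new_rank, None] * Vt[:new_rank]).reshape(new_rank * shape[k + 1], -1)
        rank = new_rank
    cores.append(mat.reshape(rank, shape[-1], 1))
    return cores

def tt_to_dense(cores):
    """Contract TT cores back into a dense tensor."""
    result = cores[0]
    for core in cores[1:]:
        result = np.tensordot(result, core, axes=([-1], [0]))
    return result.reshape([c.shape[1] for c in cores])

# Smooth test function sin(x + y + z) on a 10 x 10 x 10 grid; its TT ranks are 2.
grid = np.linspace(0.0, 1.0, 10)
T = np.sin(grid[:, None, None] + grid[None, :, None] + grid[None, None, :])

cores = tt_svd(T, max_rank=2)
rel_err = np.linalg.norm(tt_to_dense(cores) - T) / np.linalg.norm(T)
n_params = sum(core.size for core in cores)
```

Here the three cores hold far fewer numbers than the 1000 entries of the dense tensor, which is the storage saving that makes the TT format attractive for high-dimensional tensors generated by smooth functions.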

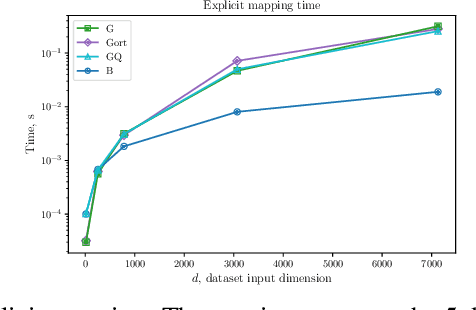

Quadrature-based features for kernel approximation

Oct 29, 2018

Abstract: We consider the problem of improving kernel approximation via randomized feature maps. These maps arise as Monte Carlo approximations to integral representations of kernel functions and scale up kernel methods to larger datasets. Based on an efficient numerical integration technique, we propose a unifying approach that reinterprets previous random features methods and extends them to better estimates of the kernel approximation. We derive the convergence behaviour and conduct an extensive empirical study that supports our hypothesis.
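The Monte Carlo baseline that such methods aim to improve on can be sketched as follows: plain random Fourier features for an RBF kernel, compared against the exact kernel matrix. This is the standard construction rather than the quadrature-based scheme from the paper, and the helper names `rff_features` and `rbf_kernel` are assumptions for this example.

```python
import numpy as np

def rff_features(X, n_features, lengthscale, rng):
    """Map X of shape (n, d) to random Fourier features for an RBF kernel."""
    d = X.shape[1]
    # The spectral density of the RBF kernel is Gaussian with std 1 / lengthscale.
    W = rng.normal(scale=1.0 / lengthscale, size=(d, n_features))
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
    return np.sqrt(2.0 / n_features) * np.cos(X @ W + b)

def rbf_kernel(X, Y, lengthscale):
    """Exact RBF kernel matrix exp(-||x - y||^2 / (2 * lengthscale^2))."""
    sq_dists = (
        np.sum(X**2, axis=1)[:, None]
        + np.sum(Y**2, axis=1)[None, :]
        - 2.0 * X @ Y.T
    )
    return np.exp(-0.5 * sq_dists / lengthscale**2)

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))

Z = rff_features(X, n_features=5000, lengthscale=1.5, rng=rng)
K_exact = rbf_kernel(X, X, lengthscale=1.5)
K_approx = Z @ Z.T  # inner products of features approximate kernel values

max_err = np.max(np.abs(K_exact - K_approx))
```

The approximation error of this estimator shrinks roughly like one over the square root of the number of features, which is the slow Monte Carlo rate that motivates looking for better integration rules.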
