Yechong Huang

Mutual information neural estimation in CNN-based end-to-end medical image registration

Aug 23, 2019

Abstract: Image registration is one of the most fundamental processes in medical image analysis. Recently, convolutional neural networks (CNNs) have shown significant potential in both affine and deformable registration. However, the lack of voxel-wise ground truth makes it difficult to train an accurate CNN-based registration model. In this work, we implement a CNN-based mutual information neural estimator for image registration that evaluates the registration outputs in an unsupervised manner. Based on this estimator, we propose an end-to-end registration framework, denoted MIRegNet, that performs one-shot affine and deformable registration. Furthermore, we propose a weakly supervised network that combines mutual information with a Dice similarity coefficient (DSC) loss. We validated the framework on a dataset of 190 pairs of 3D pulmonary CT images. MIRegNet obtained an average Dice score of 0.960 for registering the pulmonary images, and the Dice score improved further to 0.963 when the DSC loss was included for weakly supervised learning.
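The two ingredients named above can be illustrated with a small NumPy sketch. Mutual information neural estimation (MINE) maximizes the Donsker-Varadhan lower bound I(X; Y) >= E_P[T] - log E_Q[exp(T)], where T is a learned statistics network evaluated on paired samples (joint distribution P) and on shuffled samples (product of marginals Q). This is a sketch under stated assumptions, not the authors' implementation: `toy_T` is a hypothetical hand-written stand-in for the CNN statistics network, and `combined_loss` with its `weight` parameter is a hypothetical way of adding a DSC term to the MI objective.

```python
import numpy as np


def dv_mi_lower_bound(t_joint: np.ndarray, t_marginal: np.ndarray) -> float:
    """Donsker-Varadhan lower bound on mutual information, as used by MINE:

        I(X; Y) >= E_P[T(x, y)] - log E_Q[exp(T(x, y))]

    t_joint:    statistics-network outputs on paired samples (joint P)
    t_marginal: outputs on shuffled pairs (product of marginals Q)
    """
    m = t_marginal.max()  # subtract the max for a numerically stable log-mean-exp
    log_mean_exp = m + np.log(np.mean(np.exp(t_marginal - m)))
    return float(t_joint.mean() - log_mean_exp)


def soft_dice(a: np.ndarray, b: np.ndarray, eps: float = 1e-8) -> float:
    """Dice similarity coefficient; works for binary masks or soft probabilities."""
    inter = np.sum(a * b)
    return float(2.0 * inter / (np.sum(a) + np.sum(b) + eps))


def combined_loss(mi_estimate: float, dice_score: float, weight: float = 1.0) -> float:
    """Hypothetical weakly supervised objective: maximize MI and Dice by
    minimizing -MI + weight * (1 - Dice). The weighting is an assumption."""
    return -mi_estimate + weight * (1.0 - dice_score)


# Toy demo: voxel intensities of a fixed image (x) and a well-aligned warped image (y).
rng = np.random.default_rng(0)
x = rng.normal(size=1000)
y = x + 0.1 * rng.normal(size=1000)
y_shuffled = rng.permutation(y)  # breaks the pairing -> samples from the marginals


def toy_T(u: np.ndarray, v: np.ndarray) -> np.ndarray:
    # Hypothetical stand-in for a trained CNN statistics network.
    return -(u - v) ** 2


estimate = dv_mi_lower_bound(toy_T(x, y), toy_T(x, y_shuffled))

# A toy label mask compared with itself (perfect overlap).
mask = np.zeros((8, 8, 8))
mask[2:6, 2:6, 2:6] = 1.0
loss = combined_loss(estimate, soft_dice(mask, mask))
```

Because any function T gives a valid lower bound, the toy estimate is positive for the strongly dependent pair but stays below the true mutual information; training the statistics network is what tightens the bound.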

A Fully-Automatic Framework for Parkinson's Disease Diagnosis by Multi-Modality Images

Feb 26, 2019

Abstract: Background: Parkinson's disease (PD) is a prevalent long-term neurodegenerative disease. Although the diagnostic criteria for PD are relatively well defined, current medical imaging diagnostic procedures demand considerable expertise and thus call for a more integrated AI-based diagnostic algorithm. Methods: In this paper, we propose an automatic, end-to-end, multi-modality diagnosis framework, comprising segmentation, registration, feature generation and machine learning, that processes information from the striatum for the diagnosis of PD. Multiple modalities, including T1-weighted MRI and 11C-CFT PET, are used in the proposed framework. The reliability of the framework was validated on a dataset from the PET center of Huashan Hospital, which contains paired T1-MRI and CFT-PET images of 18 normal (NL) subjects and 49 PD subjects. Results: We obtained an accuracy of 100% for the PD/NL classification task. We also conducted several comparative experiments to validate the diagnostic ability of our framework. Conclusion: Our experiments show that (1) automatic segmentation achieves the same classification performance as manual segmentation, (2) multi-modality images yield better predictions than single-modality images, and (3) volume features are irrelevant to PD diagnosis.
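The feature-generation step of such a pipeline can be sketched as computing region-of-interest statistics from a co-registered PET volume and a binary striatum mask. The abstract does not list the exact features, so `striatum_features` and its two outputs (ROI volume and mean uptake) are hypothetical and purely illustrative; the volume feature is included only because the abstract discusses it.

```python
import numpy as np


def striatum_features(pet: np.ndarray, mask: np.ndarray,
                      voxel_size_mm=(1.0, 1.0, 1.0)) -> dict:
    """Hypothetical ROI features from a co-registered PET volume and a binary
    striatum mask (e.g. segmented on T1-MRI and registered to PET space)."""
    if pet.shape != mask.shape:
        raise ValueError("PET volume and mask must share the same voxel grid")
    roi = mask.astype(bool)
    n_voxels = int(roi.sum())
    voxel_volume = float(np.prod(voxel_size_mm))  # mm^3 per voxel
    return {
        "volume_mm3": n_voxels * voxel_volume,
        "mean_uptake": float(pet[roi].mean()) if n_voxels else float("nan"),
    }


# Toy example: a 2x2x2 "striatum" with uniform uptake 3.0 on 2 mm isotropic voxels.
pet = np.zeros((6, 6, 6))
mask = np.zeros((6, 6, 6), dtype=np.uint8)
mask[2:4, 2:4, 2:4] = 1
pet[mask.astype(bool)] = 3.0
features = striatum_features(pet, mask, voxel_size_mm=(2.0, 2.0, 2.0))
```

A per-subject feature vector built this way would then be passed to the machine-learning stage for PD/NL classification; which classifier the authors use is not stated here.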

Diagnosis of Alzheimer's Disease via Multi-modality 3D Convolutional Neural Network

Feb 26, 2019

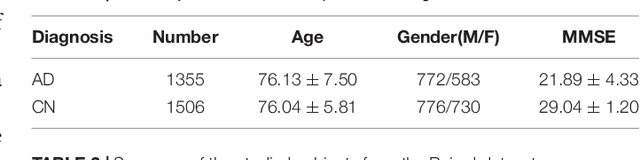

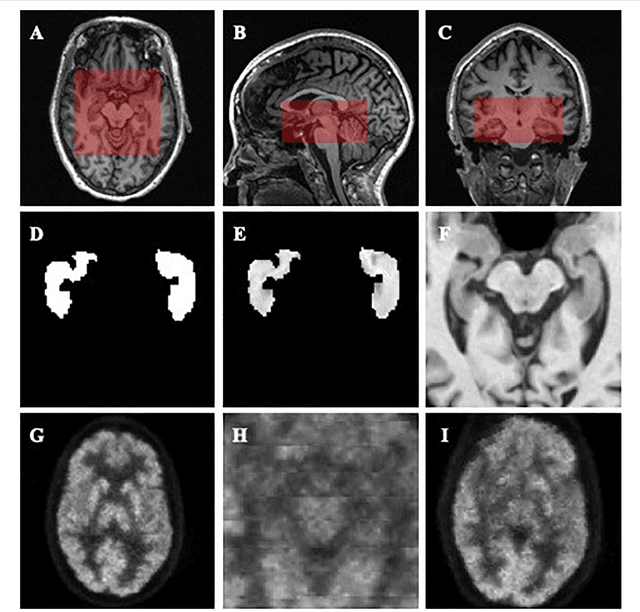

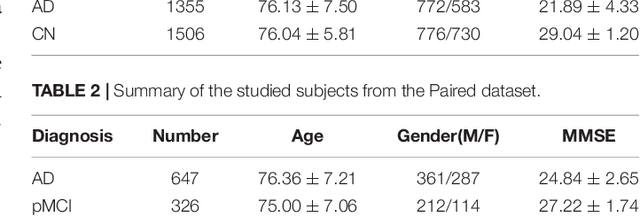

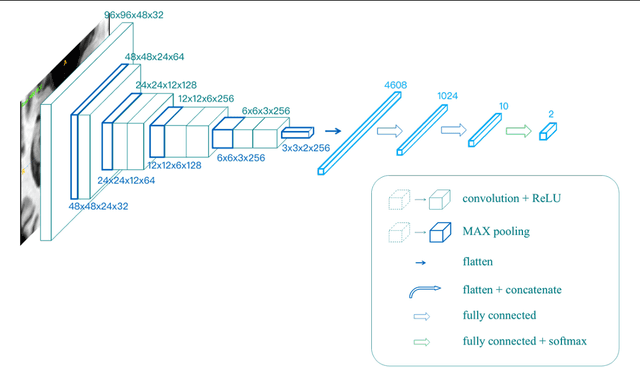

Abstract: Alzheimer's disease (AD) is one of the neurodegenerative diseases of greatest concern. Over the last decade, studies on AD diagnosis have attached great significance to artificial intelligence (AI)-based diagnostic algorithms. Among the diverse imaging modalities, T1-weighted MRI and 18F-FDG PET are widely researched for this task. In this paper, we propose a novel convolutional neural network (CNN) that fuses multi-modality information from T1-MRI and FDG-PET images around the hippocampal area for the diagnosis of AD. Unlike traditional machine learning algorithms, this method does not require manually extracted features; instead, it uses state-of-the-art 3D image-processing CNNs to learn features for the diagnosis and prognosis of AD. To validate the performance of the proposed network, we trained the classifier with paired T1-MRI and FDG-PET images from the ADNI datasets, including 731 normal (NL) subjects, 647 AD subjects, 441 stable MCI (sMCI) subjects and 326 progressive MCI (pMCI) subjects. We obtained maximal accuracies of 90.10% for the NL/AD task, 87.46% for the NL/pMCI task, and 76.90% for the sMCI/pMCI task. The proposed framework yields results comparable to state-of-the-art approaches. Moreover, the experimental results demonstrate that (1) segmentation is not a prerequisite when using a CNN, and (2) the hippocampal area provides enough information to serve as a reference for AD diagnosis.

Keywords: Alzheimer's Disease, Multi-modality, Image Classification, CNN, Deep Learning, Hippocampal
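One simple way to prepare multi-modality input around the hippocampus is early fusion: crop the same cube from co-registered T1-MRI and FDG-PET volumes, normalize each, and stack them as channels for a 3D CNN. This is a sketch under assumptions, not the paper's fusion scheme, which the abstract does not specify; `crop_patch`, `fuse_hippocampal_patches`, the patch size and the center coordinates are all hypothetical (in practice the center might come from an atlas or template).

```python
import numpy as np


def crop_patch(vol: np.ndarray, center, size: int) -> np.ndarray:
    """Cube of side `size` around `center` (voxel indices), zero-padded at borders."""
    half = size // 2
    padded = np.pad(vol, half, mode="constant")
    # After padding, original index i sits at i + half, so the cube starts at `center`.
    return padded[tuple(slice(c, c + size) for c in center)]


def zscore(x: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    # Per-patch intensity normalization so the two modalities share a scale.
    return (x - x.mean()) / (x.std() + eps)


def fuse_hippocampal_patches(t1: np.ndarray, pet: np.ndarray,
                             center, size: int = 32) -> np.ndarray:
    """Early fusion by channel stacking: returns (2, size, size, size), channel-first."""
    if t1.shape != pet.shape:
        raise ValueError("T1-MRI and FDG-PET must be co-registered on the same grid")
    channels = [zscore(crop_patch(v, center, size)) for v in (t1, pet)]
    return np.stack(channels, axis=0)


# Toy volumes standing in for co-registered T1-MRI and FDG-PET images.
t1 = np.arange(1000, dtype=float).reshape(10, 10, 10)
pet = 2.0 * t1
fused = fuse_hippocampal_patches(t1, pet, center=(5, 5, 5), size=4)
```

Channel stacking is only one option; a network could instead process each modality in its own branch and merge the learned features later, and the abstract alone does not say which design was used.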
