Yanxiang Jiang

Social-aware Cooperative Caching in Fog Radio Access Networks

Jun 28, 2022

Abstract: In this paper, the cooperative caching problem in fog radio access networks (F-RANs) is investigated to jointly optimize the transmission delay and energy consumption. Exploiting the potential social relationships among fog access points (F-APs), we first propose a clustering scheme based on a hedonic coalition game (HCG) to improve the potential cooperation gain. Then, considering that the optimization problem is non-deterministic polynomial hard (NP-hard), we further propose a cooperative caching scheme based on an improved firefly algorithm (FA), which utilizes a mutation strategy based on local content popularity to avoid premature convergence. Simulation results show that our proposed scheme can effectively reduce the content transmission delay and energy consumption in comparison with the baselines.

A Federated Reinforcement Learning Method with Quantization for Cooperative Edge Caching in Fog Radio Access Networks

Jun 23, 2022

Abstract: In this paper, the cooperative edge caching problem is studied in fog radio access networks (F-RANs). Given the non-deterministic polynomial hard (NP-hard) property of the problem, a dueling deep Q network (Dueling DQN) based caching update algorithm is proposed to make an optimal caching decision by learning the dynamic network environment. In order to protect user data privacy and to address the slow convergence of training a single deep reinforcement learning (DRL) model, we propose a federated reinforcement learning method with quantization (FRLQ) to implement cooperative training of models from multiple fog access points (F-APs) in F-RANs. To address the excessive consumption of communications resources caused by model transmission, we prune and quantize the shared DRL models to reduce the number of transferred model parameters. The communications interval is increased and the number of communications rounds is reduced by periodic global model aggregation. We analyze the global convergence and computational complexity of our policy. Simulation results verify that our policy performs better in reducing user request delay and improving cache hit rate compared to benchmark schemes. The proposed policy is also shown to have faster training speed and higher communications efficiency with minimal loss of model accuracy.
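
As a rough illustration of the idea of quantizing shared models before aggregation, the following minimal sketch applies uniform quantization to each local parameter vector and then averages the recovered vectors. It is not the FRLQ method from the paper: the function names (`quantize`, `dequantize`, `federated_average`), the 8-bit uniform quantizer, the plain unweighted average, and the random stand-in "models" are all assumptions made for illustration, and pruning is omitted.

```python
import numpy as np

def quantize(weights, num_bits=8):
    """Uniformly quantize a float array to integer codes (assumed scheme)."""
    lo, hi = float(weights.min()), float(weights.max())
    levels = 2 ** num_bits - 1
    # Guard against a constant vector, where hi == lo.
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = np.round((weights - lo) / scale).astype(np.uint16)
    return codes, lo, scale

def dequantize(codes, lo, scale):
    """Map integer codes back to approximate float values."""
    return codes.astype(np.float64) * scale + lo

def federated_average(local_weights, num_bits=8):
    """Average local models after a quantize/dequantize round trip."""
    recovered = [dequantize(*quantize(w, num_bits)) for w in local_weights]
    return np.mean(recovered, axis=0)

# Hypothetical local models: random vectors standing in for DRL parameters.
rng = np.random.default_rng(0)
local_models = [rng.normal(size=16) for _ in range(4)]

global_model = federated_average(local_models, num_bits=8)
exact = np.mean(local_models, axis=0)
max_error = float(np.max(np.abs(global_model - exact)))
```

In this sketch each parameter is sent as an 8-bit code plus two floats per model (`lo` and `scale`), and `max_error` measures how far the aggregated model drifts from the unquantized average.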

Content Popularity Prediction Based on Quantized Federated Bayesian Learning in Fog Radio Access Networks

Jun 23, 2022

Abstract: In this paper, we investigate the content popularity prediction problem in cache-enabled fog radio access networks (F-RANs). In order to predict the content popularity with high accuracy and low complexity, we propose a Gaussian process based regressor to model the content request pattern. First, the relationship between content features and popularity is captured by our proposed model. Then, we utilize Bayesian learning, which is robust to overfitting, to train the model parameters. However, Bayesian methods are usually unable to find a closed-form expression of the posterior distribution. To tackle this issue, we apply a stochastic variance reduced gradient Hamiltonian Monte Carlo (SVRG-HMC) method to approximate the posterior distribution. To utilize the computing resources of other fog access points (F-APs) and to reduce the communications overhead, we propose a quantized federated learning (FL) framework combined with Bayesian learning. The quantized federated Bayesian learning framework allows each F-AP to send gradients to the cloud server after quantizing and encoding them. It can effectively achieve a tradeoff between prediction accuracy and communications overhead. Simulation results show that our proposed policy outperforms the existing policies.

Content Popularity Prediction in Fog-RANs: A Clustered Federated Learning Based Approach

Jun 13, 2022

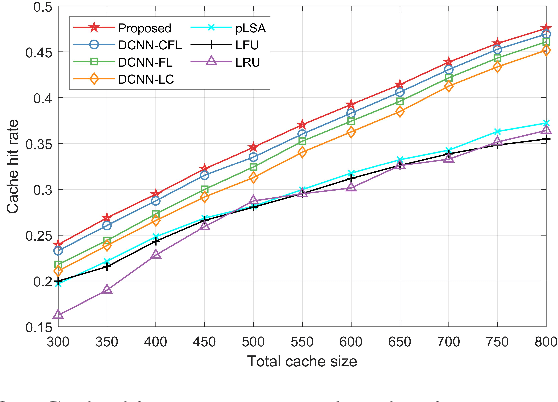

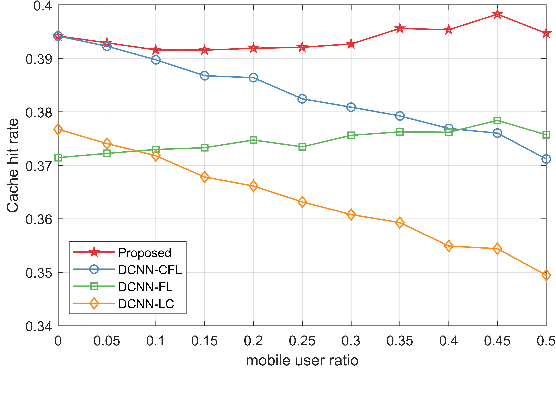

Abstract: In this paper, the content popularity prediction problem in fog radio access networks (F-RANs) is investigated. Based on clustered federated learning, we propose a novel mobility-aware popularity prediction policy, which integrates the content popularities of local users and mobile users. For local users, the content popularity is predicted by learning the hidden representations of local users and contents. Initial features of local users and contents are generated by incorporating neighbor information with self information. Then, a dual-channel neural network (DCNN) model is introduced to learn the hidden representations by producing deep latent features from the initial features. For mobile users, the content popularity is predicted via user preference learning. In order to distinguish regional variations of content popularity, clustered federated learning (CFL) is employed, which enables fog access points (F-APs) with similar regional types to benefit from one another and provides a more specialized DCNN model for each F-AP. Simulation results show that our proposed policy achieves significant performance improvement over the traditional policies.

Computation Offloading and Resource Allocation in F-RANs: A Federated Deep Reinforcement Learning Approach

Jun 13, 2022

Abstract: The fog radio access network (F-RAN) is a promising technology in which user mobile devices (MDs) can offload computation tasks to nearby fog access points (F-APs). Due to the limited resources of F-APs, it is important to design an efficient task offloading scheme. In this paper, considering the time-varying network environment, a dynamic computation offloading and resource allocation problem in F-RANs is formulated to minimize the task execution delay and energy consumption of MDs. To solve the problem, a federated deep reinforcement learning (DRL) based algorithm is proposed, where the deep deterministic policy gradient (DDPG) algorithm performs computation offloading and resource allocation in each F-AP. Federated learning is exploited to train the DDPG agents in order to decrease the computational complexity of the training process and protect user privacy. Simulation results show that the proposed federated DDPG algorithm achieves lower task execution delay and energy consumption of MDs, and does so more quickly, compared with other existing strategies.

Distributed Edge Caching via Reinforcement Learning in Fog Radio Access Networks

Feb 27, 2019

Abstract: In this paper, the distributed edge caching problem in fog radio access networks (F-RANs) is investigated. By considering the unknown spatio-temporal content popularity and user preference, a user request model based on a hidden Markov process is proposed to characterize the fluctuating spatio-temporal traffic demands in F-RANs. Then, a Q-learning method based on the reinforcement learning (RL) framework is put forth to seek the optimal caching policy in a distributed manner, which enables fog access points (F-APs) to learn and track the potential dynamic process without extra communications cost. Furthermore, we propose a more efficient Q-learning method with value function approximation (Q-VFA-learning) to reduce complexity and accelerate convergence. Simulation results show that the performance of our proposed method is superior to that of the traditional methods.
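
To make the Q-learning idea concrete, here is a minimal tabular sketch for a single F-AP with one cache slot. It is a toy under stated assumptions, not the paper's method: requests are drawn from a fixed popularity vector rather than a hidden Markov process, the state is simply the content currently cached, the reward is 1 on a cache hit, and the function name `train_cache_agent` and all hyperparameters are hypothetical. Value function approximation (Q-VFA-learning) is not shown.

```python
import numpy as np

def train_cache_agent(popularity, episodes=20000, alpha=0.05,
                      gamma=0.5, epsilon=0.1, seed=0):
    """Tabular Q-learning for a one-slot cache (illustrative assumptions)."""
    rng = np.random.default_rng(seed)
    n = len(popularity)
    q = np.zeros((n, n))  # q[state, action]; state = content currently cached
    state = 0
    for _ in range(episodes):
        # Epsilon-greedy choice of which content to cache next.
        if rng.random() < epsilon:
            action = int(rng.integers(n))
        else:
            action = int(np.argmax(q[state]))
        # A user request arrives; a hit on the cached content earns reward 1.
        request = int(rng.choice(n, p=popularity))
        reward = 1.0 if request == action else 0.0
        next_state = action
        # Standard Q-learning temporal-difference update.
        td_target = reward + gamma * np.max(q[next_state])
        q[state, action] += alpha * (td_target - q[state, action])
        state = next_state
    return q

# Assumed stationary popularity over three contents.
popularity = np.array([0.8, 0.15, 0.05])
q_table = train_cache_agent(popularity)
best_action = int(np.argmax(q_table[0]))
```

Because the update uses only locally observed requests, the agent needs no message exchange with other F-APs, which mirrors the distributed setting described in the abstract. In this toy setup, `best_action` is expected to settle on the most popular content.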
