Yanglei Gan

Exploiting Inter-Session Information with Frequency-enhanced Dual-Path Networks for Sequential Recommendation

Nov 14, 2025

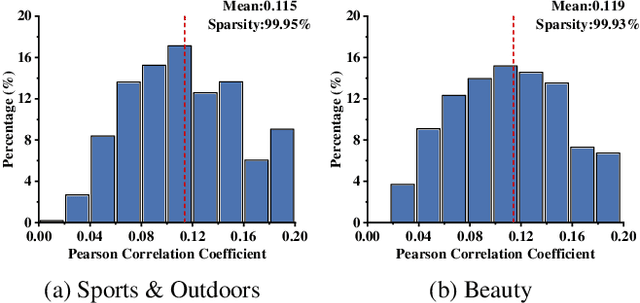

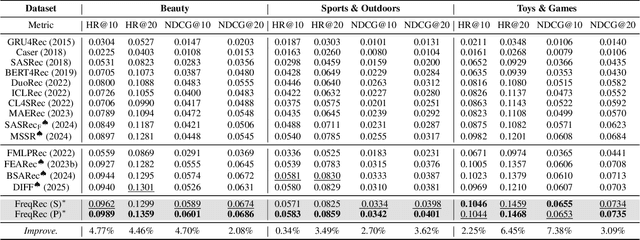

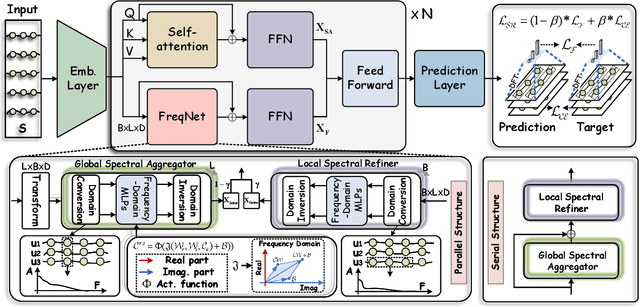

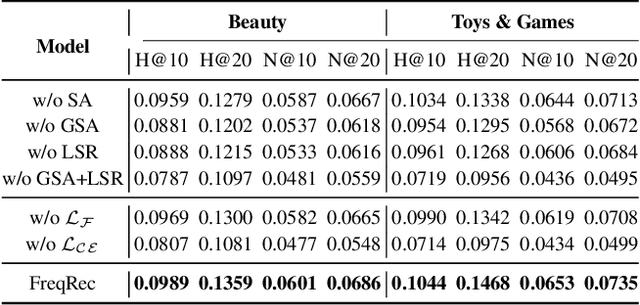

Abstract: Sequential recommendation (SR) aims to predict a user's next item preference by modeling historical interaction sequences. Recent advances often integrate frequency-domain modules to compensate for self-attention's low-pass nature by restoring the high-frequency signals critical for personalized recommendations. Nevertheless, existing frequency-aware solutions process each session in isolation and optimize exclusively with time-domain objectives. Consequently, they overlook cross-session spectral dependencies and fail to enforce alignment between predicted and actual spectral signatures, leaving valuable frequency information under-exploited. To this end, we propose FreqRec, a Frequency-Enhanced Dual-Path Network for sequential Recommendation that jointly captures inter-session and intra-session behaviors via learnable frequency-domain multi-layer perceptrons. Moreover, FreqRec is optimized under a composite objective that combines cross entropy with a frequency-domain consistency loss, explicitly aligning predicted and true spectral signatures. Extensive experiments on three benchmarks show that FreqRec surpasses strong baselines and remains robust under data sparsity and noisy-log conditions.
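The composite objective described above can be illustrated with a minimal sketch. The abstract does not give the exact form of the frequency-domain consistency loss, so the version below is an assumption: it takes the mean squared distance between the discrete Fourier spectra of a predicted and an actual one-dimensional sequence, and adds it to a softmax cross entropy with a hypothetical weight `weight`. All function names are illustrative and are not taken from the FreqRec implementation.

```python
import cmath
import math


def dft(signal):
    """Naive discrete Fourier transform of a real-valued sequence."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(n)
    ]


def cross_entropy(logits, target_index):
    """Softmax cross entropy for one prediction over the item vocabulary."""
    peak = max(logits)  # subtract the maximum for numerical stability
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_norm - logits[target_index]


def spectral_consistency(predicted, actual):
    """Mean squared distance between the spectra of two equal-length sequences.

    Assumed form of the consistency term; the source does not specify it.
    """
    spec_p, spec_a = dft(predicted), dft(actual)
    return sum(abs(p - a) ** 2 for p, a in zip(spec_p, spec_a)) / len(spec_p)


def composite_loss(logits, target_index, predicted_seq, actual_seq, weight=0.1):
    """Time-domain cross entropy plus a weighted frequency-domain penalty."""
    time_term = cross_entropy(logits, target_index)
    freq_term = spectral_consistency(predicted_seq, actual_seq)
    return time_term + weight * freq_term
```

When the predicted and actual sequences coincide, the spectral term vanishes and the objective reduces to plain cross entropy, which is one way to check the sketch. A real model would apply the transform to learned embedding sequences rather than to scalar lists.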

DiffuTraj: A Stochastic Vessel Trajectory Prediction Approach via Guided Diffusion Process

Oct 12, 2024

Abstract: Maritime vessel maneuvers, characterized by their inherent complexity and indeterminacy, require a vessel trajectory prediction system capable of modeling the multi-modal nature of future motion states. Conventional stochastic trajectory prediction methods use latent variables to represent the multi-modality of vessel motion, but tend to overlook the complexity and dynamics inherent in maritime behavior. In contrast, we explicitly simulate the transition of vessel motion from uncertainty towards a state of certainty, effectively handling future indeterminacy in dynamic scenes. In this paper, we present a novel framework, DiffuTraj, that casts the trajectory prediction task as a guided reverse process of motion pattern uncertainty diffusion, in which we progressively remove uncertainty from maritime regions to delineate the intended trajectory. Specifically, we encode the previous states of the target vessel, vessel-vessel interactions, and the environment context as guiding factors for trajectory generation. Subsequently, we devise a transformer-based conditional denoiser to capture spatio-temporal dependencies, enabling the generation of trajectories better aligned with the particular maritime environment. Comprehensive experiments on vessel trajectory prediction benchmarks demonstrate the superiority of our method.

Synergistic Anchored Contrastive Pre-training for Few-Shot Relation Extraction

Dec 24, 2023

Abstract: Few-shot Relation Extraction (FSRE) aims to extract relational facts from a sparse set of labeled corpora. Recent studies have shown promising results in FSRE by employing Pre-trained Language Models (PLMs) within the framework of supervised contrastive learning, which considers both instances and label facts. However, how to effectively harness massive instance-label pairs to endow the learned representation with semantic richness in this learning paradigm is not fully explored. To address this gap, we introduce a novel synergistic anchored contrastive pre-training framework. This framework is motivated by the insight that the diverse viewpoints conveyed through instance-label pairs capture incomplete yet complementary intrinsic textual semantics. Specifically, our framework involves a symmetrical contrastive objective that encompasses both sentence-anchored and label-anchored contrastive losses. By combining these two losses, the model establishes a robust and uniform representation space. This space effectively captures the reciprocal alignment of feature distributions among instances and relational facts, while also maximizing mutual information across diverse perspectives within the same relation. Experimental results demonstrate that our framework achieves significant performance improvements over baseline models in downstream FSRE tasks. Furthermore, our approach adapts better to the challenges of domain shift and zero-shot relation extraction. Our code is available online at https://github.com/AONE-NLP/FSRE-SaCon.
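The symmetrical objective described above, with one sentence-anchored and one label-anchored term, can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: each sentence vector is paired with exactly one label vector at the same index, the similarity is a plain dot product, and each direction uses an InfoNCE-style loss with a hypothetical `temperature` parameter. The function names are illustrative.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def info_nce(anchors, candidates, temperature=0.1):
    """Average InfoNCE loss where anchors[i] should match candidates[i]."""
    total = 0.0
    for i, anchor in enumerate(anchors):
        scores = [dot(anchor, c) / temperature for c in candidates]
        peak = max(scores)  # subtract the maximum for numerical stability
        log_norm = peak + math.log(sum(math.exp(s - peak) for s in scores))
        total += log_norm - scores[i]
    return total / len(anchors)


def symmetric_contrastive_loss(sentence_vecs, label_vecs, temperature=0.1):
    """Sum of the sentence-anchored and label-anchored contrastive terms."""
    sentence_anchored = info_nce(sentence_vecs, label_vecs, temperature)
    label_anchored = info_nce(label_vecs, sentence_vecs, temperature)
    return sentence_anchored + label_anchored
```

For example, with `sentences = [[1.0, 0.0], [0.0, 1.0]]`, passing the same vectors as labels yields a much smaller loss than passing them in swapped order, because the matching pair at each index then has the highest similarity. The supervised setting in the abstract allows several instances per relation, which this one-to-one sketch does not model.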

Aspect-oriented Opinion Alignment Network for Aspect-Based Sentiment Classification

Aug 22, 2023

Abstract: Aspect-based sentiment classification is a crucial problem in fine-grained sentiment analysis, which aims to predict the sentiment polarity of a given aspect according to its context. Previous works have made remarkable progress in leveraging attention mechanisms to extract opinion words for different aspects. However, a persistent challenge is handling semantic mismatches, which arise when attention mechanisms fail to align opinion words with their corresponding aspect in multi-aspect sentences. To address this issue, we propose a novel Aspect-oriented Opinion Alignment Network (AOAN) to capture the contextual association between opinion words and the corresponding aspect. Specifically, we first introduce a neighboring span enhanced module which highlights various compositions of neighboring words and given aspects. In addition, we design a multi-perspective attention mechanism that aligns relevant opinion information with the given aspect. Extensive experiments on three benchmark datasets demonstrate that our model achieves state-of-the-art results. The source code is available at https://github.com/AONE-NLP/ABSA-AOAN.
