Xueyao Jiang

Tackling Math Word Problems with Fine-to-Coarse Abstracting and Reasoning

May 17, 2022

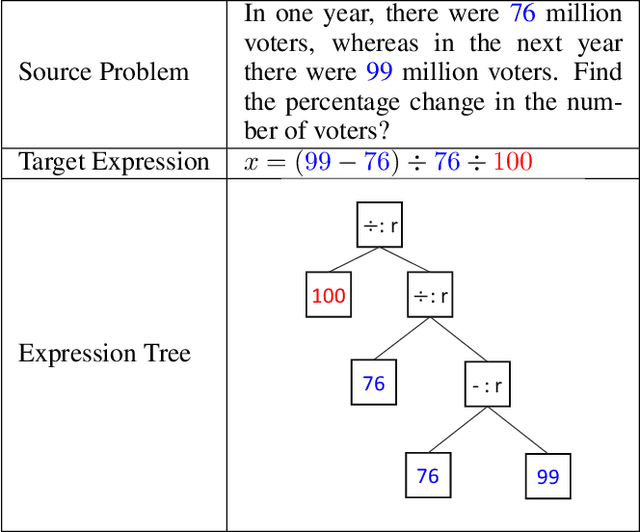

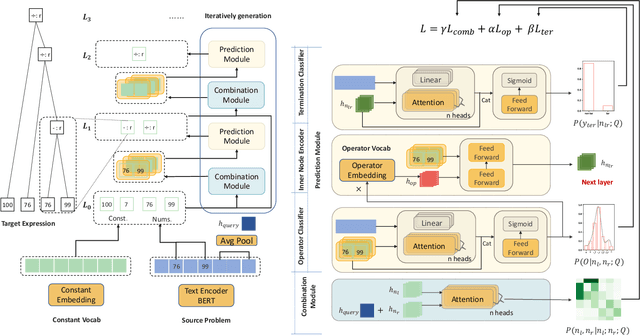

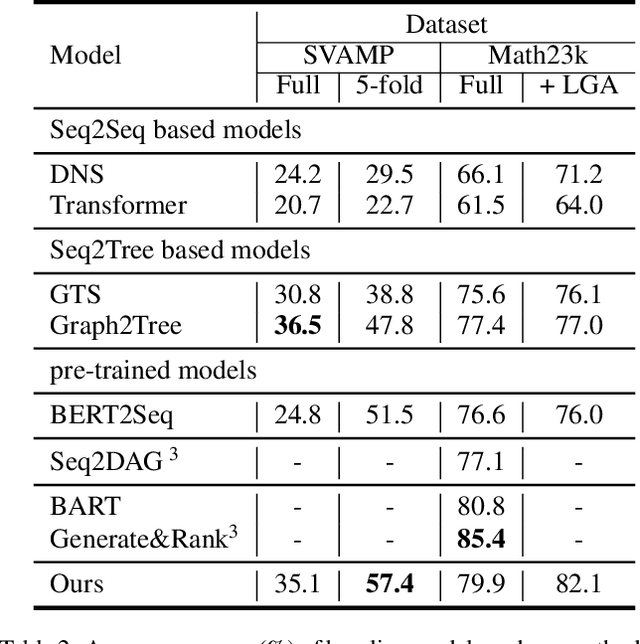

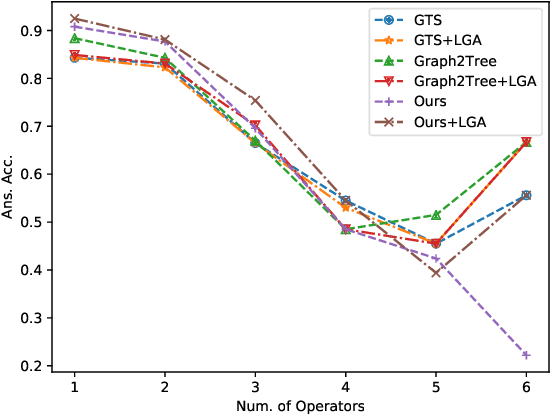

Abstract: Solving Math Word Problems (MWPs) is an important task that requires the ability to understand and reason over mathematical text. Existing approaches mostly formalize it as a generation task, adopting Seq2Seq or Seq2Tree models to encode an input math problem in natural language as a global representation and generate the output mathematical expression. Such approaches only learn shallow heuristics and fail to capture fine-grained variations in inputs. In this paper, we propose to model a math word problem in a fine-to-coarse manner to capture both its local fine-grained information and its global logical structure. Instead of generating a complete equation sequence or expression tree from the global features, we iteratively combine low-level operands to predict a higher-level operator, abstracting the problem and reasoning about the solving operators from the bottom up. Our model is naturally more sensitive to local variations and can better generalize to unseen problem types. Extensive evaluations on the Math23k and SVAMP datasets demonstrate the accuracy and robustness of our method.
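The bottom-up idea in this abstract (repeatedly combining low-level operands under a predicted operator until a single expression remains) can be illustrated with a minimal sketch. This is not the authors' model: the names `Node`, `reduce_bottom_up`, and `scripted_policy` are hypothetical, and the scripted policy is only a stand-in for whatever learned component would choose the operands and operator at each step.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple
import operator

# Supported binary operators (an assumption made for this illustration).
OPS = {"+": operator.add, "-": operator.sub,
       "*": operator.mul, "/": operator.truediv}


@dataclass
class Node:
    expr: str     # textual form of the sub-expression
    value: float  # its numeric value


# A step names two distinct node indices (left, right) and an operator symbol.
Step = Tuple[int, int, str]


def reduce_bottom_up(quantities: Sequence[float],
                     choose_step: Callable[[List[Node]], Step]) -> Node:
    """Merge operands pairwise until a single expression node remains."""
    nodes = [Node(str(q), float(q)) for q in quantities]
    while len(nodes) > 1:
        i, j, op = choose_step(nodes)
        left, right = nodes[i], nodes[j]
        merged = Node(f"({left.expr} {op} {right.expr})",
                      OPS[op](left.value, right.value))
        # Drop the two consumed operands and append the higher-level node.
        nodes = [n for k, n in enumerate(nodes) if k not in (i, j)]
        nodes.append(merged)
    return nodes[0]


def scripted_policy(steps: Sequence[Step]) -> Callable[[List[Node]], Step]:
    """Hypothetical stand-in for a learned predictor: replays fixed steps."""
    it = iter(steps)
    return lambda nodes: next(it)


# "3 bags with 4 apples each, 2 are eaten": first 3 * 4, then subtract 2.
result = reduce_bottom_up([3, 4, 2],
                          scripted_policy([(0, 1, "*"), (1, 0, "-")]))
print(result.expr, "=", result.value)
```

In a real system the `choose_step` callable would score candidate operand pairs and operators from their local representations; here it is scripted so the control flow can be followed by hand.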

Multi-Modal Knowledge Graph Construction and Application: A Survey

Feb 11, 2022

Abstract: Recent years have witnessed a resurgence of knowledge engineering, characterized by the rapid growth of knowledge graphs. However, most existing knowledge graphs are represented with pure symbols, which hurts the machine's capability to understand the real world. The multi-modalization of knowledge graphs is an inevitable key step towards the realization of human-level machine intelligence. The results of this endeavor are Multi-modal Knowledge Graphs (MMKGs). In this survey on MMKGs constructed from texts and images, we first give definitions of MMKGs, followed by preliminaries on multi-modal tasks and techniques. We then systematically review the challenges, progress, and opportunities in the construction and application of MMKGs, respectively, with detailed analyses of the strengths and weaknesses of different solutions. We conclude this survey with open research problems relevant to MMKGs.
