Xuedou Xiao

DetVPCC: RoI-based Point Cloud Sequence Compression for 3D Object Detection

Feb 07, 2025

Abstract: While MPEG-standardized video-based point cloud compression (VPCC) achieves high compression efficiency for human perception, it struggles with a poor trade-off between bitrate savings and detection accuracy when supporting 3D object detectors. This limitation stems from VPCC's inability to prioritize regions of different importance within point clouds. To address this issue, we propose DetVPCC, a novel method integrating region-of-interest (RoI) encoding with VPCC for efficient point cloud sequence compression while preserving the 3D object detection accuracy. Specifically, we augment VPCC to support RoI-based compression by assigning spatially non-uniform quality levels. Then, we introduce a lightweight RoI detector to identify crucial regions that potentially contain objects. Experiments on the nuScenes dataset demonstrate that our approach significantly improves the detection accuracy. The code and demo video are available in supplementary materials.
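The idea of "spatially non-uniform quality levels" can be illustrated with a minimal sketch. It is not DetVPCC's implementation: it assumes the RoIs are already given as 2D boxes on a projected video frame and that quality is controlled per block through a quantization parameter (lower QP means higher quality). The function name `roi_qp_map` and the values `qp_roi` and `qp_bg` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical box format: (x0, y0, x1, y1) in pixels, end-exclusive.
Box = Tuple[int, int, int, int]


def roi_qp_map(width: int, height: int, block: int, rois: List[Box],
               qp_roi: int = 22, qp_bg: int = 40) -> List[List[int]]:
    """Build a per-block QP grid: blocks touching any RoI get qp_roi,
    all other blocks get qp_bg (assumed values, lower QP = higher quality)."""
    cols = (width + block - 1) // block   # ceil division
    rows = (height + block - 1) // block
    qp = [[qp_bg] * cols for _ in range(rows)]
    for x0, y0, x1, y1 in rois:
        # Clamp the box to the frame and skip empty boxes.
        x0, y0 = max(x0, 0), max(y0, 0)
        x1, y1 = min(x1, width), min(y1, height)
        if x0 >= x1 or y0 >= y1:
            continue
        for r in range(y0 // block, (y1 - 1) // block + 1):
            for c in range(x0 // block, (x1 - 1) // block + 1):
                qp[r][c] = qp_roi
    return qp


if __name__ == "__main__":
    for row in roi_qp_map(64, 32, 16, [(10, 0, 20, 10)]):
        print(row)
```

In this sketch the background keeps a coarse quantizer while blocks overlapping a detected region are encoded at higher quality, which is the general trade-off the abstract describes.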

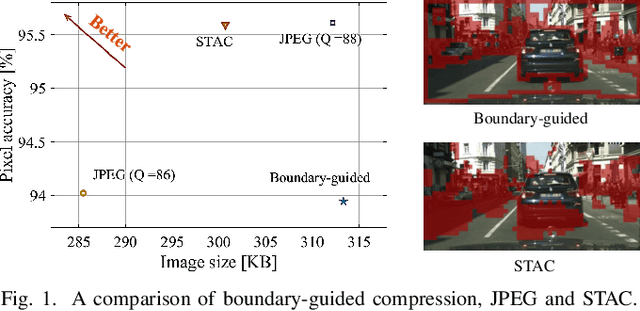

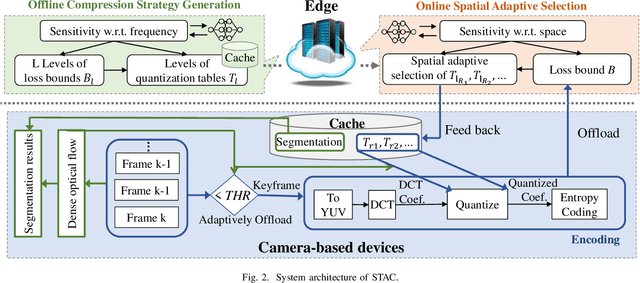

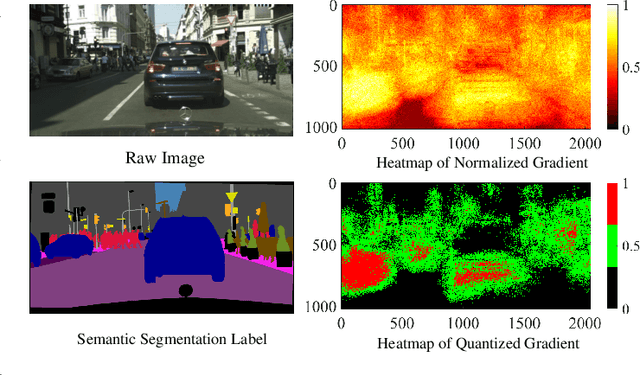

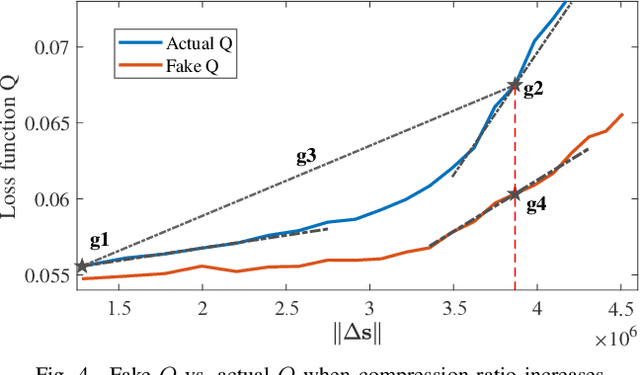

DNN-Driven Compressive Offloading for Edge-Assisted Semantic Video Segmentation

Mar 28, 2022

Abstract: Deep learning has shown impressive performance in semantic segmentation, but it is still unaffordable for resource-constrained mobile devices. While offloading computation tasks is promising, the high traffic demands overwhelm the limited bandwidth. Existing compression algorithms are not fit for semantic segmentation, as the lack of obvious and concentrated regions of interest (RoIs) forces the adoption of uniform compression strategies, leading to either low compression ratios or low accuracy. This paper introduces STAC, a DNN-driven compression scheme tailored for edge-assisted semantic video segmentation. STAC is the first to exploit a DNN's gradients as spatial sensitivity metrics for spatially adaptive compression, and it achieves a superior compression ratio and accuracy. Yet, it is challenging to adapt this content-customized compression to videos. Practical issues include varying spatial sensitivity and huge bandwidth consumption for compression strategy feedback and offloading. We tackle these issues through a spatiotemporal adaptive scheme, which (1) takes partial strategy generation operations offline to reduce communication load, and (2) propagates compression strategies and segmentation results across frames through dense optical flow, and adaptively offloads keyframes to accommodate video content. We implement STAC on a commodity mobile device. Experiments show that STAC can save up to 20.95% of bandwidth without losing accuracy, compared to the state-of-the-art algorithm.
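As a rough illustration of spatially adaptive compression driven by a sensitivity metric, the sketch below maps per-block sensitivity scores to quality levels by quantile. It is not STAC's algorithm: the scores are assumed to be precomputed (for example, from gradient magnitudes), and the function name `quality_from_sensitivity` and the three quality levels are hypothetical.

```python
from typing import List, Sequence


def quality_from_sensitivity(sens: List[List[float]],
                             levels: Sequence[int] = (30, 60, 90)
                             ) -> List[List[int]]:
    """Assign each block a quality level; more sensitive blocks get
    higher quality. Thresholds are quantiles of the observed scores."""
    flat = sorted(v for row in sens for v in row)
    n = len(flat)
    # One cut point per boundary between consecutive quality levels.
    cuts = [flat[min(n - 1, (i * n) // len(levels))]
            for i in range(1, len(levels))]

    def level(v: float) -> int:
        # Count how many cut points the score reaches or exceeds.
        return levels[sum(v >= c for c in cuts)]

    return [[level(v) for v in row] for row in sens]


if __name__ == "__main__":
    scores = [[0.0, 0.1, 0.2], [0.5, 0.9, 1.0]]
    print(quality_from_sensitivity(scores))
```

Using quantiles rather than fixed thresholds is a design choice of this sketch: it keeps the share of blocks at each quality level roughly constant regardless of the absolute scale of the scores.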

Sensor-Augmented Neural Adaptive Bitrate Video Streaming on UAVs

Sep 23, 2019

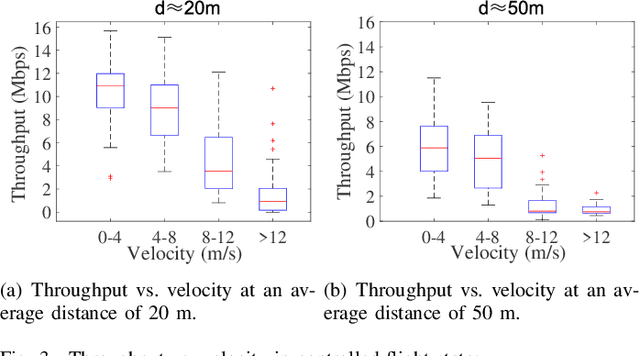

Abstract: Recent advances in unmanned aerial vehicle (UAV) technology have revolutionized a broad class of civil and military applications. However, the designs of wireless technologies that enable real-time streaming of high-definition video between UAVs and ground clients present a conundrum. Most existing adaptive bitrate (ABR) algorithms are not optimized for the air-to-ground links, which usually fluctuate dramatically due to the dynamic flight states of the UAV. In this paper, we present SA-ABR, a new sensor-augmented system that generates ABR video streaming algorithms with the assistance of various kinds of inherent sensor data that are used to pilot UAVs. By incorporating the inherent sensor data with network observations, SA-ABR trains a deep reinforcement learning (DRL) model to extract salient features from the flight state information and automatically learn an ABR algorithm to adapt to the varying UAV channel capacity through the training process. SA-ABR does not rely on any assumptions or models about UAV's flight states or the environment, but instead, it makes decisions by exploiting temporal properties of past throughput through the long short-term memory (LSTM) to adapt itself to a wide range of highly dynamic environments. We have implemented SA-ABR in a commercial UAV and evaluated it in the wild. We compare SA-ABR with a variety of existing state-of-the-art ABR algorithms, and the results show that our system outperforms the best known existing ABR algorithm by 21.4% in terms of the average quality of experience (QoE) reward.
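The abstract does not define its QoE reward. As a point of reference only, the sketch below computes a linear QoE of the kind often used in ABR work: total bitrate, minus a rebuffering penalty, minus a penalty for bitrate switches. The function name `qoe_reward` and the penalty weights are assumptions and may differ from what SA-ABR uses.

```python
from typing import Sequence


def qoe_reward(bitrates_mbps: Sequence[float],
               rebuffer_s: Sequence[float],
               rebuf_penalty: float = 4.3,
               smooth_penalty: float = 1.0) -> float:
    """Linear QoE over a session of video chunks (assumed form):
    sum of bitrates - rebuf_penalty * total stall time
    - smooth_penalty * sum of absolute bitrate changes."""
    quality = sum(bitrates_mbps)
    stall = rebuf_penalty * sum(rebuffer_s)
    switches = smooth_penalty * sum(
        abs(b - a) for a, b in zip(bitrates_mbps, bitrates_mbps[1:]))
    return quality - stall - switches


if __name__ == "__main__":
    print(qoe_reward([1.0, 2.0, 2.0], [0.0, 0.5, 0.0],
                     rebuf_penalty=4.0, smooth_penalty=1.0))
```

A reinforcement learning agent trained against a reward of this shape is pushed toward high bitrates while avoiding stalls and abrupt quality changes.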
