Taobin Chen

Sensor-Augmented Neural Adaptive Bitrate Video Streaming on UAVs

Sep 23, 2019

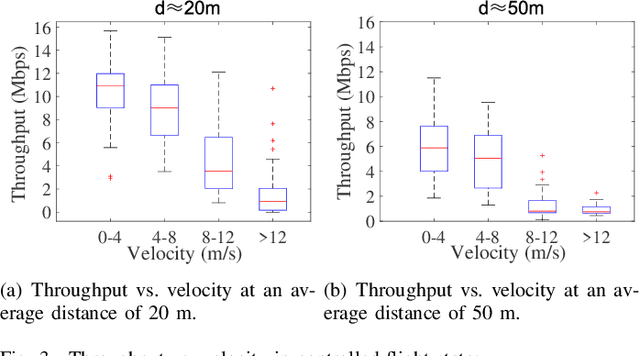

Abstract: Recent advances in unmanned aerial vehicle (UAV) technology have revolutionized a broad class of civil and military applications. However, designing wireless technologies that enable real-time streaming of high-definition video between UAVs and ground clients remains a conundrum. Most existing adaptive bitrate (ABR) algorithms are not optimized for air-to-ground links, which usually fluctuate dramatically due to the dynamic flight states of the UAV. In this paper, we present SA-ABR, a new sensor-augmented system that generates ABR video streaming algorithms with the assistance of the various kinds of inherent sensor data used to pilot UAVs. By combining this inherent sensor data with network observations, SA-ABR trains a deep reinforcement learning (DRL) model to extract salient features from the flight state information and automatically learn an ABR algorithm that adapts to the varying UAV channel capacity. SA-ABR does not rely on any assumptions or models about the UAV's flight states or the environment. Instead, it makes decisions by exploiting temporal properties of past throughput through a long short-term memory (LSTM) network, which lets it adapt to a wide range of highly dynamic environments. We have implemented SA-ABR on a commercial UAV and evaluated it in the wild. We compare SA-ABR with a variety of existing state-of-the-art ABR algorithms, and the results show that our system outperforms the best known existing ABR algorithm by 21.4% in terms of the average quality of experience (QoE) reward.
