Xue-She Wang

Magic-Me: Identity-Specific Video Customized Diffusion

Feb 14, 2024

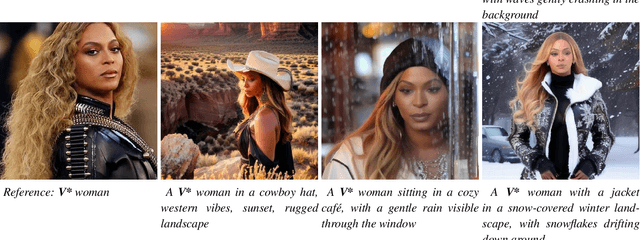

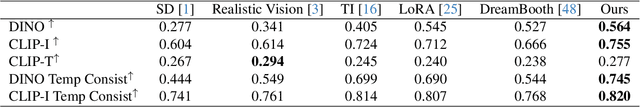

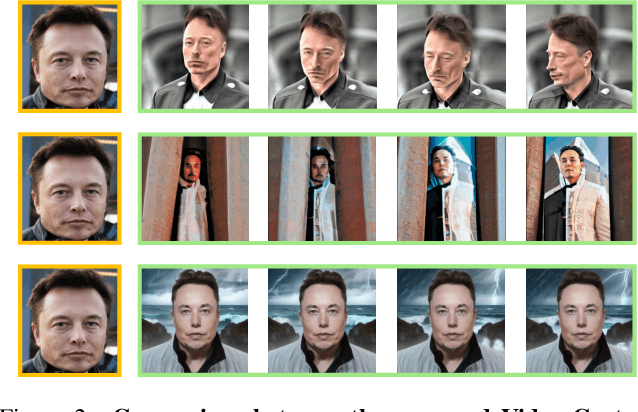

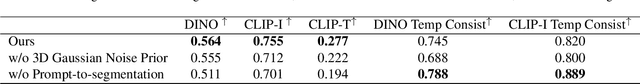

Abstract: Creating content for a specific identity (ID) has attracted significant interest in the field of generative models. In text-to-image (T2I) generation, subject-driven content generation has made great progress, with the ID in the images now controllable. However, extending it to video generation remains underexplored. In this work, we propose a simple yet effective framework for subject-identity-controllable video generation, termed Video Custom Diffusion (VCD). Given a subject ID defined by a few images, VCD reinforces identity information extraction and injects frame-wise correlation at the initialization stage, producing stable video outputs that largely preserve the identity. To achieve this, we propose three novel components that are essential for high-quality ID preservation: 1) an ID module trained on the identity cropped by prompt-to-segmentation, which disentangles the ID information from background noise for more accurate ID token learning; 2) a text-to-video (T2V) VCD module with a 3D Gaussian Noise Prior for better inter-frame consistency; and 3) video-to-video (V2V) Face VCD and Tiled VCD modules that deblur the face and upscale the video to a higher resolution. Despite its simplicity, extensive experiments verify that VCD generates stable, high-quality videos with better ID preservation than the selected strong baselines. Moreover, because the ID module is transferable, VCD also works well with publicly available fine-tuned text-to-image models, further improving its usability. The code is available at https://github.com/Zhen-Dong/Magic-Me.
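The abstract does not spell out how frame-wise correlation is injected at initialization. One common way to obtain correlated initial noise, shown here as a minimal sketch and not as the paper's implementation, is to mix a single shared noise map with independent per-frame maps. The function name `correlated_frame_noise`, the mixing weight `gamma`, and the latent shape are all assumptions made for illustration.

```python
import numpy as np

def correlated_frame_noise(num_frames, frame_shape, gamma, rng=None):
    """Sample unit-variance Gaussian noise whose frames are pairwise correlated.

    Hypothetical helper (not from the Magic-Me code base): `gamma` in [0, 1]
    is the assumed correlation between the same element in any two frames.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    rng = np.random.default_rng() if rng is None else rng
    # One noise map shared by every frame.
    shared = rng.standard_normal(frame_shape)
    # One independent noise map per frame.
    independent = rng.standard_normal((num_frames, *frame_shape))
    # Variance per element: gamma * 1 + (1 - gamma) * 1 = 1.
    # Covariance between two frames: gamma (from the shared term only).
    return np.sqrt(gamma) * shared + np.sqrt(1.0 - gamma) * independent

# Example: 16 frames of a 4-channel 64x64 latent (shape chosen arbitrarily).
noise = correlated_frame_noise(16, (4, 64, 64), gamma=0.3,
                               rng=np.random.default_rng(0))
```

Setting `gamma=0` recovers fully independent per-frame noise, while `gamma=1` gives every frame the same noise map; intermediate values trade per-frame variety for inter-frame consistency.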

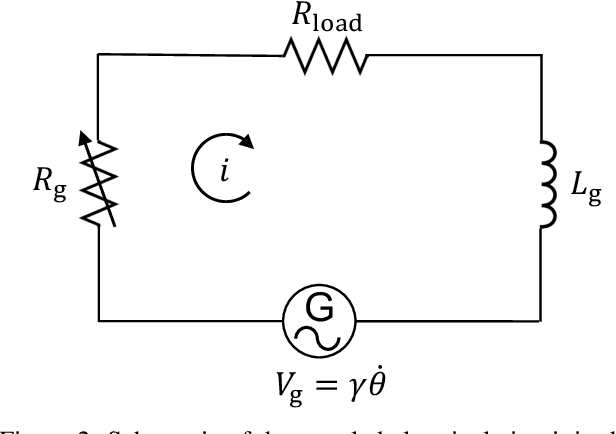

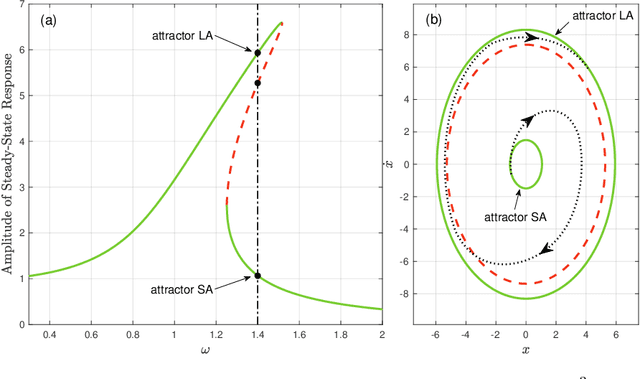

Attractor Selection in Nonlinear Energy Harvesting Using Deep Reinforcement Learning

Oct 03, 2020

Abstract: Recent research efforts demonstrate that the intentional use of nonlinearity enhances the capabilities of energy harvesting systems. One of the primary challenges in nonlinear harvesters is that nonlinearities often produce multiple coexisting attractors, some with desirable and some with undesirable responses. This paper presents a nonlinear energy harvester that is based on translation-to-rotational magnetic transmission and exhibits coexisting attractors with different levels of electric power output. In addition, a control method using deep reinforcement learning is proposed to switch between coexisting attractors under constrained actuation.

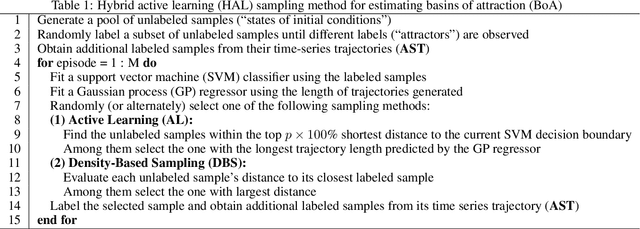

A Data-Efficient Sampling Method for Estimating Basins of Attraction Using Hybrid Active Learning (HAL)

Mar 24, 2020

Abstract: Although basins of attraction (BoA) diagrams are an insightful tool for understanding the behavior of nonlinear systems, generating them is either computationally expensive in simulation or difficult and cost-prohibitive in experiments. This paper introduces a data-efficient sampling method for estimating BoA. The proposed method is based on hybrid active learning (HAL) and is designed to find and label the "informative" samples, which efficiently determine the boundary of BoA. It consists of three primary parts: 1) additional sampling on trajectories (AST) to maximize the number of samples obtained from each simulation or experiment; 2) an active learning (AL) algorithm to exploit the local boundary of BoA; and 3) a density-based sampling (DBS) method to explore the global boundary of BoA. An example of estimating the BoA for a bistable nonlinear system is presented to show the high efficiency of our HAL sampling method.
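The idea behind additional sampling on trajectories can be illustrated with a small sketch: every state visited by a trajectory converges to the same attractor as its starting point, so one simulation yields many labelled samples. The code below assumes an unforced double-well oscillator as the bistable system and uses hypothetical names (`bistable_rhs`, `simulate`, `labelled_samples_from_trajectory`); the system, parameters, and labelling rule are illustrative choices, not the setup used in the paper.

```python
import numpy as np

def bistable_rhs(state, damping=0.5):
    """Assumed test system: x'' + damping * x' - x + x**3 = 0 (wells at x = +/-1)."""
    x, v = state
    return np.array([v, -damping * v + x - x**3])

def simulate(initial_state, dt=0.01, steps=5000):
    """Integrate with classical fourth-order Runge-Kutta; return the full trajectory."""
    traj = np.empty((steps + 1, 2))
    traj[0] = initial_state
    for k in range(steps):
        s = traj[k]
        k1 = bistable_rhs(s)
        k2 = bistable_rhs(s + 0.5 * dt * k1)
        k3 = bistable_rhs(s + 0.5 * dt * k2)
        k4 = bistable_rhs(s + dt * k3)
        traj[k + 1] = s + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return traj

def labelled_samples_from_trajectory(initial_state, stride=100):
    """Label one trajectory by its final well and reuse that label for
    every `stride`-th state along the way."""
    traj = simulate(initial_state)
    label = 1 if traj[-1, 0] > 0 else -1   # +1: right well, -1: left well
    points = traj[::stride]
    labels = np.full(len(points), label)
    return points, labels

points, labels = labelled_samples_from_trajectory(np.array([0.5, 0.0]))
```

A single call here returns dozens of labelled phase-space points for the cost of one simulation, which is the data-efficiency argument in miniature. The active learning and density-based sampling steps would then decide where to start the next trajectory.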

Constrained Attractor Selection Using Deep Reinforcement Learning

Sep 26, 2019

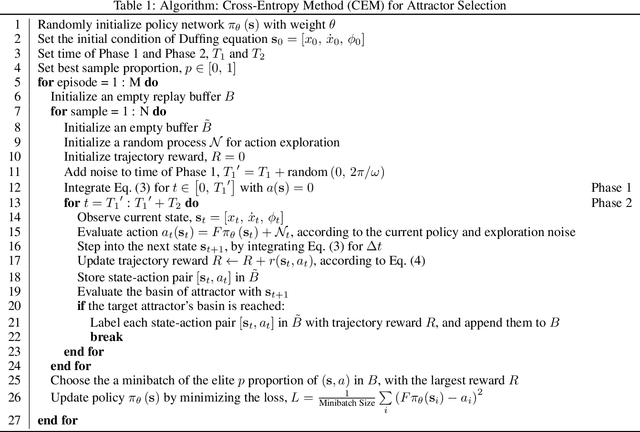

Abstract: This paper describes an approach for attractor selection in nonlinear dynamical systems with constrained actuation. Attractor selection is achieved using two different deep reinforcement learning methods: 1) the cross-entropy method (CEM) and 2) the deep deterministic policy gradient (DDPG) method. The framework and algorithms for applying these control methods are presented. Experiments were performed on a Duffing oscillator, as it is a classic nonlinear dynamical system with multiple attractors. Both methods achieve attractor selection under various control constraints. While these methods have nearly identical success rates, the DDPG method has the advantages of a high learning rate, low performance variance, and a smooth control approach. This experiment demonstrates the applicability of reinforcement learning to constrained attractor selection problems.
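For readers unfamiliar with the cross-entropy method, its core loop is: sample candidate parameters from a Gaussian, keep the best-scoring fraction, and refit the Gaussian to those elites. The sketch below is a generic version on a toy quadratic objective, which stands in for the episode return a controller would earn; it is not the paper's implementation, and the function name, hyperparameters, and objective are assumptions.

```python
import numpy as np

def cross_entropy_method(score_fn, dim, iterations=30, population=64,
                         elite_frac=0.2, init_std=2.0, seed=0):
    """Generic CEM: maximize `score_fn` over a `dim`-dimensional parameter vector."""
    rng = np.random.default_rng(seed)
    mean = np.zeros(dim)
    std = np.full(dim, init_std)
    n_elite = max(1, int(population * elite_frac))
    for _ in range(iterations):
        # Sample a population of candidate parameter vectors.
        candidates = mean + std * rng.standard_normal((population, dim))
        scores = np.array([score_fn(c) for c in candidates])
        # Keep the highest-scoring candidates (the elites).
        elite = candidates[np.argsort(scores)[-n_elite:]]
        # Refit the sampling distribution to the elites; the small floor
        # keeps the standard deviation from collapsing to exactly zero.
        mean = elite.mean(axis=0)
        std = elite.std(axis=0) + 1e-3
    return mean

# Toy objective (assumed): higher score the closer the parameters are to `target`.
target = np.array([1.0, -2.0, 0.5])
best = cross_entropy_method(lambda p: -np.sum((p - target) ** 2), dim=3)
```

In a control setting, `score_fn` would run one or more episodes with the candidate policy parameters and return the accumulated reward, with actuation constraints enforced inside the environment or the policy output.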
