James D. Turner

A Data-Efficient Sampling Method for Estimating Basins of Attraction Using Hybrid Active Learning (HAL)

Mar 24, 2020

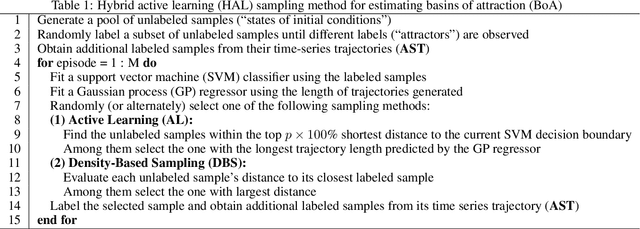

Abstract: Although basins of attraction (BoA) diagrams are an insightful tool for understanding the behavior of nonlinear systems, generating these diagrams is either computationally expensive in simulation or difficult and cost-prohibitive in experiments. This paper introduces a data-efficient sampling method for estimating BoA. The proposed method is based on hybrid active learning (HAL) and is designed to find and label the "informative" samples, which efficiently determine the boundary of the BoA. It consists of three primary parts: 1) additional sampling on trajectories (AST) to maximize the number of samples obtained from each simulation or experiment; 2) an active learning (AL) algorithm to exploit the local boundary of the BoA; and 3) a density-based sampling (DBS) method to explore the global boundary of the BoA. An example of estimating the BoA for a bistable nonlinear system is presented to show the high efficiency of the HAL sampling method.
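The idea behind additional sampling on trajectories can be illustrated with a minimal sketch. It is one reading of the abstract, not the paper's confirmed procedure. It assumes an unforced, damped bistable Duffing oscillator with stable equilibria at x = +1 and x = -1. The system, the damping value, and the names `duffing_step` and `labeled_samples` are all hypothetical. For an autonomous system, every state visited by a trajectory ends at the same attractor as the initial state, so one simulation can yield many labeled samples.

```python
def duffing_step(x, v, dt, delta=0.3):
    """One RK4 step of x'' + delta*x' - x + x**3 = 0 (assumed test system)."""
    def f(x, v):
        return v, -delta * v + x - x ** 3

    k1x, k1v = f(x, v)
    k2x, k2v = f(x + 0.5 * dt * k1x, v + 0.5 * dt * k1v)
    k3x, k3v = f(x + 0.5 * dt * k2x, v + 0.5 * dt * k2v)
    k4x, k4v = f(x + dt * k3x, v + dt * k3v)
    x_new = x + dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
    v_new = v + dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
    return x_new, v_new


def labeled_samples(x0, v0, dt=0.01, n_steps=6000, keep_every=100):
    """Simulate once, then label sub-sampled trajectory points by the final well."""
    trajectory = [(x0, v0)]
    x, v = x0, v0
    for _ in range(n_steps):
        x, v = duffing_step(x, v, dt)
        trajectory.append((x, v))
    # Hypothetical labeling rule: +1 for the well at x = +1, -1 for x = -1.
    label = 1 if x > 0 else -1
    # Each retained state shares the label of the trajectory it lies on.
    return [(px, pv, label) for px, pv in trajectory[::keep_every]]


samples = labeled_samples(0.5, 0.0)
```

A single run from `(0.5, 0.0)` produces dozens of labeled phase-space points instead of one, which is the data-efficiency gain that part 1) of the method aims for.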

Constrained Attractor Selection Using Deep Reinforcement Learning

Sep 26, 2019

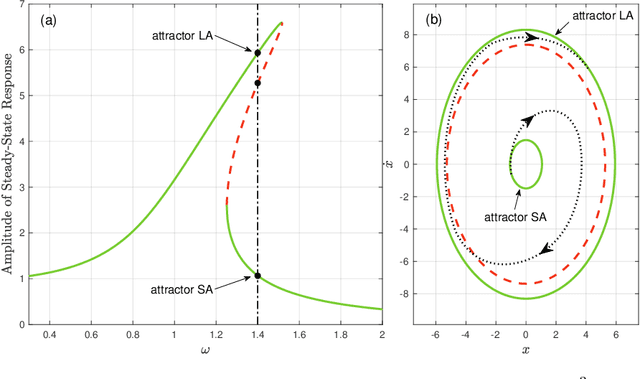

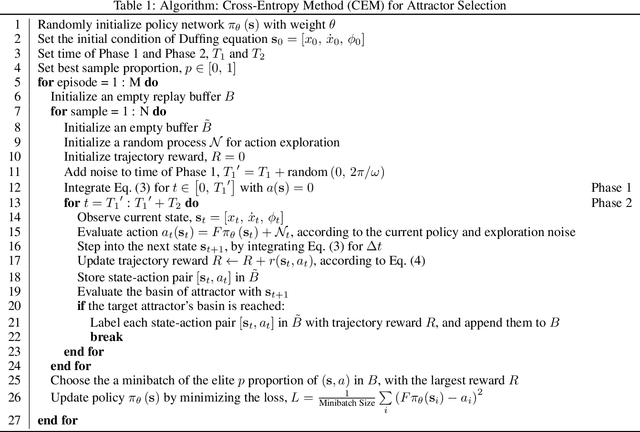

Abstract: This paper describes an approach for attractor selection in nonlinear dynamical systems with constrained actuation. Attractor selection is achieved using two different deep reinforcement learning methods: 1) the cross-entropy method (CEM) and 2) the deep deterministic policy gradient (DDPG) method. The framework and algorithms for applying these control methods are presented. Experiments were performed on a Duffing oscillator because it is a classic nonlinear dynamical system with multiple attractors. Both methods achieve attractor selection under various control constraints. While these methods have nearly identical success rates, the DDPG method has the advantages of a high learning rate, low performance variance, and a smooth control approach. This experiment demonstrates the applicability of reinforcement learning to constrained attractor selection problems.
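For readers unfamiliar with the cross-entropy method, the sketch below shows its generic loop: sample candidates from a Gaussian, keep the lowest-cost "elite" fraction, and refit the Gaussian to them. It is a toy illustration under stated assumptions, not the paper's implementation. The paper applies CEM to a control policy for a Duffing oscillator, whereas here a scalar quadratic cost stands in for the policy evaluation. The function name `cross_entropy_minimize` and all hyperparameters are hypothetical.

```python
import random
import statistics


def cross_entropy_minimize(cost, mu=0.0, sigma=2.0, pop_size=50,
                           n_elite=10, n_iters=30, seed=0):
    """Generic 1-D cross-entropy method; returns the final sampling mean."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    for _ in range(n_iters):
        # Sample a population of candidate parameters.
        candidates = [rng.gauss(mu, sigma) for _ in range(pop_size)]
        # Keep the candidates with the lowest cost.
        elite = sorted(candidates, key=cost)[:n_elite]
        # Refit the sampling distribution to the elite set.
        mu = statistics.fmean(elite)
        sigma = statistics.pstdev(elite)
    return mu


# Toy stand-in for a policy cost: minimized at u = 3.
best = cross_entropy_minimize(lambda u: (u - 3.0) ** 2)
```

In a reinforcement learning setting, each candidate would be a vector of policy parameters and `cost` would be the negative return of an episode run under the actuation constraint.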
