Xizi Chen

Partial Knowledge Distillation for Alleviating the Inherent Inter-Class Discrepancy in Federated Learning

Nov 23, 2024

Abstract: Substantial efforts have been devoted to alleviating the impact of the long-tailed class distribution in federated learning. In this work, we observe an interesting phenomenon: weak classes consistently exist even in class-balanced learning. These weak classes, unlike the minority classes studied in previous works, are inherent to the data and remain fairly consistent across network structures and learning paradigms. The inherent inter-class accuracy discrepancy can reach over 36.9% for federated learning on the FashionMNIST and CIFAR-10 datasets, even when the class distribution is balanced both globally and locally. In this study, we empirically analyze the potential reason for this phenomenon. Furthermore, a class-specific partial knowledge distillation method is proposed to improve the model's classification accuracy for weak classes. In this approach, knowledge transfer is initiated upon the occurrence of specific misclassifications within certain weak classes. Experimental results show that the accuracy of weak classes can be improved by 10.7%, effectively reducing the inherent inter-class discrepancy.
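As a rough illustration of the "partial" idea in this abstract, the sketch below applies a distillation term only when a sample from a designated weak class is misclassified by the student. The abstract does not give the loss or the trigger rule, so everything here is an assumption: the function name `partial_kd_loss`, the weighting `alpha`, the `temperature`, and the choice to trigger on any misclassification of a weak-class sample.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Standard cross-entropy for a single sample."""
    return -math.log(softmax(logits)[label])


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions with q > 0 everywhere."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def partial_kd_loss(student_logits, teacher_logits, label, weak_classes,
                    alpha=0.5, temperature=2.0):
    """Return (loss, triggered).

    Assumed trigger rule (not taken from the paper): distill only when the
    true label is a weak class AND the student's top-1 prediction is wrong.
    Otherwise the loss is plain cross-entropy.
    """
    ce = cross_entropy(student_logits, label)
    predicted = max(range(len(student_logits)), key=student_logits.__getitem__)
    triggered = label in weak_classes and predicted != label
    if not triggered:
        return ce, False
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # The temperature**2 factor is the usual scaling for softened targets.
    kd = kl_divergence(p_teacher, p_student) * temperature ** 2
    return (1 - alpha) * ce + alpha * kd, True
```

Gating the distillation term this way leaves samples from the remaining classes on the ordinary training objective, which is one plausible reading of "class-specific partial" transfer.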

A 137.5 TOPS/W SRAM Compute-in-Memory Macro with 9-b Memory Cell-Embedded ADCs and Signal Margin Enhancement Techniques for AI Edge Applications

Jul 19, 2023

Abstract: In this paper, we propose a high-precision SRAM-based CIM macro that can perform 4x4-bit MAC operations and yield 9-bit signed output. The inherent discharge branches of SRAM cells are utilized to apply time-modulated MAC and 9-bit ADC readout operations on two bit-line capacitors. The same principle is used for both MAC and A-to-D conversion, ensuring high linearity and thus supporting a large number of analog MAC accumulations. The memory cell-embedded ADC eliminates the use of separate ADCs and enhances energy and area efficiency. Additionally, two signal margin enhancement techniques, namely the MAC-folding and boosted-clipping schemes, are proposed to further improve the CIM computation accuracy.

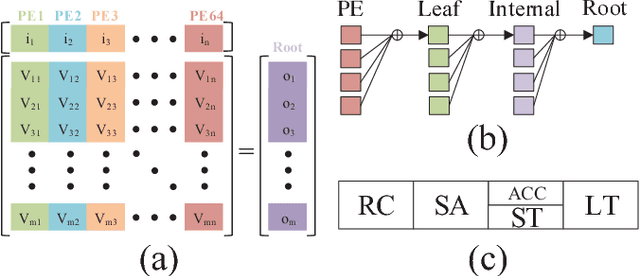

Tight Compression: Compressing CNN Through Fine-Grained Pruning and Weight Permutation for Efficient Implementation

Apr 03, 2021

Abstract: The unstructured sparsity after pruning poses a challenge to the efficient implementation of deep learning models in existing regular architectures like systolic arrays. On the other hand, coarse-grained structured pruning is suitable for implementation in regular architectures but tends to have higher accuracy loss than unstructured pruning when the pruned models are of the same size. In this work, we propose a model compression method based on a novel weight permutation scheme to fully exploit the fine-grained weight sparsity in the hardware design. Through permutation, the optimal arrangement of the weight matrix is obtained, and the sparse weight matrix is further compressed to a small and dense format to make full use of the hardware resources. Two pruning granularities are explored. In addition to the unstructured weight pruning, we also propose a more fine-grained subword-level pruning to further improve the compression performance. Compared with state-of-the-art works, the matrix compression rate is significantly improved from 5.88x to 14.13x. As a result, the throughput and energy efficiency are improved by 2.75 and 1.86 times, respectively.

A Reconfigurable Winograd CNN Accelerator with Nesting Decomposition Algorithm for Computing Convolution with Large Filters

Feb 26, 2021

Abstract: Recent literature has found that convolutional neural networks (CNNs) with large filters perform well in some applications such as image semantic segmentation. The Winograd transformation helps to reduce the number of multiplications in a convolution but suffers from numerical instability when the convolution filter size gets large. This work proposes a nested Winograd algorithm to iteratively decompose a large filter into a sequence of 3x3 tiles, which can then be accelerated with a 3x3 Winograd algorithm. Compared with the state-of-the-art OLA-Winograd algorithm, the proposed algorithm reduces the multiplications by 1.41 to 3.29 times for computing 5x5 to 9x9 convolutions.
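The decomposition step rests on the linearity of convolution: a large filter, zero-padded to a multiple of 3, can be split into 3x3 tiles whose partial results sum to the full result. The sketch below checks only that identity in plain Python. It is not the paper's nested algorithm and includes no Winograd transform; `correlate2d_valid` stands in for whatever 3x3 routine would be accelerated, and all function names are illustrative assumptions.

```python
def correlate2d_valid(image, kernel):
    """Direct 'valid' 2D correlation (the convolution used in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    oh = len(image) - kh + 1
    ow = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(ow)]
            for i in range(oh)]


def split_into_3x3_tiles(kernel):
    """Zero-pad a square kernel to a multiple of 3 and cut it into 3x3 tiles.

    Returns a list of (row_offset, col_offset, tile).
    """
    k = len(kernel)
    padded = -(-k // 3) * 3  # round k up to the next multiple of 3
    grid = [[kernel[r][c] if r < k and c < k else 0 for c in range(padded)]
            for r in range(padded)]
    return [(r0, c0, [row[c0:c0 + 3] for row in grid[r0:r0 + 3]])
            for r0 in range(0, padded, 3)
            for c0 in range(0, padded, 3)]


def tiled_correlate(image, kernel):
    """Compute the large-kernel result as a sum of 3x3 correlations."""
    k = len(kernel)
    oh = len(image) - k + 1
    ow = len(image[0]) - k + 1
    extra = -(-k // 3) * 3 - k
    # Zero-pad the image at the bottom/right to match the padded kernel.
    width = len(image[0]) + extra
    img = [list(row) + [0] * extra for row in image]
    img += [[0] * width for _ in range(extra)]
    out = [[0] * ow for _ in range(oh)]
    for r0, c0, tile in split_into_3x3_tiles(kernel):
        # Shifted input window for this tile; a 3x3 Winograd kernel could
        # replace correlate2d_valid here.
        sub = [row[c0:c0 + ow + 2] for row in img[r0:r0 + oh + 2]]
        partial = correlate2d_valid(sub, tile)
        for i in range(oh):
            for j in range(ow):
                out[i][j] += partial[i][j]
    return out
```

With integer inputs, `tiled_correlate(image, kernel)` matches `correlate2d_valid(image, kernel)` exactly for square kernels of size 5, 7, or 9, since the padded zeros contribute nothing to the sum.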

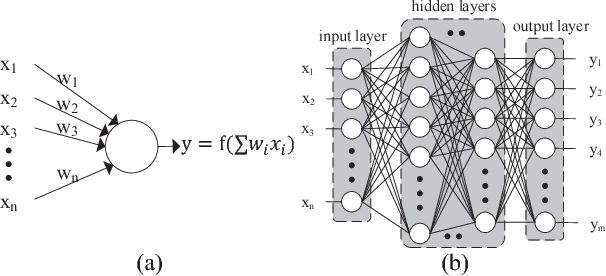

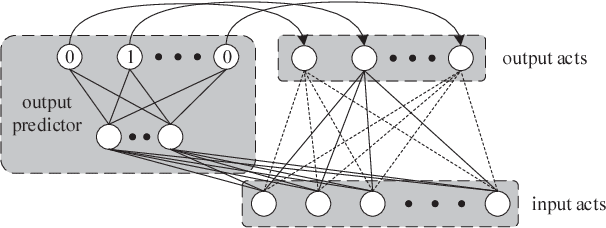

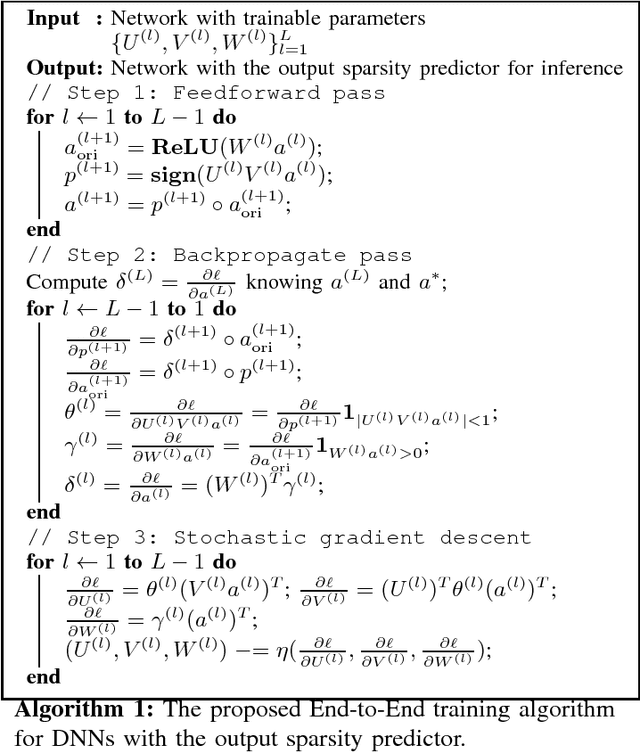

SparseNN: An Energy-Efficient Neural Network Accelerator Exploiting Input and Output Sparsity

Nov 03, 2017

Abstract: Contemporary deep neural networks (DNNs) contain millions of synaptic connections with tens to hundreds of layers. The large computation and memory requirements pose a challenge to the hardware design. In this work, we leverage the intrinsic activation sparsity of DNNs to substantially reduce the execution cycles and the energy consumption. An end-to-end training algorithm is proposed to develop a lightweight run-time predictor for the output activation sparsity on the fly. From our experimental results, the computation overhead of the prediction phase can be reduced to less than 5% of the original feedforward phase with negligible accuracy loss. Furthermore, an energy-efficient hardware architecture, SparseNN, is proposed to exploit both the input and output sparsity. SparseNN is a scalable architecture with distributed memories and processing elements connected through a dedicated on-chip network. Compared with the state-of-the-art accelerators which only exploit the input sparsity, SparseNN can achieve a 10%-70% improvement in throughput and a power reduction of around 50%.
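To make the two kinds of sparsity concrete, the sketch below computes a fully connected ReLU layer while skipping zero-valued inputs (input sparsity) and skipping rows that a predictor marks as inactive (output sparsity). The predictor itself is not modelled: `predicted_active` is simply passed in as a mask, and the function names and the multiply-accumulate counter are assumptions for illustration, not the SparseNN design.

```python
def dense_fc_relu(weights, inputs):
    """Reference dense layer: ReLU(W x), counting every multiply-accumulate."""
    outputs, macs = [], 0
    for row in weights:
        acc = 0.0
        for w, x in zip(row, inputs):
            acc += w * x
            macs += 1
        outputs.append(max(acc, 0.0))
    return outputs, macs


def sparse_fc_relu(weights, inputs, predicted_active):
    """Same layer, skipping zero inputs and rows predicted to be inactive.

    predicted_active[i] is True when output i is expected to be non-zero
    after ReLU. A wrong 'False' prediction would zero out a live output,
    which is where any accuracy loss from prediction would come from.
    """
    nonzero_inputs = [(j, x) for j, x in enumerate(inputs) if x != 0]
    outputs = [0.0] * len(weights)
    macs = 0
    for i, row in enumerate(weights):
        if not predicted_active[i]:
            continue  # output sparsity: skip the whole row
        acc = 0.0
        for j, x in nonzero_inputs:  # input sparsity: skip zero activations
            acc += row[j] * x
            macs += 1
        outputs[i] = max(acc, 0.0)
    return outputs, macs
```

When the mask is exact, the sparse result equals the dense one while the multiply-accumulate count drops in proportion to both the zero inputs and the skipped outputs.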
