Xiong Xiong

Physics-Informed Neural Networks and Neural Operators for Parametric PDEs: A Human-AI Collaborative Analysis

Nov 06, 2025

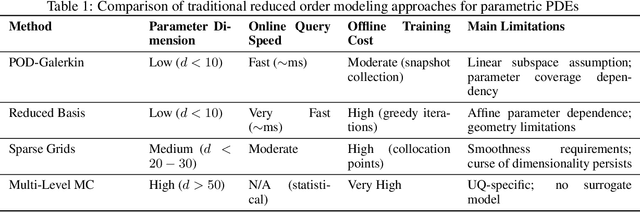

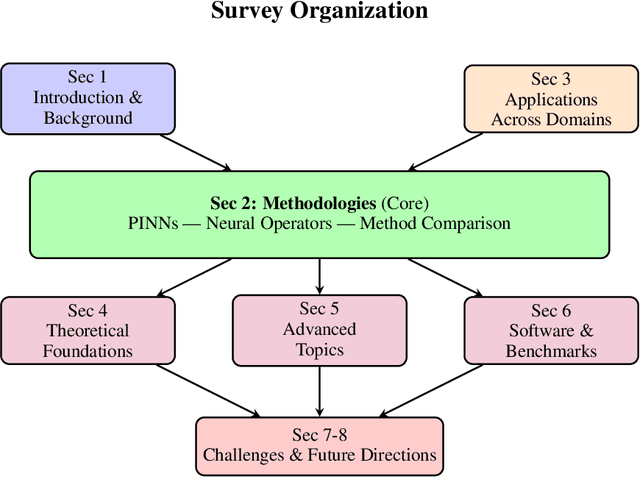

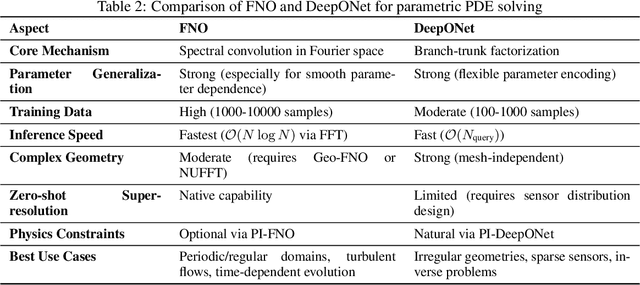

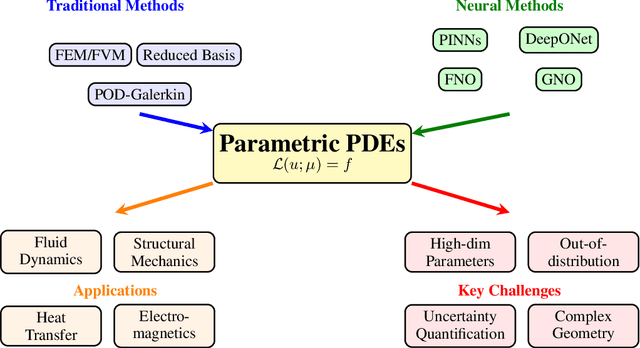

Abstract: Partial differential equations (PDEs) arise ubiquitously in science and engineering, where solutions depend on parameters (physical properties, boundary conditions, geometry). Traditional numerical methods require re-solving the PDE for each parameter, making parameter space exploration prohibitively expensive. Recent machine learning advances, particularly physics-informed neural networks (PINNs) and neural operators, have revolutionized parametric PDE solving by learning solution operators that generalize across parameter spaces. We critically analyze two main paradigms: (1) PINNs, which embed physical laws as soft constraints and excel at inverse problems with sparse data, and (2) neural operators (e.g., DeepONet, Fourier Neural Operator), which learn mappings between infinite-dimensional function spaces and achieve unprecedented generalization. Through comparisons across fluid dynamics, solid mechanics, heat transfer, and electromagnetics, we show that neural operators can achieve computational speedups of $10^3$ to $10^5$ times over traditional solvers in multi-query scenarios, while maintaining comparable accuracy. We provide practical guidance for method selection, discuss theoretical foundations (universal approximation, convergence), and identify critical open challenges: high-dimensional parameters, complex geometries, and out-of-distribution generalization. This work establishes a unified framework for understanding parametric PDE solvers via operator learning, offering a comprehensive, incrementally updated resource for this rapidly evolving field.

Separated-Variable Spectral Neural Networks: A Physics-Informed Learning Approach for High-Frequency PDEs

Aug 01, 2025

Abstract: Solving high-frequency oscillatory partial differential equations (PDEs) is a critical challenge in scientific computing, with applications in fluid mechanics, quantum mechanics, and electromagnetic wave propagation. Traditional physics-informed neural networks (PINNs) suffer from spectral bias, limiting their ability to capture high-frequency solution components. We introduce Separated-Variable Spectral Neural Networks (SV-SNN), a novel framework that addresses these limitations by integrating separation of variables with adaptive spectral methods. Our approach features three key innovations: (1) decomposition of multivariate functions into products of univariate functions, enabling independent spatial and temporal networks; (2) adaptive Fourier spectral features with learnable frequency parameters for high-frequency capture; and (3) a theoretical framework based on singular value decomposition to quantify spectral bias. Comprehensive evaluation on benchmark problems, including the heat, Helmholtz, Poisson, and Navier-Stokes equations, demonstrates that SV-SNN achieves 1-3 orders of magnitude improvement in accuracy while reducing parameter count by over 90% and training time by 60%. These results establish SV-SNN as an effective solution to the spectral bias problem in neural PDE solving. The implementation will be made publicly available upon acceptance at https://github.com/xgxgnpu/SV-SNN.

Improved Motor Imagery Classification Using Adaptive Spatial Filters Based on Particle Swarm Optimization Algorithm

Oct 29, 2023

Abstract: As a typical self-paced brain-computer interface (BCI) system, the motor imagery (MI) BCI has been widely applied in fields such as robot control, stroke rehabilitation, and assistance for patients with stroke or spinal cord injury. Many studies have focused on the traditional spatial filters obtained through the common spatial pattern (CSP) method. However, the CSP method can only obtain fixed spatial filters for specific input signals. Moreover, the CSP method focuses only on the variance difference between two types of electroencephalogram (EEG) signals, so its ability to decode EEG signals is limited. To obtain more effective spatial filters that extract better spatial features and improve MI-EEG classification, this paper proposes an adaptive spatial filter solving method based on the particle swarm optimization (PSO) algorithm. A training and testing framework based on filter bank and spatial filters (FBCSP-ASP) is designed for MI-EEG signal classification. Comparative experiments are conducted on two public datasets (2a and 2b) from BCI competition IV, which show the outstanding average recognition accuracy of FBCSP-ASP. The proposed method achieves a significant performance improvement on MI-BCI, reaching classification accuracies of 74.61% and 81.19% on datasets 2a and 2b, respectively. Compared with the baseline algorithm (FBCSP), the proposed algorithm improves accuracy by 11.44% and 7.11% on the two datasets, respectively. Furthermore, analysis based on mutual information, t-SNE, and Shapley values further shows that ASP features have excellent decoding ability for MI-EEG signals and explains the improvement in classification performance brought by the introduction of ASP features.

Enhancing Motor Imagery Decoding in Brain Computer Interfaces using Riemann Tangent Space Mapping and Cross Frequency Coupling

Oct 29, 2023

Abstract: Objective: Motor Imagery (MI) serves as a crucial experimental paradigm within the realm of Brain Computer Interfaces (BCIs), aiming to decode motor intentions from electroencephalogram (EEG) signals. Method: Drawing inspiration from Riemannian geometry and Cross-Frequency Coupling (CFC), this paper introduces a novel approach termed Riemann Tangent Space Mapping using Dichotomous Filter Bank with Convolutional Neural Network (DFBRTS) to enhance the representation quality and decoding capability of MI features. DFBRTS first filters EEG signals through a Dichotomous Filter Bank structured as a complete binary tree. Subsequently, it employs Riemann Tangent Space Mapping to extract salient EEG signal features within each sub-band. Finally, a lightweight convolutional neural network is employed for further feature extraction and classification, operating under the joint supervision of cross-entropy and center loss. To validate its efficacy, extensive experiments were conducted using DFBRTS on two well-established benchmark datasets: the BCI competition IV 2a (BCIC-IV-2a) dataset and the OpenBMI dataset. The performance of DFBRTS was benchmarked against several state-of-the-art MI decoding methods, alongside other Riemannian geometry-based MI decoding approaches. Results: DFBRTS significantly outperforms other MI decoding algorithms on both datasets, achieving a classification accuracy of 78.16% for four-class and 71.58% for two-class hold-out classification, as compared to the existing benchmarks.

Capped norm linear discriminant analysis and its applications

Nov 04, 2020

Abstract: Classical linear discriminant analysis (LDA) is based on the squared Frobenius norm and hence is sensitive to outliers and noise. To improve the robustness of LDA, in this paper we introduce the capped l_{2,1}-norm of a matrix, which employs the non-squared l_2-norm and a "capped" operation, and further propose a novel capped l_{2,1}-norm linear discriminant analysis, called CLDA. Due to the use of the capped l_{2,1}-norm, CLDA can effectively remove extreme outliers and suppress the effect of noisy data. In fact, CLDA can also be viewed as a weighted LDA. CLDA is solved through a series of generalized eigenvalue problems with theoretical convergence. The experimental results on an artificial data set, some UCI data sets, and two image data sets demonstrate the effectiveness of CLDA.
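
To make the "capped" operation concrete, the following is a minimal sketch, not the paper's implementation. It assumes the commonly used form of the capped l_{2,1}-norm, namely the sum over rows of min(||row||_2, eps). The function names, the threshold name `eps`, and the toy data are illustrative assumptions, and the abstract does not say which matrices CLDA applies the norm to.

```python
import math


def capped_l21_norm(matrix, eps):
    """Assumed definition: sum over rows of min(||row||_2, eps)."""
    return sum(min(math.sqrt(sum(x * x for x in row)), eps) for row in matrix)


def squared_frobenius_norm(matrix):
    """Sum of squared entries, the quantity classical LDA is built on."""
    return sum(x * x for row in matrix for x in row)


# Toy data (hypothetical): two ordinary rows and one extreme outlier row.
rows = [[3.0, 4.0], [0.6, 0.8], [300.0, 400.0]]

capped = capped_l21_norm(rows, eps=10.0)
frob = squared_frobenius_norm(rows)

print("capped l_{2,1} norm:", capped)
print("squared Frobenius norm:", frob)
```

In this toy case the outlier row contributes at most `eps` to the capped norm, whereas it dominates the squared Frobenius norm, which is the intuition behind the robustness claim.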

Multi-user Resource Control with Deep Reinforcement Learning in IoT Edge Computing

Jun 19, 2019

Abstract: By leveraging the concept of mobile edge computing (MEC), the massive amount of data generated by a large number of Internet of Things (IoT) devices can be offloaded to an MEC server at the edge of the wireless network for further computationally intensive processing. However, due to the resource constraints of IoT devices and the wireless network, both communication and computation resources need to be allocated and scheduled efficiently for better system performance. In this paper, we propose a joint computation offloading and multi-user scheduling algorithm for IoT edge computing systems to minimize the long-term average weighted sum of delay and power consumption under stochastic traffic arrival. We formulate the dynamic optimization problem as an infinite-horizon average-reward continuous-time Markov decision process (CTMDP) model. One critical challenge in solving this MDP problem for multi-user resource control is the curse of dimensionality, where the state space of the MDP model and the computational complexity increase exponentially with the number of users or IoT devices. To overcome this challenge, we use deep reinforcement learning (RL) techniques and propose a neural network architecture to approximate the value functions for the post-decision system states. The designed algorithm for solving the CTMDP problem supports a semi-distributed auction-based implementation, where the IoT devices submit bids to the base station (BS), which makes the resource control decisions centrally. Simulation results show that the proposed algorithm provides significant performance improvement over the baseline algorithms, and also outperforms RL algorithms based on other neural network architectures.
