Xinze Zhang

Rethinking Recurrent Neural Networks for Time Series Forecasting: A Reinforced Recurrent Encoder with Prediction-Oriented Proximal Policy Optimization

Jan 07, 2026

Abstract:Time series forecasting plays a crucial role in contemporary engineering information systems for supporting decision-making across various industries, where Recurrent Neural Networks (RNNs) have been widely adopted due to their capability in modeling sequential data. Conventional RNN-based predictors adopt an encoder-only strategy with sliding historical windows as inputs to forecast future values. However, this approach treats all time steps and hidden states equally without considering their distinct contributions to forecasting, leading to suboptimal performance. To address this limitation, we propose a novel Reinforced Recurrent Encoder with Prediction-oriented Proximal Policy Optimization, RRE-PPO4Pred, which significantly improves time series modeling capacity and forecasting accuracy of the RNN models. The core innovations of this method are: (1) A novel Reinforced Recurrent Encoder (RRE) framework that enhances RNNs by formulating their internal adaptation as a Markov Decision Process, creating a unified decision environment capable of learning input feature selection, hidden skip connection, and output target selection; (2) An improved Prediction-oriented Proximal Policy Optimization algorithm, termed PPO4Pred, which is equipped with a Transformer-based agent for temporal reasoning and develops a dynamic transition sampling strategy to enhance sampling efficiency; (3) A co-evolutionary optimization paradigm to facilitate the learning of the RNN predictor and the policy agent, providing adaptive and interactive time series modeling. Comprehensive evaluations on five real-world datasets indicate that our method consistently outperforms existing baselines, and attains accuracy better than state-of-the-art Transformer models, thus providing an advanced time series predictor in engineering informatics.
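The "sliding historical windows" setup mentioned in the abstract can be illustrated with a minimal sketch. This is not the paper's code: the function name `sliding_windows` and the parameters `window` and `horizon` are hypothetical, and the example only shows how a series is cut into (history, target) pairs for a conventional encoder-only predictor.

```python
from typing import List, Tuple


def sliding_windows(
    series: List[float], window: int, horizon: int
) -> List[Tuple[List[float], List[float]]]:
    """Cut a series into (history, target) pairs.

    Each history holds `window` consecutive values and each target holds
    the `horizon` values that immediately follow it. Names are illustrative.
    """
    samples = []
    last_start = len(series) - window - horizon
    for start in range(last_start + 1):
        history = series[start:start + window]
        target = series[start + window:start + window + horizon]
        samples.append((history, target))
    return samples


series = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
pairs = sliding_windows(series, window=3, horizon=2)
for history, target in pairs:
    print(history, "->", target)
```

Every value inside a window is passed to the predictor on equal footing here, which is the uniform treatment the abstract argues against.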

GLM-4.5: Agentic, Reasoning, and Coding (ARC) Foundation Models

Aug 08, 2025

Abstract:We present GLM-4.5, an open-source Mixture-of-Experts (MoE) large language model with 355B total parameters and 32B activated parameters, featuring a hybrid reasoning method that supports both thinking and direct response modes. Through multi-stage training on 23T tokens and comprehensive post-training with expert model iteration and reinforcement learning, GLM-4.5 achieves strong performance across agentic, reasoning, and coding (ARC) tasks, scoring 70.1% on TAU-Bench, 91.0% on AIME 24, and 64.2% on SWE-bench Verified. With much fewer parameters than several competitors, GLM-4.5 ranks 3rd overall among all evaluated models and 2nd on agentic benchmarks. We release both GLM-4.5 (355B parameters) and a compact version, GLM-4.5-Air (106B parameters), to advance research in reasoning and agentic AI systems. Code, models, and more information are available at https://github.com/zai-org/GLM-4.5.

Reinforced Decoder: Towards Training Recurrent Neural Networks for Time Series Forecasting

Jun 14, 2024

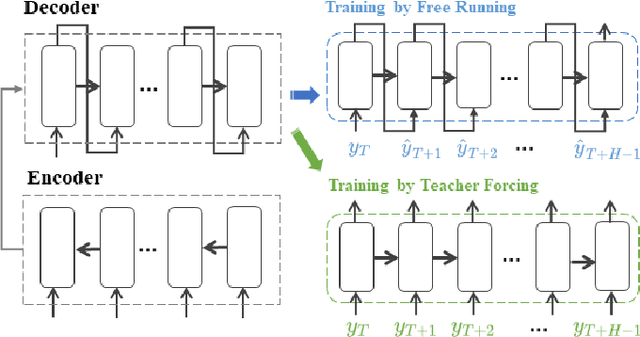

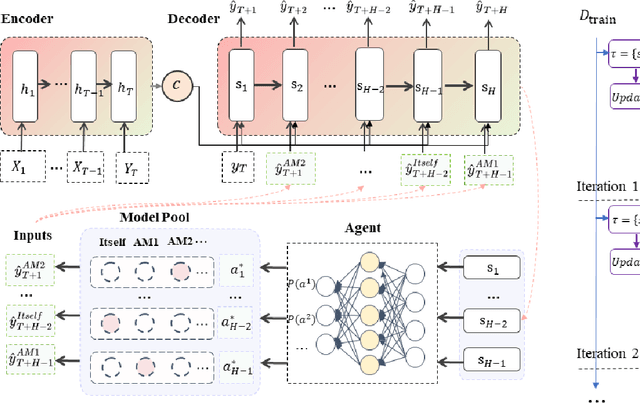

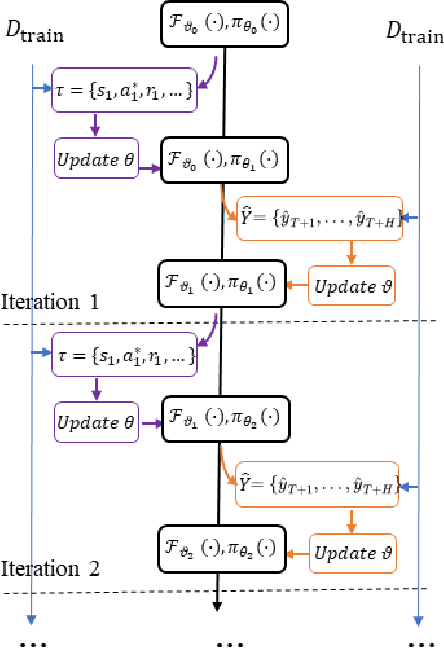

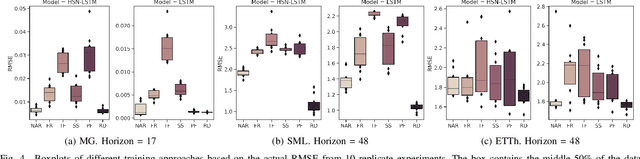

Abstract:Recurrent neural network-based sequence-to-sequence models have been extensively applied for multi-step-ahead time series forecasting. These models typically involve a decoder trained using either its previous forecasts or the actual observed values as the decoder inputs. However, relying on self-generated predictions can lead to the rapid accumulation of errors over multiple steps, while using the actual observations introduces exposure bias as these values are unavailable during the extrapolation stage. In this regard, this study proposes a novel training approach called reinforced decoder, which introduces auxiliary models to generate alternative decoder inputs that remain accessible when extrapolating. Additionally, a reinforcement learning algorithm is utilized to dynamically select the optimal inputs to improve accuracy. Comprehensive experiments demonstrate that our approach outperforms representative training methods over several datasets. Furthermore, the proposed approach also exhibits promising performance when generalized to self-attention-based sequence-to-sequence forecasting models.
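The two conventional decoder-input choices contrasted in the abstract can be sketched on a toy problem. This is only an illustration under stated assumptions, not the paper's method: the one-step model is a hypothetical scalar multiplier (`MODEL_COEF`) that slightly mismatches the true decay (`TRUE_COEF`), and `decode` is an invented helper. Feeding the decoder its own forecasts lets the error grow over the horizon, while feeding it observed values keeps the error small but depends on data that is not available when extrapolating.

```python
from typing import List, Optional

TRUE_COEF = 0.9   # assumed true dynamics: x[t+1] = 0.9 * x[t]
MODEL_COEF = 0.8  # assumed imperfect one-step model


def step_model(previous: float) -> float:
    """Hypothetical one-step-ahead predictor."""
    return MODEL_COEF * previous


def decode(first_input: float, horizon: int,
           observed: Optional[List[float]] = None) -> List[float]:
    """Roll the decoder forward for `horizon` steps.

    With `observed` given, the next input is the actual value
    (teacher-forcing style). Otherwise the next input is the decoder's
    own previous forecast (free-running style).
    """
    forecasts = []
    current = first_input
    for t in range(horizon):
        y_hat = step_model(current)
        forecasts.append(y_hat)
        current = observed[t] if observed is not None else y_hat
    return forecasts


last_value = 1.0
horizon = 5
future = [last_value * TRUE_COEF ** (k + 1) for k in range(horizon)]

free_running = decode(last_value, horizon)
teacher_forced = decode(last_value, horizon, observed=future)

free_err = [abs(f - y) for f, y in zip(free_running, future)]
forced_err = [abs(f - y) for f, y in zip(teacher_forced, future)]
print("free-running errors:  ", [round(e, 4) for e in free_err])
print("teacher-forced errors:", [round(e, 4) for e in forced_err])
```

In this toy setting the free-running error grows at every step of the horizon, whereas the teacher-forced error shrinks. The second mode cannot be used at extrapolation time because `future` is unknown then, which is the exposure-bias problem the abstract describes.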

Error-feedback Stochastic Configuration Strategy on Convolutional Neural Networks for Time Series Forecasting

Feb 03, 2020

Abstract:Despite the superiority of convolutional neural networks demonstrated in time series modeling and forecasting, the design of the network architecture and the tuning of the hyper-parameters have not been fully explored. Inspired by the iterative construction strategy for building a random multilayer perceptron, we propose a novel Error-feedback Stochastic Configuration (ESC) strategy to construct a random Convolutional Neural Network (ESC-CNN) for time series forecasting tasks, which builds the network architecture adaptively. The ESC strategy suggests that random filters and neurons of the error-feedback fully connected layer are incrementally added in a manner that they can steadily compensate the prediction error during the construction process, and a filter selection strategy is introduced to ensure that ESC-CNN holds the universal approximation property, providing helpful information at each iterative process for the prediction. The performance of ESC-CNN is justified on its prediction accuracy for one-step-ahead and multi-step-ahead forecasting tasks. Comprehensive experiments on a synthetic dataset and two real-world datasets show that the proposed ESC-CNN not only outperforms the state-of-the-art random neural networks, but also exhibits strong predictive power in comparison to trained Convolutional Neural Networks and Long Short-Term Memory models, demonstrating the effectiveness of ESC-CNN in time series forecasting.
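The idea of incrementally adding random units so that each one compensates the remaining prediction error can be sketched in a much simplified form. This is not ESC-CNN: the sketch assumes scalar inputs and random `tanh` units instead of convolutional filters, and the function name, candidate pool size, and weight range are all hypothetical. Each round draws several random candidates, keeps the one that removes the most residual error, and fits only its output weight by least squares.

```python
import math
import random
from typing import List, Tuple


def build_incrementally(
    xs: List[float], ys: List[float],
    n_nodes: int = 10, n_candidates: int = 20, seed: int = 0,
) -> Tuple[List[Tuple[float, float, float]], List[float]]:
    """Greedy error-feedback construction with random tanh units.

    Returns the chosen (w, b, beta) triples and the squared residual
    norm recorded before any unit and after each added unit.
    """
    rng = random.Random(seed)
    residual = list(ys)
    history = [sum(e * e for e in residual)]
    nodes = []
    for _ in range(n_nodes):
        best = None
        for _ in range(n_candidates):
            # Random hidden weights are drawn once and never trained.
            w, b = rng.uniform(-2.0, 2.0), rng.uniform(-2.0, 2.0)
            h = [math.tanh(w * x + b) for x in xs]
            hh = sum(v * v for v in h)
            if hh == 0.0:
                continue
            eh = sum(e * v for e, v in zip(residual, h))
            gain = eh * eh / hh  # reduction in squared residual norm
            if best is None or gain > best[0]:
                best = (gain, w, b, eh / hh, h)
        if best is None:
            break
        _, w, b, beta, h = best
        nodes.append((w, b, beta))
        # Error feedback: the next unit is fitted to what is still unexplained.
        residual = [e - beta * v for e, v in zip(residual, h)]
        history.append(sum(e * e for e in residual))
    return nodes, history


xs = [i / 10 for i in range(-20, 21)]
ys = [math.sin(2 * x) for x in xs]
nodes, history = build_incrementally(xs, ys)
print("squared residual before and after construction:", history[0], history[-1])
```

Because each output weight is the least-squares fit of the new unit to the current residual, the squared residual norm cannot increase when a unit is added. This mirrors the "steadily compensate the prediction error" property the abstract describes, in a far simpler setting.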
