Xinjun Cai

SHARK: A Lightweight Model Compression Approach for Large-scale Recommender Systems

Aug 18, 2023

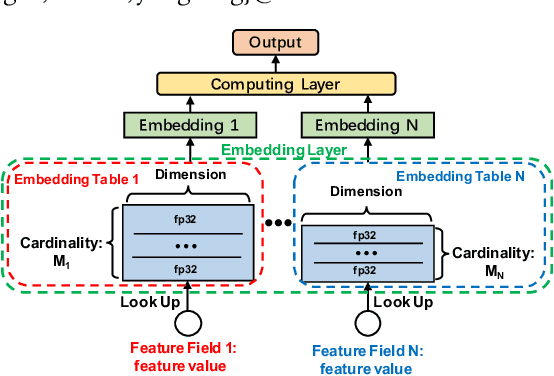

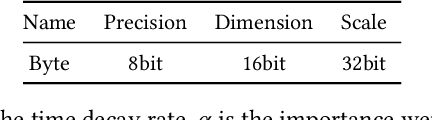

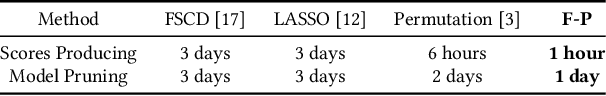

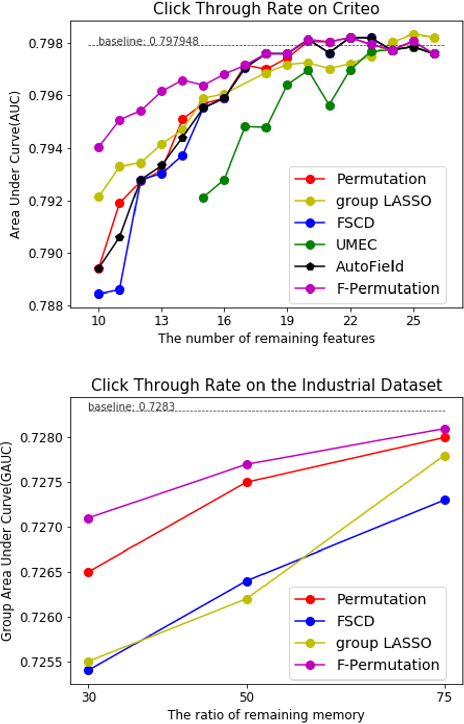

Abstract: Increasing the size of embedding layers has been shown to improve the performance of recommendation models, but it has gradually pushed model sizes in industrial recommender systems past the terabyte scale, raising computing and storage costs. To save resources while maintaining model performance, we propose SHARK, a model compression practice distilled from our experience with industrial recommender systems. SHARK consists of two main components. First, we use a novel importance score, the first-order term of the Taylor expansion, to prune embedding tables (feature fields). Second, we introduce a new row-wise quantization method that applies a different quantization strategy to each embedding. We conduct extensive experiments on both public and industrial datasets, demonstrating that each component of the SHARK framework outperforms previous approaches. We also conduct online A/B tests on multiple models at Kuaishou, including short-video, e-commerce, and advertising recommendation models. The results show that SHARK effectively reduces the memory footprint of the embedding layer. In the short-video scenario, the compressed model, with no performance drop, saves 70% of storage and thousands of machines, improves queries per second (QPS) by 30%, and has been deployed to serve hundreds of millions of users and process tens of billions of requests every day.
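The two components named in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the use of the absolute gradient-embedding dot product as the first-order Taylor score, and the per-row min/max uniform quantizer are all assumptions chosen for illustration, since the abstract does not give the exact formulas.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def taylor_field_importance(
    embeddings: Dict[str, List[Vector]],
    gradients: Dict[str, List[Vector]],
) -> Dict[str, float]:
    """Score each feature field by the mean of |g . e| over samples.

    Assumption: g . e is the first-order Taylor estimate of how much the
    loss changes if the field's embedding e were set to zero, where g is
    the loss gradient with respect to e.
    """
    scores = {}
    for field, rows in embeddings.items():
        total = 0.0
        for e, g in zip(rows, gradients[field]):
            total += abs(sum(gi * ei for gi, ei in zip(g, e)))
        scores[field] = total / len(rows)
    return scores


def quantize_row(row: Vector, bits: int = 8) -> Tuple[List[int], float, float]:
    """Uniformly quantize one embedding row using its own min and max.

    Returns integer codes plus the (scale, offset) needed to decode them.
    The bit width can differ per row, which is the row-wise idea.
    """
    levels = (1 << bits) - 1
    lo, hi = min(row), max(row)
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((x - lo) / scale) for x in row]
    return codes, scale, lo


def dequantize_row(codes: List[int], scale: float, lo: float) -> Vector:
    """Map integer codes back to approximate float values."""
    return [c * scale + lo for c in codes]
```

Under these assumptions, fields with the lowest scores would be the candidates for pruning, and each row's bit width could be chosen separately, for example from how often the row is accessed.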

HMSG: Heterogeneous Graph Neural Network based on Metapath Subgraph Learning

Sep 07, 2021

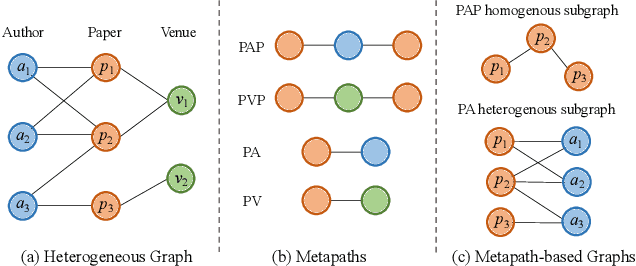

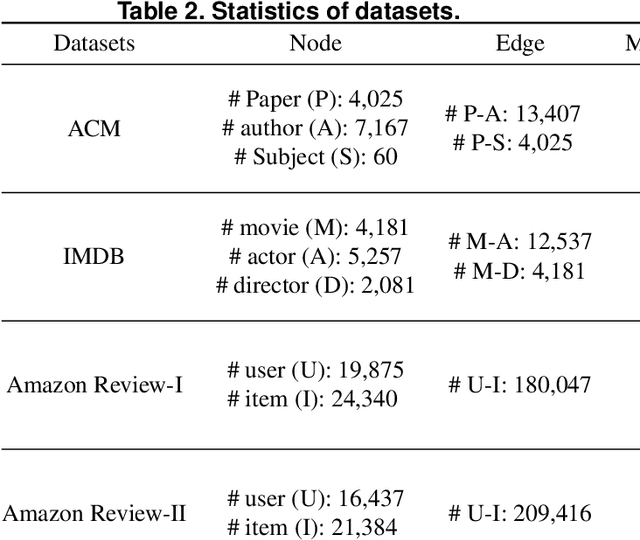

Abstract: Many kinds of real-world data can be represented as heterogeneous graphs with different types of nodes and connections. Heterogeneous graph neural network models aim to embed nodes or subgraphs into a low-dimensional vector space for downstream tasks such as node classification and link prediction. Although several models have been proposed recently, they either aggregate information only from neighbors of the same type, or treat homogeneous and heterogeneous neighbors indiscriminately. Based on these observations, we propose a new heterogeneous graph neural network model named HMSG that comprehensively captures structural, semantic, and attribute information from both homogeneous and heterogeneous neighbors. Specifically, we first decompose the heterogeneous graph into multiple metapath-based homogeneous and heterogeneous subgraphs, each of which carries specific semantic and structural information. Message aggregation methods are then applied to each subgraph independently, so that information can be learned in a more targeted and efficient manner. Through a type-specific attribute transformation, node attributes can also be transferred among different types of nodes. Finally, we fuse the information from the subgraphs to obtain the complete representation. Extensive experiments on several datasets for node classification, node clustering, and link prediction show that HMSG outperforms state-of-the-art baselines on all evaluation metrics.
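The decomposition step described in the abstract can be sketched as follows. This is an illustration only, not HMSG itself: the function names, the undirected edge list, the node-type dictionary, and the plain mean aggregator are assumptions, and the abstract does not specify which aggregation methods or fusion scheme the model actually uses.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def metapath_neighbors(
    edges: List[Tuple[str, str]],
    node_type: Dict[str, str],
    metapath: List[str],
) -> Dict[str, Set[str]]:
    """Build a metapath-based subgraph as a neighbor map.

    For every node whose type matches metapath[0], collect the nodes
    reachable by following the type sequence in `metapath` over
    undirected edges. A symmetric metapath such as ["A", "P", "A"]
    yields a homogeneous subgraph over type-A nodes.
    """
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)

    result = {}
    for start, t in node_type.items():
        if t != metapath[0]:
            continue
        frontier = {start}
        for next_type in metapath[1:]:
            frontier = {
                n for f in frontier for n in adj[f] if node_type[n] == next_type
            }
        frontier.discard(start)  # a node is not its own metapath neighbor
        result[start] = frontier
    return result


def mean_aggregate(
    features: Dict[str, List[float]],
    neighbors: Dict[str, Set[str]],
) -> Dict[str, List[float]]:
    """Average each node's features with those of its subgraph neighbors.

    Assumption: a simple mean stands in for the model's aggregator.
    """
    out = {}
    for node, nbrs in neighbors.items():
        group = [features[node]] + [features[n] for n in sorted(nbrs)]
        dim = len(features[node])
        out[node] = [sum(vec[i] for vec in group) / len(group) for i in range(dim)]
    return out
```

For an author-paper graph, calling `metapath_neighbors` with `["A", "P", "A"]` links co-authors, while `["A", "P"]` gives a heterogeneous author-to-paper subgraph. Each subgraph would be aggregated separately before the results are fused.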
