XinJun Mao

Self-Supervised Exploration via Temporal Inconsistency in Reinforcement Learning

Aug 24, 2022

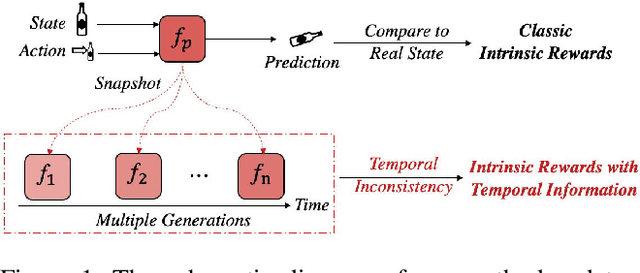

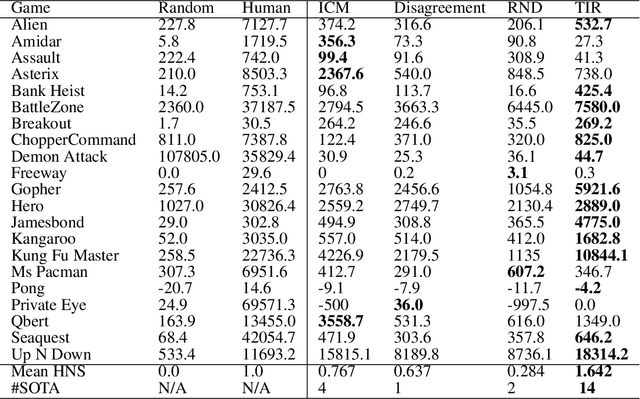

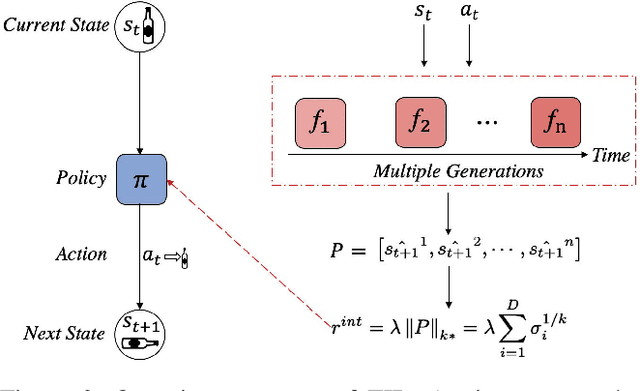

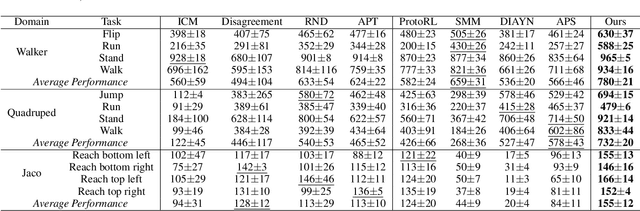

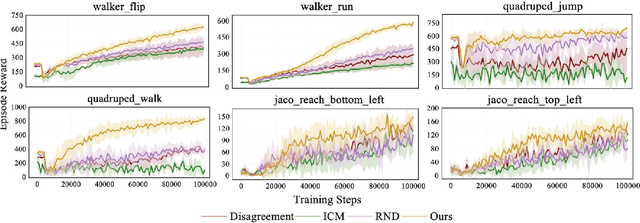

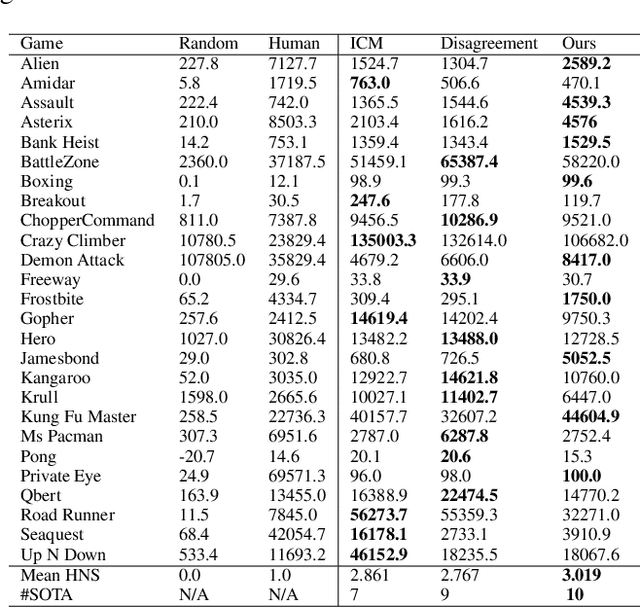

Abstract: In real-world scenarios, reinforcement learning under sparse-reward synergistic settings has remained challenging, despite surging interest in this field. Previous attempts suggest that intrinsic reward can alleviate the issue caused by sparsity. In this paper, we present a novel intrinsic reward inspired by human learning, as humans evaluate curiosity by comparing current observations with historical knowledge. Specifically, we train a self-supervised prediction model and save a set of snapshots of the model parameters, without incurring additional training cost. We then employ the nuclear norm to evaluate the temporal inconsistency between the predictions of different snapshots, which can be further deployed as the intrinsic reward. Moreover, a variational weighting mechanism is proposed to assign weights to different snapshots in an adaptive manner. We demonstrate the efficacy of the proposed method in various benchmark environments. The results suggest that our method achieves state-of-the-art performance, outperforming other intrinsic reward-based methods by a large margin, without incurring additional training cost and while maintaining higher noise tolerance. Our code will be released publicly to enhance reproducibility.
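The core idea of the abstract above, scoring disagreement between model snapshots with the nuclear norm, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `temporal_inconsistency_reward`, the uniform default weights (standing in for the variational weighting), and the centering step are all assumptions made for illustration.

```python
import numpy as np


def temporal_inconsistency_reward(snapshot_predictions, weights=None):
    """Nuclear norm of the weighted, centered stack of snapshot predictions.

    snapshot_predictions: array of shape (K, d), one prediction per snapshot.
    weights: optional array of shape (K,). Uniform weights are used when
        omitted (an assumption; the paper learns them variationally).
    """
    preds = np.asarray(snapshot_predictions, dtype=float)
    num_snapshots = preds.shape[0]
    if weights is None:
        weights = np.full(num_snapshots, 1.0 / num_snapshots)
    weights = np.asarray(weights, dtype=float)

    # Centering is an illustrative choice: identical predictions then
    # yield a zero matrix and therefore a zero reward.
    centered = preds - preds.mean(axis=0, keepdims=True)
    weighted = weights[:, None] * centered

    # ord="nuc" returns the sum of singular values of a 2-D array.
    return float(np.linalg.norm(weighted, ord="nuc"))
```

Under these assumptions, snapshots that agree on a state produce a reward near zero, while snapshots that disagree produce a positive reward that grows with the spread of their predictions.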

Dynamic Memory-based Curiosity: A Bootstrap Approach for Exploration

Aug 24, 2022

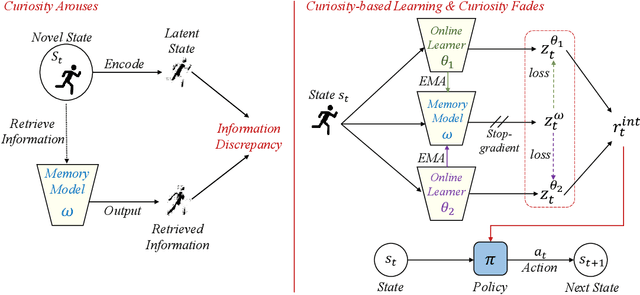

Abstract: The sparsity of extrinsic rewards poses a serious challenge for reinforcement learning (RL). Many efforts have been made on curiosity, which can provide a representative intrinsic reward for effective exploration. However, the challenge is still far from being solved. In this paper, we present a novel curiosity for RL, named DyMeCu, which stands for Dynamic Memory-based Curiosity. Inspired by human curiosity and information theory, DyMeCu consists of a dynamic memory and dual online learners. Curiosity is aroused when the memorized information cannot deal with the current state; the information gap between the dual learners is formulated as the intrinsic reward for agents, and the state information is then consolidated into the dynamic memory. Compared with previous curiosity methods, DyMeCu can better mimic human curiosity with dynamic memory, and the memory module can be dynamically grown based on a bootstrap paradigm with dual learners. Large-scale empirical experiments are conducted on multiple benchmarks, including DeepMind Control Suite and Atari Suite, and the results demonstrate that DyMeCu outperforms competitive curiosity-based methods with or without extrinsic rewards. We will release the code to enhance reproducibility.
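The interplay of dual learners and a dynamic memory described above can be sketched roughly as follows. This is a toy illustration, not the DyMeCu implementation: the class name `DualLearnerCuriosity`, the linear learners, the squared-difference gap, and the exponential-moving-average consolidation with rate `tau` are all assumptions, and the training step that fits the learners to the memory is omitted.

```python
import numpy as np


class DualLearnerCuriosity:
    """Toy sketch: two linear learners plus a slowly updated memory."""

    def __init__(self, obs_dim, feat_dim, tau=0.99, seed=0):
        rng = np.random.default_rng(seed)
        # Two independently initialized learners (hypothetical linear encoders).
        self.learner_a = rng.normal(size=(obs_dim, feat_dim))
        self.learner_b = rng.normal(size=(obs_dim, feat_dim))
        # Memory starts as the average of the two learners (assumption).
        self.memory = 0.5 * (self.learner_a + self.learner_b)
        self.tau = tau

    def intrinsic_reward(self, obs):
        # Gap between the two learners' outputs, measured here as a
        # squared difference (an illustrative stand-in for the paper's
        # information gap).
        gap = obs @ self.learner_a - obs @ self.learner_b
        return float(np.sum(gap ** 2))

    def consolidate(self):
        # Bootstrap-style update: move the memory toward the learners'
        # average with an exponential moving average (assumption).
        target = 0.5 * (self.learner_a + self.learner_b)
        self.memory = self.tau * self.memory + (1.0 - self.tau) * target
```

In this sketch, a state on which the two learners agree yields zero reward, and calling `consolidate()` after each learner update lets the memory grow gradually rather than jump to the latest parameters.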
