YiYing Li

Dynamic Memory-based Curiosity: A Bootstrap Approach for Exploration

Aug 24, 2022

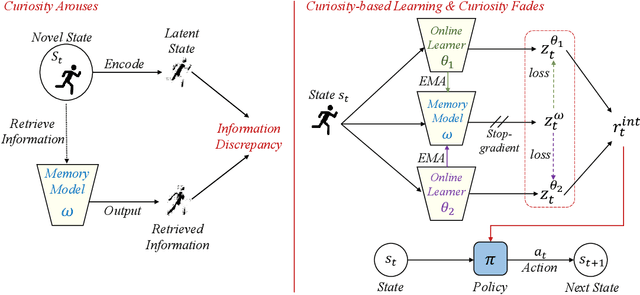

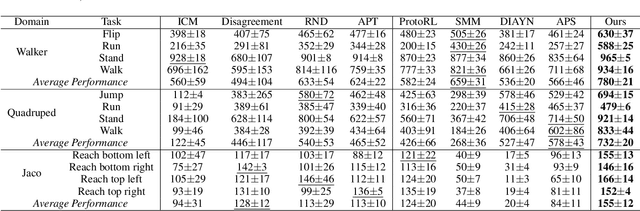

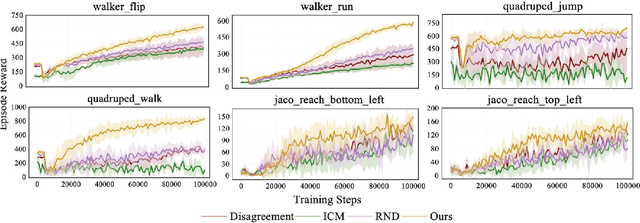

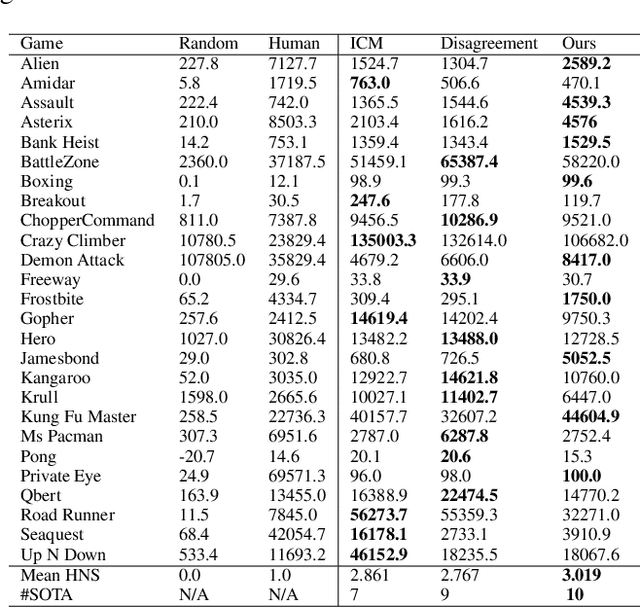

Abstract:The sparsity of extrinsic rewards poses a serious challenge for reinforcement learning (RL). Currently, many efforts have been made on curiosity which can provide a representative intrinsic reward for effective exploration. However, the challenge is still far from being solved. In this paper, we present a novel curiosity for RL, named DyMeCu, which stands for Dynamic Memory-based Curiosity. Inspired by human curiosity and information theory, DyMeCu consists of a dynamic memory and dual online learners. The curiosity arouses if memorized information can not deal with the current state, and the information gap between dual learners can be formulated as the intrinsic reward for agents, and then such state information can be consolidated into the dynamic memory. Compared with previous curiosity methods, DyMeCu can better mimic human curiosity with dynamic memory, and the memory module can be dynamically grown based on a bootstrap paradigm with dual learners. On multiple benchmarks including DeepMind Control Suite and Atari Suite, large-scale empirical experiments are conducted and the results demonstrate that DyMeCu outperforms competitive curiosity-based methods with or without extrinsic rewards. We will release the code to enhance reproducibility.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge