Xiliang Lin

Adaptive Experimentation with Delayed Binary Feedback

Feb 02, 2022

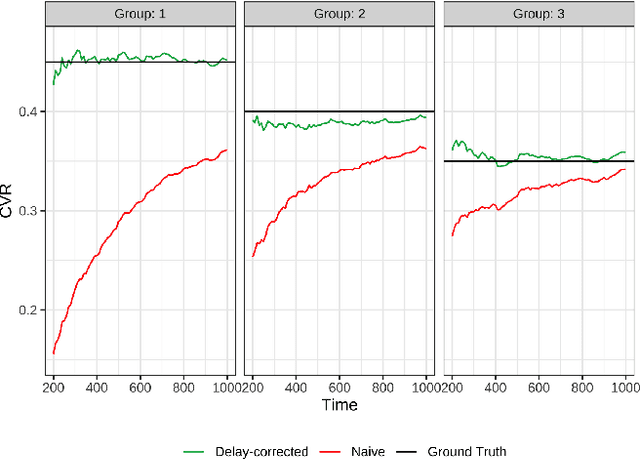

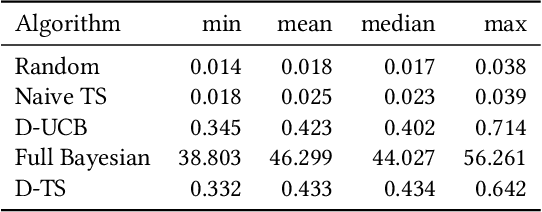

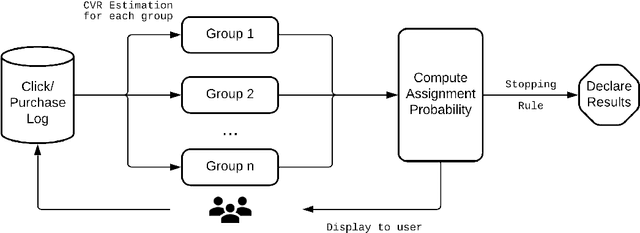

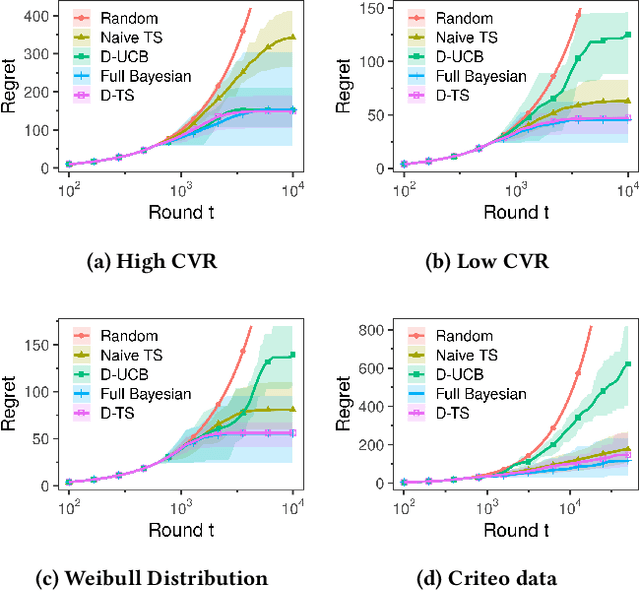

Abstract: Conducting experiments with objectives that take significant delays to materialize (e.g., conversions and add-to-cart events) is challenging. Although classical "split sample testing" remains valid for delayed feedback, the experiment takes longer to complete, which also means spending more resources on worse-performing strategies because of the fixed allocation schedule. Alternatively, adaptive approaches such as "multi-armed bandits" can effectively reduce the cost of experimentation, but these methods generally cannot handle delayed objectives out of the box. This paper presents an adaptive experimentation solution tailored for delayed binary feedback objectives by estimating the real underlying objectives before they materialize and dynamically allocating variants based on the estimates. Experiments show that the proposed method is more efficient for delayed feedback compared to various other approaches and is robust in different settings. In addition, we describe an experimentation product powered by this algorithm. This product is currently deployed in the online experimentation platform of JD.com, a large e-commerce company and a publisher of digital ads.
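To make the idea of estimating an objective before it fully materializes concrete, here is a minimal sketch of a delay-corrected conversion rate. It is not the estimator from the paper: it assumes conversion delays are exponentially distributed with a known rate (`delay_rate`, a hypothetical parameter), and the function name and inputs are illustrative only.

```python
import math

def delay_corrected_rate(elapsed_times, conversions, delay_rate):
    """Estimate the eventual conversion rate from partially observed data.

    elapsed_times: time since exposure for each exposed user.
    conversions:   number of conversions observed so far.
    delay_rate:    assumed (hypothetical) rate of an exponential delay distribution.
    """
    # Each user contributes the probability that a conversion, if it is
    # going to happen, would already have been observed by now.
    exposure = sum(1.0 - math.exp(-delay_rate * t) for t in elapsed_times)
    return conversions / exposure if exposure > 0 else 0.0

# Ten users exposed one time unit ago, two conversions seen so far.
naive = 2 / 10
corrected = delay_corrected_rate([1.0] * 10, conversions=2, delay_rate=1.0)
```

Under this assumption the corrected estimate is larger than the naive ratio, because some conversions from recent exposures have not arrived yet. An adaptive allocation rule could then act on the corrected estimate rather than waiting for every outcome.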

Blending Advertising with Organic Content in E-Commerce: A Virtual Bids Optimization Approach

May 28, 2021

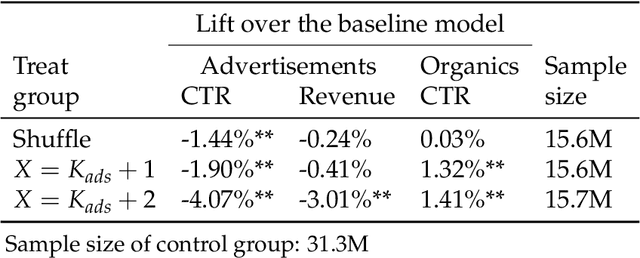

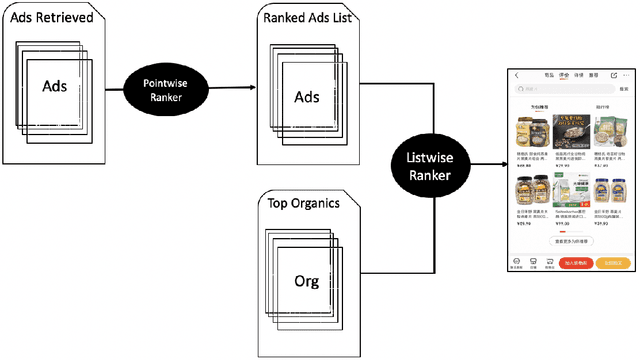

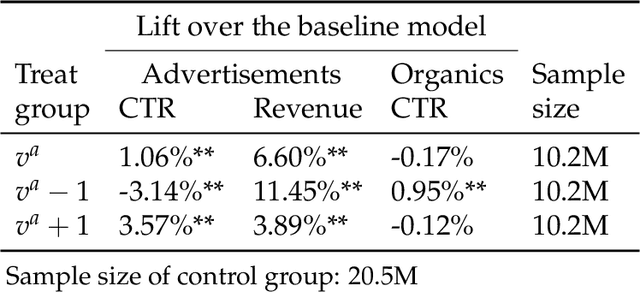

Abstract: In e-commerce platforms, sponsored and non-sponsored content are jointly displayed to users, and both may interactively influence their engagement behavior. The former helps advertisers achieve their marketing goals and provides a stream of ad revenue to the platform. The latter contributes to users' engagement with the platform, which is key to its long-term health. A pressing issue for e-commerce platform design is how to blend advertising with content in a way that respects these interactions and balances these multiple business objectives. This paper describes a system developed for this purpose in the context of blending personalized sponsored content with non-sponsored content on the product detail pages of JD.COM, an e-commerce company. The system has three key features: (1) optimization of multiple competing business objectives through a new virtual bids approach, with the virtual bids expressing the platform's latent, implicit valuation of the multiple objectives; (2) modeling, through a deep learning approach, of users' click behavior as a function of their characteristics, the individual characteristics of each piece of sponsored content, and the influence exerted by other sponsored and non-sponsored content displayed alongside it; (3) consideration of externalities in the allocation of ads, which makes the system directly compatible with a Vickrey-Clarke-Groves (VCG) auction scheme for computing payments in the presence of these externalities. The system is currently deployed and serving all traffic through JD.COM's mobile application. Experiments demonstrating the performance and advantages of the system are presented.

Comparison Lift: Bandit-based Experimentation System for Online Advertising

Sep 16, 2020

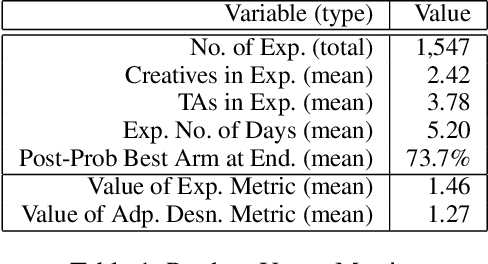

Abstract: Comparison Lift is an experimentation-as-a-service (EaaS) application for testing online advertising audiences and creatives at JD.com. Unlike many other EaaS tools that focus primarily on fixed sample A/B testing, Comparison Lift deploys a custom bandit-based experimentation algorithm. The advantages of the bandit-based approach are two-fold. First, it aligns the randomization induced in the test with the advertiser's goals from testing. Second, by adapting the experimental design to information acquired during the test, it substantially reduces the cost of experimentation to the advertiser. Since its launch in May 2019, Comparison Lift has been utilized in over 1,500 experiments. We estimate that utilization of the product has helped increase click-through rates of participating advertising campaigns by 46% on average. We estimate that the adaptive design in the product has generated 27% more clicks on average during testing compared to a fixed sample A/B design. Both suggest significant value generation and cost savings to advertisers from the product.
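The abstract does not specify the custom algorithm, so the following is only a generic illustration of how a bandit adapts allocation during a test: a textbook Beta-Bernoulli Thompson sampling loop over simulated click-through rates. The function name, the simulated rates, and the seed are all assumptions made for this sketch.

```python
import random

def thompson_sampling(true_rates, rounds, seed=0):
    """Simulate Beta-Bernoulli Thompson sampling; return pulls per arm."""
    rng = random.Random(seed)
    n = len(true_rates)
    successes = [0] * n
    failures = [0] * n
    pulls = [0] * n
    for _ in range(rounds):
        # Draw one plausible click rate per arm from its Beta posterior
        # (uniform Beta(1, 1) prior), then show the arm with the highest draw.
        samples = [rng.betavariate(successes[i] + 1, failures[i] + 1)
                   for i in range(n)]
        arm = max(range(n), key=lambda i: samples[i])
        pulls[arm] += 1
        # Simulated binary feedback (click or no click) for the chosen arm.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return pulls

# Two hypothetical creatives with simulated click rates of 5% and 15%.
pulls = thompson_sampling([0.05, 0.15], rounds=5000)
```

In this simulation most impressions go to the better-performing arm as evidence accumulates, whereas a fixed sample A/B design would keep a 50/50 split throughout. That shift in traffic is the mechanism behind the lower cost of experimentation described above.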

Online Evaluation of Audiences for Targeted Advertising via Bandit Experiments

Jul 18, 2019

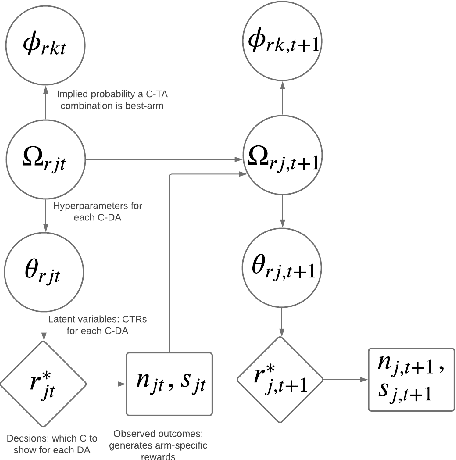

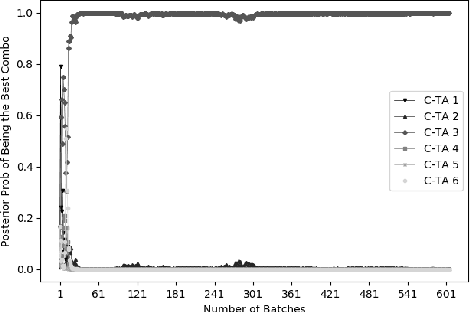

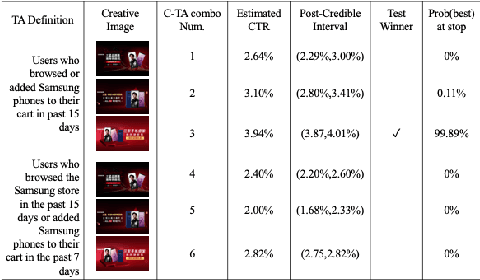

Abstract: Firms implementing digital advertising campaigns face a complex problem in determining the right match between their advertising creatives and target audiences. Typical solutions to the problem have leveraged non-experimental methods, or used "split-testing" strategies that have not explicitly addressed the complexities induced by targeted audiences that can potentially overlap with one another. This paper presents an adaptive algorithm that addresses the problem via online experimentation. The algorithm is set up as a contextual bandit and addresses the overlap issue by partitioning the target audiences into disjoint, non-overlapping sub-populations. It learns an optimal creative display policy in the disjoint space, while assessing in parallel which creative has the best match in the space of possibly overlapping target audiences. Experiments show that the proposed method is more efficient compared to naive "split-testing" or non-adaptive "A/B/n" testing based methods. We also describe a testing product we built that uses the algorithm. The product is currently deployed on the advertising platform of JD.com, an eCommerce company and a publisher of digital ads in China.
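The partitioning step mentioned in the abstract can be illustrated with a small sketch. It assumes audiences are given as sets of user IDs (an assumption of this example, not a detail from the paper) and groups users by the exact combination of audiences they belong to, which yields disjoint sub-populations. The audience names and the function name are hypothetical.

```python
from collections import defaultdict

def disjoint_partition(audiences):
    """Split possibly overlapping audiences into disjoint cells.

    audiences: dict mapping audience name -> set of user IDs.
    Returns a dict mapping a frozenset of audience names (the membership
    signature) to the set of users with exactly that signature.
    """
    membership = defaultdict(set)
    for name, users in audiences.items():
        for user in users:
            membership[user].add(name)
    cells = defaultdict(set)
    for user, names in membership.items():
        cells[frozenset(names)].add(user)
    return dict(cells)

audiences = {
    "sports_fans": {1, 2, 3, 4},
    "new_parents": {3, 4, 5},
}
cells = disjoint_partition(audiences)
```

Here users 3 and 4 fall into a cell of their own because they belong to both audiences, so every user lands in exactly one cell. A policy learned per cell can then be aggregated back to the original, overlapping audiences.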
