Xibing Zuo

S2C: Learning Noise-Resistant Differences for Unsupervised Change Detection in Multimodal Remote Sensing Images

Feb 18, 2025

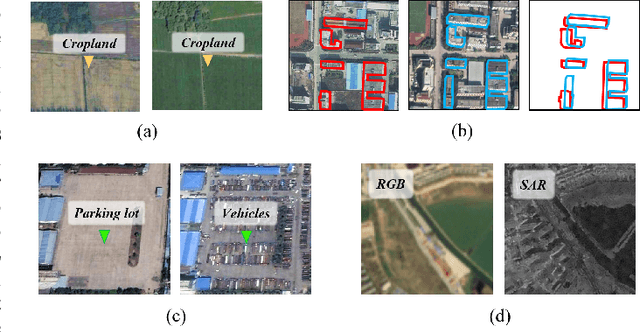

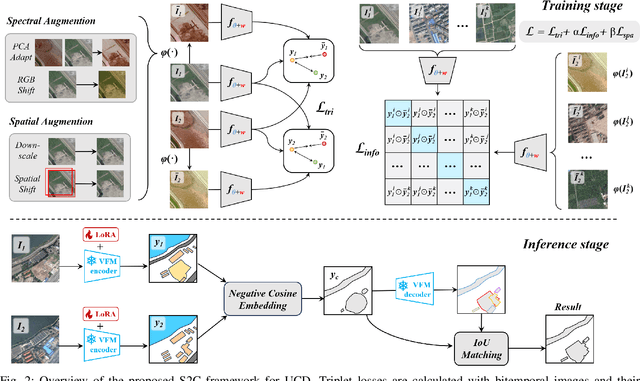

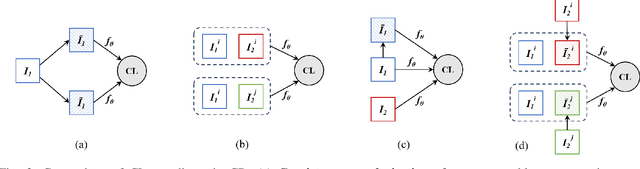

Abstract:Unsupervised Change Detection (UCD) in multimodal Remote Sensing (RS) images remains a difficult challenge due to the inherent spatio-temporal complexity within data, and the heterogeneity arising from different imaging sensors. Inspired by recent advancements in Visual Foundation Models (VFMs) and Contrastive Learning (CL) methodologies, this research aims to develop CL methodologies to translate implicit knowledge in VFM into change representations, thus eliminating the need for explicit supervision. To this end, we introduce a Semantic-to-Change (S2C) learning framework for UCD in both homogeneous and multimodal RS images. Differently from existing CL methodologies that typically focus on learning multi-temporal similarities, we introduce a novel triplet learning strategy that explicitly models temporal differences, which are crucial to the CD task. Furthermore, random spatial and spectral perturbations are introduced during the training to enhance robustness to temporal noise. In addition, a grid sparsity regularization is defined to suppress insignificant changes, and an IoU-matching algorithm is developed to refine the CD results. Experiments on four benchmark CD datasets demonstrate that the proposed S2C learning framework achieves significant improvements in accuracy, surpassing current state-of-the-art by over 31\%, 9\%, 23\%, and 15\%, respectively. It also demonstrates robustness and sample efficiency, suitable for training and adaptation of various Visual Foundation Models (VFMs) or backbone neural networks. The relevant code will be available at: github.com/DingLei14/S2C.

Active Deep Densely Connected Convolutional Network for Hyperspectral Image Classification

Sep 01, 2020

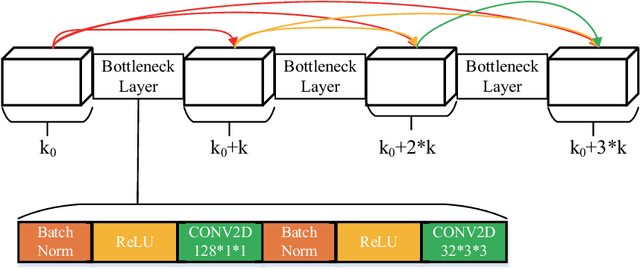

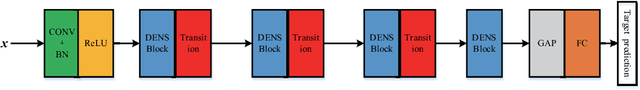

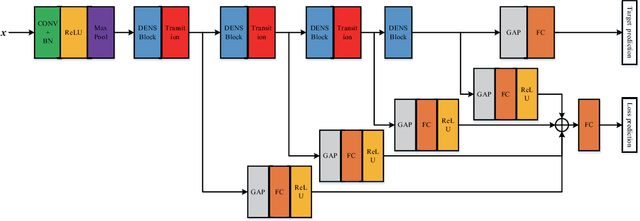

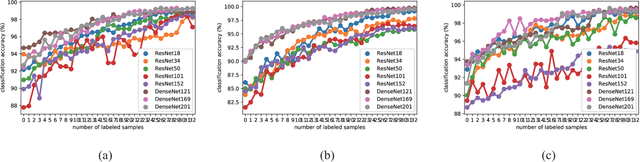

Abstract:Deep learning based methods have seen a massive rise in popularity for hyperspectral image classification over the past few years. However, the success of deep learning is attributed greatly to numerous labeled samples. It is still very challenging to use only a few labeled samples to train deep learning models to reach a high classification accuracy. An active deep-learning framework trained by an end-to-end manner is, therefore, proposed by this paper in order to minimize the hyperspectral image classification costs. First, a deep densely connected convolutional network is considered for hyperspectral image classification. Different from the traditional active learning methods, an additional network is added to the designed deep densely connected convolutional network to predict the loss of input samples. Then, the additional network could be used to suggest unlabeled samples that the deep densely connected convolutional network is more likely to produce a wrong label. Note that the additional network uses the intermediate features of the deep densely connected convolutional network as input. Therefore, the proposed method is an end-to-end framework. Subsequently, a few of the selected samples are labelled manually and added to the training samples. The deep densely connected convolutional network is therefore trained using the new training set. Finally, the steps above are repeated to train the whole framework iteratively. Extensive experiments illustrates that the method proposed could reach a high accuracy in classification after selecting just a few samples.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge