Xiaona Lin

More Reliable AI Solution: Breast Ultrasound Diagnosis Using Multi-AI Combination

Jan 07, 2021

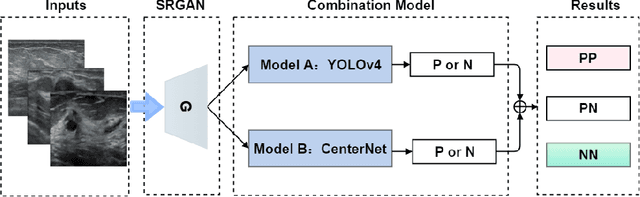

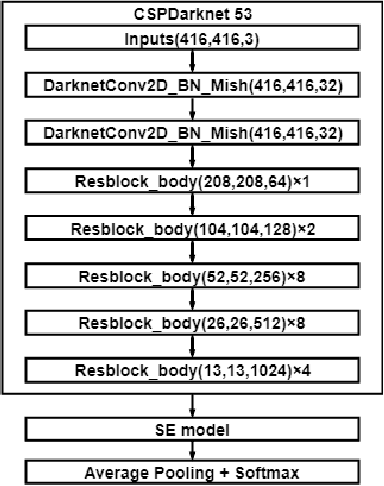

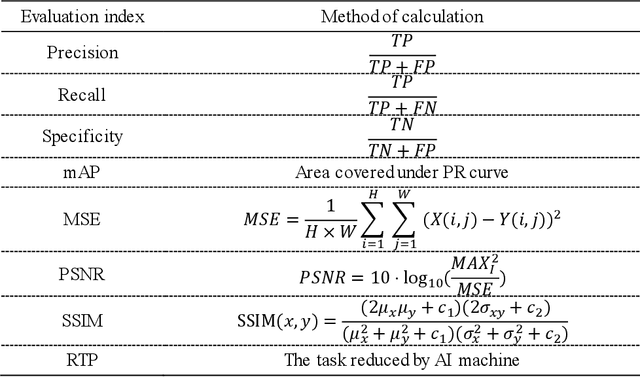

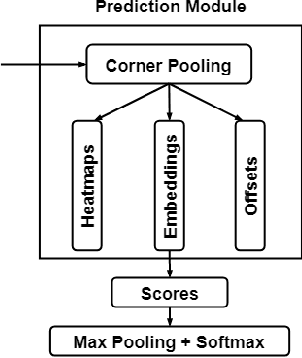

Abstract: Objective: Breast cancer screening is of great significance in contemporary women's health prevention. Existing machines with embedded AI systems do not reach the accuracy that clinicians hope for, and making intelligent systems more reliable is a common problem. Methods: 1) Ultrasound image super-resolution: the SRGAN super-resolution network reduces the blurriness of ultrasound images caused by the device itself and improves the accuracy and generalization of the detection model. 2) In response to the needs of medical images, we have improved the YOLOv4 and CenterNet models. 3) Multi-AI model: based on the respective advantages of different AI models, we employ two AI models to cross-validate clinical results, accepting a result only when both models agree and refusing it otherwise. Results: 1) With the help of the super-resolution model, the mAP scores of the YOLOv4 and CenterNet models increased by 9.6% and 13.8%, respectively. 2) Two methods for transforming a target detection model into a classification model are proposed, and the output is unified in a specified format so that the multi-AI model can call it easily. 3) In the classification evaluation experiment, the multi-AI model, which combines the YOLOv4 model (sensitivity 57.73%, specificity 90.08%) and the CenterNet model (sensitivity 62.64%, specificity 92.54%), refuses to make a judgment on 23.55% of the input data. On the remaining data, performance improves substantially, to 95.91% sensitivity and 96.02% specificity. Conclusion: Our work makes the AI model more reliable in medical image diagnosis. Significance: 1) The proposed method makes the target detection model more suitable for diagnosing breast ultrasound images. 2) It provides a new idea for artificial intelligence in medical diagnosis, making it more convenient to bring target detection models from other fields into medical lesion screening.
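The accept-or-refuse rule described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names `consensus` and `evaluate`, the class labels `"malignant"` and `"benign"`, and the toy predictions are all assumptions made for illustration. The sketch accepts a case only when the two models output the same label, and computes sensitivity and specificity on the accepted cases alongside the rejection rate.

```python
from typing import Dict, Optional, Sequence


def consensus(label_a: str, label_b: str) -> Optional[str]:
    """Return the shared label if both models agree, otherwise None (refused)."""
    return label_a if label_a == label_b else None


def evaluate(
    preds_a: Sequence[str],
    preds_b: Sequence[str],
    truths: Sequence[str],
    positive: str = "malignant",  # hypothetical label name, not from the paper
) -> Dict[str, float]:
    """Score the agreed-upon cases and report the fraction of refused cases."""
    tp = fn = tn = fp = rejected = 0
    for a, b, truth in zip(preds_a, preds_b, truths):
        label = consensus(a, b)
        if label is None:
            rejected += 1  # the two models disagree: no judgment is made
        elif truth == positive:
            if label == positive:
                tp += 1
            else:
                fn += 1
        else:
            if label == positive:
                fp += 1
            else:
                tn += 1
    total = tp + fn + tn + fp + rejected
    return {
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
        "rejection_rate": rejected / total if total else float("nan"),
    }


# Toy data (invented): the third case is refused because the models disagree.
model_a = ["malignant", "benign", "malignant", "benign"]
model_b = ["malignant", "benign", "benign", "benign"]
ground_truth = ["malignant", "benign", "malignant", "malignant"]
metrics = evaluate(model_a, model_b, ground_truth)
```

Because refused cases are excluded from the sensitivity and specificity counts, those figures describe only the cases on which both models agree, which is why the rejection rate has to be reported alongside them.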

Semi-supervised Breast Lesion Detection in Ultrasound Video Based on Temporal Coherence

Jul 16, 2019

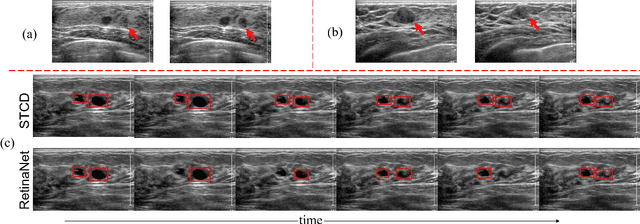

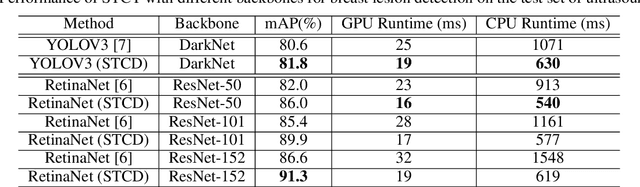

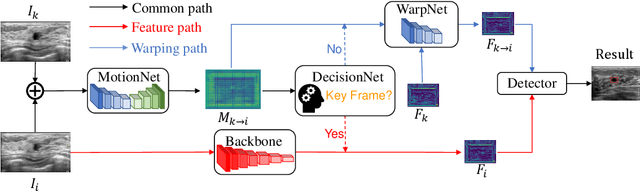

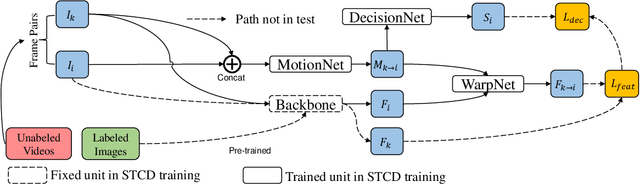

Abstract: Breast lesion detection in ultrasound video is critical for computer-aided diagnosis. However, detecting lesions in video is quite challenging due to blurred lesion boundaries, high similarity to soft tissue, and a lack of video annotations. In this paper, we propose a semi-supervised breast lesion detection method based on temporal coherence that detects lesions more accurately. We aggregate features extracted from historical key frames using an adaptive key-frame scheduling strategy. Our proposed method performs detection on unlabeled videos by leveraging supervision from a different set of labeled images. In addition, a new WarpNet is designed to replace both the traditional spatial warping and feature aggregation operations, leading to a substantial increase in speed. Experiments on 1,060 2D ultrasound sequences demonstrate that our proposed method achieves state-of-the-art video detection results, with 91.3% mean average precision at 19 ms per frame on GPU, compared with 86.6% and 32 ms for a RetinaNet-based detection method.
