Xiaojun Hu

VideoMemory: Toward Consistent Video Generation via Memory Integration

Jan 07, 2026

Abstract: Maintaining consistent characters, props, and environments across multiple shots is a central challenge in narrative video generation. Existing models can produce high-quality short clips but often fail to preserve entity identity and appearance when scenes change or when entities reappear after long temporal gaps. We present VideoMemory, an entity-centric framework that integrates narrative planning with visual generation through a Dynamic Memory Bank. Given a structured script, a multi-agent system decomposes the narrative into shots, retrieves entity representations from memory, and synthesizes keyframes and videos conditioned on these retrieved states. The Dynamic Memory Bank stores explicit visual and semantic descriptors for characters, props, and backgrounds, and is updated after each shot to reflect story-driven changes while preserving identity. This retrieval-update mechanism enables consistent portrayal of entities across distant shots and supports coherent long-form generation. To evaluate this setting, we construct a 54-case multi-shot consistency benchmark covering character-, prop-, and background-persistent scenarios. Extensive experiments show that VideoMemory achieves strong entity-level coherence and high perceptual quality across diverse narrative sequences.
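The retrieval-update loop described in the abstract can be illustrated with a toy, text-only sketch. This is not the authors' implementation: the names `EntityState`, `MemoryBank`, and `build_prompt` are hypothetical, and plain strings and dictionaries stand in for the visual and semantic descriptors the paper stores. It only shows the idea of keeping identity fixed while letting appearance change from shot to shot.

```python
from dataclasses import dataclass, field


@dataclass
class EntityState:
    """Hypothetical record for one character, prop, or background."""
    identity: str                                   # fixed description, never rewritten
    appearance: dict = field(default_factory=dict)  # mutable, story-driven attributes
    last_shot: int = -1                             # index of the last shot that touched it


class MemoryBank:
    """Toy stand-in for a dynamic memory bank (assumed design, not the paper's)."""

    def __init__(self):
        self._entities = {}

    def register(self, name, identity, **appearance):
        # Store a new entity with its fixed identity and initial appearance.
        self._entities[name] = EntityState(identity, dict(appearance))

    def retrieve(self, names):
        # Return the stored state for every requested entity that is known.
        return {n: self._entities[n] for n in names if n in self._entities}

    def update(self, name, shot_index, **changes):
        # Apply story-driven changes; the identity field is left untouched.
        state = self._entities[name]
        state.appearance.update(changes)
        state.last_shot = shot_index


def build_prompt(shot_text, states):
    """Condition a shot description on the retrieved entity states."""
    parts = [shot_text]
    for name, s in sorted(states.items()):
        attrs = ", ".join(f"{k}: {v}" for k, v in sorted(s.appearance.items()))
        parts.append(f"[{name}] {s.identity}; {attrs}")
    return "\n".join(parts)
```

A shot loop would call `retrieve` before generation and `update` afterward, so an entity that reappears many shots later is still described by its latest stored state.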

Dual-Stream Pyramid Registration Network

Sep 26, 2019

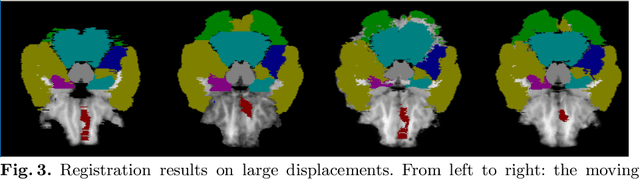

Abstract: We propose a Dual-Stream Pyramid Registration Network (referred to as Dual-PRNet) for unsupervised 3D medical image registration. Unlike recent CNN-based registration approaches, such as VoxelMorph, which use a single-stream encoder-decoder network to compute a registration field from a pair of 3D volumes, we design a two-stream architecture able to compute multi-scale registration fields from convolutional feature pyramids. Our contributions are two-fold: (i) we design a two-stream 3D encoder-decoder network which computes two convolutional feature pyramids separately for a pair of input volumes, resulting in strong deep representations that are meaningful for deformation estimation; (ii) we propose a pyramid registration module able to predict multi-scale registration fields directly from the decoding feature pyramids. This allows the model to refine the registration fields gradually in a coarse-to-fine manner via sequential warping, and enables it to handle significant deformations between two volumes, such as large displacements in the spatial domain or slice space. The proposed Dual-PRNet is evaluated on two standard benchmarks for brain MRI registration, where it outperforms state-of-the-art approaches by a large margin, e.g., improving over the recent VoxelMorph [2] from 0.683 to 0.778 on LPBA40, and from 0.511 to 0.631 on Mindboggle101, in terms of average Dice score.
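For readers unfamiliar with the metric reported above, the Dice score between two segmentations for one label is 2|A ∩ B| / (|A| + |B|). The following is a minimal pure-Python sketch over flattened label sequences. The function names are illustrative, and returning 1.0 when a label is absent from both inputs is an assumed convention, not something the abstract specifies.

```python
def dice_score(pred, target, label):
    """Dice overlap for one label between two equally long label sequences."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    # Voxels where both segmentations assign the label.
    intersection = sum(1 for p, t in zip(pred, target) if p == label and t == label)
    # Total number of voxels carrying the label in each segmentation.
    size_sum = sum(1 for p in pred if p == label) + sum(1 for t in target if t == label)
    if size_sum == 0:
        return 1.0  # assumed convention: label absent from both counts as perfect overlap
    return 2.0 * intersection / size_sum


def average_dice(pred, target, labels):
    """Mean Dice score over the given anatomical labels."""
    scores = [dice_score(pred, target, lab) for lab in labels]
    return sum(scores) / len(scores)
```

In a registration setting, `pred` would be the moving volume's label map after warping and `target` the fixed volume's label map, both flattened to one dimension.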
