Xiaochen Guo

An In-Memory Analog Computing Co-Processor for Energy-Efficient CNN Inference on Mobile Devices

May 24, 2021

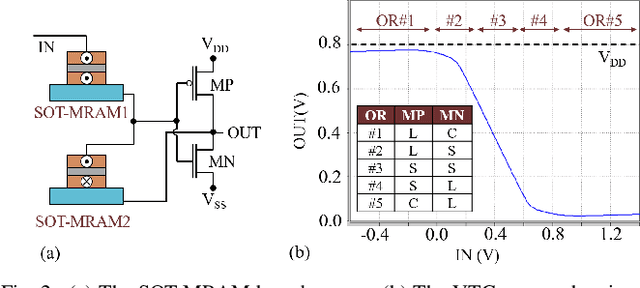

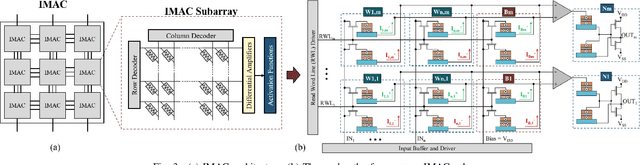

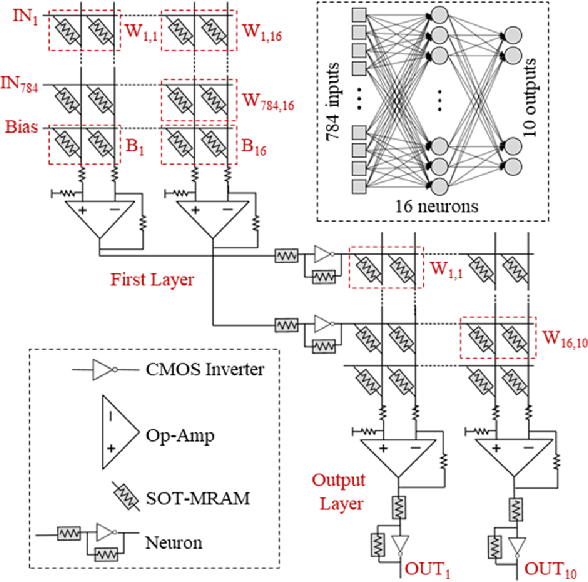

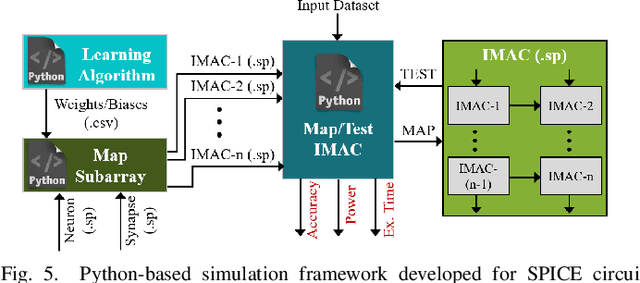

Abstract: In this paper, we develop an in-memory analog computing (IMAC) architecture that realizes both synaptic behavior and activation functions within non-volatile memory arrays. Spin-orbit torque magnetoresistive random-access memory (SOT-MRAM) devices are leveraged to realize sigmoidal neurons as well as binarized synapses. First, it is shown that the proposed IMAC architecture can be used to realize a multilayer perceptron (MLP) classifier that achieves orders-of-magnitude performance improvement over previous mixed-signal and digital implementations. Next, a heterogeneous mixed-signal and mixed-precision CPU-IMAC architecture is proposed for convolutional neural network (CNN) inference on mobile processors, in which IMAC is designed as a co-processor that realizes the fully-connected (FC) layers, whereas the convolution layers are executed on the CPU. Architecture-level analytical models are developed to evaluate the performance and energy consumption of the CPU-IMAC architecture. Simulation results show energy savings of 6.5% and 10% for CPU-IMAC based realizations of the LeNet and VGG CNN models on the MNIST and CIFAR-10 pattern recognition tasks, respectively.
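As a rough software illustration of the kind of layer this abstract describes (binarized synapses feeding sigmoidal neurons), the following sketch computes one fully-connected layer in plain Python. It is not the paper's circuit or simulation model: the sign-based binarization rule, the function names `binarize`, `sigmoid`, and `fc_layer`, and the use of a bias term are all assumptions made for illustration.

```python
import math


def binarize(weights):
    """Map real-valued weights to +1/-1 by sign (assumption: zero maps to +1)."""
    return [[1 if w >= 0 else -1 for w in row] for row in weights]


def sigmoid(x):
    """Standard logistic function, standing in for the sigmoidal neuron."""
    return 1.0 / (1.0 + math.exp(-x))


def fc_layer(inputs, binary_weights, biases):
    """One fully-connected layer: binarized synapses followed by sigmoid neurons."""
    outputs = []
    for row, b in zip(binary_weights, biases):
        # Weighted sum over the inputs; with +1/-1 weights this reduces to
        # adding or subtracting each input.
        pre_activation = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(sigmoid(pre_activation))
    return outputs
```

For example, `fc_layer([1.0, 1.0], binarize([[0.3, -0.2]]), [0.0])` sums to a pre-activation of zero, so the single neuron outputs the sigmoid midpoint.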

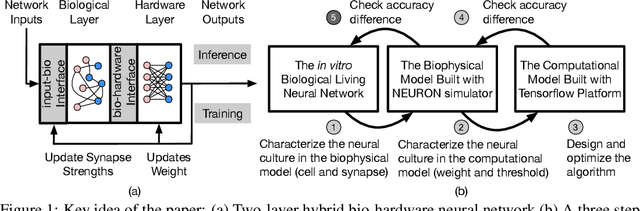

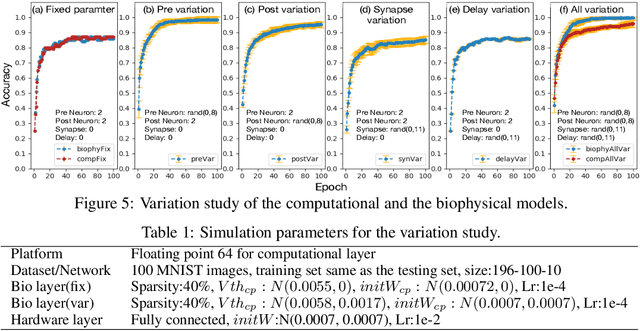

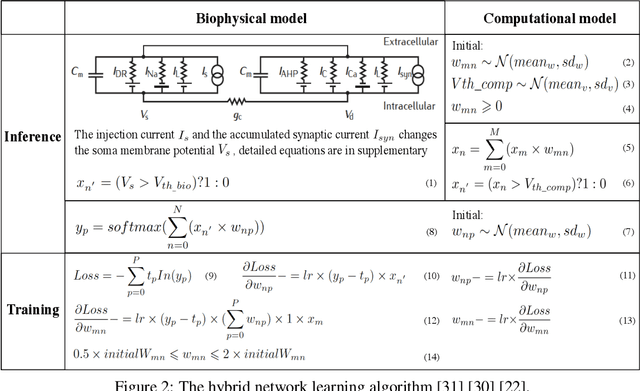

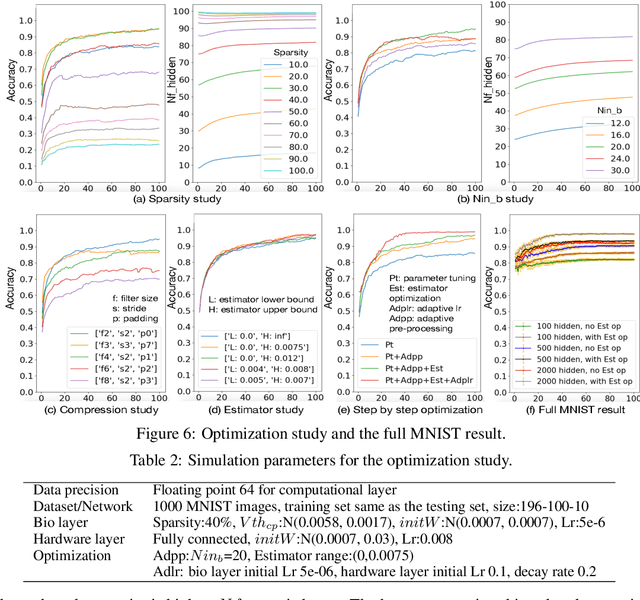

Inference with Hybrid Bio-hardware Neural Networks

May 28, 2019

Abstract: To understand the learning process in brains, biologically plausible algorithms have been explored by modeling detailed neuron properties and dynamics. On the other hand, simplified multi-layer models of neural networks have shown great success on computational tasks such as image classification and speech recognition. However, the computational models that achieve good accuracy for these learning applications are very different from the bio-plausible models. This paper studies whether a bio-plausible model of an in vitro living neural network can be used to perform machine learning tasks and achieve good inference accuracy. A novel two-layer bio-hardware hybrid neural network is proposed. The biological layer faithfully models the variations of synapses, neurons, and network sparsity found in in vitro living neural networks. The hardware layer is a computational fully-connected layer whose parameters are tuned to optimize accuracy. Several techniques are proposed to improve the inference accuracy of the hybrid neural network. For instance, an adaptive pre-processing technique helps the network achieve good learning accuracy across different levels of living neural network sparsity. The proposed hybrid neural network, with realistic neuron parameters and variations, achieves a 98.3% testing accuracy for the handwritten digit recognition task on the full MNIST dataset.
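The two-layer structure described in this abstract (a fixed, sparse, variable first layer followed by a tunable fully-connected layer) can be sketched loosely as below. This is only a structural illustration under stated assumptions, not the paper's biological model: the Gaussian weight variation, the rectified hidden response, and the names `sparse_fixed_layer` and `forward` are hypothetical stand-ins.

```python
import random


def sparse_fixed_layer(n_in, n_out, sparsity, seed=0):
    """Build fixed first-layer weights with a fraction `sparsity` of
    connections removed (set to 0). Surviving weights get random variation
    (assumption: Gaussian around 1.0)."""
    rng = random.Random(seed)
    return [
        [0.0 if rng.random() < sparsity else rng.gauss(1.0, 0.2)
         for _ in range(n_in)]
        for _ in range(n_out)
    ]


def forward(x, fixed_w, trainable_w):
    """Pass the input through the fixed sparse layer, then the tunable layer."""
    # Assumption: a rectified response stands in for the neuron model.
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in fixed_w]
    # Only `trainable_w` would be adjusted during training in this sketch.
    return [sum(w * h for w, h in zip(row, hidden)) for row in trainable_w]
```

In this sketch only the second weight matrix is meant to be trained, mirroring the abstract's split between a layer that models the living network and a layer that is optimized for accuracy.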

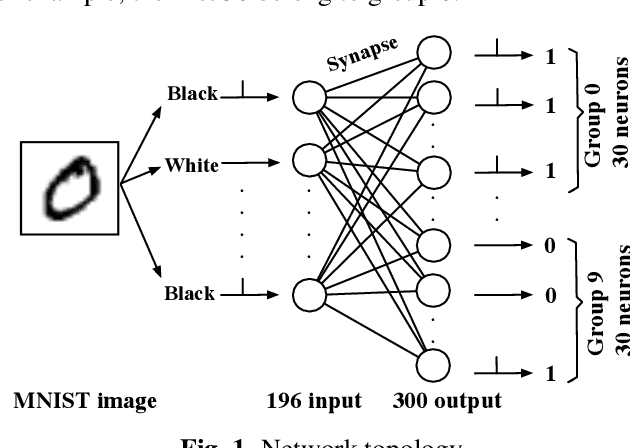

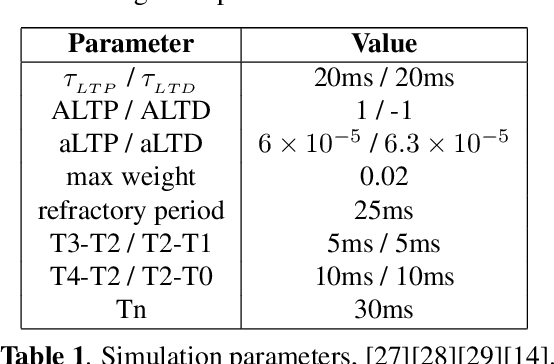

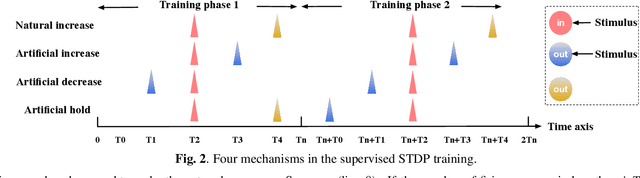

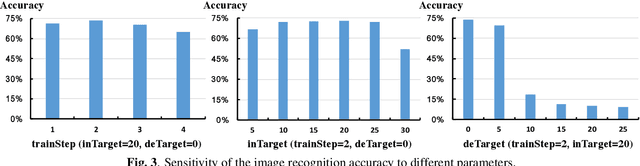

A Supervised STDP-based Training Algorithm for Living Neural Networks

Mar 21, 2018

Abstract: Neural networks have shown great potential in many applications such as speech recognition, drug discovery, image classification, and object detection. Neural network models are inspired by biological neural networks, but they are optimized to perform machine learning tasks on digital computers. The proposed work explores the possibility of using living neural networks in vitro as basic computational elements for machine learning applications. A new supervised STDP-based learning algorithm is proposed, which takes neuron engineering constraints into account. An accuracy of 74.7% is achieved on the MNIST benchmark for handwritten digit recognition.
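For readers unfamiliar with spike-timing-dependent plasticity (STDP), the sketch below shows the common textbook pair-based rule, in which the weight change depends on the time difference between a presynaptic and a postsynaptic spike. It is not the supervised algorithm proposed in the paper, whose details are not given in the abstract; the function name `stdp_delta_w` and the parameter values `a_plus`, `a_minus`, and `tau` are illustrative assumptions.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Textbook pair-based STDP weight change for one pre/post spike pair.

    Times and `tau` share one unit (for example milliseconds). The amplitudes
    and time constant are placeholder values, not taken from the paper.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: potentiation, decaying with the gap.
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        # Post fires before pre: depression, decaying with the gap.
        return -a_minus * math.exp(dt / tau)
    return 0.0
```

A supervised variant would typically control when the postsynaptic neuron spikes so that the desired synapses are strengthened, but how the paper does this is not stated here.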
